AdaMatch: A Unified Approach to Semi-Supervised Learning and Domain Adaptation

Jun 08, 2021 · David Berthelot, Rebecca Roelofs, Kihyuk Sohn, Nicholas Carlini, Alex Kurakin

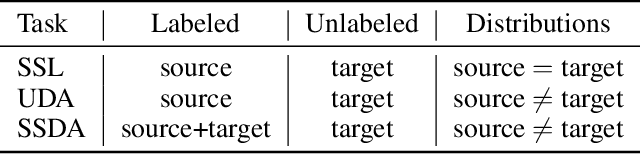

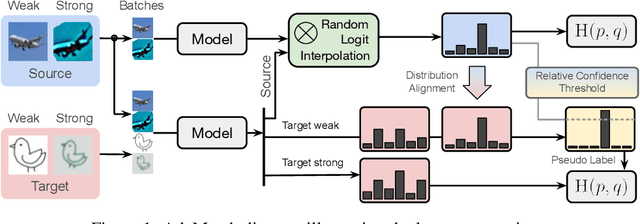

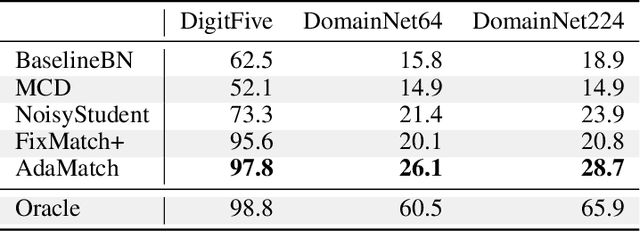

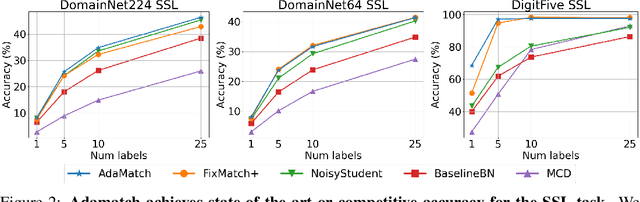

We extend semi-supervised learning to the problem of domain adaptation to learn significantly higher-accuracy models that train on one data distribution and test on a different one. With the goal of generality, we introduce AdaMatch, a method that unifies the tasks of unsupervised domain adaptation (UDA), semi-supervised learning (SSL), and semi-supervised domain adaptation (SSDA). In an extensive experimental study, we compare its behavior with respective state-of-the-art techniques from SSL, SSDA, and UDA on vision classification tasks. We find AdaMatch either matches or significantly exceeds the state-of-the-art in each case using the same hyper-parameters regardless of the dataset or task. For example, AdaMatch nearly doubles the accuracy compared to that of the prior state-of-the-art on the UDA task for DomainNet and even exceeds the accuracy of the prior state-of-the-art obtained with pre-training by 6.4% when AdaMatch is trained completely from scratch. Furthermore, by providing AdaMatch with just one labeled example per class from the target domain (i.e., the SSDA setting), we increase the target accuracy by an additional 6.1%, and with 5 labeled examples, by 13.6%.

Handcrafted Backdoors in Deep Neural Networks

Jun 08, 2021 · Sanghyun Hong, Nicholas Carlini, Alexey Kurakin

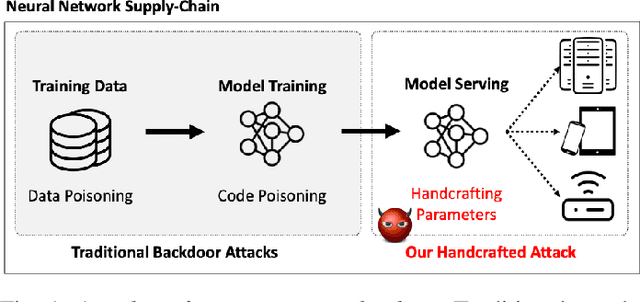

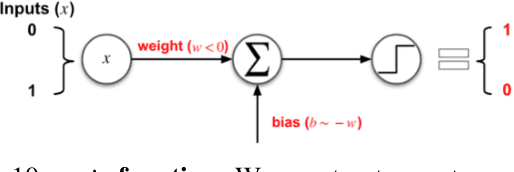

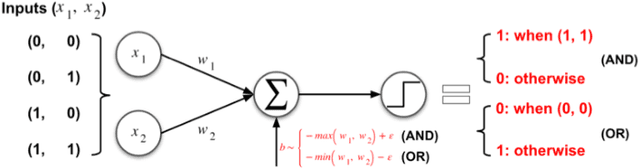

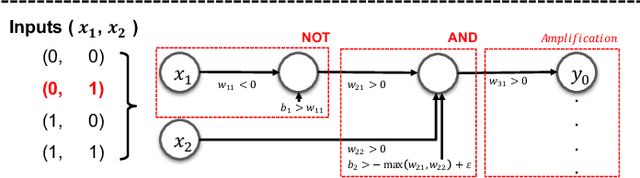

Deep neural networks (DNNs), while accurate, are expensive to train. Many practitioners, therefore, outsource the training process to third parties or use pre-trained DNNs. This practice makes DNNs vulnerable to backdoor attacks: the third party who trains the model may act maliciously to inject hidden behaviors into the otherwise accurate model. Until now, the mechanism to inject backdoors has been limited to poisoning. We argue that such a supply-chain attacker has more attack techniques available. To study this hypothesis, we introduce a handcrafted attack that directly manipulates the parameters of a pre-trained model to inject backdoors. Our handcrafted attacker has more degrees of freedom in manipulating model parameters than poisoning. This makes it difficult for a defender to identify or remove the manipulations with straightforward methods, such as statistical analysis, adding random noise to model parameters, or clipping their values within a certain range. Further, our attacker can combine the handcrafting process with additional techniques, e.g., jointly optimizing a trigger pattern, to inject backdoors into complex networks effectively (the meet-in-the-middle attack). In evaluations, our handcrafted backdoors remain effective across four datasets and four network architectures with a success rate above 96%. Our backdoored models are resilient to parameter-level backdoor removal techniques and can evade existing defenses by slightly changing the backdoor attack configurations. Moreover, we demonstrate the feasibility of suppressing unwanted behaviors otherwise caused by poisoning. Our results suggest that further research is needed for understanding the complete space of supply-chain backdoor attacks.

Poisoning the Unlabeled Dataset of Semi-Supervised Learning

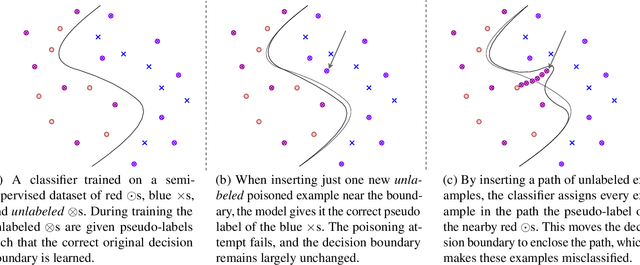

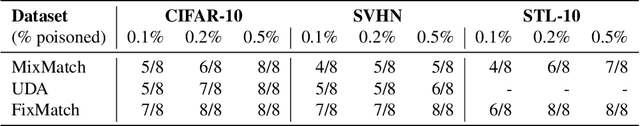

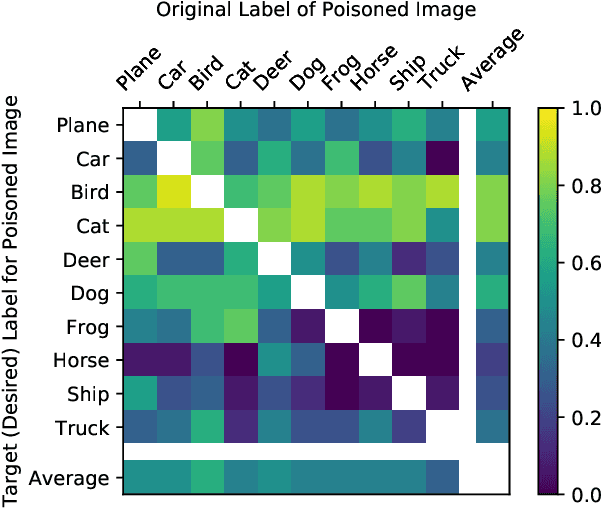

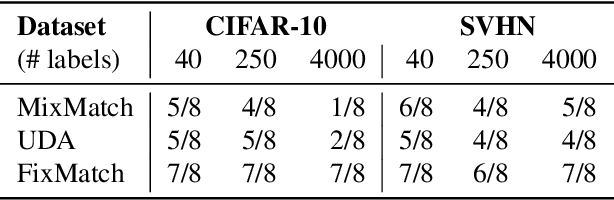

May 04, 2021 · Nicholas Carlini

Semi-supervised machine learning models learn from a (small) set of labeled training examples, and a (large) set of unlabeled training examples. State-of-the-art models can reach within a few percentage points of fully-supervised training, while requiring 100x less labeled data. We study a new class of vulnerabilities: poisoning attacks that modify the unlabeled dataset. In order to be useful, unlabeled datasets are given strictly less review than labeled datasets, and adversaries can therefore poison them easily. By inserting maliciously-crafted unlabeled examples totaling just 0.1% of the dataset size, we can manipulate a model trained on this poisoned dataset to misclassify arbitrary examples at test time (as any desired label). Our attacks are highly effective across datasets and semi-supervised learning methods. We find that more accurate methods (thus more likely to be used) are significantly more vulnerable to poisoning attacks, and as such better training methods are unlikely to prevent this attack. To counter this we explore the space of defenses, and propose two methods that mitigate our attack.

Adversary Instantiation: Lower Bounds for Differentially Private Machine Learning

Jan 11, 2021 · Milad Nasr, Shuang Song, Abhradeep Thakurta, Nicolas Papernot, Nicholas Carlini

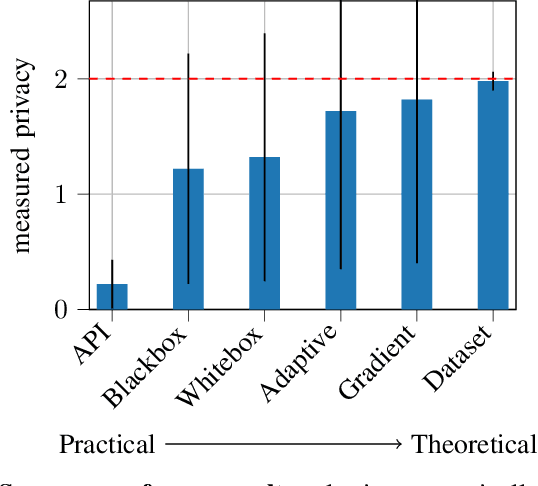

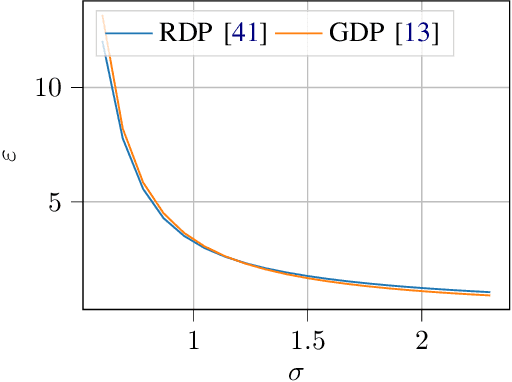

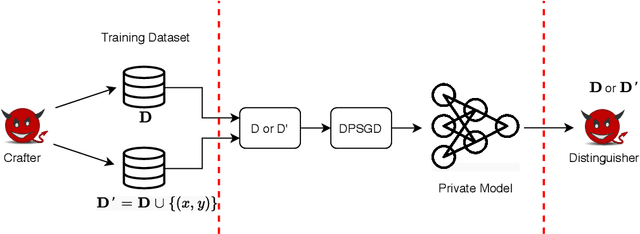

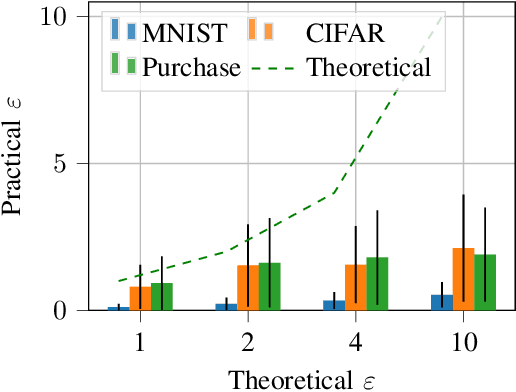

Differentially private (DP) machine learning allows us to train models on private data while limiting data leakage. DP formalizes this data leakage through a cryptographic game, where an adversary must predict if a model was trained on a dataset D, or a dataset D' that differs in just one example. If observing the training algorithm does not meaningfully increase the adversary's odds of successfully guessing which dataset the model was trained on, then the algorithm is said to be differentially private. Hence, the purpose of privacy analysis is to upper bound the probability that any adversary could successfully guess which dataset the model was trained on. In our paper, we instantiate this hypothetical adversary in order to establish lower bounds on the probability that this distinguishing game can be won. We use this adversary to evaluate the importance of the adversary capabilities allowed in the privacy analysis of DP training algorithms. For DP-SGD, the most common method for training neural networks with differential privacy, our lower bounds are tight and match the theoretical upper bound. This implies that in order to prove better upper bounds, it will be necessary to make use of additional assumptions. Fortunately, we find that our attacks are significantly weaker when additional (realistic) restrictions are put in place on the adversary's capabilities. Thus, in the practical setting common to many real-world deployments, there is a gap between our lower bounds and the upper bounds provided by the analysis: differential privacy is conservative and adversaries may not be able to leak as much information as suggested by the theoretical bound.
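To make the link between the distinguishing game and a lower bound concrete, the sketch below converts an adversary's error rates into an empirical lower bound on epsilon. It relies on the standard hypothesis-testing view of (epsilon, delta)-DP, under which any adversary's false positive rate (FPR) and false negative rate (FNR) satisfy FPR + e^epsilon * FNR >= 1 - delta and FNR + e^epsilon * FPR >= 1 - delta. The function name and the use of raw point estimates are assumptions made for illustration; this is not necessarily the procedure used in the paper, and a rigorous evaluation would replace the observed rates with statistical confidence bounds.

```python
import math


def empirical_epsilon_lower_bound(fpr: float, fnr: float, delta: float = 0.0) -> float:
    """Lower-bound epsilon from an adversary's error rates in the D vs. D' game.

    Hypothetical helper: fpr and fnr are the adversary's observed false
    positive and false negative rates, treated here as exact values.
    """
    candidates = []
    # Apply both inequalities: a + e^eps * b >= 1 - delta, so
    # eps >= ln((1 - delta - a) / b), with (a, b) = (fpr, fnr) and (fnr, fpr).
    for a, b in ((fpr, fnr), (fnr, fpr)):
        numerator = 1.0 - delta - a
        if numerator <= 0.0:
            continue  # this inequality gives no information
        if b == 0.0:
            return math.inf  # a zero error rate is incompatible with any finite epsilon
        candidates.append(math.log(numerator / b))
    # Epsilon is non-negative, so clamp uninformative (negative) bounds to 0.
    return max(0.0, max(candidates, default=0.0))


# An adversary that guesses at random learns nothing: the bound is 0.
print(empirical_epsilon_lower_bound(0.5, 0.5))
# An adversary that is wrong 10% of the time in each direction implies eps >= ln(9).
print(empirical_epsilon_lower_bound(0.1, 0.1))
```

If the bound computed this way approaches the epsilon guaranteed by the analysis, the analysis is tight for that adversary; a large gap indicates that the adversary's capabilities, not the analysis, are the limiting factor.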

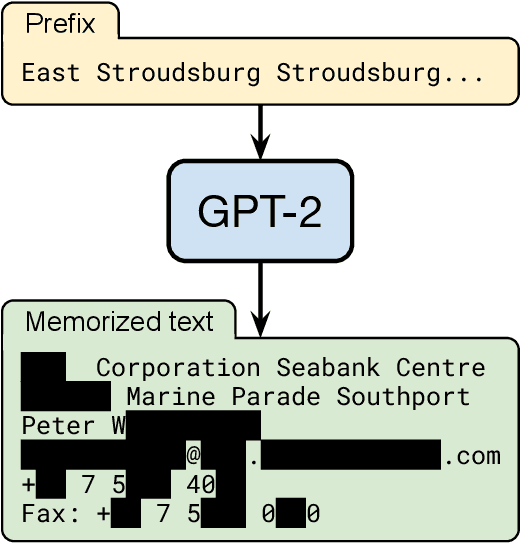

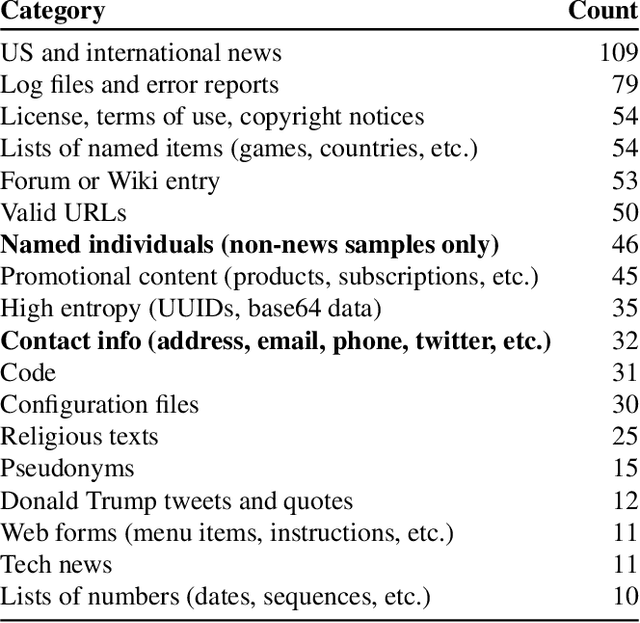

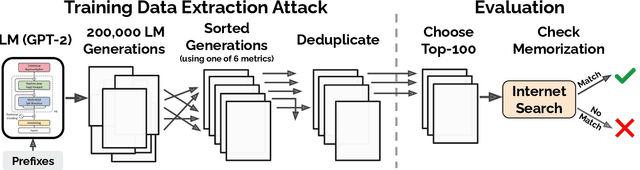

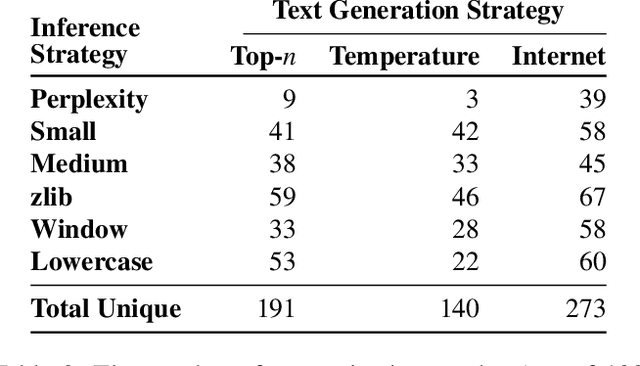

Extracting Training Data from Large Language Models

Dec 14, 2020 · Nicholas Carlini, Florian Tramer, Eric Wallace, Matthew Jagielski, Ariel Herbert-Voss, Katherine Lee, Adam Roberts, Tom Brown, Dawn Song, Ulfar Erlingsson, Alina Oprea, Colin Raffel

It has become common to publish large (billion parameter) language models that have been trained on private datasets. This paper demonstrates that in such settings, an adversary can perform a training data extraction attack to recover individual training examples by querying the language model. We demonstrate our attack on GPT-2, a language model trained on scrapes of the public Internet, and are able to extract hundreds of verbatim text sequences from the model's training data. These extracted examples include (public) personally identifiable information (names, phone numbers, and email addresses), IRC conversations, code, and 128-bit UUIDs. Our attack is possible even though each of the above sequences is included in just one document in the training data. We comprehensively evaluate our extraction attack to understand the factors that contribute to its success. For example, we find that larger models are more vulnerable than smaller models. We conclude by drawing lessons and discussing possible safeguards for training large language models.

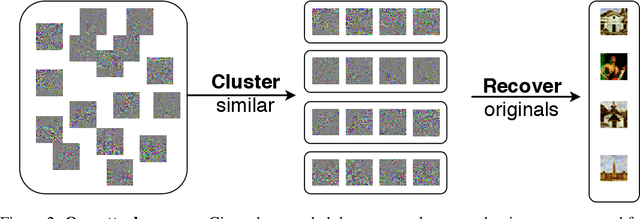

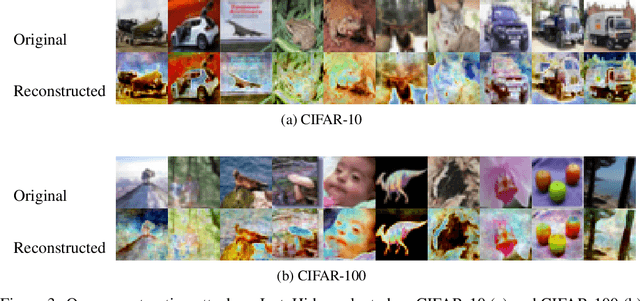

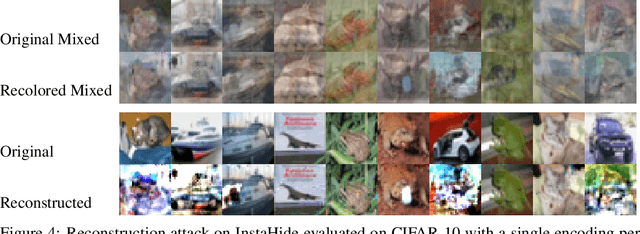

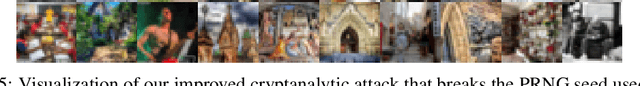

An Attack on InstaHide: Is Private Learning Possible with Instance Encoding?

Nov 10, 2020 · Nicholas Carlini, Samuel Deng, Sanjam Garg, Somesh Jha, Saeed Mahloujifar, Mohammad Mahmoody, Shuang Song, Abhradeep Thakurta, Florian Tramer

A learning algorithm is private if the produced model does not reveal (too much) about its training set. InstaHide [Huang, Song, Li, Arora, ICML'20] is a recent proposal that claims to preserve privacy by an encoding mechanism that modifies the inputs before they are processed by the normal learner. We present a reconstruction attack on InstaHide that is able to use the encoded images to recover visually recognizable versions of the original images. Our attack is effective and efficient, and empirically breaks InstaHide on CIFAR-10, CIFAR-100, and the recently released InstaHide Challenge. We further formalize various privacy notions of learning through instance encoding and investigate the possibility of achieving these notions. We prove barriers against achieving (indistinguishability-based notions of) privacy through any learning protocol that uses instance encoding.

Erratum Concerning the Obfuscated Gradients Attack on Stochastic Activation Pruning

Sep 30, 2020 · Guneet S. Dhillon, Nicholas Carlini

Stochastic Activation Pruning (SAP) (Dhillon et al., 2018) is a defense to adversarial examples that was attacked and found to be broken by the "Obfuscated Gradients" paper (Athalye et al., 2018). We discover a flaw in the re-implementation that artificially weakens SAP. When SAP is applied properly, the proposed attack is not effective. However, we show that a new use of the BPDA attack technique can still reduce the accuracy of SAP to 0.1%.

A Partial Break of the Honeypots Defense to Catch Adversarial Attacks

Sep 23, 2020 · Nicholas Carlini

A recent defense proposes to inject "honeypots" into neural networks in order to detect adversarial attacks. We break the baseline version of this defense by reducing the detection true positive rate to 0% and the detection AUC to 0.02, maintaining the original distortion bounds. The authors of the original paper have amended the defense in their CCS'20 paper to mitigate this attack. To aid further research, we release the complete 2.5 hour keystroke-by-keystroke screen recording of our attack process at https://nicholas.carlini.com/code/ccs_honeypot_break.

Label-Only Membership Inference Attacks

Jul 28, 2020 · Christopher A. Choquette Choo, Florian Tramer, Nicholas Carlini, Nicolas Papernot

Membership inference attacks are one of the simplest forms of privacy leakage for machine learning models: given a data point and model, determine whether the point was used to train the model. Existing membership inference attacks exploit models' abnormal confidence when queried on their training data. These attacks do not apply if the adversary only gets access to models' predicted labels, without a confidence measure. In this paper, we introduce label-only membership inference attacks. Instead of relying on confidence scores, our attacks evaluate the robustness of a model's predicted labels under perturbations to obtain a fine-grained membership signal. These perturbations include common data augmentations or adversarial examples. We empirically show that our label-only membership inference attacks perform on par with prior attacks that required access to model confidences. We further demonstrate that label-only attacks break multiple defenses against membership inference attacks that (implicitly or explicitly) rely on a phenomenon we call confidence masking. These defenses modify a model's confidence scores in order to thwart attacks, but leave the model's predicted labels unchanged. Our label-only attacks demonstrate that confidence masking is not a viable defense strategy against membership inference. Finally, we investigate worst-case label-only attacks that infer membership for a small number of outlier data points. We show that label-only attacks also match confidence-based attacks in this setting. We find that training models with differential privacy and (strong) L2 regularization are the only known defense strategies that successfully prevent all attacks. This remains true even when the differential privacy budget is too high to offer meaningful provable guarantees.
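The idea of turning label robustness into a membership signal can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the functions `robustness_score` and `infer_membership`, the Gaussian noise standing in for "common data augmentations", and the threshold value are all hypothetical. The only access to the model is `predict_label`, a callable that returns a hard label with no confidence score.

```python
import random
from typing import Callable, List, Sequence


def robustness_score(
    predict_label: Callable[[List[float]], int],
    x: Sequence[float],
    label: int,
    num_queries: int = 100,
    noise_std: float = 0.1,
    seed: int = 0,
) -> float:
    """Fraction of randomly perturbed copies of x still classified as `label`.

    Only predicted labels are observed, never confidence scores.
    Gaussian noise is an assumed stand-in for a data augmentation.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_queries):
        perturbed = [v + rng.gauss(0.0, noise_std) for v in x]
        if predict_label(perturbed) == label:
            hits += 1
    return hits / num_queries


def infer_membership(score: float, threshold: float = 0.9) -> bool:
    """Guess 'training member' when predictions are highly robust.

    The threshold is hypothetical; in practice it would be calibrated,
    for example on models whose training set the attacker controls.
    """
    return score >= threshold


# Toy label-only model: class 1 if the features sum to a positive value.
def toy_model(features: List[float]) -> int:
    return int(sum(features) > 0)


far_from_boundary = robustness_score(toy_model, [5.0, 5.0], label=1)
print(far_from_boundary, infer_membership(far_from_boundary))
```

The design choice here is that the score is a continuous quantity built from many binary answers, which is what recovers a fine-grained signal from a label-only interface.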

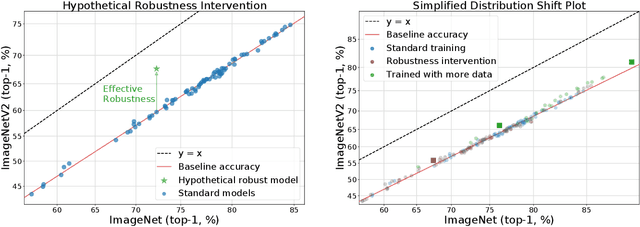

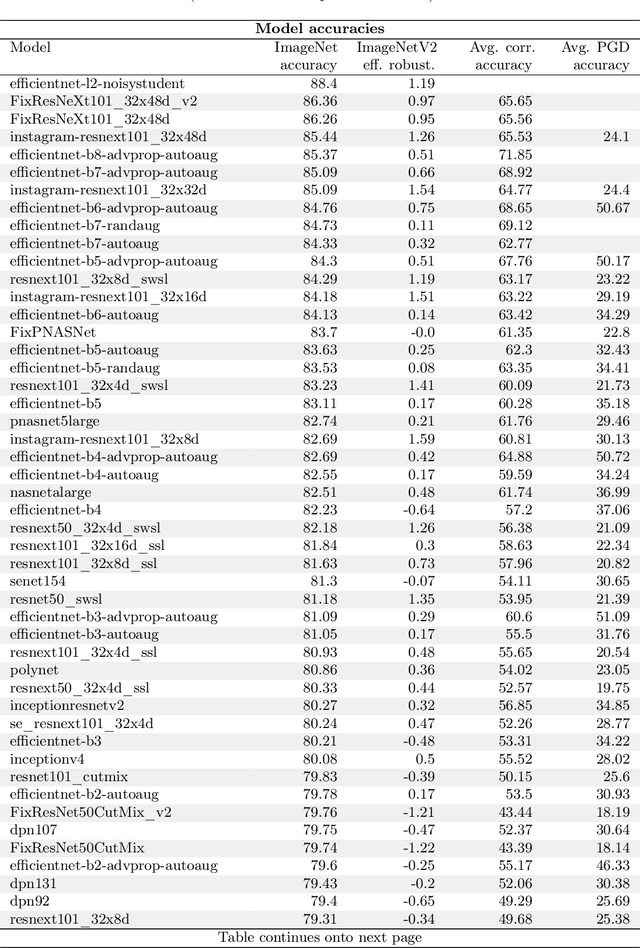

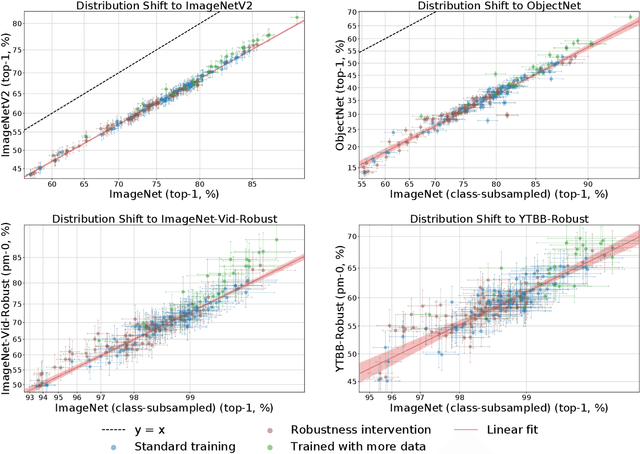

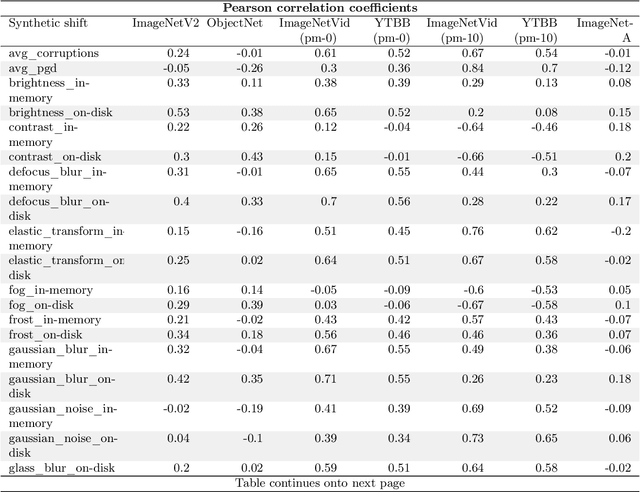

Measuring Robustness to Natural Distribution Shifts in Image Classification

Jul 01, 2020 · Rohan Taori, Achal Dave, Vaishaal Shankar, Nicholas Carlini, Benjamin Recht, Ludwig Schmidt

We study how robust current ImageNet models are to distribution shifts arising from natural variations in datasets. Most research on robustness focuses on synthetic image perturbations (noise, simulated weather artifacts, adversarial examples, etc.), which leaves open how robustness on synthetic distribution shift relates to distribution shift arising in real data. Informed by an evaluation of 196 ImageNet models in 211 different test conditions, we find that there is little to no transfer of robustness from current synthetic to natural distribution shift. Moreover, most current techniques provide no robustness to the natural distribution shifts in our testbed. The main exception is training on larger datasets, which in some cases offers small gains in robustness. Our results indicate that distribution shifts arising in real data are currently an open research problem.
