Efficient Evaluation of Natural Stochastic Policies in Offline Reinforcement Learning

Jun 06, 2020 · Nathan Kallus, Masatoshi Uehara

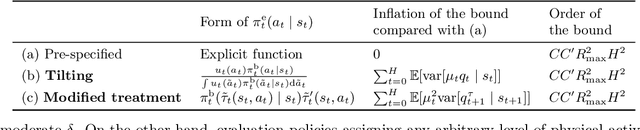

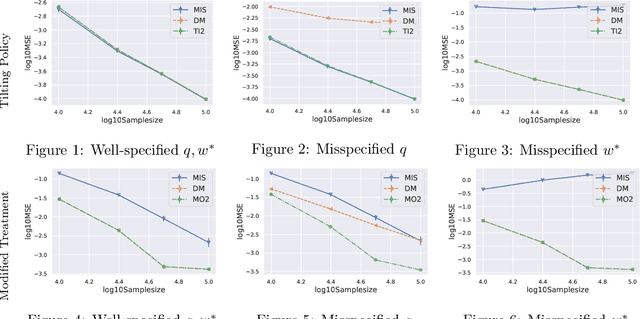

We study the efficient off-policy evaluation of natural stochastic policies, which are defined in terms of deviations from the behavior policy. This is a departure from the literature on off-policy evaluation, where most work considers the evaluation of explicitly specified policies. Crucially, offline reinforcement learning with natural stochastic policies can help alleviate issues of weak overlap, lead to policies that build upon current practice, and improve policies' implementability in practice. Compared with the classic case of a pre-specified evaluation policy, when evaluating natural stochastic policies, the efficiency bound, which measures the best-achievable estimation error, is inflated since the evaluation policy itself is unknown. In this paper we derive the efficiency bounds of two major types of natural stochastic policies: tilting policies and modified treatment policies. We then propose efficient nonparametric estimators that attain the efficiency bounds under very lax conditions. These also enjoy a (partial) double robustness property.
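As a rough illustration of what "defined in terms of deviations from the behavior policy" can mean, the sketch below builds a tilting policy for a discrete action set. It assumes the tilted policy is proportional to `u(a) * pi_b(a | s)` for a user-chosen weight function `u`; that form, the exponential choice of `u`, and the names `tilt_policy` and `exponential_tilt` are illustrative assumptions, not taken from the abstract.

```python
import math

def tilt_policy(behavior_probs, tilt_weights):
    """Return the distribution proportional to u(a) * pi_b(a | s).

    Assumed form of a tilting policy for one fixed state s (illustrative).
    """
    unnormalized = [u * p for u, p in zip(tilt_weights, behavior_probs)]
    total = sum(unnormalized)
    if total <= 0:
        raise ValueError("tilted weights must have positive total mass")
    return [w / total for w in unnormalized]

def exponential_tilt(behavior_probs, actions, tau):
    """Hypothetical choice u(a) = exp(tau * a); tau = 0 recovers the behavior policy."""
    weights = [math.exp(tau * a) for a in actions]
    return tilt_policy(behavior_probs, weights)

# Toy behavior policy over three ordered actions in a single state.
behavior = [0.7, 0.2, 0.1]
actions = [0, 1, 2]
tilted = exponential_tilt(behavior, actions, tau=0.5)

# Density ratio between the tilted and behavior policies; it stays bounded
# because the tilted policy only reweights actions the behavior policy takes.
density_ratio = [t / b for t, b in zip(tilted, behavior)]
```

Because the tilted policy is computed from the behavior policy, it is itself unknown whenever the behavior policy has to be estimated, which is the source of the inflated efficiency bound mentioned in the abstract.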

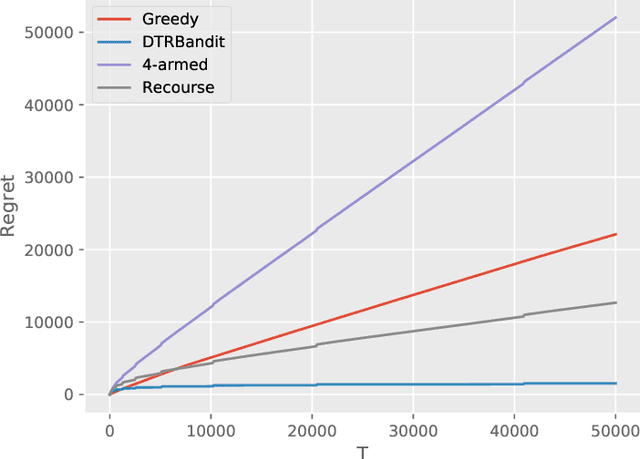

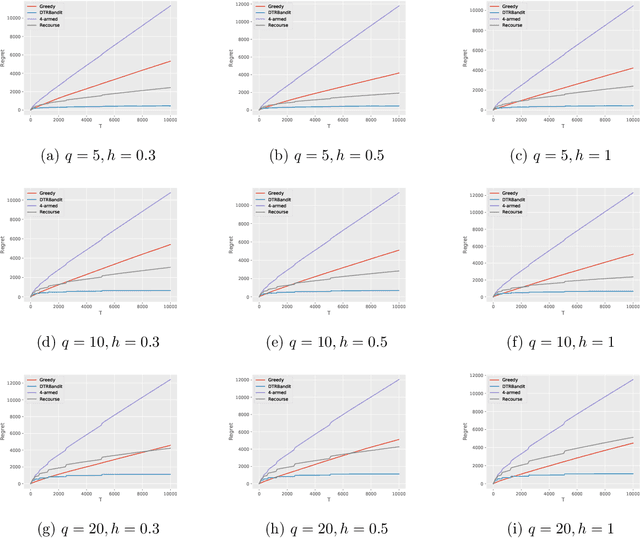

DTR Bandit: Learning to Make Response-Adaptive Decisions With Low Regret

Jun 05, 2020 · Yichun Hu, Nathan Kallus

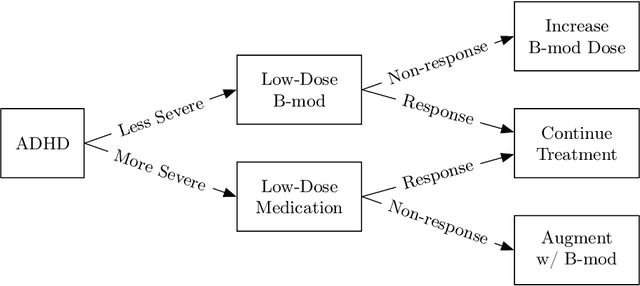

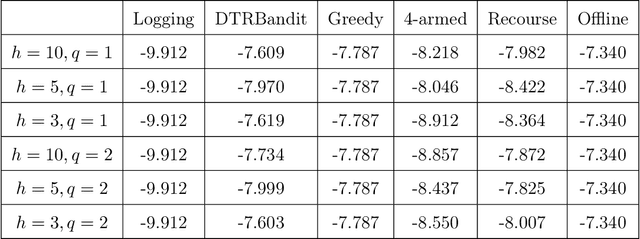

Dynamic treatment regimes (DTRs) are personalized, adaptive, multi-stage treatment plans that adapt treatment decisions both to an individual's initial features and to intermediate outcomes and features at each subsequent stage, which are affected by decisions in prior stages. Examples include personalized first- and second-line treatments of chronic conditions like diabetes, cancer, and depression, which adapt to patient response to first-line treatment, disease progression, and individual characteristics. While existing literature mostly focuses on estimating the optimal DTR from offline data such as from sequentially randomized trials, we study the problem of developing the optimal DTR in an online manner, where the interaction with each individual affects both our cumulative reward and our data collection for future learning. We term this the DTR bandit problem. We propose a novel algorithm that, by carefully balancing exploration and exploitation, is guaranteed to achieve rate-optimal regret when the transition and reward models are linear. We demonstrate our algorithm and its benefits both in synthetic experiments and in a case study of adaptive treatment of major depressive disorder using real-world data.

On the Optimality of Randomization in Experimental Design: How to Randomize for Minimax Variance and Design-Based Inference

May 06, 2020 · Nathan Kallus

I study the minimax-optimal design for a two-arm controlled experiment where conditional mean outcomes may vary in a given set. When this set is permutation symmetric, the optimal design is complete randomization, and using a single partition (i.e., the design that only randomizes the treatment labels for each side of the partition) has minimax risk larger by a factor of $n-1$. More generally, the optimal design is shown to be the mixed-strategy optimal design (MSOD) of Kallus (2018). Notably, even when the set of conditional mean outcomes has structure (i.e., is not permutation symmetric), being minimax-optimal for variance still requires randomization beyond a single partition. Nonetheless, since this targets precision, it may still not ensure sufficient uniformity in randomization to enable randomization (i.e., design-based) inference by Fisher's exact test to appropriately detect violations of the null. I therefore propose the inference-constrained MSOD, which is minimax-optimal among all designs subject to such uniformity constraints. On the way, I discuss Johansson et al. (2020), who recently compared rerandomization of Morgan and Rubin (2012) and the pure-strategy optimal design (PSOD) of Kallus (2018). I point out some errors therein and set straight that randomization is minimax-optimal and that the "no free lunch" theorem and example in Kallus (2018) are correct.
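To make the point about randomization inference concrete, here is a minimal sketch of a Fisher-style randomization test under two designs: complete randomization and a single partition whose labels are flipped. It is only an illustration of why a design with too few possible assignments cannot detect violations of the sharp null; it does not implement the MSOD or the inference-constrained MSOD. The toy outcomes and the names `diff_in_means` and `randomization_p_value` are assumptions made for this example.

```python
from itertools import combinations

def diff_in_means(outcomes, treated):
    """Difference in mean outcome between treated and control units."""
    treated = set(treated)
    t = [y for i, y in enumerate(outcomes) if i in treated]
    c = [y for i, y in enumerate(outcomes) if i not in treated]
    return sum(t) / len(t) - sum(c) / len(c)

def randomization_p_value(outcomes, observed_treated, design):
    """Two-sided p-value under the sharp null of no effect for any unit.

    `design` lists the equally likely treated sets the experiment could
    have produced; outcomes are held fixed under the sharp null.
    """
    observed = abs(diff_in_means(outcomes, observed_treated))
    extreme = sum(
        1 for assignment in design
        if abs(diff_in_means(outcomes, assignment)) >= observed - 1e-12
    )
    return extreme / len(design)

# Toy data: four units, the two with the largest outcomes were treated.
outcomes = [1.0, 2.0, 3.0, 4.0]
observed_treated = (2, 3)
n = len(outcomes)

# Complete randomization: every half-sized treated set is possible.
complete = list(combinations(range(n), n // 2))
# Single partition: only the partition and its label flip are possible.
single_partition = [(2, 3), (0, 1)]

p_complete = randomization_p_value(outcomes, observed_treated, complete)
p_single = randomization_p_value(outcomes, observed_treated, single_partition)
```

With only two possible assignments, the absolute test statistic is the same for both, so the single-partition p-value is always 1 regardless of the data, whereas complete randomization yields 2 extreme assignments out of 6 here.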

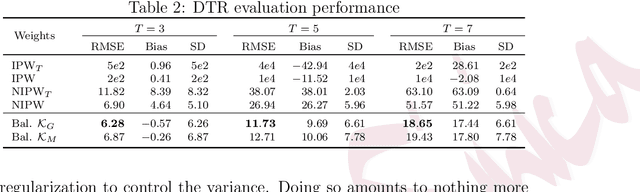

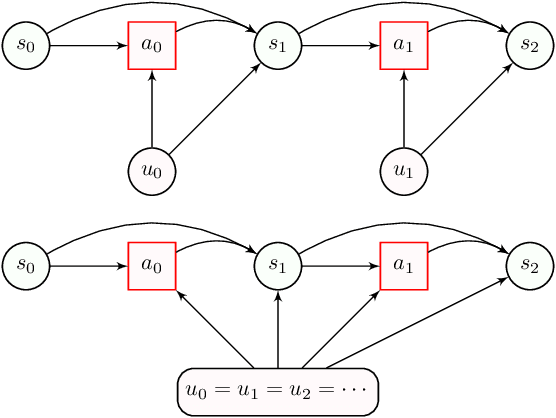

Comment: Entropy Learning for Dynamic Treatment Regimes

Apr 06, 2020 · Nathan Kallus

I congratulate Profs. Binyan Jiang, Rui Song, Jialiang Li, and Donglin Zeng (JSLZ) for an exciting development in conducting inferences on optimal dynamic treatment regimes (DTRs) learned via empirical risk minimization using the entropy loss as a surrogate. JSLZ's approach leverages a rejection-and-importance-sampling estimate of the value of a given decision rule based on inverse probability weighting (IPW) and its interpretation as a weighted (or cost-sensitive) classification. Their use of smooth classification surrogates enables their careful approach to analyzing asymptotic distributions. However, even for evaluation purposes, the IPW estimate is problematic as it leads to weights that discard most of the data and are extremely variable on whatever remains. In this comment, I discuss an optimization-based alternative to evaluating DTRs, review several connections, and suggest directions forward. This extends the balanced policy evaluation approach of Kallus (2018a) to the longitudinal setting.
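The concern about IPW weights can be seen in a small sketch. Assuming a multi-stage setting where a trajectory receives weight equal to the product of inverse propensities when every observed action matches the rule and zero otherwise, the code below computes the IPW value estimate together with the Kish effective sample size. The data, the dictionary layout, and the names `ipw_weight` and `ipw_value` are hypothetical and chosen only for illustration.

```python
def ipw_weight(actions, rule_actions, propensities):
    """Product of inverse propensities if every stage follows the rule, else 0."""
    weight = 1.0
    for a, d, p in zip(actions, rule_actions, propensities):
        if a != d:
            return 0.0  # one mismatch discards the whole trajectory
        weight /= p
    return weight

def ipw_value(trajectories):
    """Return (IPW value estimate, per-trajectory weights, effective sample size)."""
    weights = [
        ipw_weight(t["actions"], t["rule_actions"], t["propensities"])
        for t in trajectories
    ]
    n = len(trajectories)
    value = sum(w * t["outcome"] for w, t in zip(weights, trajectories)) / n
    sum_w = sum(weights)
    sum_w2 = sum(w * w for w in weights)
    ess = (sum_w ** 2) / sum_w2 if sum_w2 > 0 else 0.0
    return value, weights, ess

# Toy two-stage data with propensity 0.5 at each stage; the rule always picks action 1.
rule = [1, 1]
probs = [0.5, 0.5]
trajectories = [
    {"actions": [1, 1], "rule_actions": rule, "propensities": probs, "outcome": 2.0},
    {"actions": [1, 0], "rule_actions": rule, "propensities": probs, "outcome": 1.0},
    {"actions": [0, 1], "rule_actions": rule, "propensities": probs, "outcome": 0.5},
    {"actions": [0, 0], "rule_actions": rule, "propensities": probs, "outcome": 0.0},
]

value, weights, ess = ipw_value(trajectories)
```

In this toy example only one of four trajectories is consistent with the rule at both stages, so three receive zero weight and the effective sample size is 1; the fraction retained shrinks geometrically as stages are added.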

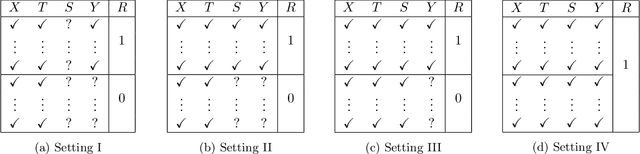

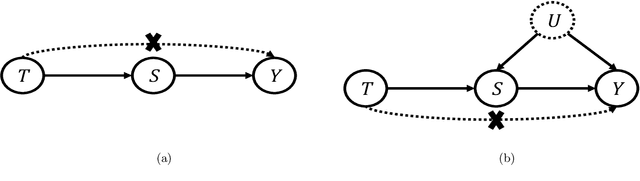

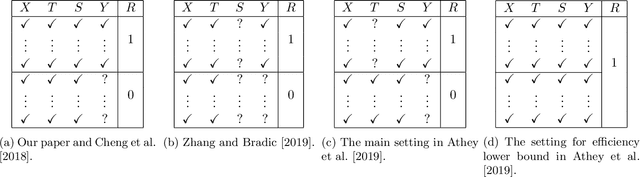

On the role of surrogates in the efficient estimation of treatment effects with limited outcome data

Mar 27, 2020 · Nathan Kallus, Xiaojie Mao

We study the problem of estimating treatment effects when the outcome of primary interest (e.g., long-term health status) is only seldom observed but abundant surrogate observations (e.g., short-term health outcomes) are available. To investigate the role of surrogates in this setting, we derive the semiparametric efficiency lower bounds of the average treatment effect (ATE) both with and without the presence of surrogates, as well as in several intermediary settings. These bounds characterize the best-possible precision of ATE estimation in each case, and their difference quantifies the efficiency gains from optimally leveraging the surrogates in terms of key problem characteristics when only limited outcome data are available. We show these results apply in two important regimes: when the number of surrogate observations is comparable to primary-outcome observations and when the former dominates the latter. Importantly, we take a missing-data approach that circumvents strong surrogate conditions which are commonly assumed in previous literature but almost always fail in practice. To show how to leverage the efficiency gains of surrogate observations, we propose ATE estimators and inferential methods based on flexible machine learning methods to estimate nuisance parameters that appear in the influence functions. We show our estimators enjoy efficiency and robustness guarantees under weak conditions.

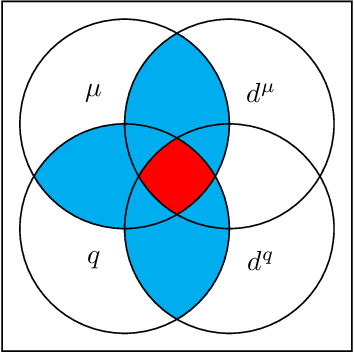

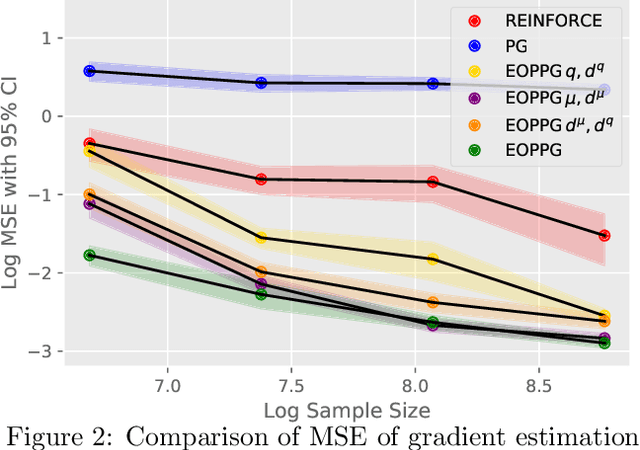

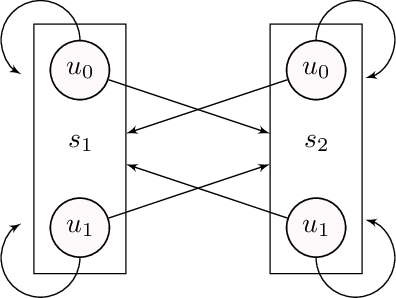

Statistically Efficient Off-Policy Policy Gradients

Feb 20, 2020 · Nathan Kallus, Masatoshi Uehara

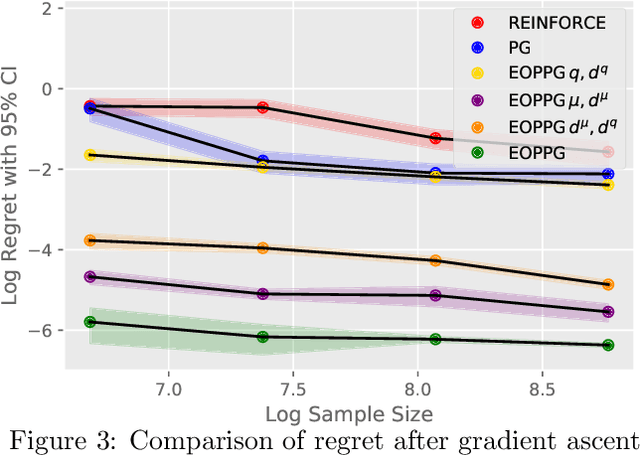

Policy gradient methods in reinforcement learning update policy parameters by taking steps in the direction of an estimated gradient of policy value. In this paper, we consider the statistically efficient estimation of policy gradients from off-policy data, where the estimation is particularly non-trivial. We derive the asymptotic lower bound on the feasible mean-squared error in both Markov and non-Markov decision processes and show that existing estimators fail to achieve it in general settings. We propose a meta-algorithm that achieves the lower bound without any parametric assumptions and exhibits a unique 3-way double robustness property. We discuss how to estimate nuisances that the algorithm relies on. Finally, we establish guarantees on the rate at which we approach a stationary point when we take steps in the direction of our new estimated policy gradient.

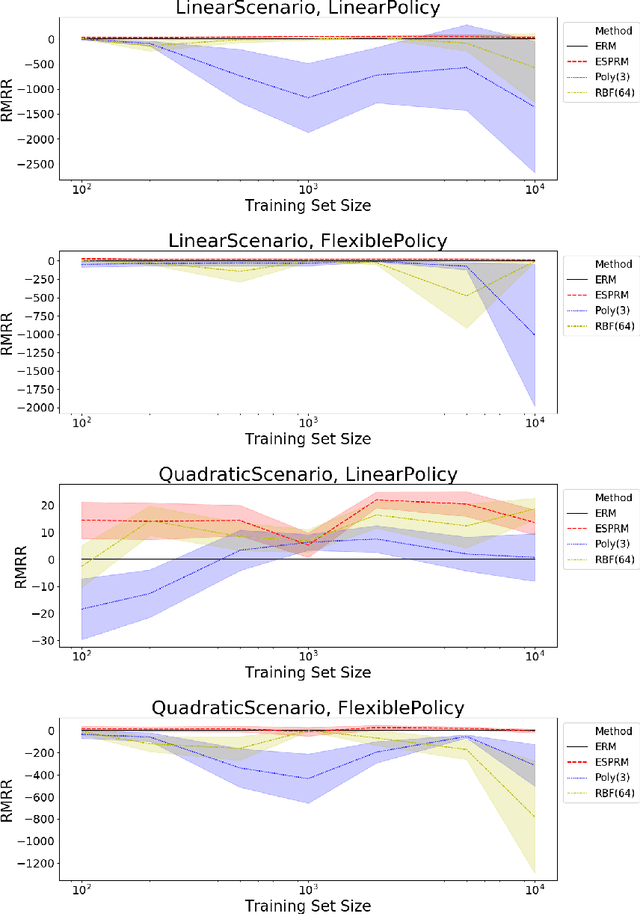

Efficient Policy Learning from Surrogate-Loss Classification Reductions

Feb 12, 2020 · Andrew Bennett, Nathan Kallus

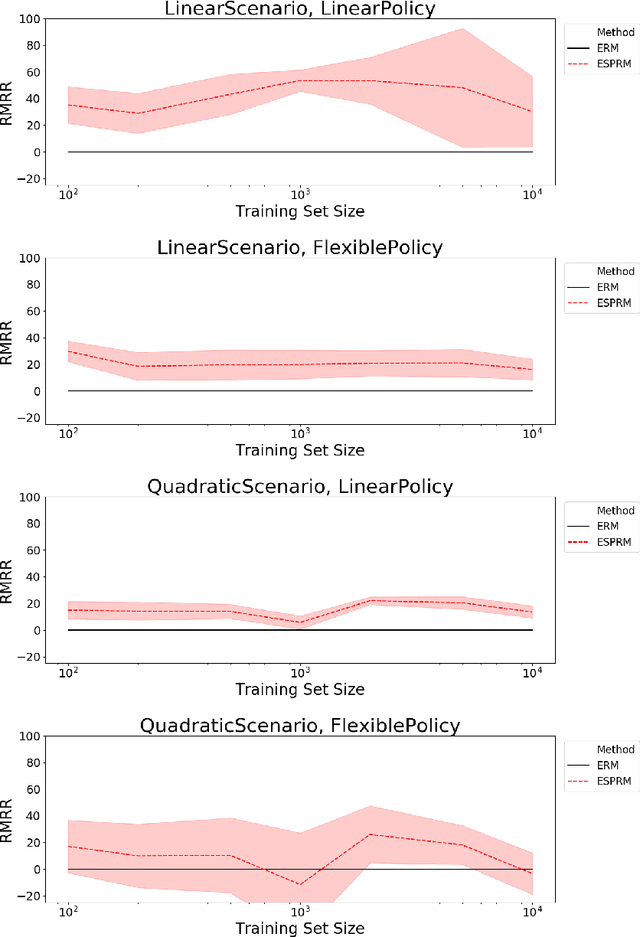

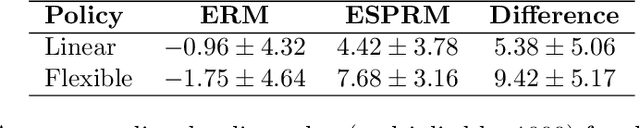

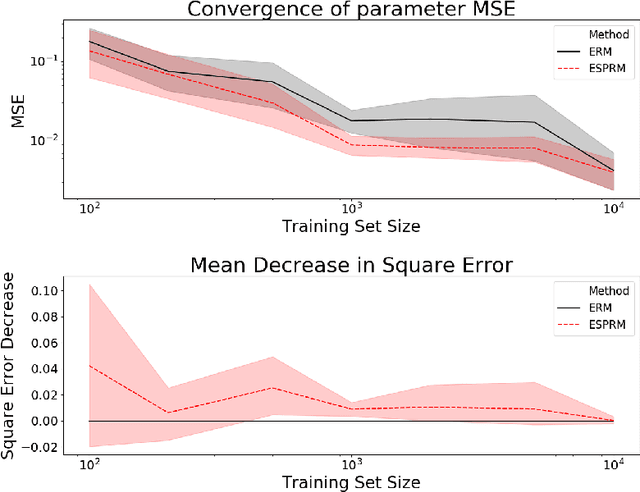

Recent work on policy learning from observational data has highlighted the importance of efficient policy evaluation and has proposed reductions to weighted (cost-sensitive) classification. But efficient policy evaluation need not yield efficient estimation of policy parameters. We consider the estimation problem given by a weighted surrogate-loss classification reduction of policy learning with any score function, either direct, inverse-propensity weighted, or doubly robust. We show that, under a correct specification assumption, the weighted classification formulation need not be efficient for policy parameters. We draw a contrast to actual (possibly weighted) binary classification, where correct specification implies a parametric model, while for policy learning it only implies a semiparametric model. In light of this, we instead propose an estimation approach based on generalized method of moments, which is efficient for the policy parameters. We propose a particular method based on recent developments on solving moment problems using neural networks and demonstrate the efficiency and regret benefits of this method empirically.
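For orientation, the weighted surrogate-loss classification reduction that the abstract analyzes can be sketched as follows. This is the baseline reduction, not the proposed generalized-method-of-moments estimator. The sketch assumes binary actions coded 0/1, a standard doubly robust score, a linear policy index, and a logistic surrogate; the function names and toy numbers are hypothetical.

```python
import math

def dr_score(y, a, e, mu0, mu1):
    """Doubly robust score for the effect of action 1 versus action 0.

    y: outcome, a: observed action in {0, 1}, e: propensity of action 1,
    mu0 / mu1: outcome-model predictions under each action (assumed given).
    """
    mu_a = mu1 if a == 1 else mu0
    return (mu1 - mu0) + (a - e) / (e * (1.0 - e)) * (y - mu_a)

def weighted_logistic_loss(theta, features, scores):
    """Logistic surrogate for weighted classification.

    Each unit has label sign(score) and weight |score|; the policy treats
    when the linear index theta . x is positive.
    """
    total = 0.0
    for x, g in zip(features, scores):
        margin = sum(t * xi for t, xi in zip(theta, x))
        label = 1.0 if g >= 0 else -1.0
        total += abs(g) * math.log1p(math.exp(-label * margin))
    return total / len(features)

# Toy units: (outcome, action, propensity, mu0, mu1) and a feature vector each.
units = [(3.0, 1, 0.5, 1.0, 2.0), (0.0, 0, 0.5, 1.0, 0.5)]
features = [[1.0, 1.0], [1.0, -1.0]]
scores = [dr_score(*u) for u in units]

loss_at_zero = weighted_logistic_loss([0.0, 0.0], features, scores)
loss_aligned = weighted_logistic_loss([0.0, 1.0], features, scores)
```

Minimizing this loss over `theta` gives the classification-reduction estimate of the policy parameters; the abstract's point is that this estimate need not be efficient even when the score itself is.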

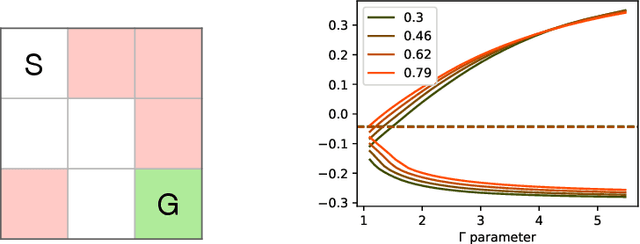

Confounding-Robust Policy Evaluation in Infinite-Horizon Reinforcement Learning

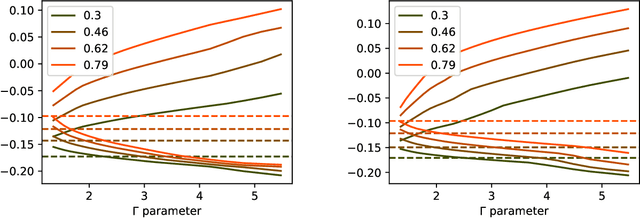

Feb 11, 2020 · Nathan Kallus, Angela Zhou

Off-policy evaluation of sequential decision policies from observational data is necessary in applications of batch reinforcement learning such as education and healthcare. In such settings, however, observed actions are often confounded with transitions by unobserved variables, rendering exact evaluation of new policies impossible, i.e., unidentifiable. We develop a robust approach that estimates sharp bounds on the (unidentifiable) value of a given policy in an infinite-horizon problem given data from another policy with unobserved confounding subject to a sensitivity model. We phrase the problem precisely as computing the support function of the set of all stationary state-occupancy ratios that agree with both the data and the sensitivity model. We show how to express this set using a new partially identified estimating equation and prove convergence to the sharp bounds as we collect more confounded data. We prove that membership in the set can be checked by solving a linear program, while the support function is given by a difficult nonconvex optimization problem. We leverage an analytical solution for the finite-state-space case to develop approximations based on nonconvex projected gradient descent. We demonstrate the resulting bounds empirically.
