Murali Mohana Krishna Dandu

Composable NLP Workflows for BERT-based Ranking and QA System

Apr 13, 2025

Abstract: There has been a lot of progress towards building NLP models that scale to multiple tasks. However, real-world systems contain multiple components, and it is tedious to handle cross-task interaction with varying levels of text granularity. In this work, we built an end-to-end Ranking and Question-Answering (QA) system using Forte, a toolkit for building composable NLP pipelines. We used state-of-the-art deep learning models such as BERT and RoBERTa in our pipeline, evaluated performance on the MS-MARCO and Covid-19 datasets using metrics such as BLEU, MRR, and F1, and compared the results of the ranking and QA systems with their corresponding benchmark results. The modular nature of our pipeline and the low latency of the reranker make it easy to build complex NLP applications.

Unsupervised paradigm for information extraction from transcripts using BERT

Oct 09, 2021

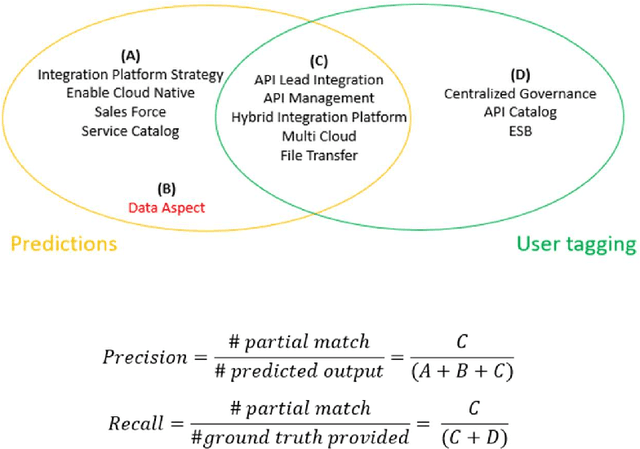

Abstract: Audio call transcripts are a valuable source of information for multiple downstream use cases, such as understanding the voice of the customer and analyzing agent performance. However, these transcripts are noisy, and in an industry setting, obtaining tagged ground truth data is a challenge. In this paper, we present a solution implemented in industry that uses BERT language models as part of our pipeline to extract the key topics and multiple open intents discussed in a call. A second problem we address is the automatic tagging of transcripts into predefined categories, which is traditionally solved using a supervised approach. To overcome the lack of tagged data, all our proposed approaches use unsupervised methods to solve the outlined problems. We evaluate the results by quantitatively comparing the automatically extracted topics, intents, and tagged categories with human-tagged ground truth, and by qualitatively assessing the valuable concepts and intents that are not present in the ground truth. We achieved near-human accuracy in extracting these topics and intents using our novel approach.
