Morteza Haghir Chehreghani

Model-Centric and Data-Centric Aspects of Active Learning for Neural Network Models

Oct 08, 2020

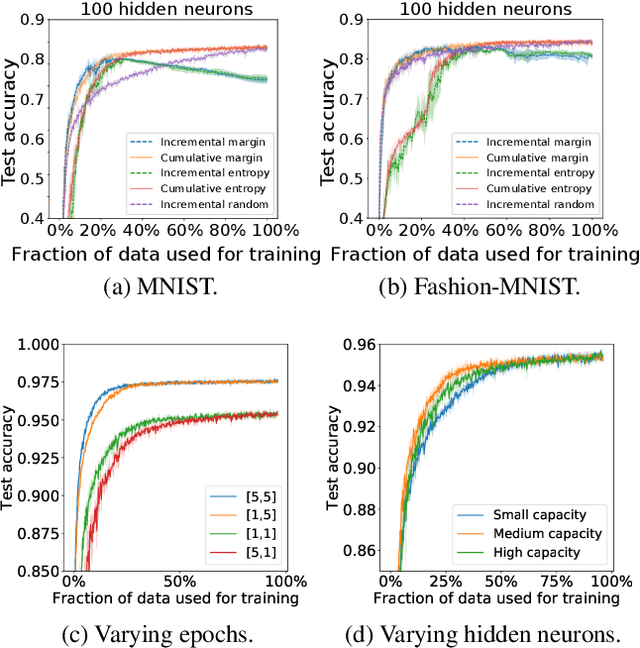

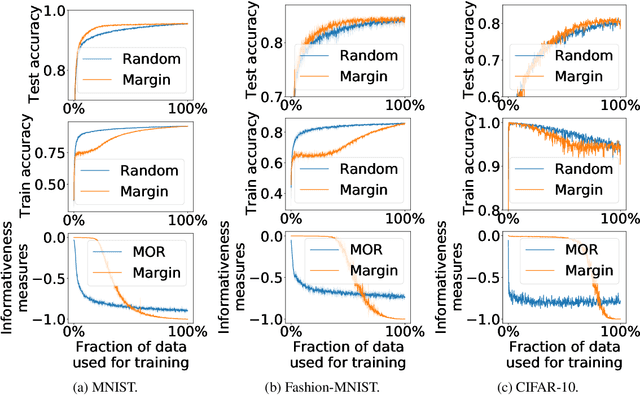

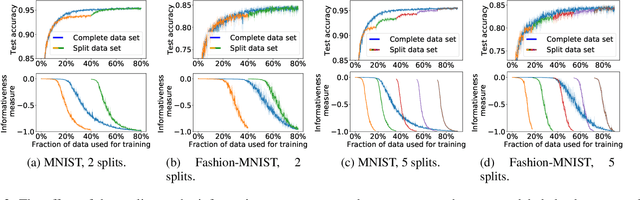

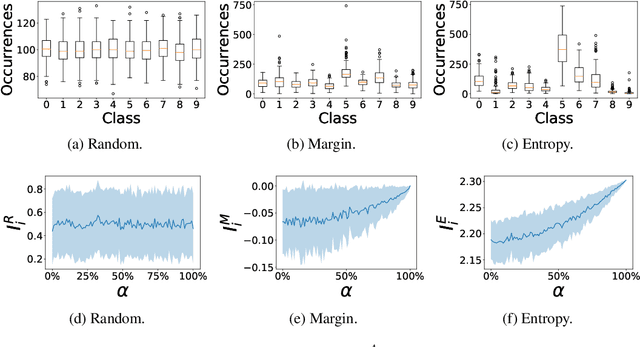

Abstract: We study different data-centric and model-centric aspects of active learning with neural network models. i) We investigate incremental and cumulative training modes that specify how the currently labeled data are used for training. ii) Neural networks are models with a large capacity. Thus, we study how active learning depends on the number of epochs and neurons as well as the choice of batch size. iii) We analyze in detail the behavior of query strategies and their corresponding informativeness measures and accordingly propose more efficient querying and active learning paradigms. iv) We perform statistical analyses, e.g., on actively learned classes and test error estimation, that reveal several insights about active learning.
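As a rough illustration of the terms used in this abstract, the sketch below shows one common informativeness measure (predictive entropy) and one possible reading of the two training modes. Both are assumptions made for illustration: the abstract names neither the measure nor the exact definition of the modes, and the function names `entropy`, `query_batch`, and `training_set` are hypothetical.

```python
import math

def entropy(probs):
    """Predictive entropy of one class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def query_batch(pool_probs, k):
    """Return indices of the k pool items with the highest entropy.

    Entropy is used here only as a familiar example of an
    informativeness measure; the paper may study others.
    """
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: entropy(pool_probs[i]),
                    reverse=True)
    return ranked[:k]

def training_set(labeled_so_far, new_batch, mode):
    """Select the data used in the next training round.

    Assumed reading of the two modes:
    - "cumulative": train on everything labeled so far plus the new batch
    - "incremental": continue training on the newly labeled batch only
    """
    if mode == "cumulative":
        return list(labeled_so_far) + list(new_batch)
    if mode == "incremental":
        return list(new_batch)
    raise ValueError(f"unknown mode: {mode}")
```

For example, `query_batch([[0.5, 0.5], [0.9, 0.1], [0.6, 0.4]], 2)` picks the two items whose predictions are closest to uniform.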

A Generic Framework for Clustering Vehicle Motion Trajectories

Sep 25, 2020

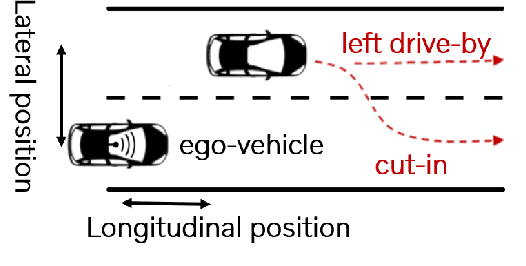

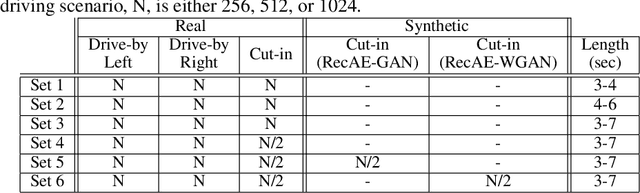

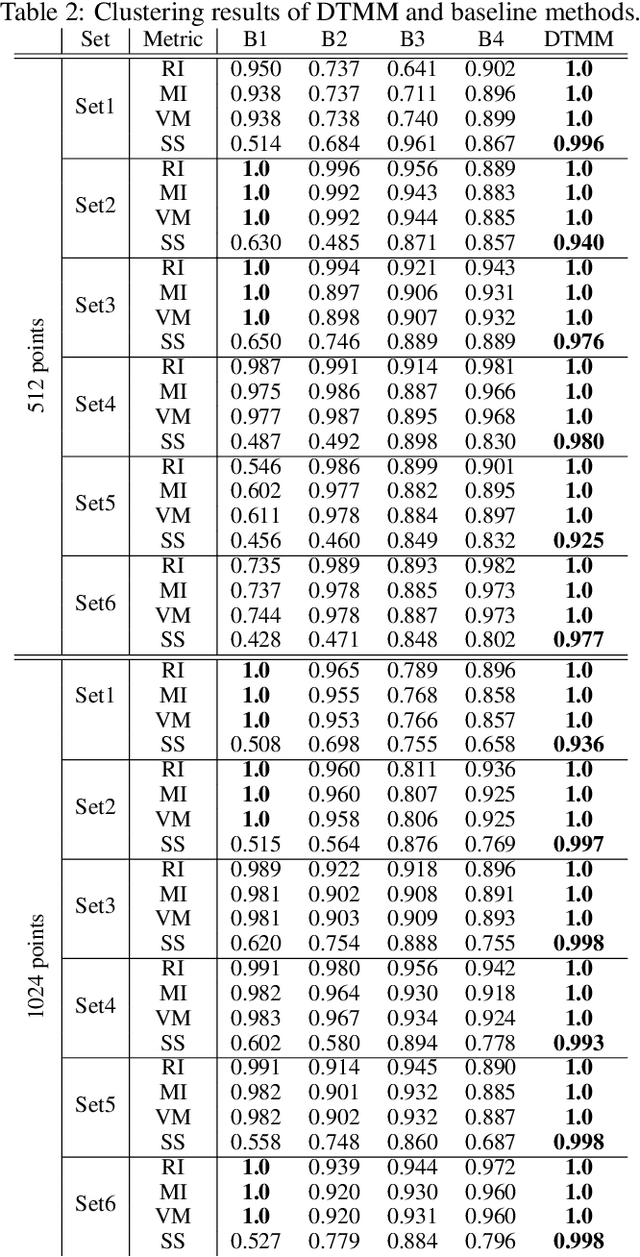

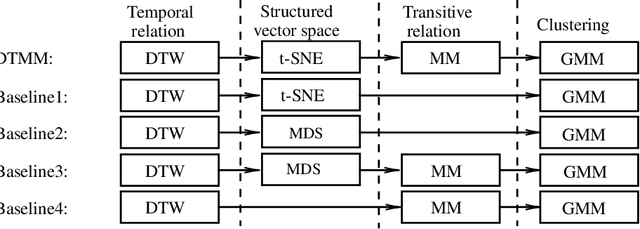

Abstract: The development of autonomous vehicles requires access to a large amount of data on the relevant driving scenarios. However, manual annotation of such driving scenarios is costly and subject to the errors of rule-based trajectory labeling systems. To address this issue, we propose an effective non-parametric trajectory clustering framework consisting of five stages: (1) aligning trajectories and quantifying their pairwise temporal dissimilarities, (2) embedding the trajectory-based dissimilarities into a vector space, (3) extracting transitive relations, (4) embedding the transitive relations into a new vector space, and (5) clustering the trajectories with an optimal number of clusters. We investigate and evaluate the proposed framework on a challenging real-world dataset consisting of annotated trajectories. We observe that the proposed framework achieves promising results, despite the complexity caused by having trajectories of varying length. Furthermore, we extend the framework to validate the augmentation of the real dataset with synthetic data generated by a Generative Adversarial Network (GAN), where we examine whether the generated trajectories are consistent with the true underlying clusters.

Efficient Optimization of Dominant Set Clustering with Frank-Wolfe Algorithms

Aug 05, 2020

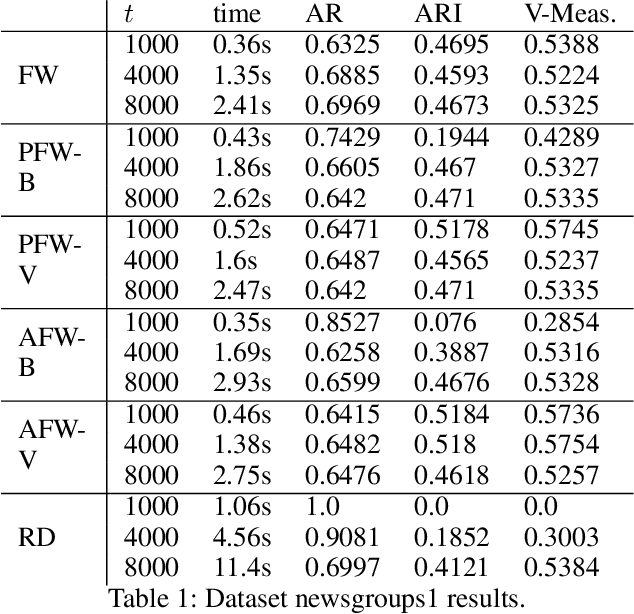

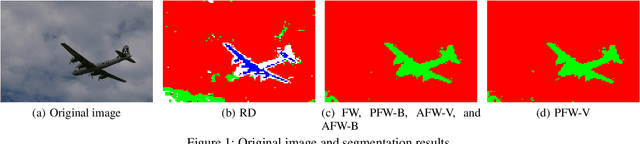

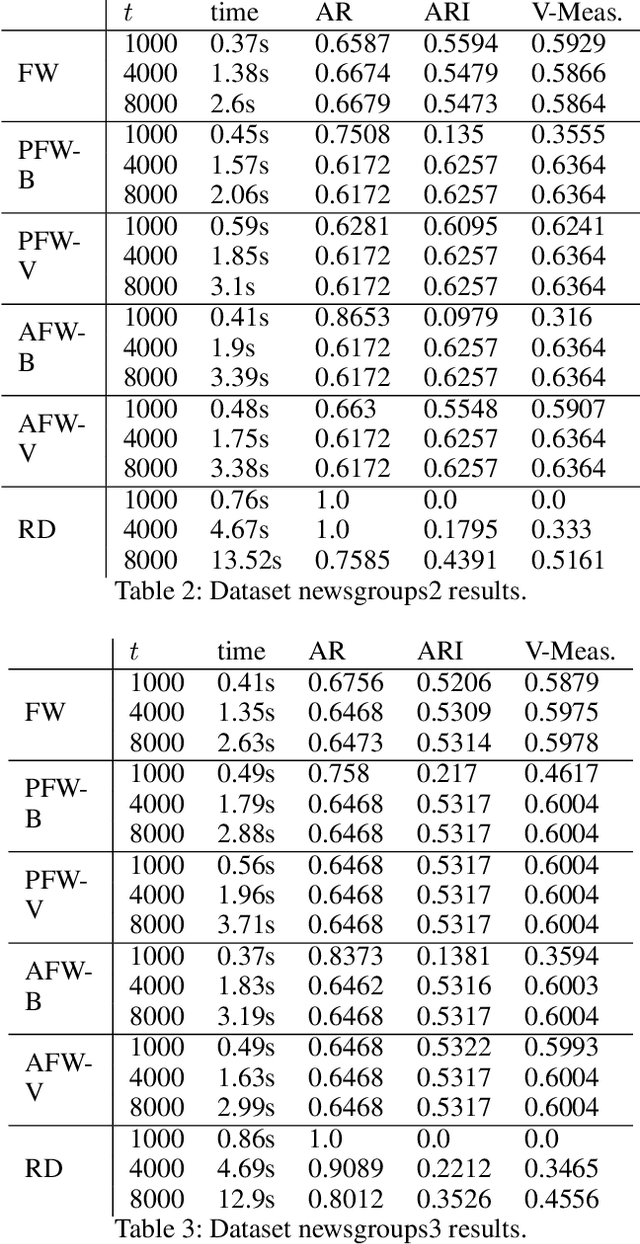

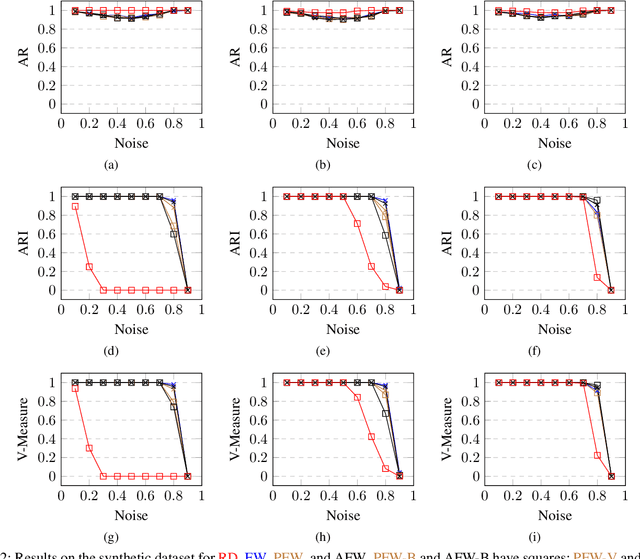

Abstract: We study Frank-Wolfe algorithms -- standard, pairwise, and away-steps -- for efficient optimization of Dominant Set Clustering. We present a unified and computationally efficient framework to employ the different variants of Frank-Wolfe methods, and we investigate its effectiveness via several experimental studies. In addition, we provide explicit convergence rates for the algorithms in terms of the so-called Frank-Wolfe gap. The theoretical analysis has been specialized to the problem of Dominant Set Clustering and is thus more easily accessible compared to prior work.
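To make the setting concrete, here is a minimal sketch of the standard Frank-Wolfe variant only, applied to the usual dominant-set objective of maximizing x^T A x over the probability simplex. It assumes a symmetric, non-negative similarity matrix `A` with zero diagonal, uses exact line search, and is not the authors' framework; the function name and parameters are hypothetical.

```python
def frank_wolfe_dominant_set(A, iters=200, tol=1e-9):
    """Standard Frank-Wolfe ascent for max x^T A x on the simplex.

    A: symmetric similarity matrix as a list of lists (assumption).
    Returns the final point x and the last Frank-Wolfe gap.
    """
    n = len(A)
    x = [1.0 / n] * n                      # start at the simplex barycenter
    gap = float("inf")
    for _ in range(iters):
        Ax = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        fx = sum(x[i] * Ax[i] for i in range(n))      # objective x^T A x
        # Linear maximization over the simplex picks the best vertex e_i.
        i_star = max(range(n), key=lambda i: Ax[i])
        # Frank-Wolfe gap: gradient (2Ax) dotted with (e_i - x).
        gap = 2.0 * (Ax[i_star] - fx)
        if gap <= tol:
            break
        # With d = e_i - x: d^T A x and d^T A d (uses symmetry of A).
        dAx = Ax[i_star] - fx
        dAd = A[i_star][i_star] - 2.0 * Ax[i_star] + fx
        # Exact line search for the quadratic along d, clipped to [0, 1].
        gamma = 1.0 if dAd >= 0 else min(1.0, -dAx / dAd)
        x = [(1.0 - gamma) * xj for xj in x]
        x[i_star] += gamma
    return x, gap
```

On a small matrix with one tightly connected group, the returned `x` concentrates its mass on that group, and the gap gives a stopping criterion of the kind the abstract refers to.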

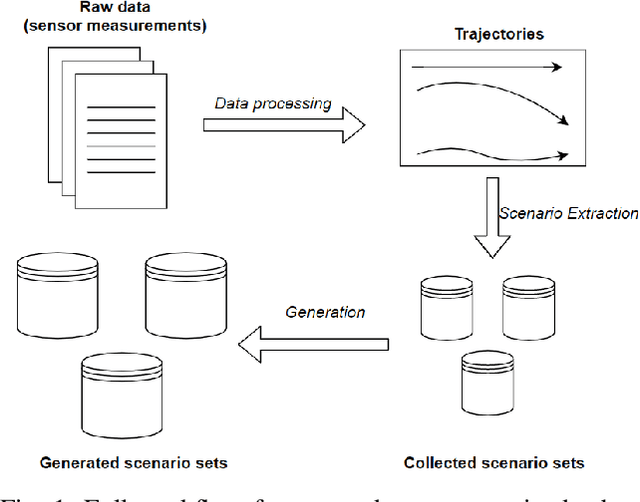

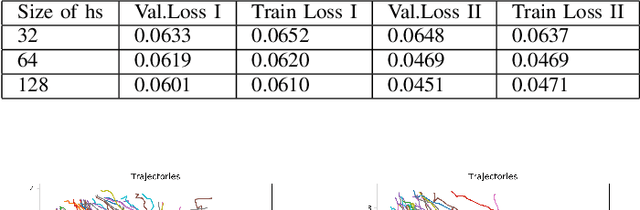

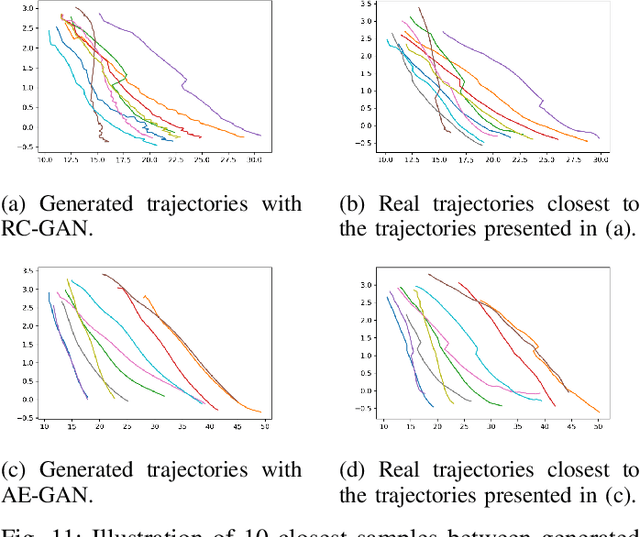

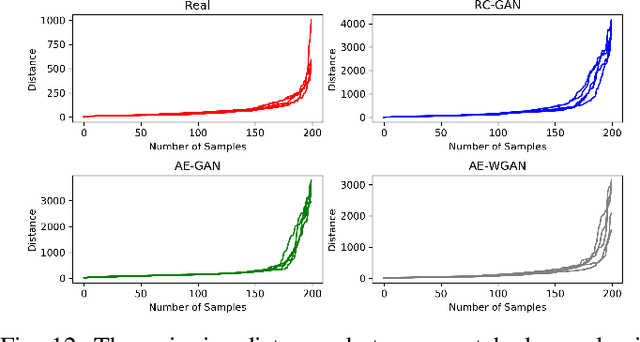

A Deep Learning Framework for Generation and Analysis of Driving Scenario Trajectories

Jul 28, 2020

Abstract: We propose a unified deep learning framework for generation and analysis of driving scenario trajectories, and validate its effectiveness in a principled way. In order to model and generate scenarios of trajectories with different lengths, we develop two approaches. First, we adapt the Recurrent Conditional Generative Adversarial Networks (RC-GAN) by conditioning on the length of the trajectories. This gives us the flexibility to generate variable-length driving trajectories, a desirable feature for scenario test case generation in the verification of self-driving cars. Second, we develop an architecture based on a Recurrent Autoencoder with GANs in order to obviate the variable-length issue, wherein we train a GAN to learn and generate the latent representations of the original trajectories. In this approach, we train an integrated feed-forward neural network to estimate the length of the trajectories so that they can be brought back from the latent space representation. In addition to trajectory generation, we employ the trained autoencoder as a feature extractor, for the purpose of clustering and anomaly detection, in order to obtain further insights into the collected scenario dataset. We experimentally investigate the performance of the proposed framework on real-world scenario trajectories obtained from in-field data collection.

Memory-Efficient Sampling for Minimax Distance Measures

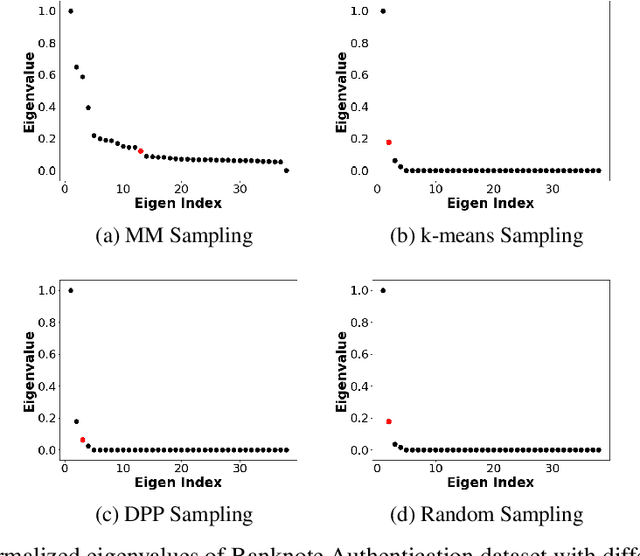

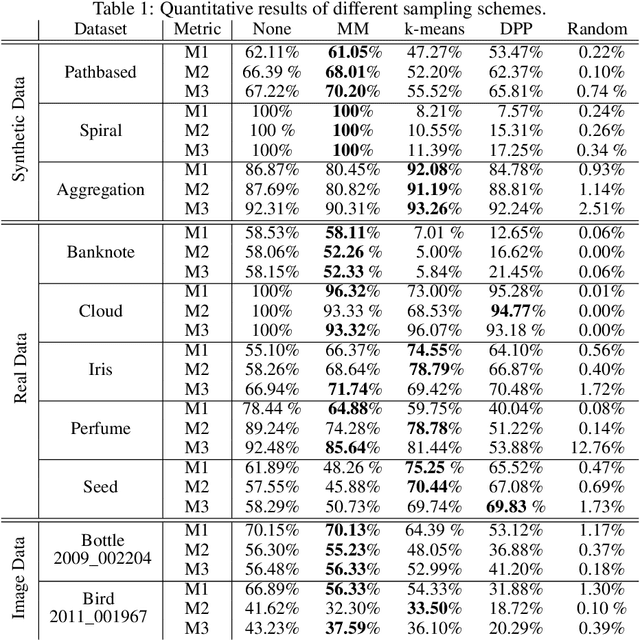

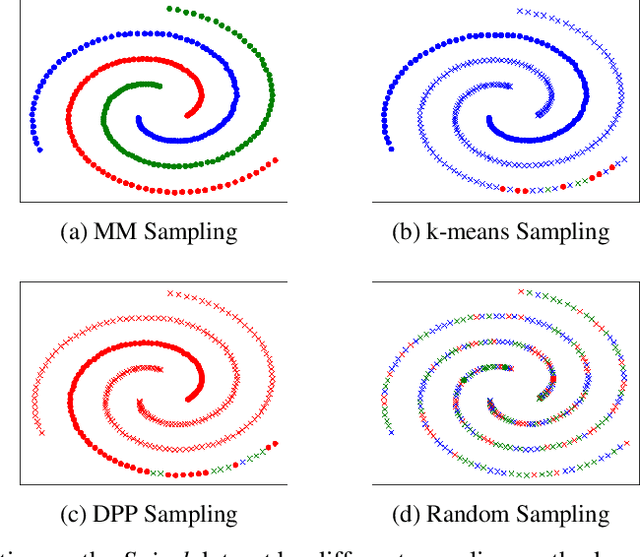

May 26, 2020

Abstract: Minimax distance measures extract the underlying patterns and manifolds in an unsupervised manner. The existing methods require memory that is quadratic in the number of objects. In this paper, we investigate efficient sampling schemes in order to reduce the memory requirement and provide a linear space complexity. In particular, we propose a novel sampling technique that adapts well to Minimax distances. We evaluate the methods on real-world datasets from different domains and analyze the results.
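For readers unfamiliar with the term, the sketch below computes pairwise Minimax distances in the straightforward way: the Minimax distance between two objects is the smallest possible value of the largest edge along any path connecting them. This is a baseline that stores the full n-by-n matrix, i.e. the quadratic-memory setting the abstract wants to avoid; it does not implement the paper's sampling scheme, and the function name is hypothetical.

```python
def minimax_distances(D):
    """All-pairs Minimax distances from a dissimilarity matrix D.

    Uses a Floyd-Warshall-style recursion in which path cost is the
    maximum edge on the path rather than the sum of edges.
    Memory is O(n^2) and time is O(n^3).
    """
    n = len(D)
    M = [row[:] for row in D]              # copy so D is left untouched
    for k in range(n):
        for i in range(n):
            for j in range(n):
                # Cost of going from i to j through k is the larger
                # of the two bottleneck costs.
                via_k = max(M[i][k], M[k][j])
                if via_k < M[i][j]:
                    M[i][j] = via_k
    return M
```

For three points on a line at positions 0, 1, and 3, the direct distance between the outer points is 3, but the path through the middle point has largest hop 2, so their Minimax distance is 2.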

On the Unreasonable Effectiveness of Knowledge Distillation: Analysis in the Kernel Regime

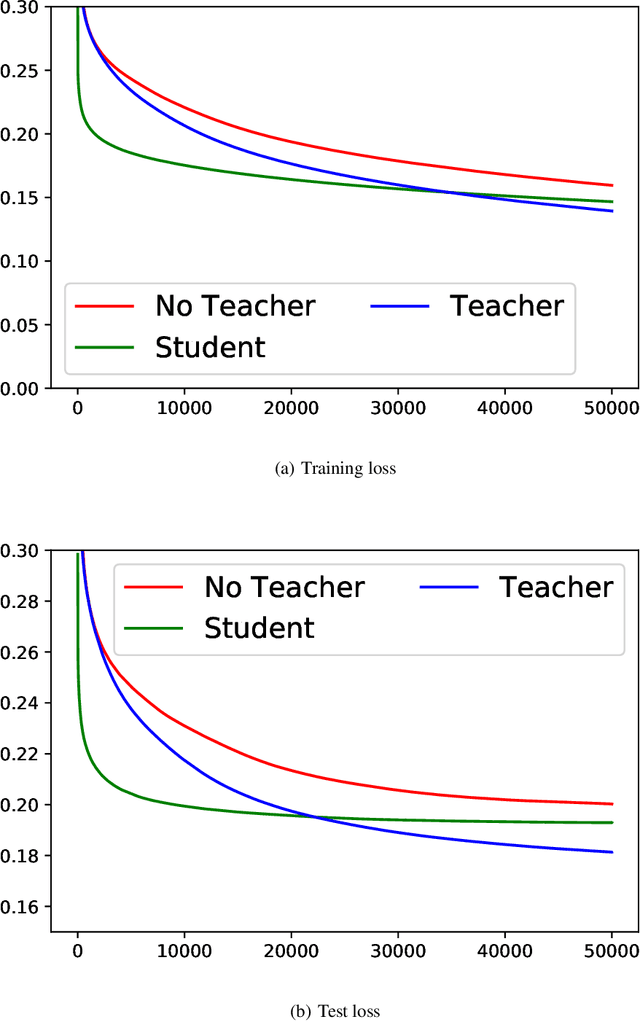

Mar 30, 2020

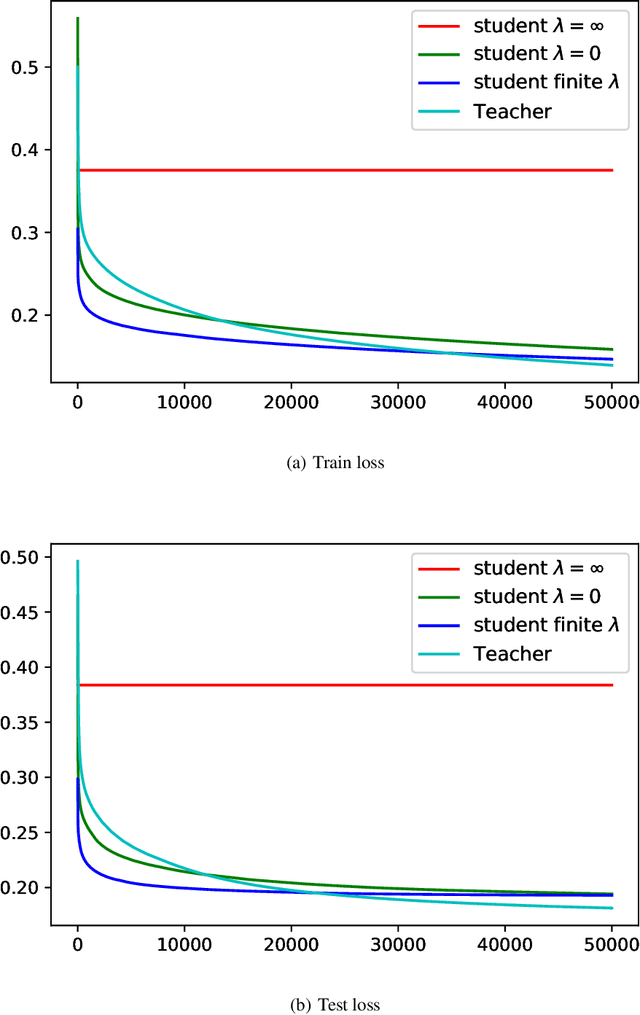

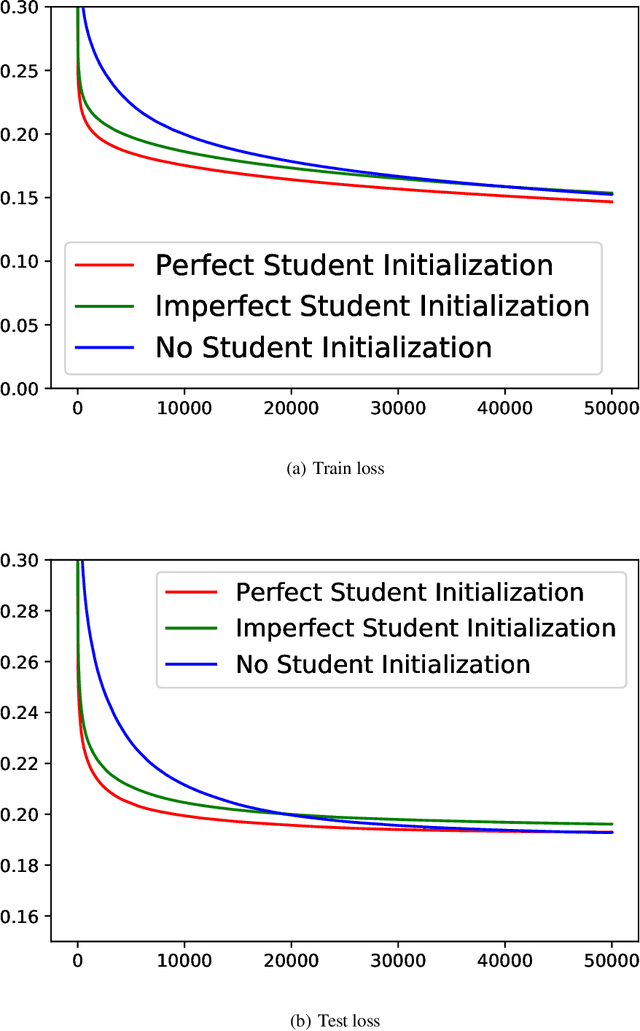

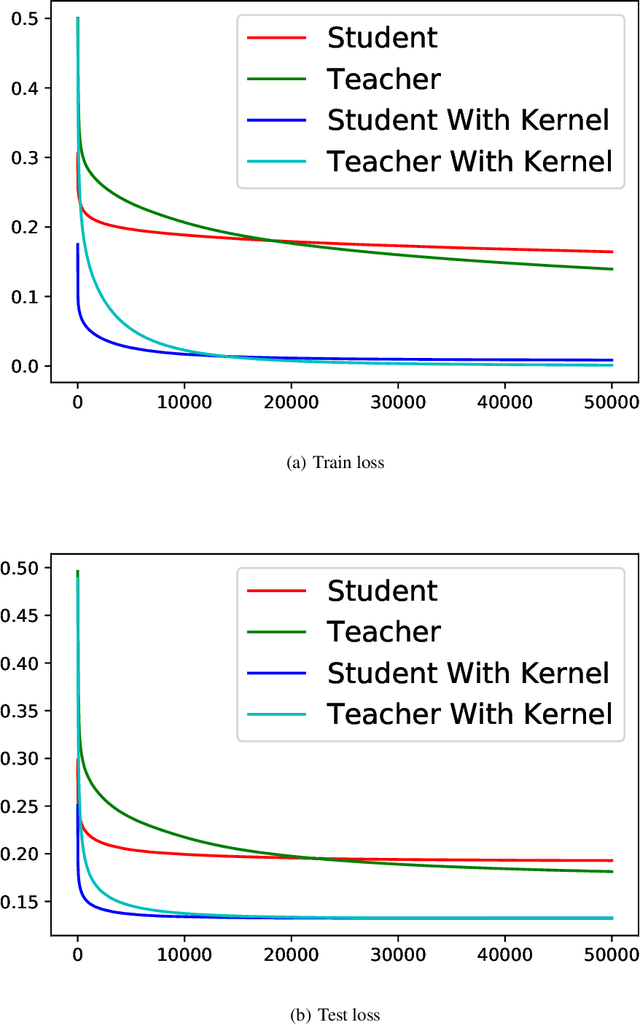

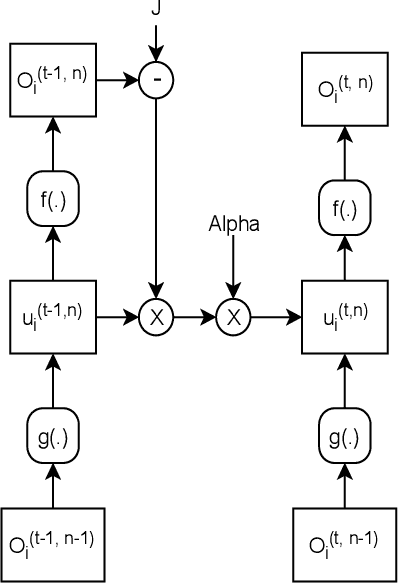

Abstract: Knowledge distillation (KD), i.e., one classifier being trained on the outputs of another classifier, is an empirically very successful technique for knowledge transfer between classifiers. It has even been observed that classifiers learn much faster and more reliably if trained with the outputs of another classifier as soft labels, instead of from ground truth data. However, there has been little or no theoretical analysis of this phenomenon. We provide the first theoretical analysis of KD in the setting of extremely wide two-layer non-linear networks, in the model and regime of (Arora et al., 2019; Du & Hu, 2019; Cao & Gu, 2019). We prove results on what the student network learns and on the rate of convergence for the student network. Intriguingly, we also confirm the lottery ticket hypothesis (Frankle & Carbin, 2019) in this model. To prove our results, we extend the repertoire of techniques from linear systems dynamics. We give corresponding experimental analysis that validates the theoretical results and yields additional insights.
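The idea of training on soft labels can be illustrated with a generic distillation loss: the cross-entropy between the teacher's output distribution and the student's. This is a textbook-style sketch rather than the loss analyzed in the paper, which the abstract does not specify; the `temperature` parameter and function names are assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """Cross-entropy of the student against the teacher's soft labels."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))
```

The loss is smallest when the student reproduces the teacher's distribution, which is what "training on the outputs of another classifier" amounts to in this simplified form.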

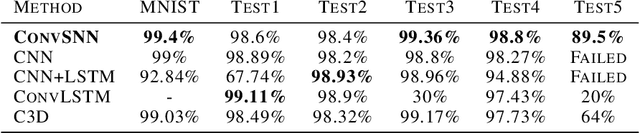

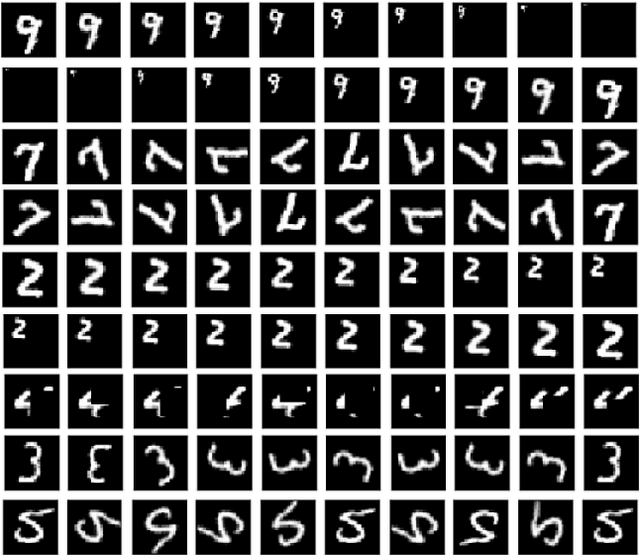

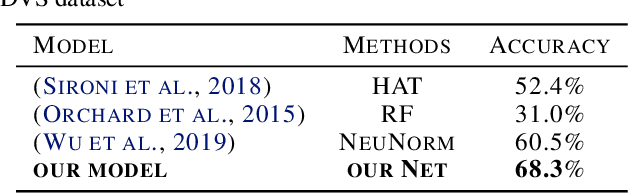

Convolutional Spiking Neural Networks for Spatio-Temporal Feature Extraction

Mar 27, 2020

Abstract: Spiking neural networks (SNNs) can be used in low-power and embedded systems (such as emerging neuromorphic chips) due to their event-based nature. They also have a low computation cost compared with conventional artificial neural networks (ANNs), while preserving the properties of ANNs. However, temporal coding in layers of convolutional spiking neural networks and other types of SNNs has yet to be studied. In this paper, we provide insight into spatio-temporal feature extraction of convolutional SNNs in experiments designed to exploit this property. Our proposed shallow convolutional SNN outperforms state-of-the-art spatio-temporal feature extraction methods such as C3D, ConvLSTM, and similar networks. Furthermore, we present a new deep spiking architecture to tackle real-world problems (in particular classification tasks), and the model achieves superior performance compared to other SNN methods on CIFAR10-DVS. It is also worth noting that the training process is based on spatio-temporal backpropagation, so ANN-to-SNN conversion methods serve no purpose here.

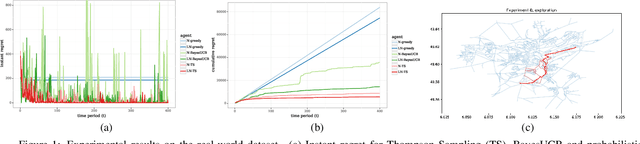

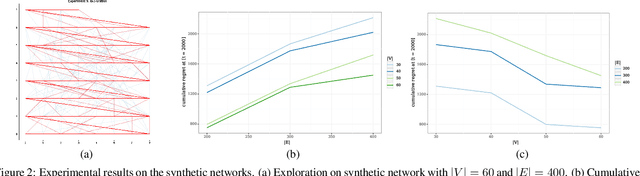

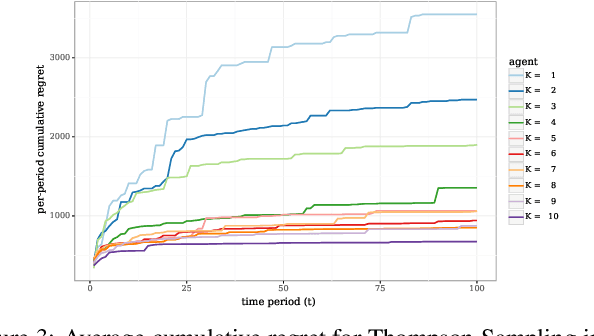

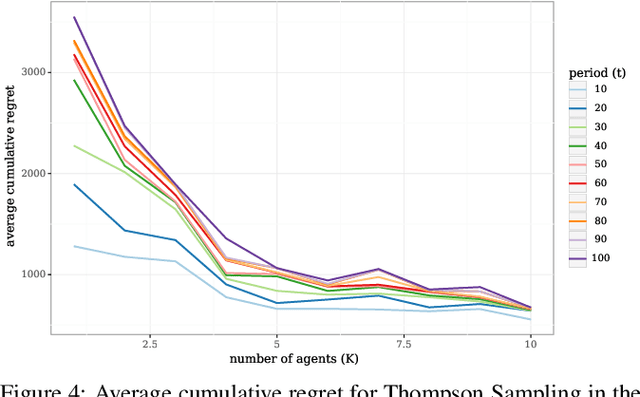

An Online Learning Framework for Energy-Efficient Navigation of Electric Vehicles

Mar 03, 2020

Abstract: Energy-efficient navigation constitutes an important challenge in electric vehicles, due to their limited battery capacity. We employ a Bayesian approach to model energy consumption at road segments for efficient navigation. In order to learn the model parameters, we develop an online learning framework and investigate several exploration strategies such as Thompson Sampling and Upper Confidence Bound. We then extend our online learning framework to a multi-agent setting, where multiple vehicles adaptively navigate and learn the parameters of the energy model. We analyze Thompson Sampling and establish rigorous regret bounds on its performance. Finally, we demonstrate the performance of our methods via several real-world experiments on the Luxembourg SUMO Traffic dataset.
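A toy version of Thompson Sampling for route choice can make the online learning loop concrete. The sketch assumes a Gaussian energy model per road segment with known noise, a standard-normal prior, and a small fixed list of candidate routes; none of these details come from the abstract, and all names (`update_posterior`, `choose_route`, `simulate`) are hypothetical.

```python
import random

def update_posterior(mu, prec, y, noise_prec):
    """Conjugate Gaussian update of a segment's mean energy estimate."""
    new_prec = prec + noise_prec
    new_mu = (prec * mu + noise_prec * y) / new_prec
    return new_mu, new_prec

def choose_route(routes, post, rng):
    """Sample a mean energy per segment, pick the cheapest route index."""
    sampled = {s: rng.gauss(mu, prec ** -0.5) for s, (mu, prec) in post.items()}
    return min(range(len(routes)),
               key=lambda r: sum(sampled[s] for s in routes[r]))

def simulate(routes, true_mean, noise_std, rounds, seed=0):
    """Run the sample / drive / observe / update loop on synthetic data."""
    rng = random.Random(seed)
    post = {s: (0.0, 1.0) for s in true_mean}   # assumed N(0, 1) prior
    counts = [0] * len(routes)
    for _ in range(rounds):
        r = choose_route(routes, post, rng)
        counts[r] += 1
        for s in routes[r]:
            # Observe noisy energy on each traversed segment.
            y = rng.gauss(true_mean[s], noise_std)
            mu, prec = post[s]
            post[s] = update_posterior(mu, prec, y, noise_std ** -2)
    return post, counts
```

A call such as `simulate([["a", "b"], ["c", "d"]], {"a": 1.0, "b": 1.0, "c": 5.0, "d": 5.0}, 0.5, 200)` returns the segment posteriors and how often each route was driven; in a real road network the route choice would be a shortest-path computation over sampled segment costs instead of a scan over a fixed list.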

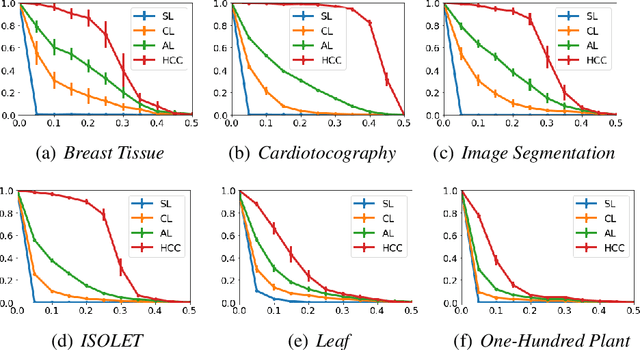

Hierarchical Correlation Clustering and Tree Preserving Embedding

Feb 18, 2020

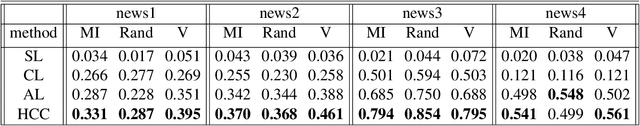

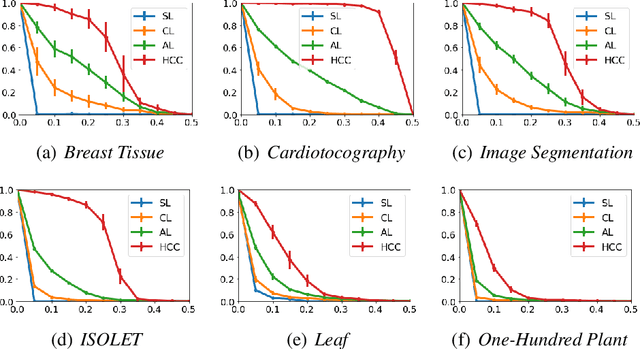

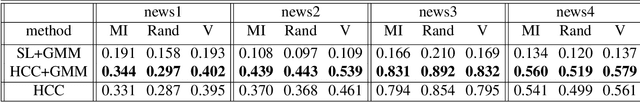

Abstract: We propose a hierarchical correlation clustering method that extends the well-known correlation clustering to produce hierarchical clusters. We then investigate embedding the respective hierarchy to be used for (tree preserving) embedding and feature extraction. We study the connection of such an embedding to single linkage embedding and minimax distances, and in particular study minimax distances for correlation clustering. Finally, we demonstrate the performance of our methods on several UCI and 20 newsgroup datasets.

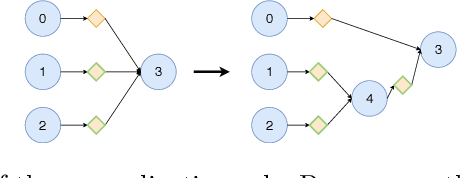

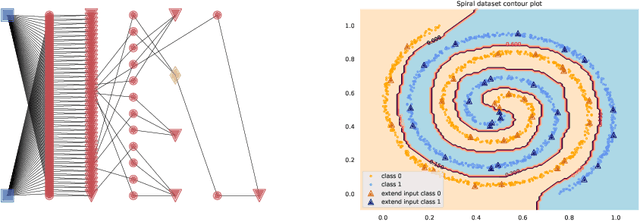

Lifelong Learning Starting From Zero

Jun 24, 2019

Abstract: We present a deep neural-network model for lifelong learning inspired by several forms of neuroplasticity. The neural network develops continuously in response to signals from the environment. In the beginning, the network is a blank slate with no nodes at all. It develops according to four rules: (i) expansion, which adds new nodes to memorize new input combinations; (ii) generalization, which adds new nodes that generalize from existing ones; (iii) forgetting, which removes nodes that are of relatively little use; and (iv) backpropagation, which fine-tunes the network parameters. We analyze the model from the perspective of accuracy, energy efficiency, and versatility and compare it to other network models, finding better performance in several cases.
