Morteza Haghir Chehreghani

Autonomous Drug Design with Multi-armed Bandits

Jul 04, 2022

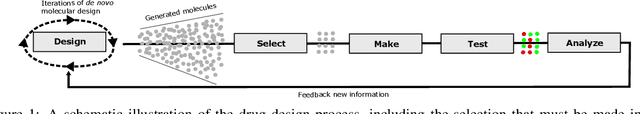

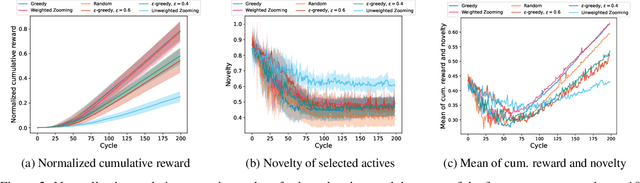

Abstract: Recent developments in artificial intelligence and automation could enable a new drug design paradigm: autonomous drug design. Under this paradigm, generative models suggest thousands of molecules with specific properties. However, since only a limited number of molecules can be synthesized and tested, an obvious challenge is how to select them efficiently. We formulate this task as a contextual stochastic multi-armed bandit problem with multiple plays and volatile arms. To solve it, we extend previous work on multi-armed bandits to reflect this setting, and compare our solution with random sampling, greedy selection and decaying-epsilon-greedy selection. To investigate how the different selection strategies affect the cumulative reward and the diversity of the selections, we simulate the drug design process. According to the simulation results, our approach has the potential to better explore and exploit the chemical space for autonomous drug design.
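To make the baseline named in the abstract concrete, here is a minimal sketch of decaying-epsilon-greedy selection with multiple plays. It is an illustration only, not the paper's method: the function name, the decay schedule `eps0 / (1 + decay * round_idx)`, and the dictionary of reward estimates are all assumptions. Volatile arms are modeled simply by passing in whichever molecules are available in the current round.

```python
import random


def decaying_epsilon_greedy_select(estimates, k, round_idx,
                                   eps0=1.0, decay=0.1, rng=None):
    """Pick k distinct arms from the currently available ones.

    estimates: dict mapping arm id -> estimated reward (hypothetical input).
    Each of the k slots explores with probability eps, otherwise it takes
    the best remaining arm. The decay schedule is an assumed example.
    """
    rng = rng or random.Random()
    eps = eps0 / (1.0 + decay * round_idx)  # exploration rate shrinks over rounds
    remaining = dict(estimates)
    chosen = []
    for _ in range(min(k, len(remaining))):
        if rng.random() < eps:
            arm = rng.choice(sorted(remaining))       # explore: uniform over remaining arms
        else:
            arm = max(remaining, key=remaining.get)   # exploit: best remaining estimate
        chosen.append(arm)
        del remaining[arm]                            # multiple plays: no arm is picked twice
    return chosen
```

With `eps0=0.0` the function reduces to greedy top-k selection, which is the other baseline mentioned above.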

A Contextual Combinatorial Semi-Bandit Approach to Network Bottleneck Identification

Jun 16, 2022

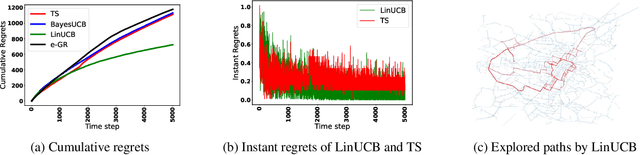

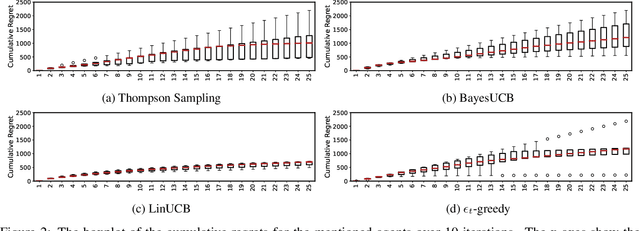

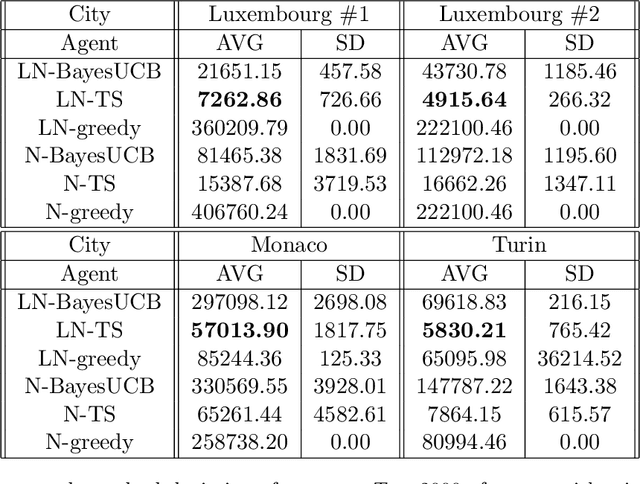

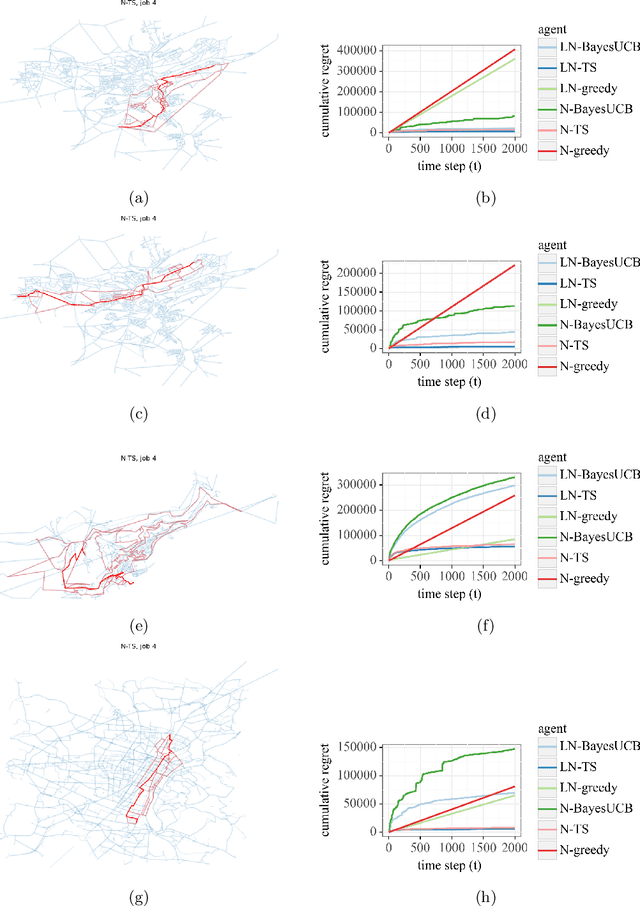

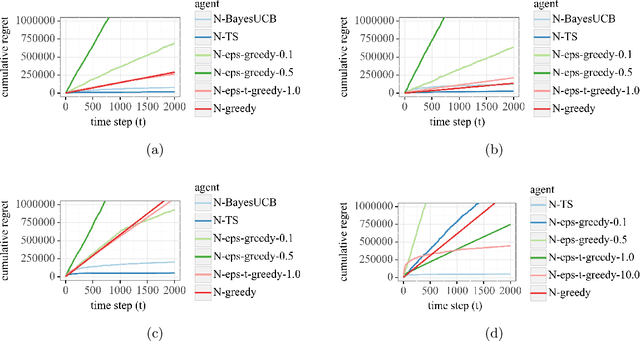

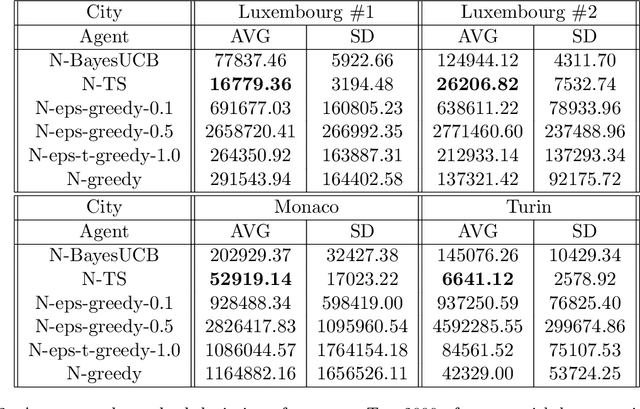

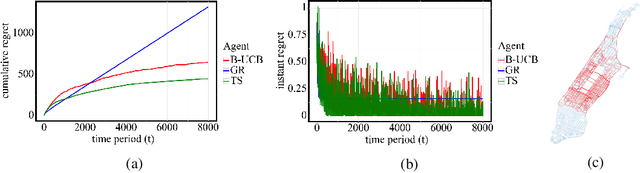

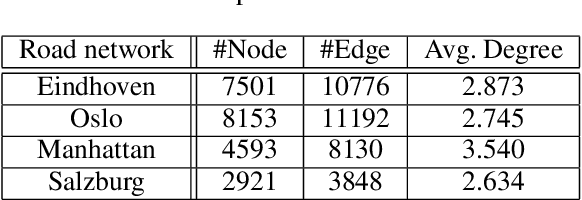

Abstract: Bottleneck identification is a challenging task in network analysis, especially when the network is not fully specified. To address this task, we develop a unified online learning framework based on combinatorial semi-bandits that performs bottleneck identification alongside learning the specifications of the underlying network. Within this framework, we adapt and investigate several combinatorial semi-bandit methods such as epsilon-greedy, LinUCB, BayesUCB, and Thompson Sampling. Our framework can employ contextual information in the form of contextual bandits. We evaluate our framework on the real-world application of road networks and demonstrate its effectiveness in different settings.

Passive and Active Learning of Driver Behavior from Electric Vehicles

Mar 04, 2022

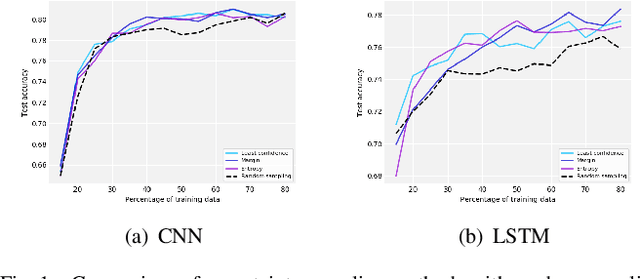

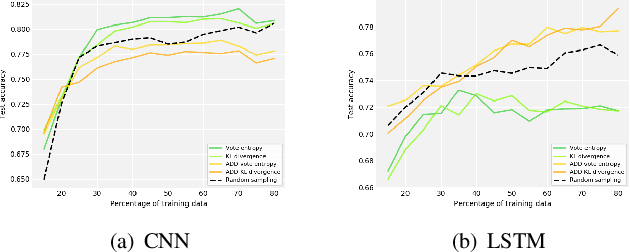

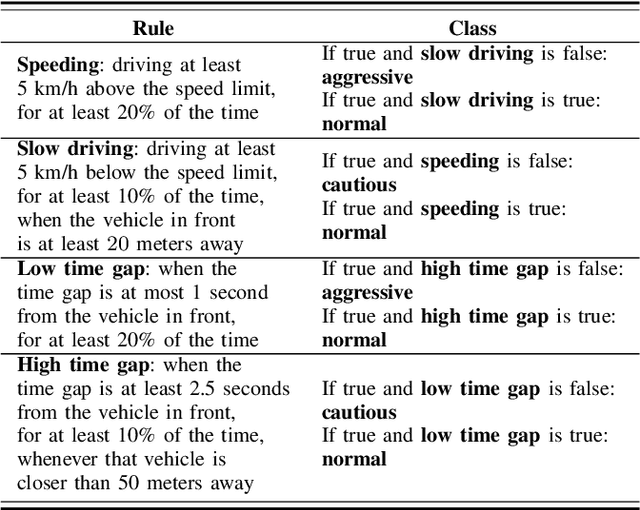

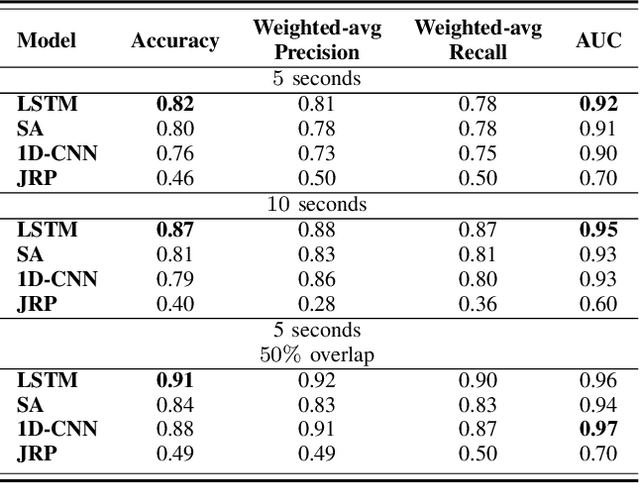

Abstract: Modeling driver behavior provides several advantages in the automotive industry, including prediction of electric vehicle energy consumption. Studies have shown that aggressive driving can consume up to 30% more energy than moderate driving in certain driving scenarios. Machine learning methods are widely used for driver behavior classification, but they face challenges such as sequence modeling on long time windows and a lack of labeled data due to expensive annotation. To address the first challenge, passive learning of driver behavior, we investigate non-recurrent architectures such as self-attention models and convolutional neural networks with joint recurrence plots (JRP), and compare them with recurrent models. We find that self-attention models yield good performance, while JRP does not exhibit any significant improvement. However, with the window lengths of 5 and 10 seconds used in our study, none of the non-recurrent models outperform the recurrent models. To address the second challenge, we investigate several active learning methods with different informativeness measures. We evaluate uncertainty sampling, as well as more advanced methods, such as query by committee and active deep dropout. Our experiments demonstrate that some active sampling techniques can outperform random sampling, and therefore decrease the effort needed for annotation.
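For readers unfamiliar with joint recurrence plots, the sketch below shows the standard construction: a recurrence plot marks pairs of time steps whose values are within a threshold of each other, and the joint plot keeps only the pairs that recur in every signal. The signal names, thresholds, and function names are assumptions for illustration; the abstract does not say how the authors computed their JRPs.

```python
def recurrence_plot(series, eps):
    """Binary matrix R with R[i][j] = 1 when |x_i - x_j| <= eps."""
    n = len(series)
    return [[1 if abs(series[i] - series[j]) <= eps else 0 for j in range(n)]
            for i in range(n)]


def joint_recurrence_plot(signals, thresholds):
    """Element-wise product of the recurrence plots of several signals.

    signals: list of equally long 1-D sequences (e.g. hypothetical speed
    and acceleration windows); thresholds: one eps per signal.
    """
    plots = [recurrence_plot(s, e) for s, e in zip(signals, thresholds)]
    n = len(plots[0])
    return [[int(all(p[i][j] for p in plots)) for j in range(n)]
            for i in range(n)]


# Illustrative values only: two short windows of made-up sensor readings.
speed = [0.0, 1.0, 0.0]
accel = [0.0, 0.0, 5.0]
jrp = joint_recurrence_plot([speed, accel], [0.5, 0.5])
```

The resulting matrix can be treated as a single-channel image and passed to a convolutional network, which is the usual reason for building it.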

Deep Q-learning: a robust control approach

Jan 21, 2022

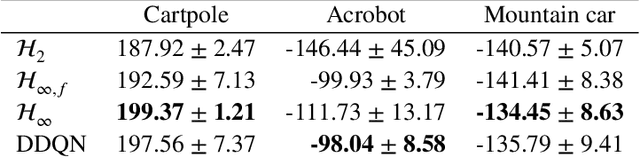

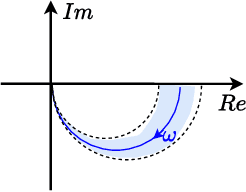

Abstract: In this paper, we place deep Q-learning into a control-oriented perspective and study its learning dynamics with well-established techniques from robust control. We formulate an uncertain linear time-invariant model by means of the neural tangent kernel to describe learning. We show the instability of learning and analyze the agent's behavior in the frequency domain. Then, we ensure convergence via robust controllers acting as dynamical rewards in the loss function. We synthesize three controllers: state-feedback gain scheduling $\mathcal{H}_2$, dynamic $\mathcal{H}_\infty$, and constant gain $\mathcal{H}_\infty$ controllers. Setting up the learning agent with a control-oriented tuning methodology is more transparent and has well-established literature compared to the heuristics in reinforcement learning. In addition, our approach uses neither a target network nor a randomized replay memory. The role of the target network is taken over by the control input, which also exploits the temporal dependency of samples (as opposed to a randomized memory buffer). Numerical simulations in different OpenAI Gym environments suggest that the $\mathcal{H}_\infty$ controlled learning performs slightly better than Double deep Q-learning.

Online Learning of Energy Consumption for Navigation of Electric Vehicles

Nov 03, 2021

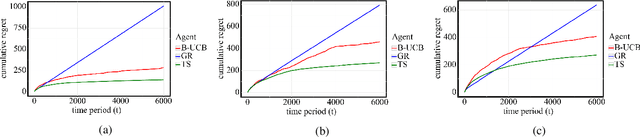

Abstract: Energy-efficient navigation constitutes an important challenge in electric vehicles, due to their limited battery capacity. We employ a Bayesian approach to model the energy consumption at road segments for efficient navigation. In order to learn the model parameters, we develop an online learning framework and investigate several exploration strategies such as Thompson Sampling and Upper Confidence Bound. We then extend our online learning framework to the multi-agent setting, where multiple vehicles adaptively navigate and learn the parameters of the energy model. We analyze Thompson Sampling and establish rigorous regret bounds on its performance in the single-agent and multi-agent settings, through an analysis of the algorithm under batched feedback. Finally, we demonstrate the performance of our methods via experiments on several real-world city road networks.

Shift of Pairwise Similarities for Data Clustering

Oct 25, 2021

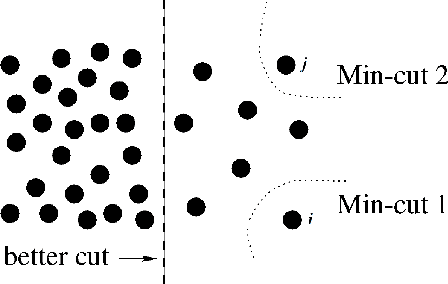

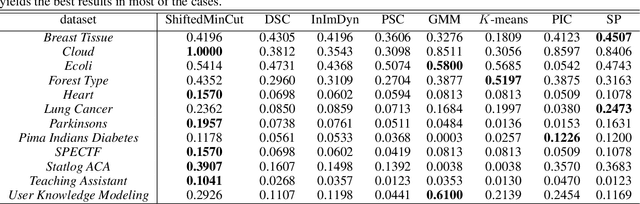

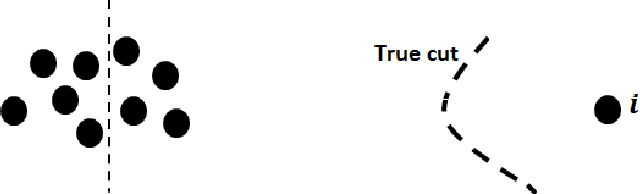

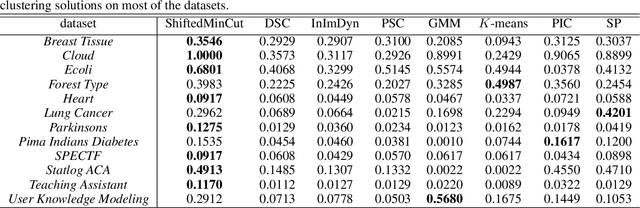

Abstract: Several clustering methods (e.g., Normalized Cut and Ratio Cut) divide the Min Cut cost function by a cluster-dependent factor (e.g., the size or the degree of the clusters), in order to yield a more balanced partitioning. We instead investigate adding such regularizations to the original cost function. We first consider the case where the regularization term is the sum of the squared sizes of the clusters, and then generalize it to adaptive regularization of the pairwise similarities. This leads to (adaptively) shifting the pairwise similarities, which might make some of them negative. We study the connection of this method to Correlation Clustering and propose an efficient local search optimization algorithm with a fast theoretical convergence rate to solve the new clustering problem. We then investigate the shift of pairwise similarities on some common clustering methods, and finally, we demonstrate the superior performance of the method by extensive experiments on different datasets.
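The link between the squared-size regularizer and a shift of the similarities can be checked numerically. The sketch below assumes one particular normalization (sums over ordered pairs, regularization weight `lam`), which may differ from the paper's by constant factors. Under that assumption, the regularized Min Cut cost and the plain Min Cut cost on similarities shifted by `lam` differ only by the constant `lam * n**2`, so both rank every partition identically.

```python
from itertools import product


def cut_cost(sim, labels, shift=0.0):
    """Sum of (shifted) similarities over ordered pairs in different clusters."""
    n = len(labels)
    return sum(sim[i][j] - shift
               for i in range(n) for j in range(n)
               if labels[i] != labels[j])


def regularized_cost(sim, labels, lam):
    """Min Cut cost plus lam times the sum of squared cluster sizes."""
    sizes = {}
    for lab in labels:
        sizes[lab] = sizes.get(lab, 0) + 1
    return cut_cost(sim, labels) + lam * sum(s * s for s in sizes.values())


# Made-up symmetric similarity matrix for four objects.
sim = [[0, 3, 1, 0],
       [3, 0, 2, 1],
       [1, 2, 0, 4],
       [0, 1, 4, 0]]
lam, n = 1.5, 4

# For every 2-cluster labeling, the two costs differ by the same constant.
gaps = {round(regularized_cost(sim, lab, lam) - cut_cost(sim, lab, shift=lam), 9)
        for lab in product([0, 1], repeat=n)}
```

Here `gaps` contains a single value, `lam * n**2`. With `lam` larger than some entries of `sim`, the shifted similarities become negative, which is the point at which the connection to Correlation Clustering mentioned in the abstract arises.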

Online Learning of Network Bottlenecks via Minimax Paths

Sep 17, 2021

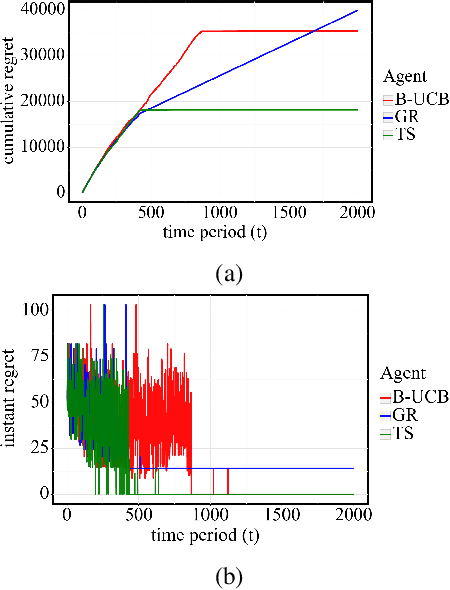

Abstract: In this paper, we study bottleneck identification in networks by extracting minimax paths. Many real-world networks have stochastic weights for which full knowledge is not available in advance. Therefore, we model this task as a combinatorial semi-bandit problem to which we apply a combinatorial version of Thompson Sampling and establish an upper bound on the corresponding Bayesian regret. Due to the computational intractability of the problem, we then devise an alternative problem formulation which approximates the original objective. Finally, we experimentally evaluate the performance of Thompson Sampling with the approximate formulation on real-world directed and undirected networks.
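The loop described in the abstract can be sketched as follows: sample edge weights from a posterior, find the minimax path under the sampled weights, observe the edges on that path, and update. This is a generic illustration under stated assumptions, not the paper's formulation: the Gaussian prior with known noise variance, the `observe` callback, and all function names are hypothetical, and the sketch does not reproduce the approximate objective the authors devise.

```python
import heapq
import random


def minimax_path(graph, source, target):
    """Return (bottleneck, path) minimizing the largest edge weight.

    graph maps node -> {neighbor: weight}. This is a Dijkstra variant in
    which the cost of a path is its maximum edge weight.
    """
    best = {source: float("-inf")}
    parent = {source: None}
    heap = [(float("-inf"), source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry
        if node == target:
            break
        for nxt, w in graph.get(node, {}).items():
            new_cost = max(cost, w)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                parent[nxt] = node
                heapq.heappush(heap, (new_cost, nxt))
    if target not in best:
        return None
    path, node = [], target
    while node is not None:
        path.append(node)
        node = parent[node]
    return best[target], path[::-1]


def thompson_step(posterior, source, target, observe, noise_var=1.0, rng=random):
    """One round of Thompson Sampling with semi-bandit feedback.

    posterior maps (u, v) -> [mean, variance] of an assumed Gaussian belief
    about each edge weight; observe(u, v) returns a noisy weight.
    """
    sampled = {}
    for (u, v), (mean, var) in posterior.items():
        sampled.setdefault(u, {})[v] = rng.gauss(mean, var ** 0.5)
    result = minimax_path(sampled, source, target)
    if result is None:
        return None
    _, path = result
    for u, v in zip(path, path[1:]):
        y = observe(u, v)  # feedback only for edges on the played path
        mean, var = posterior[(u, v)]
        new_var = 1.0 / (1.0 / var + 1.0 / noise_var)  # conjugate Gaussian update
        posterior[(u, v)] = [new_var * (mean / var + y / noise_var), new_var]
    return path
```

Only the edges on the chosen path are updated, which is what distinguishes semi-bandit feedback from full-information feedback.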

Analysis of Driving Scenario Trajectories with Active Learning

Aug 06, 2021

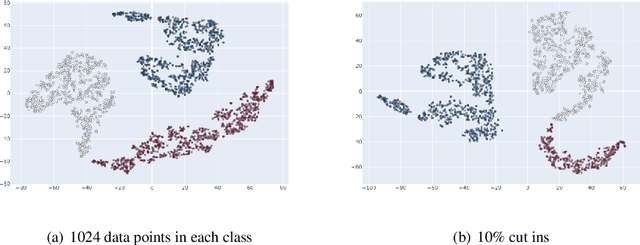

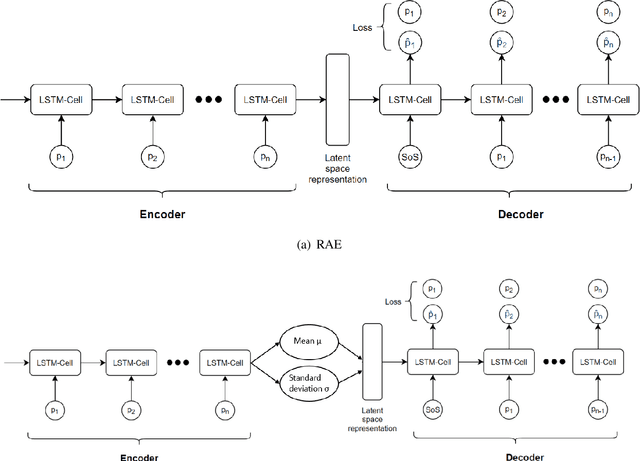

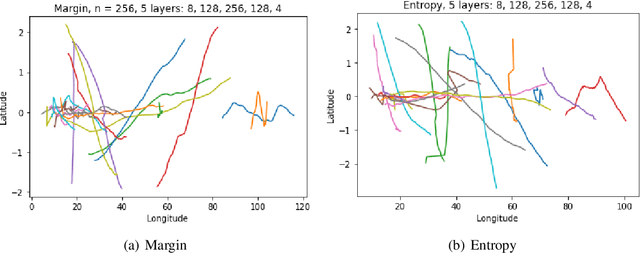

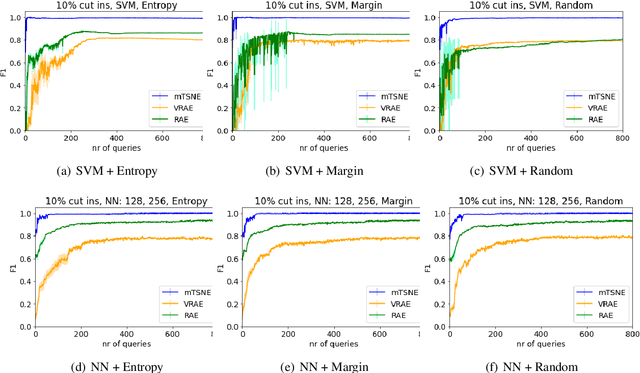

Abstract: Annotating driving scenario trajectories based only on explicit rules (i.e., knowledge-based methods) can be subject to errors, such as false positive/negative classification of scenarios that lie on the border of two scenario classes, missing unknown scenario classes, and anomalies. On the other hand, having annotators verify the labels is not cost-efficient. Active learning (AL) could improve the annotation procedure by including an annotator/expert in an efficient way. In this study, we develop an active learning framework to annotate driving trajectory time-series data. As a first step, we compute an embedding of the time-series trajectories into a latent space in order to extract the temporal nature. For this purpose, we study three different latent space representations: multivariate Time Series t-Distributed Stochastic Neighbor Embedding (mTSNE), Recurrent Auto-Encoder (RAE) and Variational Recurrent Auto-Encoder (VRAE). We then apply different active learning paradigms with different classification models to the embedded data. In particular, we study two classifiers, Neural Network (NN) and Support Vector Machine (SVM), with three active learning query strategies (entropy, margin and random). Finally, we explore the possibilities of the framework to discover unknown classes and demonstrate how it can be used to identify out-of-class trajectories.
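The entropy and margin query strategies named above can be sketched in a few lines. The code assumes a classifier has already produced class probabilities for each unlabeled trajectory; the function names and the example probabilities are illustrative and do not come from the paper.

```python
import math


def entropy_score(probs):
    """Shannon entropy of a predicted class distribution (higher = more uncertain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def margin_score(probs):
    """Gap between the two most likely classes (smaller = more uncertain)."""
    top = sorted(probs, reverse=True)
    return top[0] - top[1]


def select_queries(prob_rows, n_queries, strategy="entropy"):
    """Return indices of the samples to send to the annotator."""
    if strategy == "entropy":
        key = lambda i: -entropy_score(prob_rows[i])  # largest entropy first
    elif strategy == "margin":
        key = lambda i: margin_score(prob_rows[i])    # smallest margin first
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return sorted(range(len(prob_rows)), key=key)[:n_queries]


# Made-up class probabilities for three unlabeled samples.
rows = [[0.90, 0.05, 0.05],
        [0.40, 0.35, 0.25],
        [0.60, 0.30, 0.10]]
```

For these rows both strategies query sample 1 first, since its prediction is closest to uniform. The random strategy mentioned in the abstract would instead draw indices uniformly and serves as the baseline.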

Constrained Policy Gradient Method for Safe and Fast Reinforcement Learning: a Neural Tangent Kernel Based Approach

Jul 19, 2021

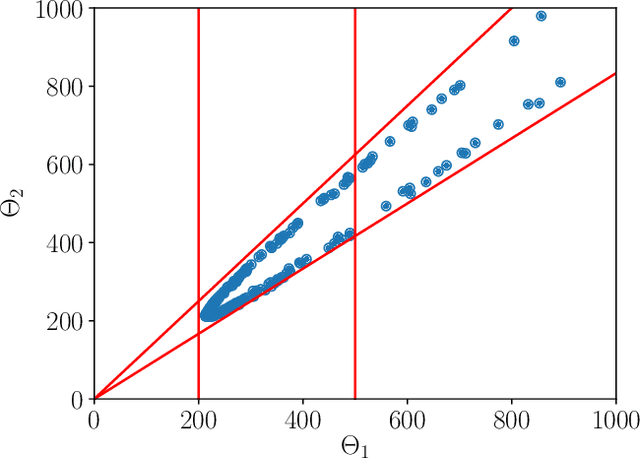

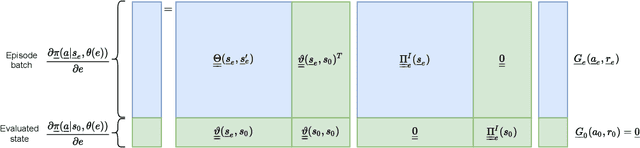

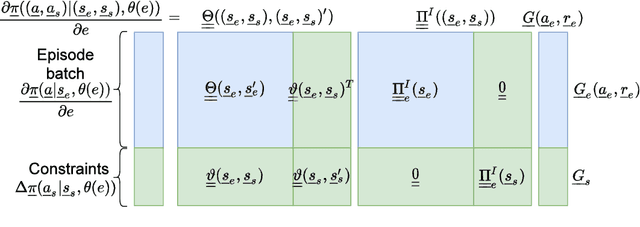

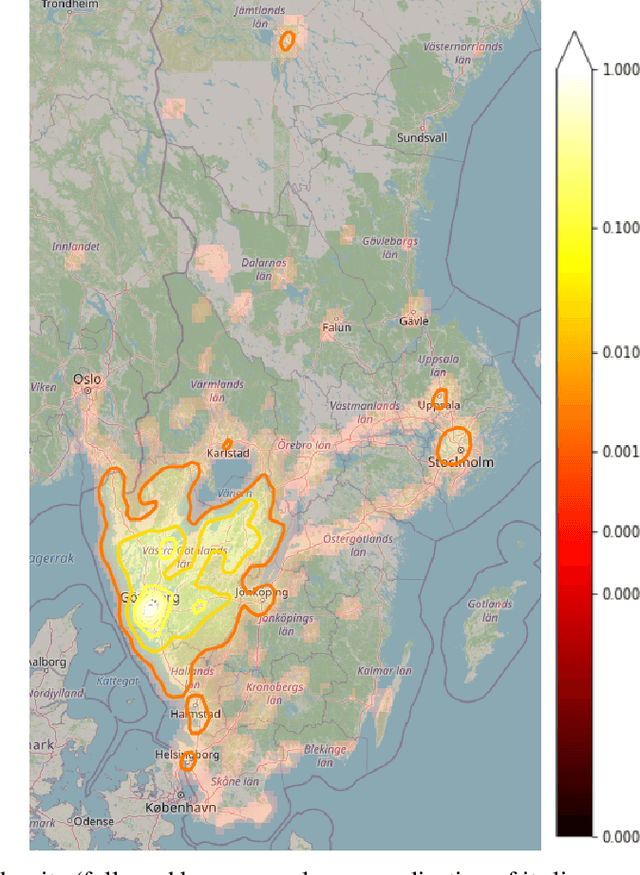

Abstract: This paper presents a constrained policy gradient algorithm. We introduce constraints for safe learning with the following steps. First, learning is slowed down (lazy learning) so that the episodic policy change can be computed with the help of the policy gradient theorem and the neural tangent kernel. This also enables the evaluation of the policy at arbitrary states. In the same spirit, learning can be guided, ensuring safety by augmenting episode batches with states where the desired action probabilities are prescribed. Finally, the exogenous discounted sum of future rewards (returns) can be computed at these specific state-action pairs such that the policy network satisfies the constraints. Computing the returns is based on solving a system of linear equations (equality constraints) or a constrained quadratic program (inequality constraints). Simulation results suggest that adding constraints (external information) can reasonably improve learning in terms of speed and safety, provided the constraints are appropriately selected. The efficiency of the constrained learning was demonstrated with a shallow and wide ReLU network in the Cartpole and Lunar Lander OpenAI Gym environments. The main novelty of the paper is giving a practical use of the neural tangent kernel in reinforcement learning.

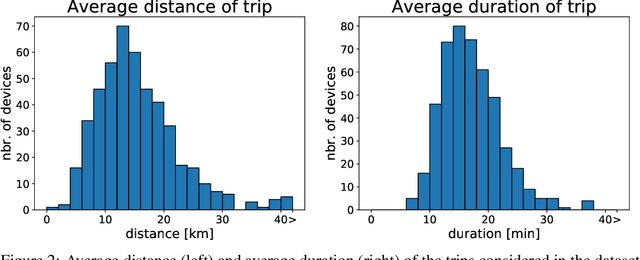

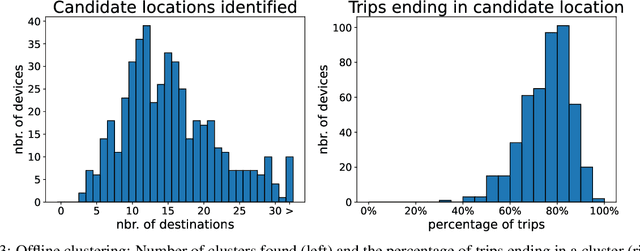

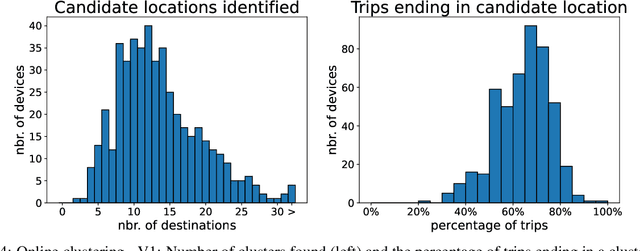

A Unified Framework for Online Trip Destination Prediction

Jan 12, 2021

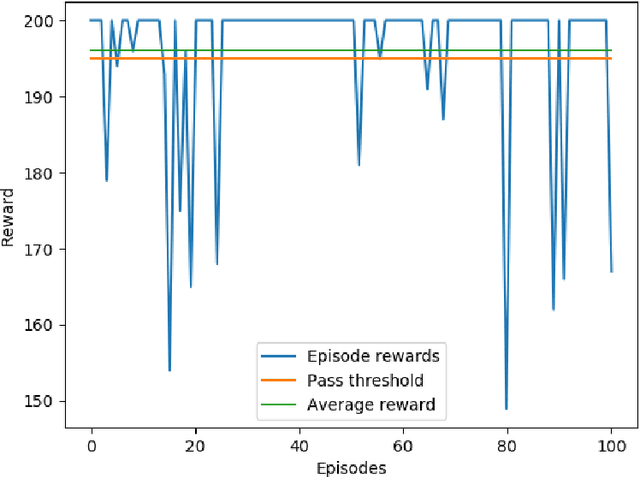

Abstract: Trip destination prediction is an area of increasing importance in many applications such as trip planning, autonomous driving and electric vehicles. Even though this problem could be naturally addressed in an online learning paradigm where data is arriving in a sequential fashion, the majority of research has rather considered the offline setting. In this paper, we present a unified framework for trip destination prediction in an online setting, which is suitable for both online training and online prediction. For this purpose, we develop two clustering algorithms and integrate them within two online prediction models for this problem. We investigate the different configurations of clustering algorithms and prediction models on a real-world dataset. By using traditional clustering metrics and accuracy, we demonstrate that both the clustering and the entire framework yield consistent results compared to the offline setting. Finally, we propose a novel regret metric for evaluating the entire online framework in comparison to its offline counterpart. This metric makes it possible to relate the source of erroneous predictions to either the clustering or the prediction model. Using this metric, we show that the proposed methods converge to a probability distribution resembling the true underlying distribution and enjoy a lower regret than all of the baselines.
