Mohammad Arif Ul Alam

Enhancing Wearable based Real-Time Glucose Monitoring via Phasic Image Representation Learning based Deep Learning

Jun 12, 2024

Abstract: In the U.S., over a third of adults are pre-diabetic, with 80% unaware of their status. This underlines the need for better glucose monitoring to prevent type 2 diabetes and related heart diseases. Existing wearable glucose monitors are limited by a lack of models that can be trained effectively on small datasets, as collecting extensive glucose data is often costly and impractical. Our study introduces a novel machine learning method using modified recurrence plots in the frequency domain to improve glucose level prediction accuracy from wearable device data, even with limited datasets. This technique combines advanced signal processing with machine learning to extract more meaningful features. We tested our method against existing models using historical data, showing that our approach surpasses the current 87% accuracy benchmark in predicting real-time interstitial glucose levels.

Crowdotic: A Privacy-Preserving Hospital Waiting Room Crowd Density Estimation with Non-speech Audio

Sep 20, 2023

Abstract: Privacy-preserving crowd density analysis finds application across a wide range of scenarios, substantially enhancing smart building operation and management while upholding privacy expectations in various spaces. We propose a non-speech audio-based approach for crowd analytics, leveraging a transformer-based model. Our results demonstrate that non-speech audio alone can be used to conduct such analysis with remarkable accuracy. To the best of our knowledge, this is the first time that non-speech audio signals have been proposed for predicting occupancy. To accomplish this, we deployed our sensor-based platform in the waiting room of a large hospital with IRB approval over a period of several months to capture non-speech audio and thermal images for the training and evaluation of our models. The proposed non-speech-based approach outperformed the thermal camera-based model and all other baselines. In addition to demonstrating superior performance without utilizing speech audio, we conduct further analysis using differential privacy techniques to provide additional privacy guarantees. Overall, our work demonstrates the viability of employing non-speech audio data for accurate occupancy estimation, while also ensuring the exclusion of speech-related content and providing robust privacy protections through differential privacy guarantees.
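The abstract does not say which differential privacy mechanism was used. As a generic illustration only, the sketch below applies the textbook Laplace mechanism to a single occupancy count. The function names, the sensitivity of 1, and the epsilon value are assumptions made for this example and are not taken from the paper.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    `sensitivity` is how much one person can change the count; 1 is an
    assumption that fits a simple head count.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
noisy = private_count(12, epsilon=0.5, rng=rng)
print(f"noisy occupancy estimate: {noisy:.2f}")
```

A smaller epsilon gives a stronger privacy guarantee but a noisier released count.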

Internet of Things Fault Detection and Classification via Multitask Learning

Jul 03, 2023

Abstract: This paper presents a comprehensive investigation into developing a fault detection and classification system for real-world IIoT applications. The study addresses challenges in data collection, annotation, algorithm development, and deployment. Using a real-world IIoT system, three phases of data collection simulate 11 predefined fault categories. We propose SMTCNN for fault detection and category classification in IIoT, evaluating its performance on real-world data. SMTCNN achieves superior specificity (3.5%) and shows significant improvements in precision, recall, and F1 measures compared to existing techniques.

Unbiased Pain Assessment through Wearables and EHR Data: Multi-attribute Fairness Loss-based CNN Approach

Jul 03, 2023

Abstract: The combination of diverse health data (IoT, EHR, and clinical surveys) and scalable, adaptable Artificial Intelligence (AI) has enabled the discovery of physical, behavioral, and psycho-social indicators of pain status. Despite the hype and promise to fundamentally alter the healthcare system with technological advancements, much AI adoption in clinical pain evaluation has been hampered by the heterogeneity of the problem itself and other challenges, such as personalization and fairness. Studies have revealed that many AI (i.e., machine learning or deep learning) models display biases and discriminate against specific population segments (such as those based on gender or ethnicity), which breeds skepticism among medical professionals about AI adaptability. In this paper, we propose a Multi-attribute Fairness Loss (MAFL) based CNN model that aims to account for any sensitive attributes included in the data and fairly predict patients' pain status while attempting to minimize the discrepancies between privileged and unprivileged groups. To determine whether the trade-off between accuracy and fairness can be satisfied, we compare the proposed model with well-known existing mitigation procedures, and the results show that the implemented model performs favorably against state-of-the-art methods. We analyze the proposed fair pain assessment system using NIH All-Of-US data, considering a cohort of 868 distinct individuals with wearables and EHR data gathered over 1500 days.
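The abstract does not give the exact form of the MAFL objective. The sketch below shows only the general idea of a multi-attribute fairness penalty: a task loss plus a weighted sum of gaps in mean predicted score between privileged (1) and unprivileged (0) groups, one gap per sensitive attribute. The function names, the gap definition, and the toy numbers are assumptions made for illustration.

```python
def group_gap(scores, groups):
    """Absolute difference in mean score between group 1 and group 0."""
    priv = [s for s, g in zip(scores, groups) if g == 1]
    unpriv = [s for s, g in zip(scores, groups) if g == 0]
    return abs(sum(priv) / len(priv) - sum(unpriv) / len(unpriv))


def fairness_penalized_loss(task_loss, scores, attributes, weight):
    """Task loss plus a weighted sum of per-attribute group gaps.

    `attributes` maps each sensitive attribute name to a list of 0/1
    group labels aligned with `scores`.
    """
    penalty = sum(group_gap(scores, groups) for groups in attributes.values())
    return task_loss + weight * penalty


# Toy predicted pain scores for four hypothetical patients.
scores = [0.9, 0.8, 0.3, 0.4]
attributes = {
    "gender": [1, 1, 0, 0],      # large gap between the two groups
    "ethnicity": [1, 0, 1, 0],   # nearly identical group means
}
total = fairness_penalized_loss(0.4, scores, attributes, weight=0.5)
print(f"penalized loss: {total:.3f}")
```

In a real training loop the gap would be computed on differentiable model outputs per mini-batch so that the penalty can be minimized together with the task loss.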

Augmenting Deep Learning Adaptation for Wearable Sensor Data through Combined Temporal-Frequency Image Encoding

Jul 03, 2023

Abstract: Deep learning advancements have revolutionized scalable classification in many domains including computer vision. However, when it comes to wearable-based classification and domain adaptation, existing computer vision-based deep learning architectures and pretrained models trained on thousands of labeled images for months fall short. This is primarily because wearable sensor data necessitates sensor-specific preprocessing, architectural modification, and extensive data collection. To overcome these challenges, researchers have proposed encoding wearable temporal sensor data as images using recurrence plots. In this paper, we present a novel modified recurrence plot-based image representation that seamlessly integrates both temporal and frequency domain information. Our approach incorporates an efficient Fourier transform-based frequency domain angular difference estimation scheme in conjunction with the existing temporal recurrence plot image. Furthermore, we employ mixup image augmentation to enhance the representation. We evaluate the proposed method using accelerometer-based activity recognition data and a pretrained ResNet model, and demonstrate its superior performance compared to existing approaches.
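As a rough illustration of two building blocks named in this abstract, the sketch below builds a standard temporal recurrence plot from a 1-D signal and blends two such images with mixup. It does not reproduce the paper's frequency-domain angular difference scheme, whose details are not given here. The threshold `eps`, the mixing weight `lam`, and the toy signals are assumptions.

```python
def recurrence_plot(signal, eps):
    """Binary recurrence matrix: R[i][j] = 1.0 when |x_i - x_j| <= eps."""
    n = len(signal)
    return [
        [1.0 if abs(signal[i] - signal[j]) <= eps else 0.0 for j in range(n)]
        for i in range(n)
    ]


def mixup(img_a, img_b, lam):
    """Pixel-wise convex combination lam * a + (1 - lam) * b of two images."""
    return [
        [lam * a + (1.0 - lam) * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


# Toy accelerometer-like traces (made-up values).
walk = [0.0, 0.1, 0.9, 1.0, 0.05]
sit = [0.5, 0.5, 0.5, 0.5, 0.5]

rp_walk = recurrence_plot(walk, eps=0.2)
rp_sit = recurrence_plot(sit, eps=0.2)
mixed = mixup(rp_walk, rp_sit, lam=0.7)

for row in mixed:
    print(" ".join(f"{v:.1f}" for v in row))
```

In mixup training the class labels are blended with the same weight as the images, and the weight is usually sampled from a Beta distribution rather than fixed.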

PhysioGait: Context-Aware Physiological Context Modeling for Person Re-identification Attack on Wearable Sensing

Oct 30, 2022

Abstract: Person re-identification is a critical privacy breach in publicly shared healthcare data. We investigate the possibility of a new type of privacy threat on publicly shared, privacy-insensitive, large-scale wearable sensing data. In this paper, we investigate user-specific biometric signatures in terms of two contextual biometric traits: physiological (photoplethysmography and electrodermal activity) and physical (accelerometer) contexts. In this regard, we propose PhysioGait, a context-aware physiological signal model that consists of a Multi-Modal Siamese Convolutional Neural Network (mmSNN) which learns the spatial and temporal information individually and performs sensor fusion in a Siamese cost with the objective of predicting a person's identity. We evaluated the PhysioGait attack model using 4 real-time collected datasets (three collected under IRB #HP-00064387 and one publicly available) and two combined datasets, achieving 89% - 93% accuracy in re-identifying persons.

* Accepted in IEEE MSN 2022. arXiv admin note: substantial text overlap with arXiv:2106.11900

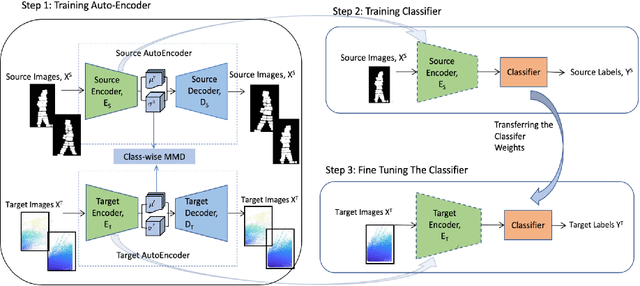

Semi-Supervised Domain Adaptation with Auto-Encoder via Simultaneous Learning

Oct 18, 2022

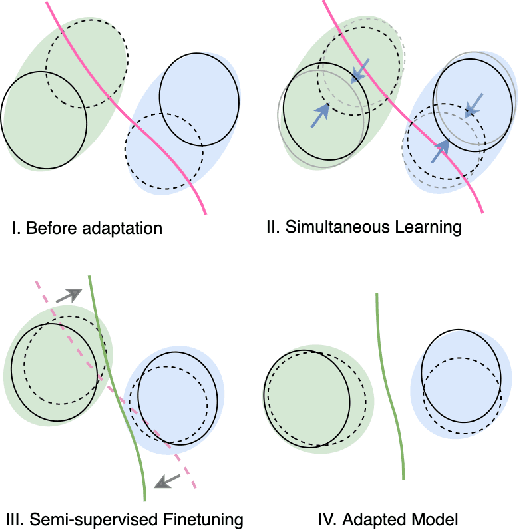

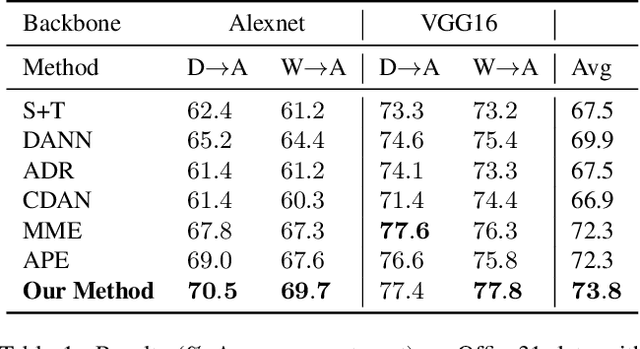

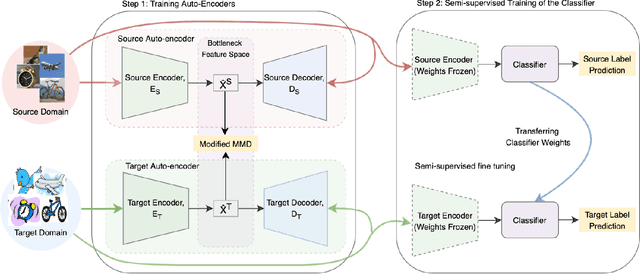

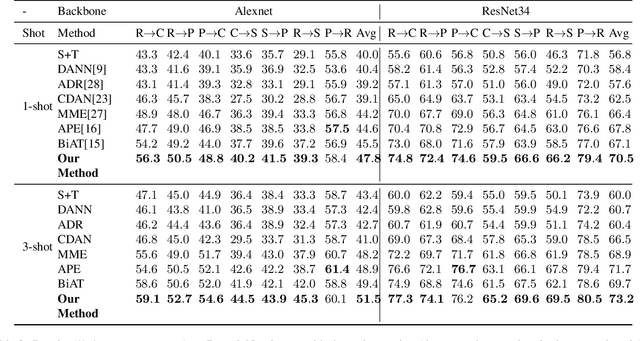

Abstract: We present a new semi-supervised domain adaptation framework that combines a novel auto-encoder-based domain adaptation model with a simultaneous learning scheme, providing stable improvements over state-of-the-art domain adaptation models. Our framework holds a strong distribution-matching property by training both source and target auto-encoders using a novel simultaneous learning scheme on a single graph with an optimally modified MMD loss objective function. Additionally, we design a semi-supervised classification approach by transferring the aligned domain-invariant feature spaces from the source domain to the target domain. We evaluate on three datasets and show that our framework can effectively solve both the fragile convergence problem (adversarial methods) and the weak distribution matching problem between source and target feature spaces (discrepancy methods) with a high 'speed' of adaptation, requiring a very low number of iterations.
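For readers unfamiliar with MMD, the sketch below computes the standard (biased) squared Maximum Mean Discrepancy between two small feature sets with an RBF kernel. It is the textbook quantity, not the "optimally modified" objective the abstract refers to. The kernel bandwidth `gamma` and the toy feature vectors are assumptions.

```python
import math


def rbf(x, y, gamma):
    """RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def mean_kernel(xs, ys, gamma):
    """Average kernel value over all pairs drawn from xs and ys."""
    return sum(rbf(x, y, gamma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd2(source, target, gamma=1.0):
    """Biased estimate of squared MMD between two samples."""
    return (
        mean_kernel(source, source, gamma)
        + mean_kernel(target, target, gamma)
        - 2.0 * mean_kernel(source, target, gamma)
    )


# Toy encoded features (made-up values).
source = [[0.0, 0.0], [0.1, 0.2]]
near_target = [[0.05, 0.1], [0.0, 0.1]]
far_target = [[3.0, 3.0], [3.1, 2.9]]

print(f"MMD^2 to near target: {mmd2(source, near_target):.4f}")
print(f"MMD^2 to far target:  {mmd2(source, far_target):.4f}")
```

Used as a loss on encoder outputs, a smaller value indicates that the source and target feature distributions are better aligned.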

Enabling Heterogeneous Domain Adaptation in Multi-inhabitants Smart Home Activity Learning

Oct 18, 2022

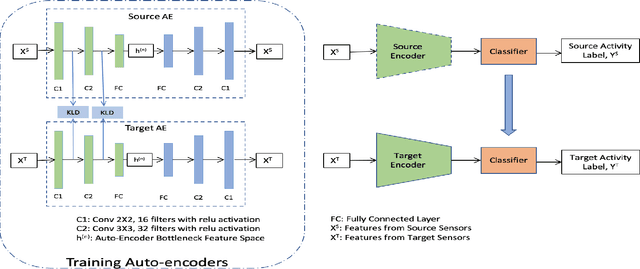

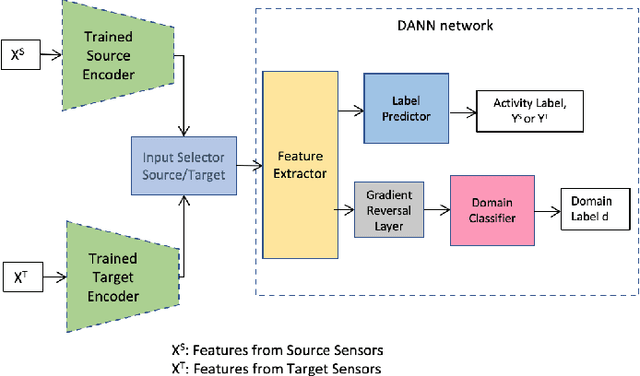

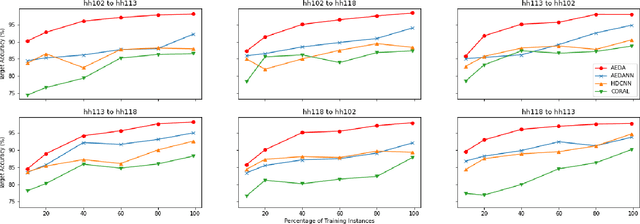

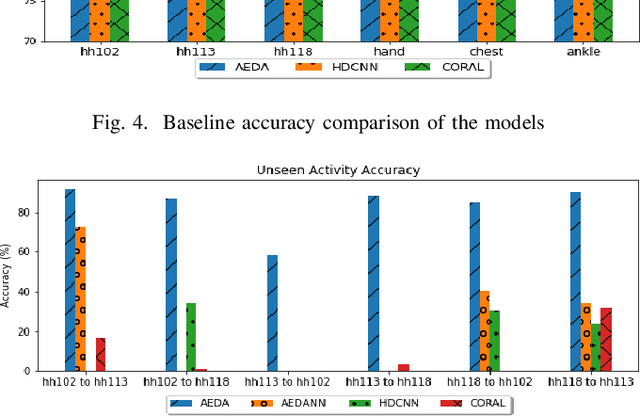

Abstract: Domain adaptation for sensor-based activity learning is of utmost importance in remote health monitoring research. However, many domain adaptation algorithms fail to adapt in the presence of target domain heterogeneity (which is always present in reality), and the presence of multiple inhabitants dramatically hinders their generalizability, producing unsatisfactory results for semi-supervised and unseen activity learning tasks. We propose AEDA, a novel deep auto-encoder-based model that enables semi-supervised domain adaptation in the presence of target domain heterogeneity, and show how to incorporate it into any homogeneous deep domain adaptation architecture to add heterogeneity support for cross-domain activity learning. Experimental evaluation on 18 different heterogeneous and multi-inhabitant use cases across 8 different domains created from 2 publicly available human activity datasets (wearable and ambient smart homes) shows that AEDA outperforms existing domain adaptation techniques (maximum improvements of 12.8% and 8.9% for ambient smart home and wearables, respectively) for both seen and unseen activity learning in a heterogeneous setting.

College Student Retention Risk Analysis From Educational Database using Multi-Task Multi-Modal Neural Fusion

Sep 11, 2021

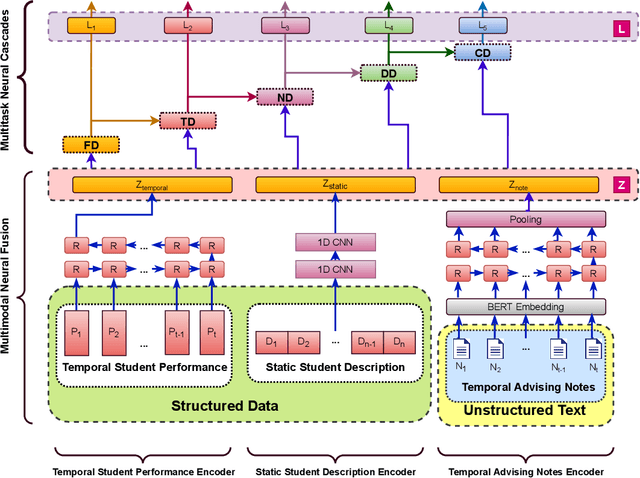

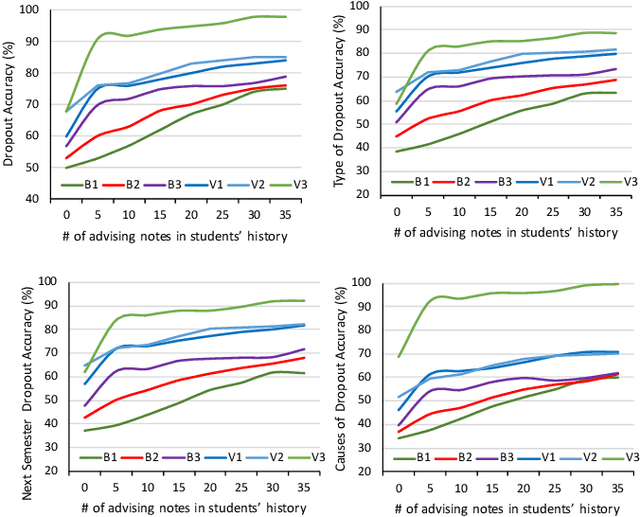

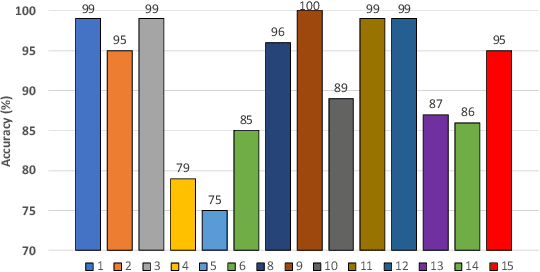

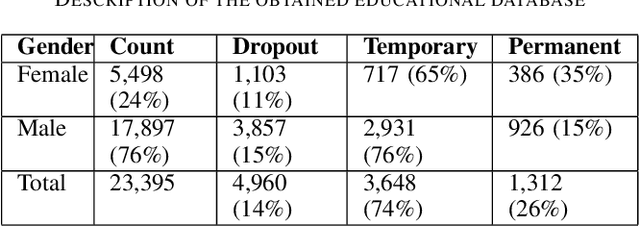

Abstract: We develop a Multimodal Spatiotemporal Neural Fusion network for Multi-Task Learning (MSNF-MTCL) to predict 5 important student retention risks: future dropout, next semester dropout, type of dropout, duration of dropout and cause of dropout. First, we develop a general-purpose multi-modal neural fusion network model, MSNF, for learning students' academic information representation by fusing spatial and temporal unstructured advising notes with spatiotemporal structured data. MSNF combines a Bidirectional Encoder Representations from Transformers (BERT)-based document embedding framework to represent each advising note, a Long Short-Term Memory (LSTM) network to model temporal advising note embeddings, an LSTM network to model students' temporal performance variables, and students' static demographics. The final fused representation from MSNF is fed to a Multi-Task Cascade Learning (MTCL) model to build MSNF-MTCL for predicting the 5 student retention risks. We evaluate MSNF-MTCL on a large educational database consisting of 36,445 college students over an 18-year period, where it provides promising performance compared with the nearest state-of-the-art models. Additionally, we test the fairness of the model given the existence of biases.

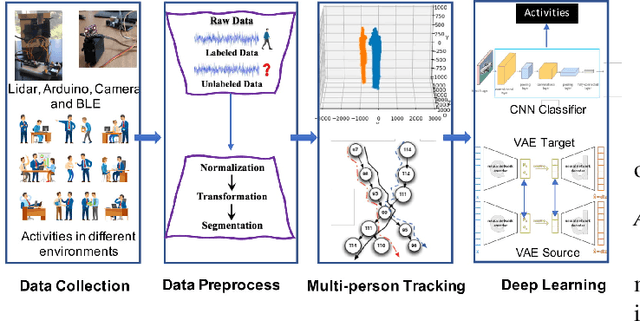

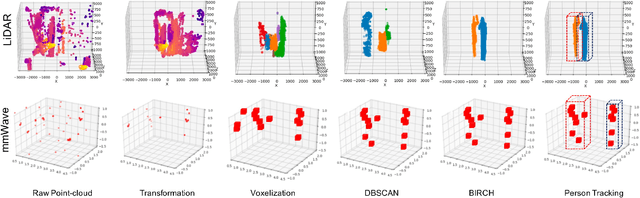

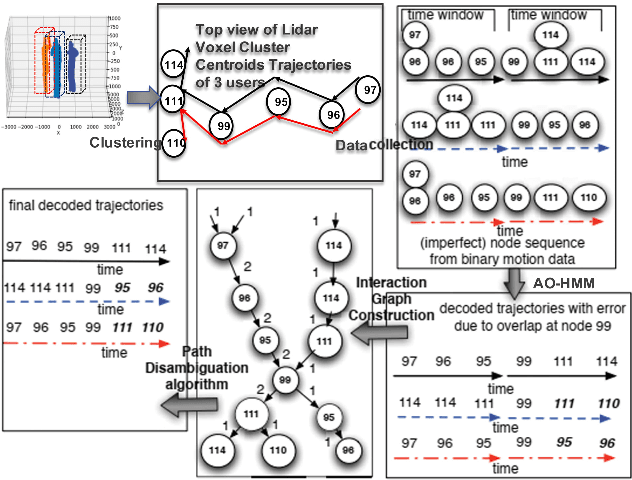

PALMAR: Towards Adaptive Multi-inhabitant Activity Recognition in Point-Cloud Technology

Jun 22, 2021

Abstract: With the advancement of deep neural networks and computer vision-based Human Activity Recognition (HAR), the use of Point-Cloud Data (PCD) technologies (LiDAR, mmWave) has attracted a lot of interest due to their privacy-preserving nature. Given the high promise of accurate PCD technologies, we develop PALMAR, a multiple-inhabitant activity recognition system that employs efficient signal processing and novel machine learning techniques to track individual persons, towards an adaptive multi-inhabitant tracking and HAR system. More specifically, we propose (i) a voxelized feature representation-based real-time PCD fine-tuning method, (ii) efficient clustering (DBSCAN and BIRCH), Adaptive Order Hidden Markov Model based multi-person tracking, and crossover ambiguity reduction techniques, and (iii) a novel adaptive deep learning-based domain adaptation technique to improve the accuracy of HAR in the presence of data scarcity and diversity (device, location and population diversity). We experimentally evaluate our framework and systems using (i) real-time PCD collected by three devices (3D LiDAR and 79 GHz mmWave) from 6 participants, (ii) one publicly available 3D LiDAR activity dataset (28 participants) and (iii) an embedded hardware prototype system, which provided promising HAR performance in the multi-inhabitant scenario (96%) with a 63% improvement in multi-person tracking over a state-of-the-art framework, without losing significant system performance on the edge computing device.
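To make "voxelized feature representation" concrete, the sketch below bins 3-D points into a regular grid and counts the points per occupied voxel. This is a generic voxelization, not PALMAR's actual feature pipeline. The voxel size and the sample points are assumptions.

```python
import math
from collections import Counter


def voxelize(points, voxel_size):
    """Map each (x, y, z) point to an integer voxel index and count points.

    Returns a Counter keyed by (ix, iy, iz); only occupied voxels appear.
    """
    counts = Counter()
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        counts[key] += 1
    return counts


# Toy point cloud in metres (made-up values).
cloud = [
    (0.1, 0.1, 0.1),
    (0.2, 0.3, 0.4),
    (1.2, 0.1, 0.1),
    (-0.1, 0.0, 0.0),
]
grid = voxelize(cloud, voxel_size=0.5)
for voxel, n in sorted(grid.items()):
    print(voxel, n)
```

The occupancy counts can then be arranged into a dense 3-D array over a fixed bounding box before being passed to a clustering or classification stage.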
