Minghui Huang

Atomic-SNLI: Fine-Grained Natural Language Inference through Atomic Fact Decomposition

Jan 10, 2026

Abstract: Current Natural Language Inference (NLI) systems primarily operate at the sentence level, providing black-box decisions that lack explanatory power. While atomic-level NLI offers a promising alternative by decomposing hypotheses into individual facts, we demonstrate that the conventional assumption that a hypothesis is entailed only when all its atomic facts are entailed fails in practice due to models' poor performance on fine-grained reasoning. Our analysis reveals that existing models perform substantially worse on atomic-level inference than on sentence-level tasks. To address this limitation, we introduce Atomic-SNLI, a novel dataset constructed by decomposing SNLI and enriching it with carefully curated atomic-level examples through linguistically informed generation strategies. Experimental results demonstrate that models fine-tuned on Atomic-SNLI achieve significant improvements in atomic reasoning capabilities while maintaining strong sentence-level performance, enabling both accurate judgements and transparent, explainable results at the fact level.
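The conventional aggregation assumption mentioned in the abstract above can be illustrated with a minimal sketch. This is not the authors' code: the function name `aggregate_atomic_labels`, the label strings, and the handling of contradiction and neutral cases are assumptions made for illustration. Only the rule "entailed only when all atomic facts are entailed" comes from the abstract.

```python
from typing import List

# Label strings are an assumption; SNLI uses these three classes.
ENTAILMENT = "entailment"
NEUTRAL = "neutral"
CONTRADICTION = "contradiction"


def aggregate_atomic_labels(atomic_labels: List[str]) -> str:
    """Combine per-fact NLI labels into one sentence-level label.

    Rule from the abstract: the hypothesis is entailed only when
    every atomic fact is entailed.
    Assumption (not from the abstract): any contradicted fact makes
    the whole hypothesis a contradiction; otherwise it is neutral.
    """
    if not atomic_labels:
        raise ValueError("at least one atomic fact label is required")
    if all(label == ENTAILMENT for label in atomic_labels):
        return ENTAILMENT
    if any(label == CONTRADICTION for label in atomic_labels):
        return CONTRADICTION
    return NEUTRAL
```

Under this rule a single misclassified atomic fact flips the sentence-level decision, which is consistent with the abstract's point that weak atomic-level predictions make the assumption fail in practice.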

TPRM: A Topic-based Personalized Ranking Model for Web Search

Aug 13, 2021

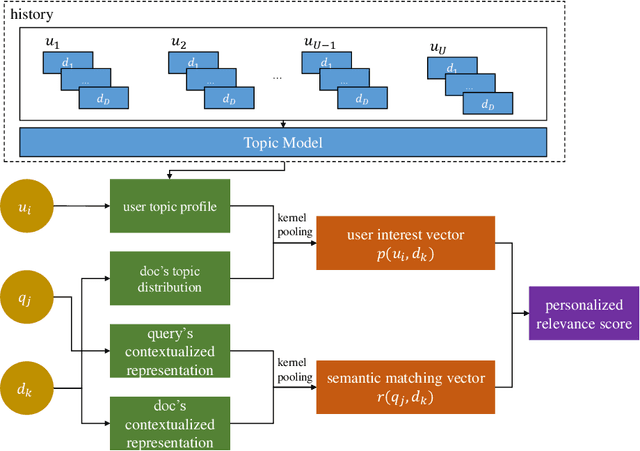

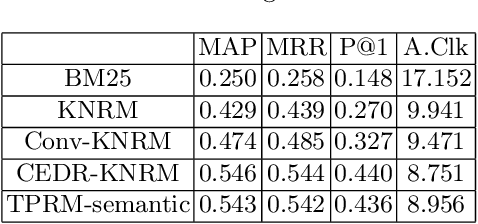

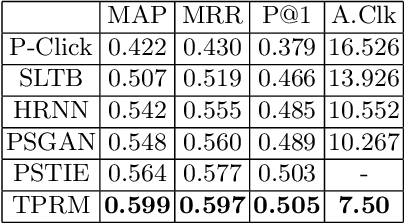

Abstract: Ranking models have achieved promising results, but it remains challenging to design personalized ranking systems that leverage user profiles and semantic representations between queries and documents. In this paper, we propose a topic-based personalized ranking model (TPRM) that integrates a user's topical profile with pretrained contextualized term representations to tailor the general document ranking list. Experiments on a real-world dataset demonstrate that TPRM significantly outperforms state-of-the-art ad-hoc ranking models and personalized ranking models.

GQE-PRF: Generative Query Expansion with Pseudo-Relevance Feedback

Aug 13, 2021

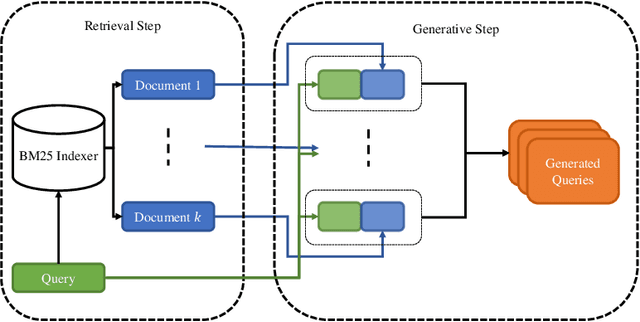

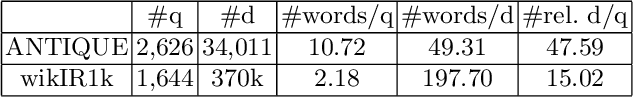

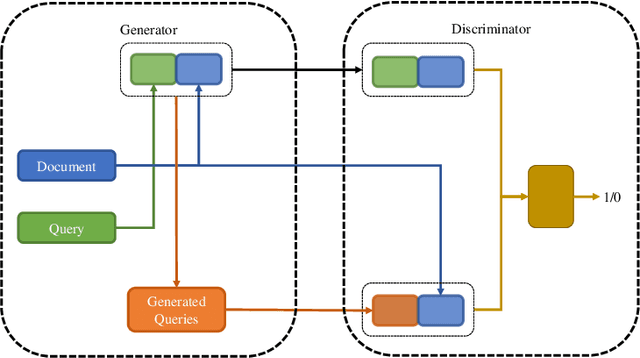

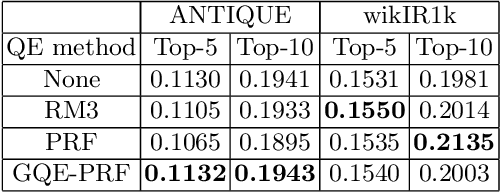

Abstract: Query expansion with pseudo-relevance feedback (PRF) is a powerful approach to enhancing the effectiveness of information retrieval. Recently, with the rapid advance of deep learning techniques, neural text generation has achieved promising success in many natural language tasks. To leverage the strength of text generation for information retrieval, in this article, we propose a novel approach which effectively integrates text generation models into PRF-based query expansion. In particular, our approach generates augmented query terms via neural text generation models conditioned on both the initial query and pseudo-relevance feedback. Moreover, in order to train the generative model, we adopt conditional generative adversarial nets (CGANs) and propose the PRF-CGAN method, in which both the generator and the discriminator are conditioned on the pseudo-relevance feedback. We evaluate the performance of our approach on information retrieval tasks using two benchmark datasets. The experimental results show that our approach matches or outperforms traditional query expansion methods on both the retrieval and reranking tasks.
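For readers unfamiliar with pseudo-relevance feedback, the sketch below shows the traditional, count-based form of PRF query expansion that the abstract uses as its point of comparison. It is not the generative PRF-CGAN method. The function name `expand_query`, the whitespace tokenization, and the parameters `top_k` and `num_terms` are all assumptions made for illustration.

```python
from collections import Counter
from typing import List


def expand_query(query: str, ranked_docs: List[str],
                 top_k: int = 2, num_terms: int = 2) -> List[str]:
    """Expand a query with frequent terms from the top-ranked documents.

    The top_k documents of an initial ranking are treated as if they
    were relevant (the "pseudo" in pseudo-relevance feedback), and the
    most frequent terms not already in the query are appended.
    """
    query_terms = query.lower().split()
    counts: Counter = Counter()
    for doc in ranked_docs[:top_k]:
        # Count only terms that would actually add something to the query.
        counts.update(t for t in doc.lower().split() if t not in query_terms)
    expansion = [term for term, _ in counts.most_common(num_terms)]
    return query_terms + expansion


docs = [
    "neural ranking neural models",
    "neural retrieval models",
    "cooking recipes",  # below the top_k cutoff, so it is ignored
]
print(expand_query("ranking", docs))  # ['ranking', 'neural', 'models']
```

In the approach described in the abstract, the counting step would be replaced by a text generation model conditioned on the query and the feedback documents. A production system would also filter stopwords and weight terms, which this sketch omits.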
