Mingchen Gao

Brain Tumor Segmentation on MRI with Missing Modalities

Apr 15, 2019

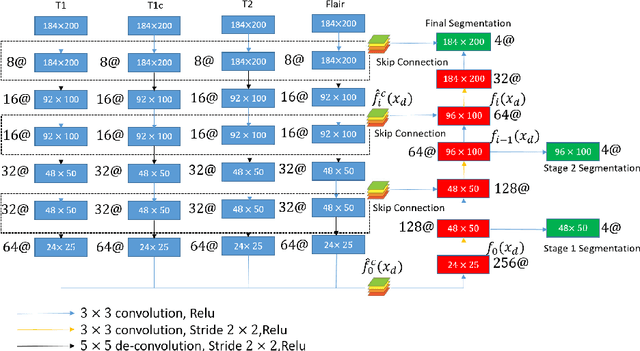

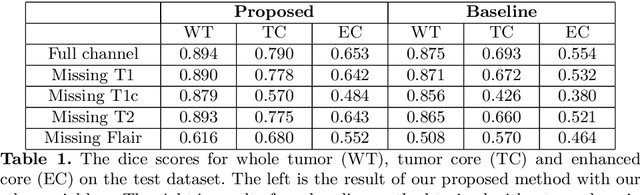

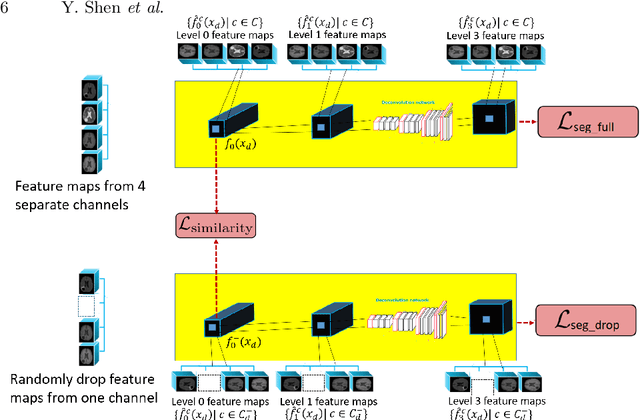

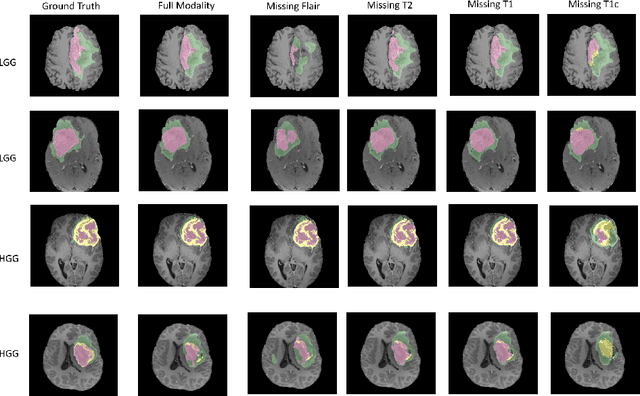

Abstract: Brain tumor segmentation from magnetic resonance imaging (MRI) is a critical technique for early diagnosis. However, rather than having all four modalities as in the BraTS dataset, it is common to have missing modalities in clinical scenarios. We design a brain tumor segmentation algorithm that is robust to the absence of any modality. Our network includes a channel-independent encoding path and a feature-fusion decoding path. We use self-supervised training through channel dropout and also propose a novel domain adaptation method on feature maps to recover the information from the missing channel. Our results demonstrate that the quality of the segmentation depends on which modality is missing. Furthermore, we discuss and visualize the contribution of each modality to the segmentation results. These contributions align well with the expert screening routine.
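As an illustration of the channel-dropout idea mentioned in this abstract, the following is a minimal sketch, not the authors' implementation. It assumes each scan is a mapping from modality name to a flat list of voxel values; the function name `channel_dropout`, the `drop_prob` parameter, and the rule of always keeping at least one modality are hypothetical choices made for the example. The modality names are the four standard BraTS sequences.

```python
import random


def channel_dropout(channels, drop_prob=0.25, rng=None):
    """Zero out whole modality channels at random (illustrative sketch).

    channels: dict mapping a modality name to a list of voxel values.
    Returns (new_channels, dropped_names). At least one modality is
    always kept, which is an assumption of this example.
    """
    rng = rng or random.Random()
    names = list(channels)
    dropped = [name for name in names if rng.random() < drop_prob]
    if len(dropped) == len(names):
        # Never drop every modality: restore one at random.
        dropped.remove(rng.choice(dropped))
    out = {}
    for name, values in channels.items():
        if name in dropped:
            out[name] = [0.0] * len(values)  # simulate a missing modality
        else:
            out[name] = list(values)  # copy the observed modality
    return out, dropped


if __name__ == "__main__":
    scan = {
        "T1": [1.0, 2.0],
        "T1ce": [3.0, 4.0],
        "T2": [5.0, 6.0],
        "FLAIR": [7.0, 8.0],
    }
    augmented, missing = channel_dropout(scan, rng=random.Random(0))
    print("dropped modalities:", missing)
```

Training on inputs augmented this way exposes the network to every combination of missing modalities, which is one plausible reading of how robustness to an absent channel could be encouraged.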

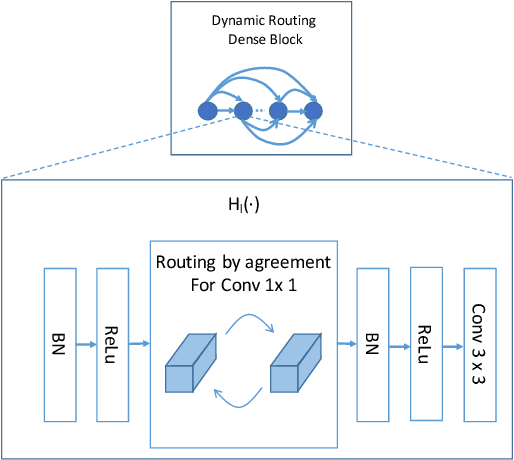

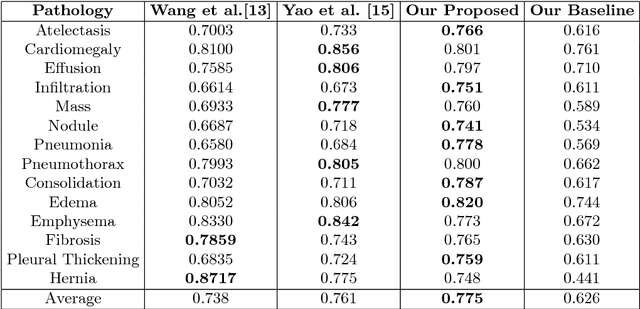

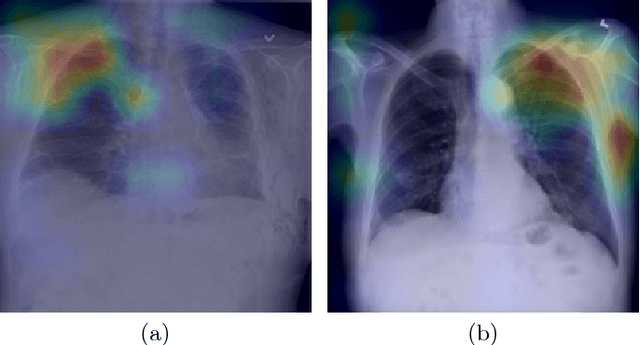

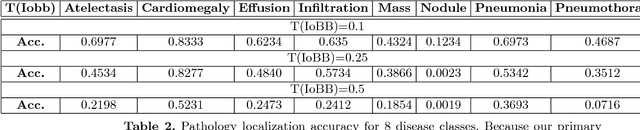

Dynamic Routing on Deep Neural Network for Thoracic Disease Classification and Sensitive Area Localization

Aug 17, 2018

Abstract: We present and evaluate a new deep neural network architecture for automatic thoracic disease detection on chest X-rays. Deep neural networks have shown great success in a plethora of visual recognition tasks such as image classification and object detection by stacking multiple layers of convolutional neural networks (CNNs) in a feed-forward manner. However, the performance gain from going deeper has reached a bottleneck as a result of the trade-off between model complexity and discrimination power. We address this problem by utilizing the recently developed routing-by-agreement mechanism in our architecture. A novel characteristic of our network structure is that it extends routing to two types of layer connections: (1) connections between feature maps in dense layers, and (2) connections between primary capsules and prediction capsules in the final classification layer. We show that our networks achieve comparable AUC scores with far fewer layers. We further show the combined benefits of model interpretability by generating Gradient-weighted Class Activation Mapping (Grad-CAM) for localization. We demonstrate our results on the NIH ChestX-ray14 dataset, which consists of 112,120 images from 30,805 unique patients and covers 14 kinds of lung disease.

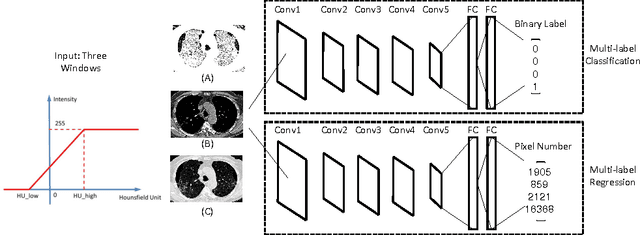

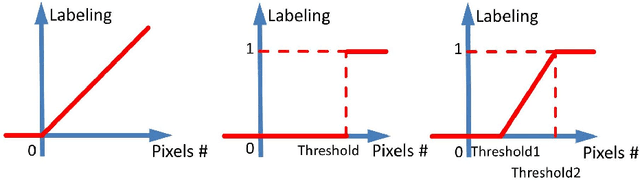

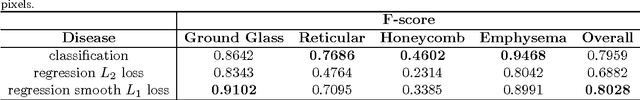

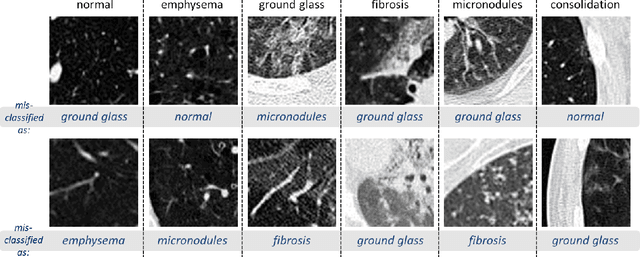

Holistic Interstitial Lung Disease Detection using Deep Convolutional Neural Networks: Multi-label Learning and Unordered Pooling

Jan 19, 2017

Abstract: Accurately predicting and detecting interstitial lung disease (ILD) patterns given any computed tomography (CT) slice without any pre-processing prerequisites, such as manually delineated regions of interest (ROIs), is a clinically desirable, yet challenging goal. The majority of existing work relies on manually provided ILD ROIs to extract sampled 2D image patches from CT slices and, from there, performs patch-based ILD categorization. Acquiring manual ROIs is labor intensive and serves as a bottleneck towards fully automated CT imaging ILD screening over large-scale populations. Furthermore, although more than one ILD pattern frequently appears on a single CT slice, previous works are designed to detect only one ILD pattern per slice or patch. To tackle these two critical challenges, we present multi-label deep convolutional neural networks (CNNs) for detecting ILDs from holistic CT slices (instead of ROIs or sub-images). Conventional single-labeled CNN models can be augmented to cope with the possible presence of multiple ILD pattern labels, via 1) continuous-valued deep regression based robust norm loss functions or 2) a categorical objective as the sum of element-wise binary logistic losses. Our methods are evaluated and validated using a publicly available database of 658 patient CT scans under five-fold cross-validation, achieving promising performance on detecting four major ILD patterns: Ground Glass, Reticular, Honeycomb, and Emphysema. We also investigate the effectiveness of a CNN activation-based deep-feature encoding scheme using Fisher vector encoding, which treats ILD detection as spatially unordered deep texture classification.
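The second objective named in this abstract, a sum of element-wise binary logistic losses, can be sketched as follows. This is an illustrative example under stated assumptions rather than the paper's code: the function name `multilabel_logistic_loss` is hypothetical, the inputs are assumed to be one raw score (logit) per ILD pattern, and the targets are assumed to be 0/1 indicators in the order Ground Glass, Reticular, Honeycomb, Emphysema.

```python
import math


def multilabel_logistic_loss(logits, targets):
    """Sum of element-wise binary logistic losses (illustrative sketch).

    logits:  one raw score per label, any real number.
    targets: one 0/1 indicator per label; several may be 1 at once.
    """
    total = 0.0
    for z, y in zip(logits, targets):
        # Numerically stable form of
        # -[y * log(sigmoid(z)) + (1 - y) * log(1 - sigmoid(z))].
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total


if __name__ == "__main__":
    # Hypothetical slice showing both Ground Glass and Reticular patterns.
    scores = [2.0, 1.5, -3.0, -2.5]
    labels = [1, 1, 0, 0]
    print(multilabel_logistic_loss(scores, labels))
```

Because each label contributes its own independent term, a slice can be positive for several patterns at once, unlike a softmax objective that forces the labels to compete.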

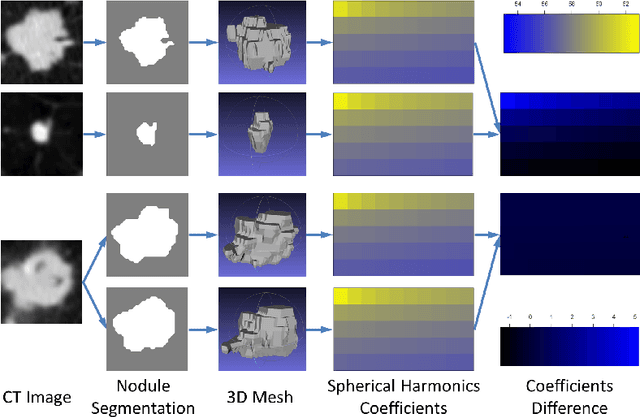

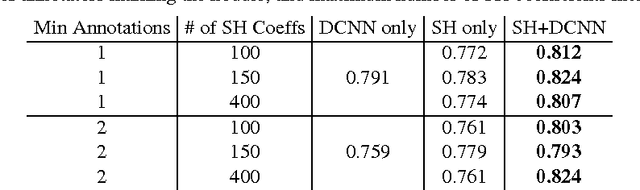

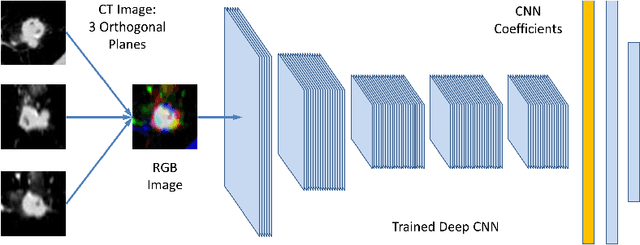

Characterization of Lung Nodule Malignancy using Hybrid Shape and Appearance Features

Sep 21, 2016

Abstract: Computed tomography imaging is a standard modality for detecting and assessing lung cancer. To evaluate the malignancy of lung nodules, clinical practice often involves expert qualitative ratings on several criteria describing a nodule's appearance and shape. Translating these features for computer-aided diagnostics is challenging due to their subjective nature and the difficulty of obtaining a complete description. In this paper, we propose a computerized approach to quantitatively evaluate both appearance distinctions and 3D surface variations. Nodule shape was modeled and parameterized using spherical harmonics, and appearance features were extracted using deep convolutional neural networks. Both sets of features were combined to estimate the nodule malignancy using a random forest classifier. The proposed algorithm was tested on the publicly available Lung Image Database Consortium dataset, achieving high accuracy. By providing lung nodule characterization, this method can provide a robust alternative reference opinion for lung cancer diagnosis.

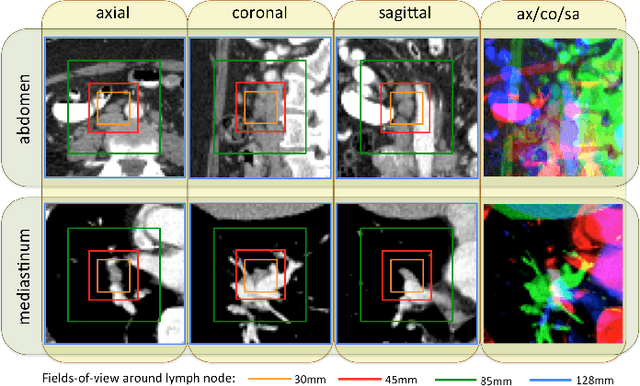

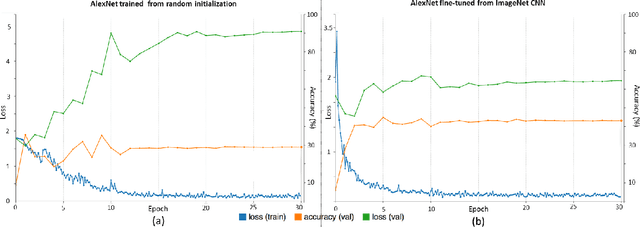

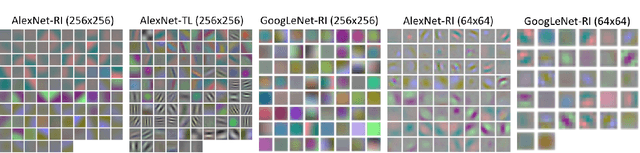

Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning

Feb 10, 2016

Abstract: Remarkable progress has been made in image recognition, primarily due to the availability of large-scale annotated datasets and the revival of deep CNNs. CNNs enable learning data-driven, highly representative, layered hierarchical image features from sufficient training data. However, obtaining datasets as comprehensively annotated as ImageNet in the medical imaging domain remains a challenge. There are currently three major techniques that successfully apply CNNs to medical image classification: training the CNN from scratch, using off-the-shelf pre-trained CNN features, and conducting unsupervised CNN pre-training with supervised fine-tuning. Another effective method is transfer learning, i.e., fine-tuning CNN models pre-trained on natural image datasets for medical image tasks. In this paper, we exploit three important, but previously understudied factors of employing deep convolutional neural networks for computer-aided detection problems. We first explore and evaluate different CNN architectures. The studied models contain 5 thousand to 160 million parameters, and vary in numbers of layers. We then evaluate the influence of dataset scale and spatial image context on performance. Finally, we examine when and why transfer learning from pre-trained ImageNet (via fine-tuning) can be useful. We study two specific computer-aided detection (CADe) problems, namely thoraco-abdominal lymph node (LN) detection and interstitial lung disease (ILD) classification. We achieve state-of-the-art performance on mediastinal LN detection, with 85% sensitivity at 3 false positives per patient, and report the first five-fold cross-validation classification results on predicting axial CT slices with ILD categories. Our extensive empirical evaluation, CNN model analysis and valuable insights can be extended to the design of high-performance CAD systems for other medical imaging tasks.
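To make a figure such as "sensitivity at 3 false positives per patient" concrete, here is a minimal sketch of how such an operating point might be computed from scored detection candidates. It is an assumption-laden illustration, not the paper's evaluation protocol: the function name and arguments are hypothetical, each true lesion is assumed to be matched by at most one candidate, and tied scores are not treated specially.

```python
def sensitivity_at_fp_rate(candidates, num_patients, num_true_lesions,
                           max_fp_per_patient=3.0):
    """Best sensitivity reachable within a false-positive budget (sketch).

    candidates: list of (score, is_true_positive) pairs pooled over all
                patients; a higher score means a more confident detection.
    """
    ranked = sorted(candidates, key=lambda c: c[0], reverse=True)
    tp = 0
    fp = 0
    best = 0.0
    # Lower the detection threshold one candidate at a time.
    for _score, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        if fp / num_patients > max_fp_per_patient:
            break  # false-positive budget exceeded
        best = tp / num_true_lesions
    return best


if __name__ == "__main__":
    # Hypothetical candidates for a single patient with four true lesions.
    cands = [(0.9, True), (0.8, False), (0.7, True),
             (0.6, False), (0.5, False), (0.4, True)]
    print(sensitivity_at_fp_rate(cands, num_patients=1, num_true_lesions=4))
```

Sweeping `max_fp_per_patient` over a range of values would trace out a free-response ROC curve, of which the reported figure is a single point.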
