Michalis Vazirgiannis

Ecole Polytechnique, AUEB

Cold Start Similar Artists Ranking with Gravity-Inspired Graph Autoencoders

Aug 02, 2021

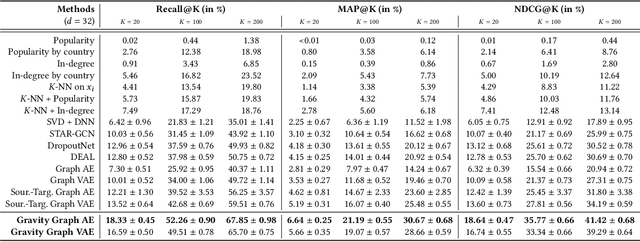

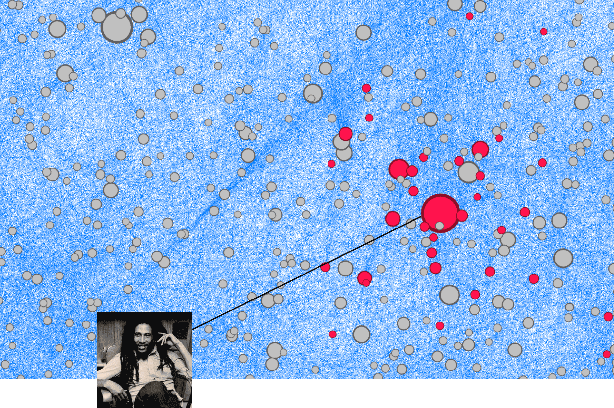

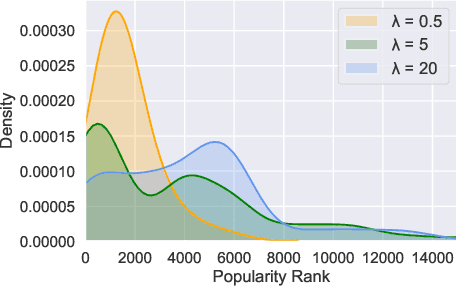

Abstract:On an artist's profile page, music streaming services frequently recommend a ranked list of "similar artists" that fans also liked. However, implementing such a feature is challenging for new artists, for which usage data on the service (e.g. streams or likes) is not yet available. In this paper, we model this cold start similar artists ranking problem as a link prediction task in a directed and attributed graph, connecting artists to their top-k most similar neighbors and incorporating side musical information. Then, we leverage a graph autoencoder architecture to learn node embedding representations from this graph, and to automatically rank the top-k most similar neighbors of new artists using a gravity-inspired mechanism. We empirically show the flexibility and the effectiveness of our framework, by addressing a real-world cold start similar artists ranking problem on a global music streaming service. Along with this paper, we also publicly release our source code as well as the industrial graph data from our experiments.
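The ranking step described in this abstract can be sketched as follows. This is a minimal illustration, not the authors' released code: it assumes a gravity-style decoder that scores a directed link from a new artist to an existing artist j as sigmoid(m_j - lam * log ||z_new - z_j||^2), where the "mass" m_j and the balance parameter `lam` are names chosen here for illustration. The embeddings and masses are hypothetical toy values; in practice they would come from the trained graph autoencoder.

```python
import math


def gravity_scores(embeddings, masses, new_embedding, lam=1.0, eps=1e-9):
    """Score a directed link from a new node to every existing node.

    Assumed decoder: sigmoid(m_j - lam * log(||z_new - z_j||^2)).
    A larger mass m_j or a smaller distance gives a higher score.
    """
    scores = {}
    for node, z_j in embeddings.items():
        dist_sq = sum((a - b) ** 2 for a, b in zip(new_embedding, z_j))
        logit = masses[node] - lam * math.log(dist_sq + eps)  # eps avoids log(0)
        scores[node] = 1.0 / (1.0 + math.exp(-logit))
    return scores


def top_k_similar(embeddings, masses, new_embedding, k=2, lam=1.0):
    """Return the k existing nodes with the highest gravity-style score."""
    scores = gravity_scores(embeddings, masses, new_embedding, lam=lam)
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical toy data: three existing artists and one cold-start artist.
embeddings = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [3.0, 4.0]}
masses = {"a": 0.0, "b": 0.0, "c": 0.0}
new_artist = [0.1, 0.0]

nearest_first = top_k_similar(embeddings, masses, new_artist, k=2)
```

With equal masses the ranking reduces to nearest neighbors in the embedding space. Raising the mass of a distant artist can move it ahead of closer ones, which is what makes the predicted links asymmetric.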

Operation Embeddings for Neural Architecture Search

May 11, 2021

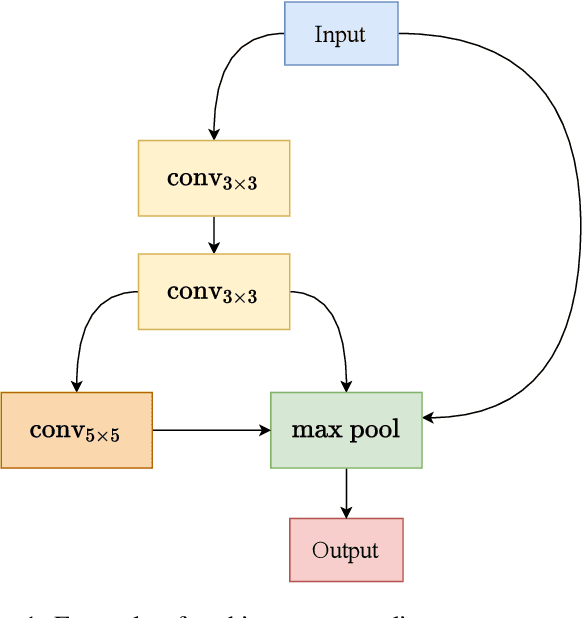

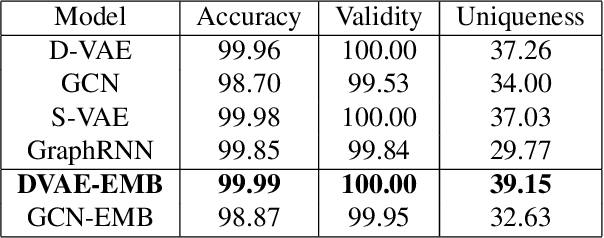

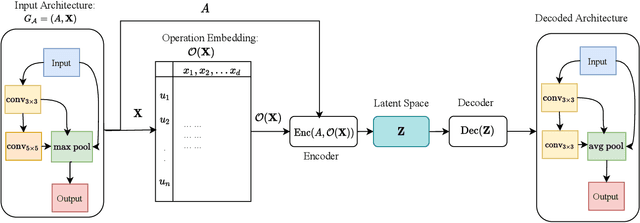

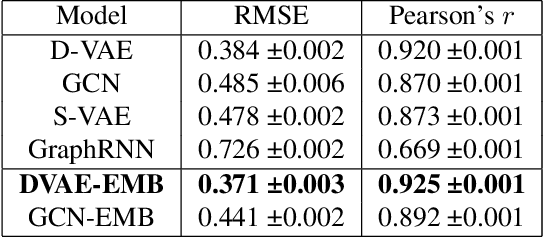

Abstract:Neural Architecture Search (NAS) has recently gained increased attention, as a class of approaches that automatically searches in an input space of network architectures. A crucial part of the NAS pipeline is the encoding of the architecture that consists of the applied computational blocks, namely the operations and the links between them. Most of the existing approaches either fail to capture the structural properties of the architectures or use a hand-engineered vector to encode the operator information. In this paper, we propose the replacement of fixed operator encoding with learnable representations in the optimization process. This approach, which effectively captures the relations between different operations, leads to smoother and more accurate representations of the architectures and consequently to improved performance on the end task. Our extensive evaluation on the ENAS benchmark demonstrates the effectiveness of the proposed operation embeddings for the generation of highly accurate models, achieving state-of-the-art performance. Finally, our method produces top-performing architectures that share similar operation and graph patterns, highlighting a strong correlation between an architecture's structural properties and its performance.

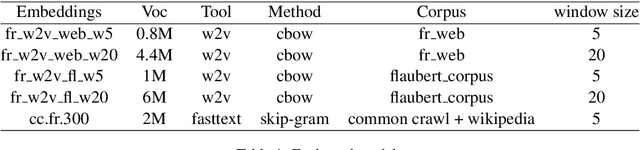

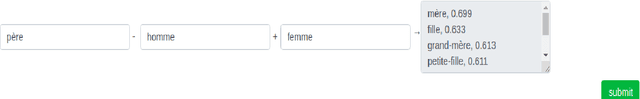

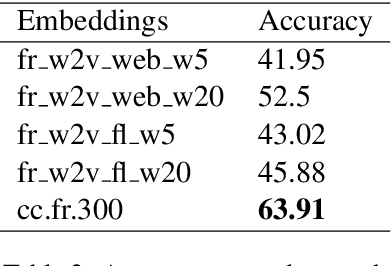

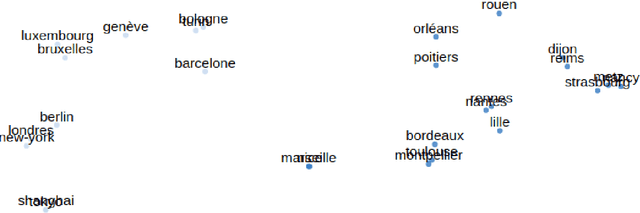

Evaluation Of Word Embeddings From Large-Scale French Web Content

May 05, 2021

Abstract:Distributed word representations are widely used in natural language processing, and word vectors pre-trained on huge text corpora have achieved high performance in many different NLP tasks. This paper introduces multiple high-quality sets of word vectors for the French language: two are trained on huge crawled French data and the others are trained on an already existing French corpus. We also evaluate the quality of our proposed word vectors and of the existing French word vectors on the French word analogy task. In addition, we evaluate them on multiple real NLP tasks, which show an important performance improvement of the pre-trained word vectors over the existing and random ones. Finally, we created a demo web application to test and visualize the obtained word embeddings. The produced French word embeddings are available to the public, along with the fine-tuning code on the NLU tasks and the demo code.
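For readers unfamiliar with the word analogy task mentioned above, here is a small sketch of how such an evaluation is commonly scored. It assumes the widely used vector-offset rule (pick the word closest in cosine similarity to b - a + c); the abstract does not say which scoring rule the authors use. The three-dimensional vectors are hypothetical toy values, not the released embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def solve_analogy(vectors, a, b, c):
    """Answer 'a is to b as c is to ?' with the vector-offset rule."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # The three query words are excluded from the candidates, as is customary.
    candidates = [w for w in vectors if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


def analogy_accuracy(vectors, questions):
    """Fraction of (a, b, c, expected) questions answered correctly."""
    correct = sum(solve_analogy(vectors, a, b, c) == d for a, b, c, d in questions)
    return correct / len(questions)


# Hypothetical toy embeddings: dimensions loosely mean (royalty, gender, other).
vectors = {
    "homme": [0.0, 1.0, 0.0],
    "femme": [0.0, -1.0, 0.0],
    "roi": [1.0, 1.0, 0.0],
    "reine": [1.0, -1.0, 0.0],
    "pomme": [0.0, 0.0, 1.0],
}
answer = solve_analogy(vectors, "homme", "femme", "roi")
accuracy = analogy_accuracy(vectors, [("homme", "femme", "roi", "reine")])
```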

Ego-based Entropy Measures for Structural Representations on Graphs

Feb 17, 2021

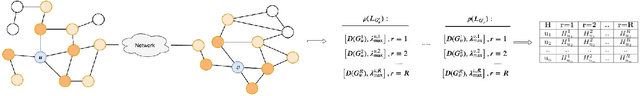

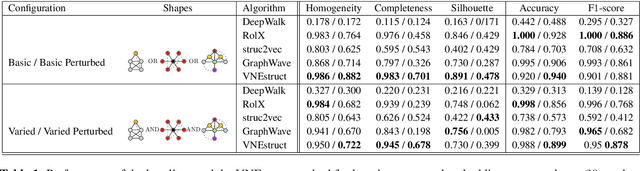

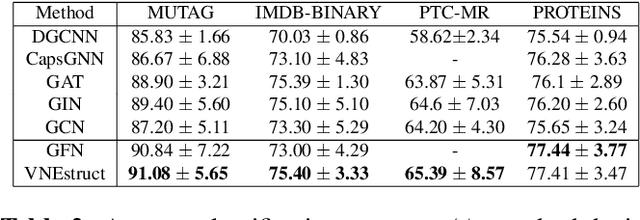

Abstract:Machine learning on graph-structured data has attracted high research interest due to the emergence of Graph Neural Networks (GNNs). Most of the proposed GNNs are based on node homophily, i.e., neighboring nodes share similar characteristics. However, in many complex networks, nodes that lie in distant parts of the graph share structurally equivalent characteristics and exhibit similar roles (e.g., chemical properties of distant atoms in a molecule, type of social network users). A growing literature has proposed representations that identify structurally equivalent nodes. However, most of the existing methods have high time and space complexity. In this paper, we propose VNEstruct, a simple approach, based on entropy measures of the neighborhood's topology, for generating low-dimensional structural representations, that is time-efficient and robust to graph perturbations. Empirically, we observe that VNEstruct exhibits robustness on structural role identification tasks. Moreover, VNEstruct can achieve state-of-the-art performance on graph classification, without incorporating the graph structure information in the optimization, in contrast to GNN competitors.

How COVID-19 Is Changing Our Language : Detecting Semantic Shift in Twitter Word Embeddings

Feb 15, 2021

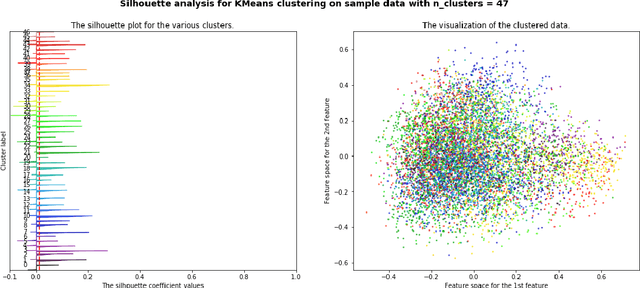

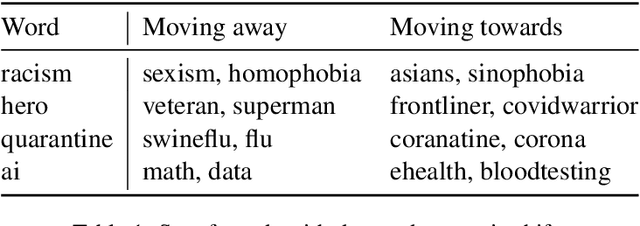

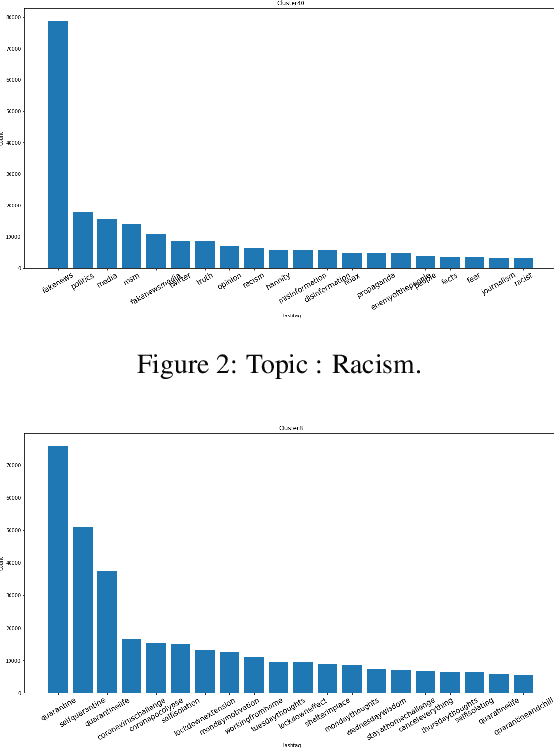

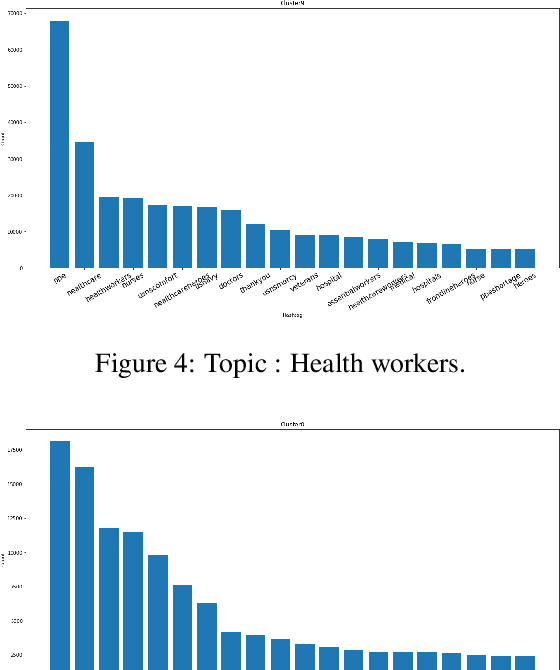

Abstract:Words are malleable objects, influenced by events that are reflected in written texts. Situated in the global outbreak of COVID-19, our research aims at detecting semantic shifts in social media language triggered by the health crisis. With COVID-19 related big data extracted from Twitter, we train separate word embedding models for different time periods after the outbreak. We employ an alignment-based approach to compare these embeddings with a general-purpose Twitter embedding unrelated to COVID-19. We also compare our trained embeddings with one another to observe diachronic evolution. Carrying out case studies on a set of words chosen by topic detection, we verify that our alignment approach is valid. Finally, we quantify the size of the global semantic shift by a stability measure based on back-and-forth rotational alignment.

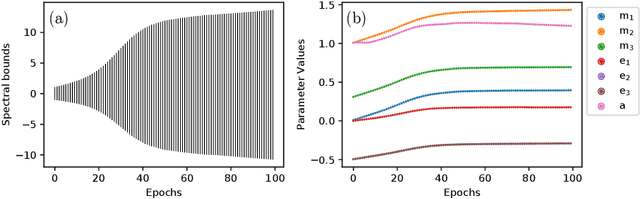

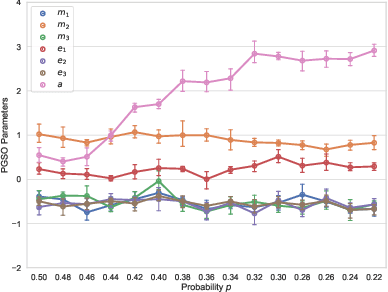

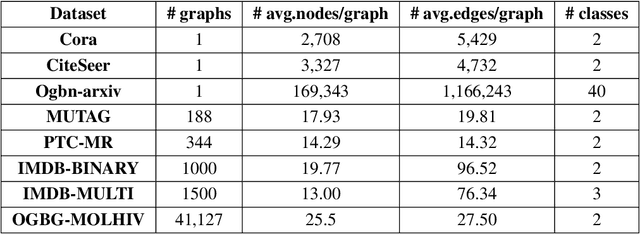

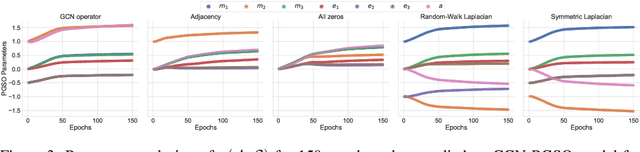

Learning Parametrised Graph Shift Operators

Jan 25, 2021

Abstract:In many domains data is currently represented as graphs and therefore, the graph representation of this data becomes increasingly important in machine learning. Network data is, implicitly or explicitly, always represented using a graph shift operator (GSO) with the most common choices being the adjacency, Laplacian matrices and their normalisations. In this paper, a novel parametrised GSO (PGSO) is proposed, where specific parameter values result in the most commonly used GSOs and message-passing operators in graph neural network (GNN) frameworks. The PGSO is suggested as a replacement of the standard GSOs that are used in state-of-the-art GNN architectures and the optimisation of the PGSO parameters is seamlessly included in the model training. It is proved that the PGSO has real eigenvalues and a set of real eigenvectors independent of the parameter values and spectral bounds on the PGSO are derived. PGSO parameters are shown to adapt to the sparsity of the graph structure in a study on stochastic blockmodel networks, where they are found to automatically replicate the GSO regularisation found in the literature. On several real-world datasets the accuracy of state-of-the-art GNN architectures is improved by the inclusion of the PGSO in both node- and graph-classification tasks.
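To make the claim that "specific parameter values result in the most commonly used GSOs" concrete, the sketch below assumes a parametrisation of the form m1 * D_a^e1 + m2 * D_a^e2 * A_a * D_a^e3 + m3 * I, with A_a = A + a * I and D_a the diagonal degree matrix of A_a. The abstract does not state the formula, so this form and the parameter names are assumptions made for illustration. Plain nested lists are used to keep the example dependency-free.

```python
def pgso(adj, m1, m2, m3, e1, e2, e3, a):
    """Assumed parametrised graph shift operator on a dense adjacency matrix.

    Computes m1 * D_a**e1 + m2 * D_a**e2 @ A_a @ D_a**e3 + m3 * I, where
    A_a = A + a * I and D_a = diag(row sums of A_a). Degrees must be positive
    whenever a negative exponent is used (no isolated nodes, or a > 0).
    """
    n = len(adj)
    adj_a = [[adj[i][j] + (a if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in adj_a]
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            out[i][j] = m2 * deg[i] ** e2 * adj_a[i][j] * deg[j] ** e3
        out[i][i] += m1 * deg[i] ** e1 + m3
    return out


# Path graph on three nodes: 0 - 1 - 2.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]

adjacency = pgso(A, m1=0, m2=1, m3=0, e1=0, e2=0, e3=0, a=0)
laplacian = pgso(A, m1=1, m2=-1, m3=0, e1=1, e2=0, e3=0, a=0)
# Symmetrically normalised adjacency with self-loops, as used in GCN-style layers.
gcn_operator = pgso(A, m1=0, m2=1, m3=0, e1=0, e2=-0.5, e3=-0.5, a=1)
```

In a trainable setting the seven scalars would be model parameters updated by gradient descent alongside the network weights; here they are fixed to show which settings recover the familiar operators.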

BARThez: a Skilled Pretrained French Sequence-to-Sequence Model

Oct 23, 2020

Abstract:Inductive transfer learning, enabled by self-supervised learning, has taken the entire Natural Language Processing (NLP) field by storm, with models such as BERT and BART setting new state of the art on countless natural language understanding tasks. While there are some notable exceptions, most of the available models and research have been conducted for the English language. In this work, we introduce BARThez, the first BART model for the French language (to the best of our knowledge). BARThez was pretrained on a very large monolingual French corpus from past research that we adapted to suit BART's perturbation schemes. Unlike already existing BERT-based French language models such as CamemBERT and FlauBERT, BARThez is particularly well-suited for generative tasks, since not only its encoder but also its decoder is pretrained. In addition to discriminative tasks from the FLUE benchmark, we evaluate BARThez on a novel summarization dataset, OrangeSum, that we release with this paper. We also continue the pretraining of an already pretrained multilingual BART on BARThez's corpus, and we show that the resulting model, which we call mBARTHez, provides a significant boost over vanilla BARThez, and is on par with or outperforms CamemBERT and FlauBERT.

United We Stand: Transfer Graph Neural Networks for Pandemic Forecasting

Sep 10, 2020

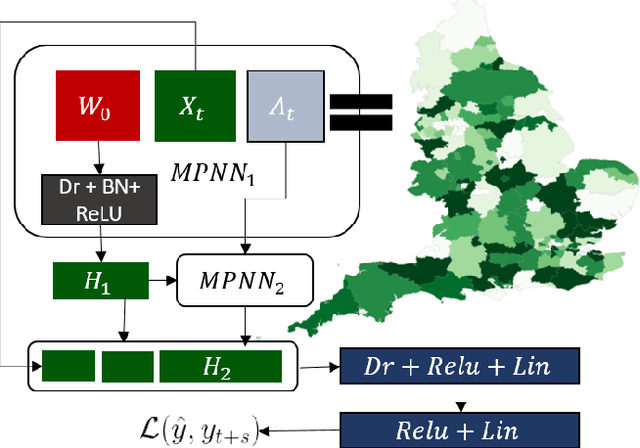

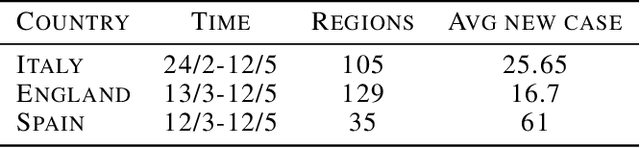

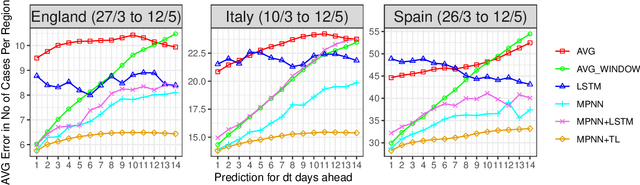

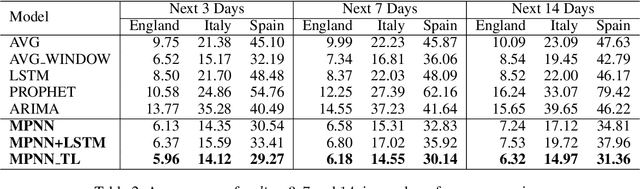

Abstract:The recent outbreak of COVID-19 has affected millions of individuals around the world and has posed a significant challenge to global healthcare. From the early days of the pandemic, it became clear that it is highly contagious and that human mobility contributes significantly to its spread. In this paper, we study the impact of population movement on the spread of COVID-19, and we capitalize on recent advances in the field of representation learning on graphs to capture the underlying dynamics. Specifically, we create a graph where nodes correspond to a country's regions and the edge weights denote human mobility from one region to another. Then, we employ graph neural networks to predict the number of future cases, encoding the underlying diffusion patterns that govern the spread into our learning model. Furthermore, to account for the limited amount of training data, we capitalize on the pandemic's asynchronous outbreaks across countries and use a model-agnostic meta-learning based method to transfer knowledge from one country's model to another's. We compare the proposed approach against simple baselines and more traditional forecasting techniques in 3 European countries. Experimental results demonstrate the superiority of our method, highlighting the usefulness of GNNs in epidemiological prediction. Transfer learning provides the best model, highlighting its potential to improve the accuracy of the predictions in case of secondary waves, if data from past/parallel outbreaks is utilized.
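The graph construction described above (regions as nodes, mobility as edge weights) can be illustrated with a single neighborhood-aggregation step. This is a deliberately stripped-down sketch: it has no learned weights, no nonlinearity and no meta-learning, and the self-loops for within-region movement, the region names and the numbers are all hypothetical.

```python
def aggregate_cases(mobility, cases):
    """One mobility-weighted aggregation step over a region graph.

    mobility maps (source, destination) pairs to a flow volume, and cases maps
    each region to its recent case count. Each region receives the average of
    the case counts of the regions feeding into it, weighted by inflow share.
    """
    regions = list(cases)
    aggregated = {}
    for dst in regions:
        inflow = {src: mobility.get((src, dst), 0.0) for src in regions}
        total = sum(inflow.values())
        if total == 0:
            # No recorded movement into this region: keep its own signal.
            aggregated[dst] = float(cases[dst])
            continue
        aggregated[dst] = sum(w * cases[src] for src, w in inflow.items()) / total
    return aggregated


# Hypothetical toy data: most movement stays within a region (self-loops),
# and some travellers go from region B to region A.
mobility = {("A", "A"): 8.0, ("B", "A"): 2.0, ("B", "B"): 10.0}
cases = {"A": 100, "B": 50}
mixed = aggregate_cases(mobility, cases)
```

A graph neural network layer would follow this aggregation with a learned linear map and a nonlinearity, and stack several such layers before predicting future case counts.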

Predicting conversions in display advertising based on URL embeddings

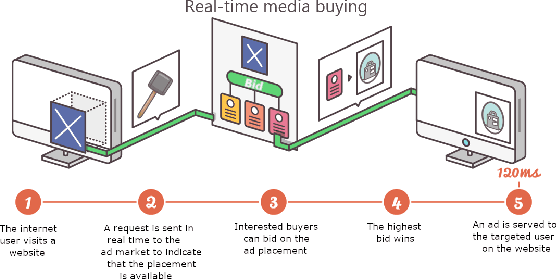

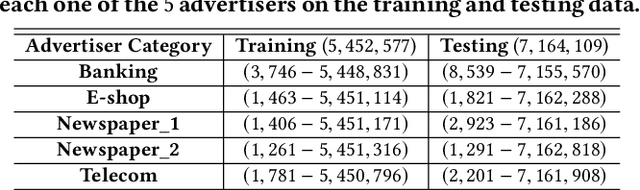

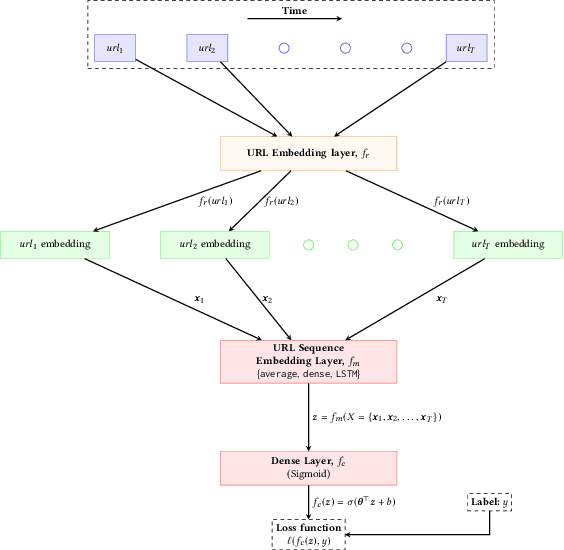

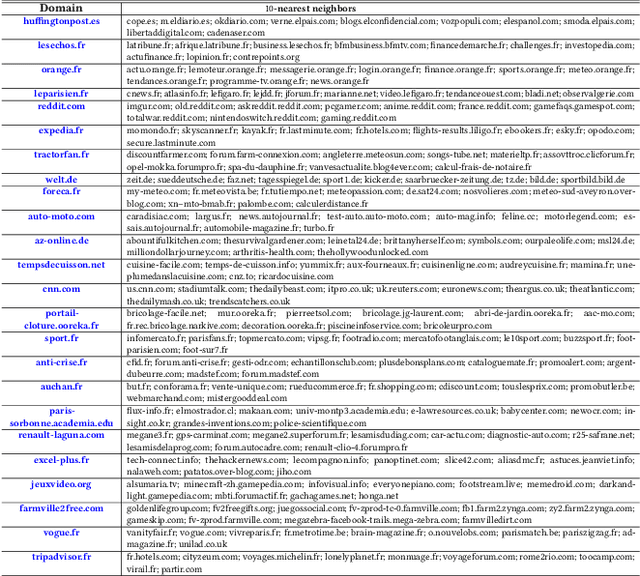

Aug 28, 2020

Abstract:Online display advertising has grown rapidly in recent years thanks to the automation of the ad buying process. Real-time bidding (RTB) allows the automated trading of ad impressions between advertisers and publishers through real-time auctions. In order to increase the effectiveness of their campaigns, advertisers should deliver ads to the users who are highly likely to convert (e.g., through a purchase, registration or website visit) in the near future. In this study, we introduce and examine different models for estimating the probability of a user converting, given their history of visited URLs. Inspired by natural language processing, we introduce three URL embedding models to compute semantically meaningful URL representations. To demonstrate the effectiveness of the different proposed representation and conversion prediction models, we have conducted experiments on real logged events collected from an advertising platform.

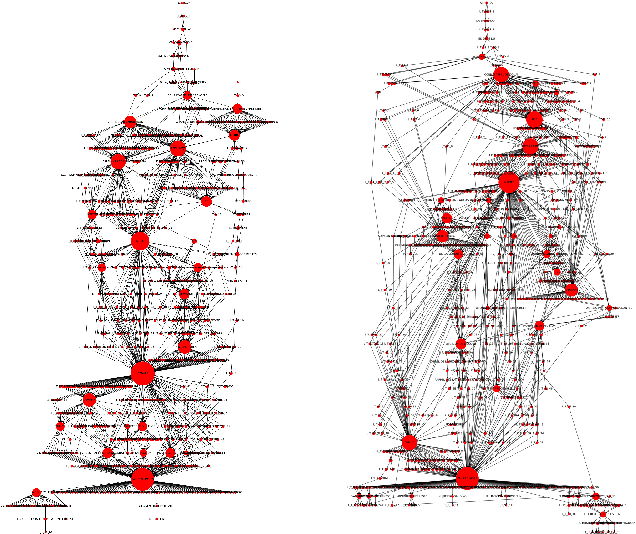

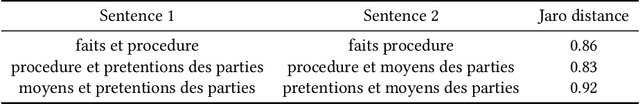

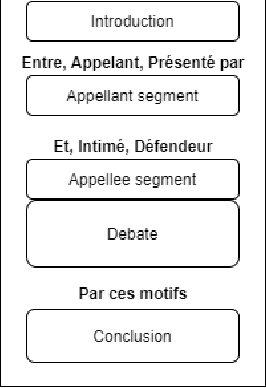

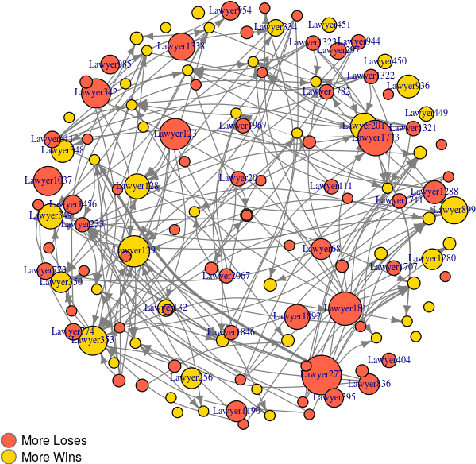

Performance in the Courtroom: Automated Processing and Visualization of Appeal Court Decisions in France

Jul 09, 2020

Abstract:Artificial Intelligence techniques are already popular and important in the legal domain. We extract legal indicators from judicial judgments to decrease the asymmetry of information in the legal system and the access-to-justice gap. We use NLP methods to extract interesting entities/data from judgments to construct networks of lawyers and judgments. We propose metrics to rank lawyers based on their experience, win/loss ratio and their importance in the network of lawyers. We also perform community detection in the network of judgments and propose metrics to represent the difficulty of cases, capitalising on community features.
