Michalis Vazirgiannis

Ecole Polytechnique, AUEB

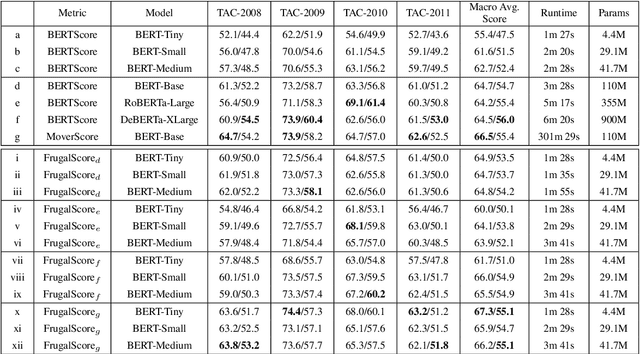

AraBART: a Pretrained Arabic Sequence-to-Sequence Model for Abstractive Summarization

Mar 21, 2022

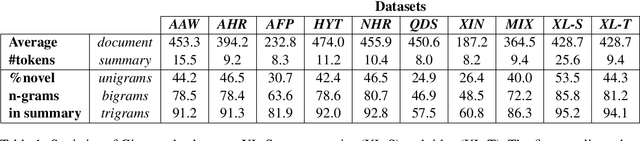

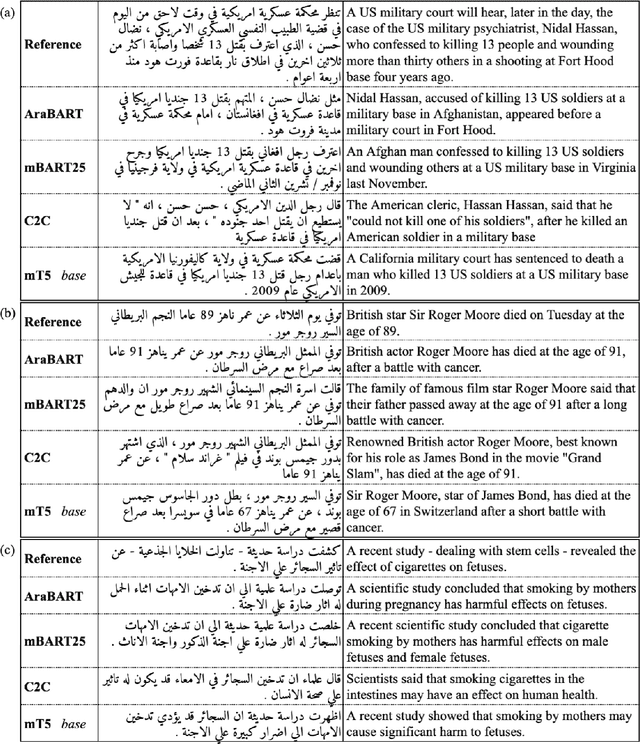

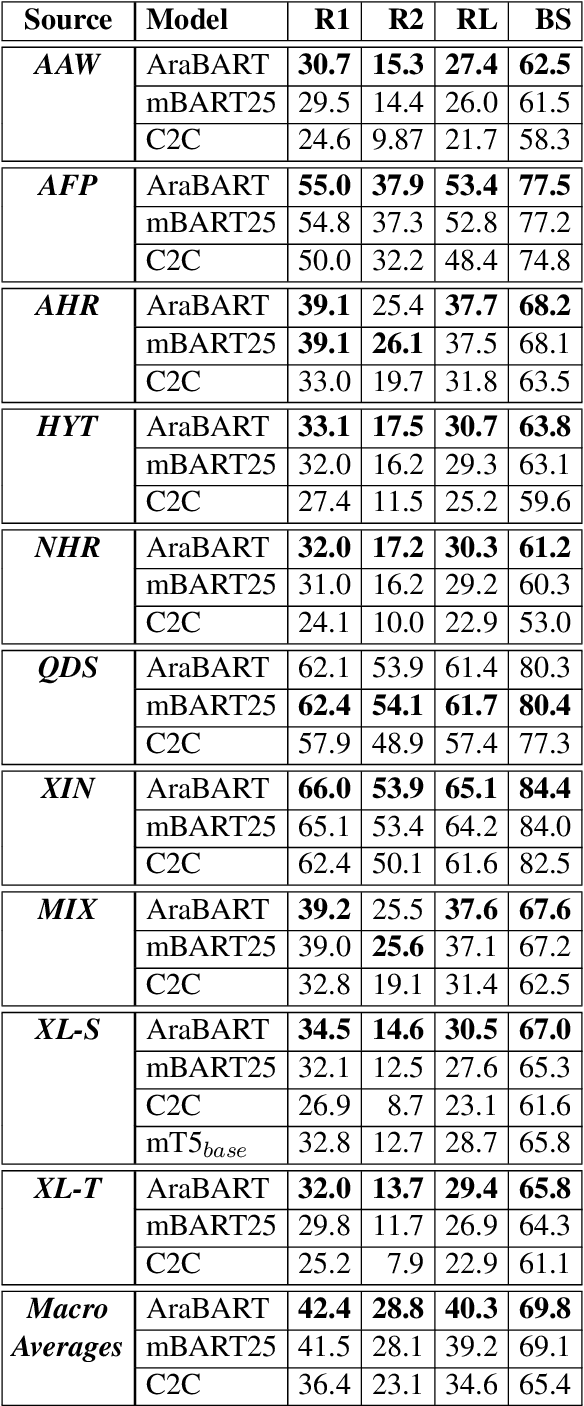

Abstract: Like most natural language understanding and generation tasks, state-of-the-art models for summarization are transformer-based sequence-to-sequence architectures that are pretrained on large corpora. While most existing models focused on English, Arabic remained understudied. In this paper we propose AraBART, the first Arabic model in which the encoder and the decoder are pretrained end-to-end, based on BART. We show that AraBART achieves the best performance on multiple abstractive summarization datasets, outperforming strong baselines including a pretrained Arabic BERT-based model and multilingual mBART and mT5 models.

Modularity-Aware Graph Autoencoders for Joint Community Detection and Link Prediction

Feb 02, 2022

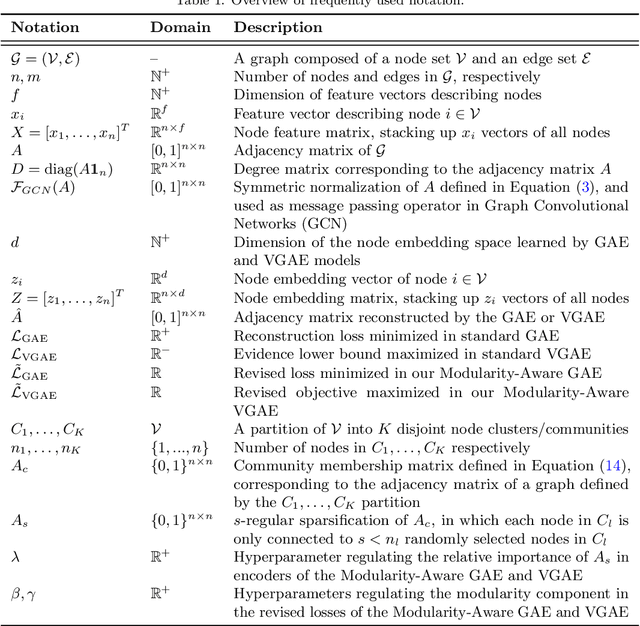

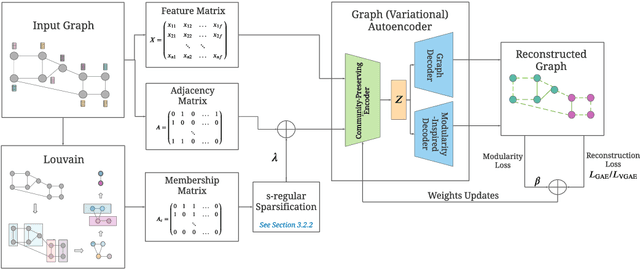

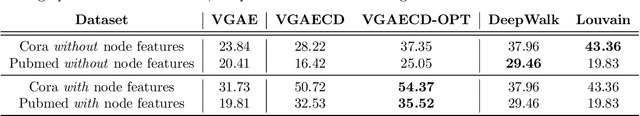

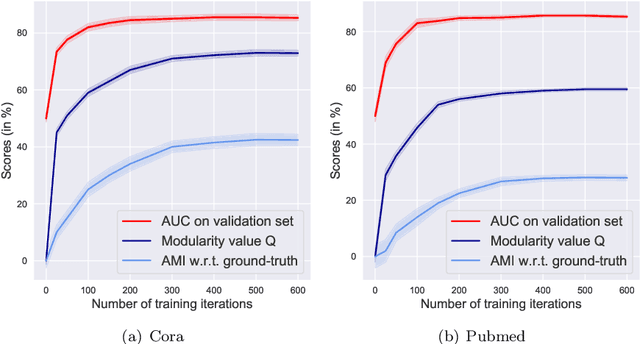

Abstract: Graph autoencoders (GAE) and variational graph autoencoders (VGAE) emerged as powerful methods for link prediction. Their performance is less impressive on community detection problems where, according to recent and concurring experimental evaluations, they are often outperformed by simpler alternatives such as the Louvain method. It is currently still unclear to what extent one can improve community detection with GAE and VGAE, especially in the absence of node features. It is moreover uncertain whether one could do so while simultaneously preserving good performance on link prediction. In this paper, we show that jointly addressing these two tasks with high accuracy is possible. For this purpose, we introduce and theoretically study a community-preserving message passing scheme, doping our GAE and VGAE encoders by considering both the initial graph structure and modularity-based prior communities when computing embedding spaces. We also propose novel training and optimization strategies, including the introduction of a modularity-inspired regularizer complementing the existing reconstruction losses for joint link prediction and community detection. We demonstrate the empirical effectiveness of our approach, referred to as Modularity-Aware GAE and VGAE, through in-depth experimental validation on various real-world graphs.
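For readers unfamiliar with the quantity behind "modularity-based" communities, the following is a minimal sketch of the classical Newman-Girvan modularity of a given partition. It is not the paper's regularizer or message passing scheme; the function name `modularity` and the edge-list input format are assumptions made for illustration.

```python
from collections import defaultdict


def modularity(edges, community):
    """Newman-Girvan modularity Q of a partition of an undirected, unweighted graph.

    edges: iterable of (u, v) pairs, each undirected edge listed once.
    community: dict mapping every node to its community label.
    """
    edges = list(edges)
    m = len(edges)
    if m == 0:
        return 0.0
    intra = defaultdict(int)       # edges with both endpoints in the community
    degree_sum = defaultdict(int)  # sum of node degrees per community
    for u, v in edges:
        degree_sum[community[u]] += 1
        degree_sum[community[v]] += 1
        if community[u] == community[v]:
            intra[community[u]] += 1
    # Q = sum over communities of (fraction of intra edges) - (expected fraction)
    return sum(intra[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum)


# Two triangles joined by a single bridge edge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
print(modularity(edges, two_groups))
```

Methods such as Louvain search for the partition that maximises this score; a partition that places every node in one community scores zero.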

NLP Research and Resources at DaSciM, Ecole Polytechnique

Dec 01, 2021

Abstract: DaSciM (Data Science and Mining), part of LIX at Ecole Polytechnique, was established in 2013 and has since produced research results in large-scale data analysis using machine learning and deep learning methods. The group has been particularly active in NLP and text mining, with notable results at both the methodological and the resource level. Our contributions of interest to the AFIA community follow.

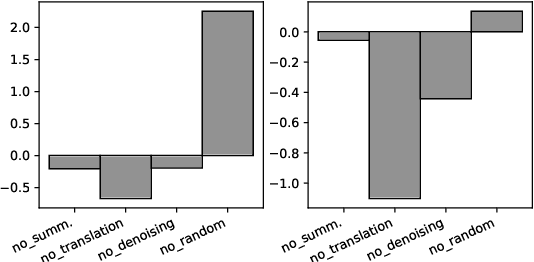

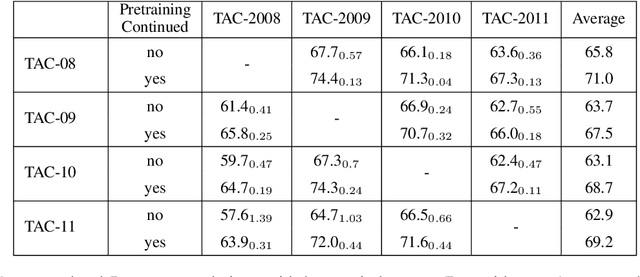

FrugalScore: Learning Cheaper, Lighter and Faster Evaluation Metrics for Automatic Text Generation

Oct 16, 2021

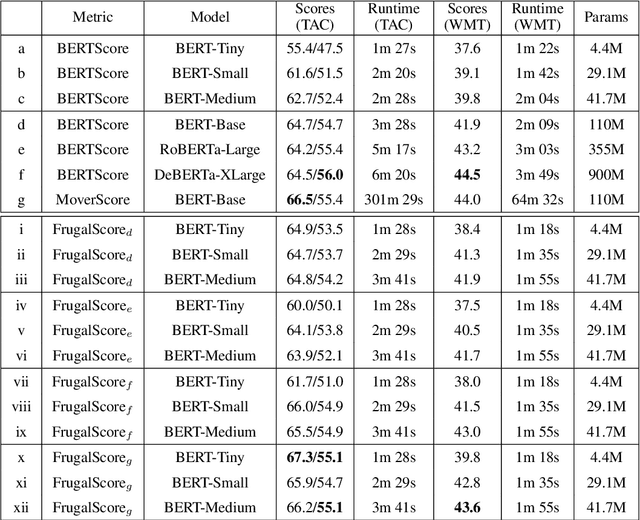

Abstract: Fast and reliable evaluation metrics are key to R&D progress. While traditional natural language generation metrics are fast, they are not very reliable. Conversely, new metrics based on large pretrained language models are much more reliable, but require significant computational resources. In this paper, we propose FrugalScore, an approach to learn a fixed, low-cost version of any expensive NLG metric, while retaining most of its original performance. Experiments with BERTScore and MoverScore on summarization and translation show that FrugalScore is on par with the original metrics (and sometimes better), while having several orders of magnitude fewer parameters and running several times faster. On average over all learned metrics, tasks, and variants, FrugalScore retains 96.8% of the performance, runs 24 times faster, and has 35 times fewer parameters than the original metrics. We make our trained metrics publicly available, to benefit the entire NLP community and in particular researchers and practitioners with limited resources.

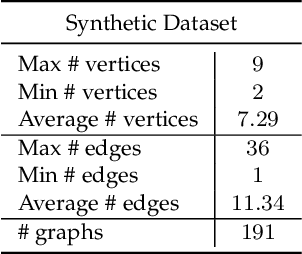

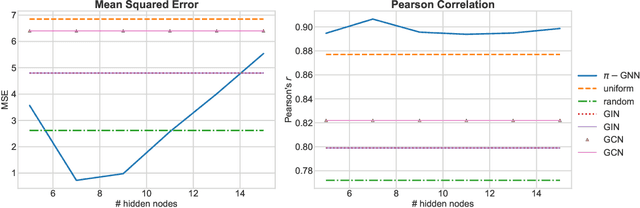

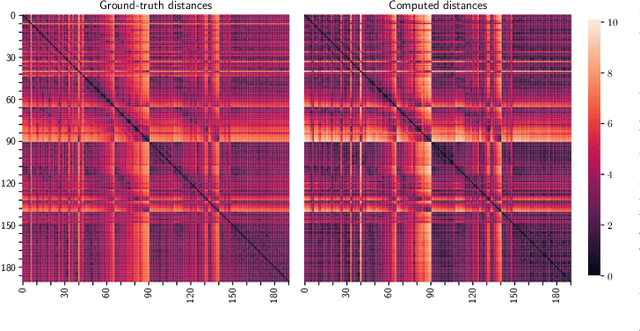

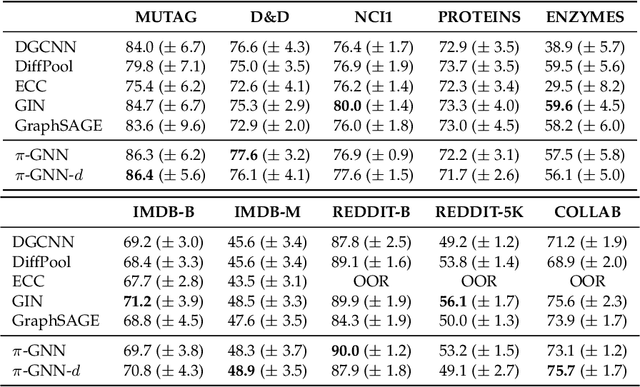

Permute Me Softly: Learning Soft Permutations for Graph Representations

Oct 05, 2021

Abstract: Graph neural networks (GNNs) have recently emerged as a dominant paradigm for machine learning with graphs. Research on GNNs has mainly focused on the family of message passing neural networks (MPNNs). Similar to the Weisfeiler-Leman (WL) test of isomorphism, these models follow an iterative neighborhood aggregation procedure to update vertex representations, and they next compute graph representations by aggregating the representations of the vertices. Although very successful, MPNNs have been studied intensively in the past few years. Thus, there is a need for novel architectures which will allow research in the field to break away from MPNNs. In this paper, we propose a new graph neural network model, called $\pi$-GNN, which learns a "soft" permutation (i.e., doubly stochastic) matrix for each graph, and thus projects all graphs into a common vector space. The learned matrices impose a "soft" ordering on the vertices of the input graphs, and based on this ordering, the adjacency matrices are mapped into vectors. These vectors can be fed into fully-connected or convolutional layers to deal with supervised learning tasks. For large graphs, to make the model more efficient in terms of running time and memory, we further relax the doubly stochastic matrices to row stochastic matrices. We empirically evaluate the model on graph classification and graph regression datasets and show that it achieves performance competitive with state-of-the-art models.
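To make "doubly stochastic" concrete, here is a small sketch that turns an arbitrary square score matrix into an approximately doubly stochastic one by Sinkhorn normalisation. The abstract does not say how the model enforces the constraint, so Sinkhorn is swapped in here as a common technique rather than presented as the paper's procedure; the name `sinkhorn` and its parameters are illustrative assumptions.

```python
import math


def sinkhorn(scores, n_iters=50):
    """Alternately normalise rows and columns of exp(scores).

    scores: square list-of-lists of floats.
    Returns a matrix whose rows and columns each sum to (approximately) 1.
    """
    n = len(scores)
    top = max(max(row) for row in scores)  # subtract the max for numerical stability
    P = [[math.exp(s - top) for s in row] for row in scores]
    for _ in range(n_iters):
        row_sums = [sum(row) for row in P]
        P = [[P[i][j] / row_sums[i] for j in range(n)] for i in range(n)]
        col_sums = [sum(P[i][j] for i in range(n)) for j in range(n)]
        P = [[P[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return P


P = sinkhorn([[0.0, 1.0, 2.0], [1.0, 0.5, 0.0], [2.0, 0.0, 1.0]])
for row in P:
    print([round(x, 3) for x in row])
```

Stopping after the row step alone gives a row stochastic matrix, which is the cheaper relaxation the abstract mentions for large graphs.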

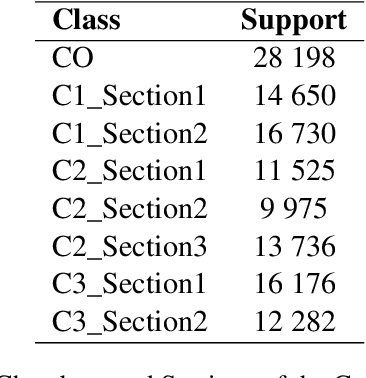

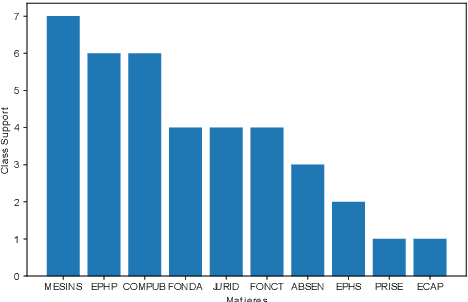

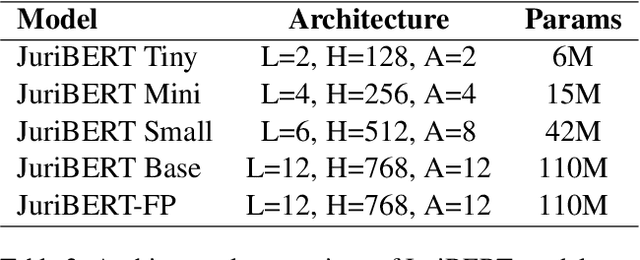

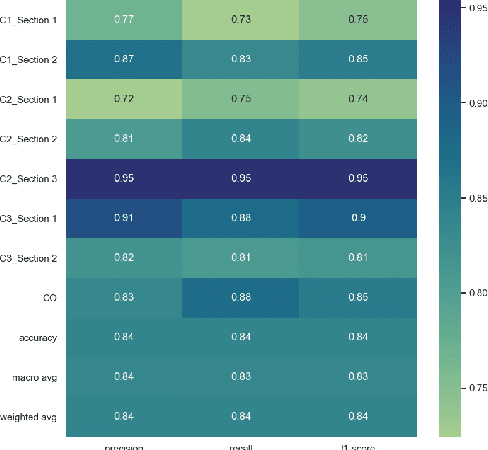

JuriBERT: A Masked-Language Model Adaptation for French Legal Text

Oct 04, 2021

Abstract: Language models have proven to be very useful when adapted to specific domains. Nonetheless, little research has been done on the adaptation of domain-specific BERT models in the French language. In this paper, we focus on creating a language model adapted to French legal text with the goal of helping law professionals. We conclude that some specific tasks do not benefit from generic language models pre-trained on large amounts of data. We explore the use of smaller architectures in domain-specific sub-languages and their benefits for French legal text. We prove that domain-specific pre-trained models can perform better than their equivalent generalised ones in the legal domain. Finally, we release JuriBERT, a new set of BERT models adapted to the French legal domain.

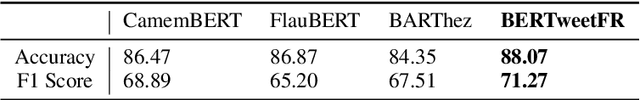

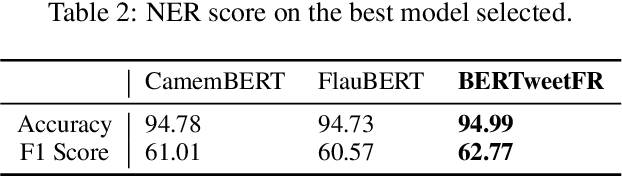

BERTweetFR: Domain Adaptation of Pre-Trained Language Models for French Tweets

Sep 21, 2021

Abstract: We introduce BERTweetFR, the first large-scale pre-trained language model for French tweets. Our model is initialized using the general-domain French language model CamemBERT, which follows the base architecture of RoBERTa. Experiments show that BERTweetFR outperforms all previous general-domain French language models on two downstream Twitter NLP tasks: offensiveness identification and named entity recognition. The dataset used in the offensiveness detection task was created and annotated by our team, filling the gap left by the lack of such analytic datasets in French. We make our model publicly available in the transformers library with the aim of promoting future research in analytic tasks for French tweets.

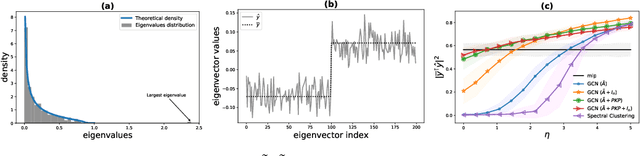

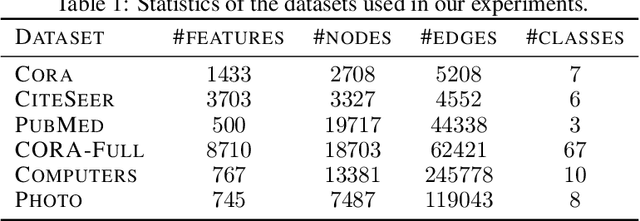

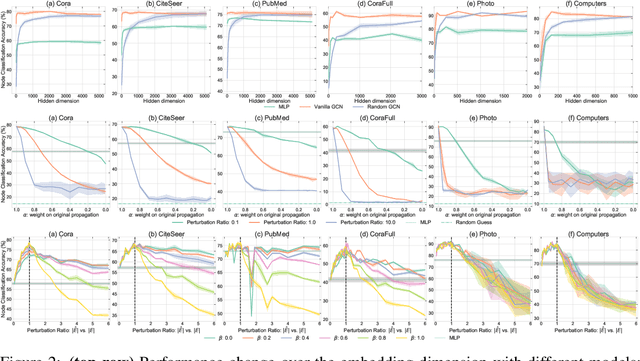

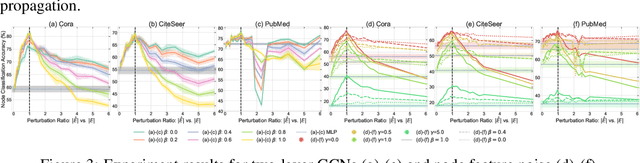

Node Feature Kernels Increase Graph Convolutional Network Robustness

Sep 04, 2021

Abstract: The robustness of the much-used Graph Convolutional Networks (GCNs) to perturbations of their input is becoming a topic of increasing importance. In this paper, the random GCN is introduced for which a random matrix theory analysis is possible. This analysis suggests that if the graph is sufficiently perturbed, or in the extreme case random, then the GCN fails to benefit from the node features. It is furthermore observed that enhancing the message passing step in GCNs by adding the node feature kernel to the adjacency matrix of the graph structure solves this problem. An empirical study of a GCN utilised for node classification on six real datasets further confirms the theoretical findings and demonstrates that perturbations of the graph structure can result in GCNs performing significantly worse than Multi-Layer Perceptrons run on the node features alone. In practice, adding a node feature kernel to the message passing of perturbed graphs results in a significant improvement of the GCN's performance, thereby rendering it more robust to graph perturbations. Our code is publicly available at: https://github.com/ChangminWu/RobustGCN.
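The idea of adding a node feature kernel to the adjacency matrix can be sketched in a few lines. This is an illustration only: the linear kernel $K_{ij} = \langle x_i, x_j \rangle$, the `weight` parameter and the function name `add_feature_kernel` are assumptions, and the normalisation a real GCN layer would apply is omitted. The authors' actual implementation is in the repository linked in the abstract.

```python
def add_feature_kernel(adj, features, weight=1.0):
    """Return adj + weight * K, where K[i][j] is the dot product of the
    feature vectors of nodes i and j (a linear kernel, chosen for illustration).

    adj: n x n adjacency matrix as a list of lists.
    features: n feature vectors, one per node.
    """
    n = len(adj)
    kernel = [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]
    return [[adj[i][j] + weight * kernel[i][j] for j in range(n)] for i in range(n)]


# A graph whose edges carry no information (empty adjacency matrix):
# the kernel term still links nodes that have similar features.
adj = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
for row in add_feature_kernel(adj, features):
    print(row)
```

In this toy case nodes 0 and 1 share features, so the augmented matrix connects them even though the original graph does not.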

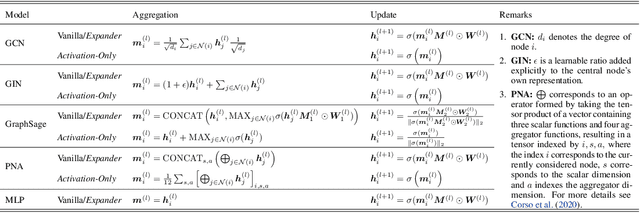

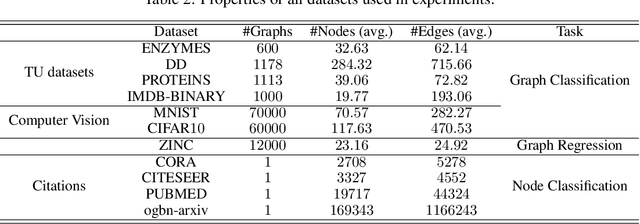

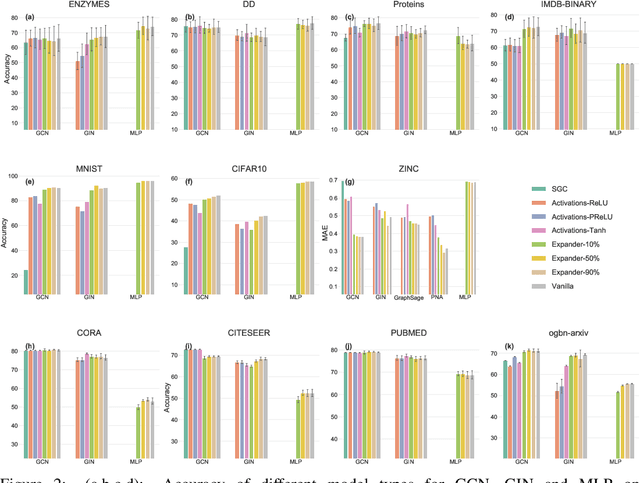

Sparsifying the Update Step in Graph Neural Networks

Sep 02, 2021

Abstract: Message-Passing Neural Networks (MPNNs), the most prominent Graph Neural Network (GNN) framework, celebrate much success in the analysis of graph-structured data. Concurrently, the sparsification of Neural Network models attracts a great amount of academic and industrial interest. In this paper, we conduct a structured study of the effect of sparsification on the trainable part of MPNNs known as the Update step. To this end, we design a series of models to successively sparsify the linear transform in the Update step. Specifically, we propose the ExpanderGNN model with a tuneable sparsification rate and the Activation-Only GNN, which has no linear transform in the Update step. In agreement with a growing trend in the literature, the sparsification paradigm is changed by initialising sparse neural network architectures rather than expensively sparsifying already trained architectures. Our novel benchmark models enable a better understanding of the influence of the Update step on model performance and outperform existing simplified benchmark models such as the Simple Graph Convolution. The ExpanderGNNs, and in some cases the Activation-Only models, achieve performance on par with their vanilla counterparts on several downstream tasks while containing significantly fewer trainable parameters. In experiments with matching parameter numbers, our benchmark models outperform the state-of-the-art GNN models. Our code is publicly available at: https://github.com/ChangminWu/ExpanderGNN.

Learning to Maximize Influence

Aug 10, 2021

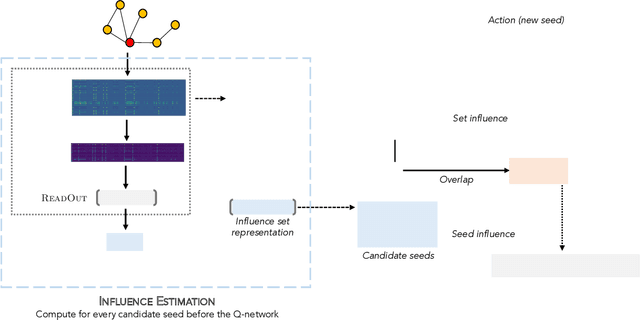

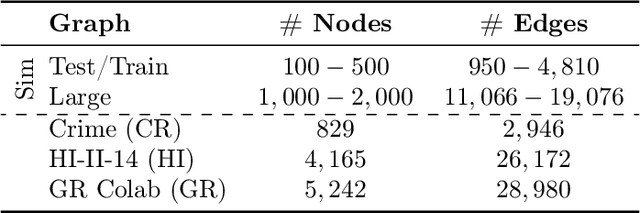

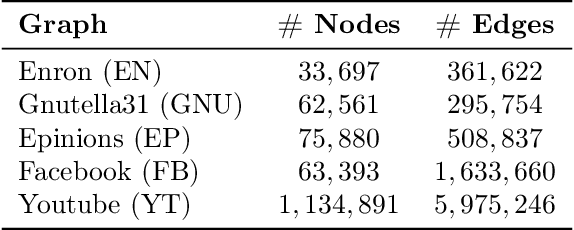

Abstract: As the field of machine learning for combinatorial optimization advances, traditional problems resurface and are readdressed through this new perspective. The overwhelming majority of the literature focuses on small graph problems, while several real-world problems involve large graphs. Here, we focus on two such problems that are related: influence estimation, a #P-hard counting problem, and influence maximization, an NP-hard problem. We develop GLIE, a Graph Neural Network (GNN) that inherently parameterizes an upper bound of influence estimation and train it on small simulated graphs. Experiments show that GLIE can provide accurate predictions faster than the alternatives for graphs 10 times larger than the training set. More importantly, it can be used on arbitrarily large graphs for influence maximization, as the predictions can effectively rank seed sets even when the accuracy deteriorates. To showcase this, we propose a version of a standard Influence Maximization (IM) algorithm where we substitute traditional influence estimation with the predictions of GLIE. We also transfer GLIE into a reinforcement learning model that learns how to choose seeds to maximize influence sequentially using GLIE's hidden representations and predictions. The final results show that the proposed methods surpass a previous GNN-RL approach and perform on par with a state-of-the-art IM algorithm.
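The two problems named in the abstract can be illustrated with a simulation-based baseline. The sketch below estimates influence by Monte Carlo simulation and plugs that estimator into a plain greedy seed selection. It assumes the independent cascade diffusion model with one uniform activation probability, which the abstract does not specify, and it is not GLIE: the `estimator` argument only marks the place where a learned predictor could be substituted. All function names are hypothetical.

```python
import random


def simulate_cascade(graph, seeds, prob, rng):
    """One independent-cascade run; returns the number of activated nodes."""
    active = set(seeds)
    frontier = list(active)
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph.get(u, ()):
                # Each newly active node gets one chance to activate each neighbour.
                if v not in active and rng.random() < prob:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return len(active)


def estimate_influence(graph, seeds, prob=0.1, n_sims=1000, seed=0):
    """Monte Carlo estimate of the expected spread of a seed set."""
    rng = random.Random(seed)
    total = sum(simulate_cascade(graph, seeds, prob, rng) for _ in range(n_sims))
    return total / n_sims


def greedy_seeds(graph, k, estimator):
    """Pick k seeds, each time adding the node with the best estimated spread."""
    nodes = set(graph) | {v for nbrs in graph.values() for v in nbrs}
    chosen = []
    for _ in range(k):
        candidates = sorted(nodes - set(chosen))
        best = max(candidates, key=lambda v: estimator(graph, chosen + [v]))
        chosen.append(best)
    return chosen


# Directed graph as adjacency lists: node -> list of out-neighbours.
graph = {0: [1, 2], 1: [3], 2: [], 3: [], 4: [5], 5: []}
print(estimate_influence(graph, [0], prob=0.5))
print(greedy_seeds(graph, 2, lambda g, s: estimate_influence(g, s, prob=0.5, n_sims=200)))
```

Every greedy step calls the estimator once per candidate node, so the simulation cost grows quickly with graph size; that cost is what motivates replacing the simulation with a fast learned predictor.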
