Michael Tanner

Oxford Robotics Institute

Meshed Up: Learnt Error Correction in 3D Reconstructions

Jan 27, 2018

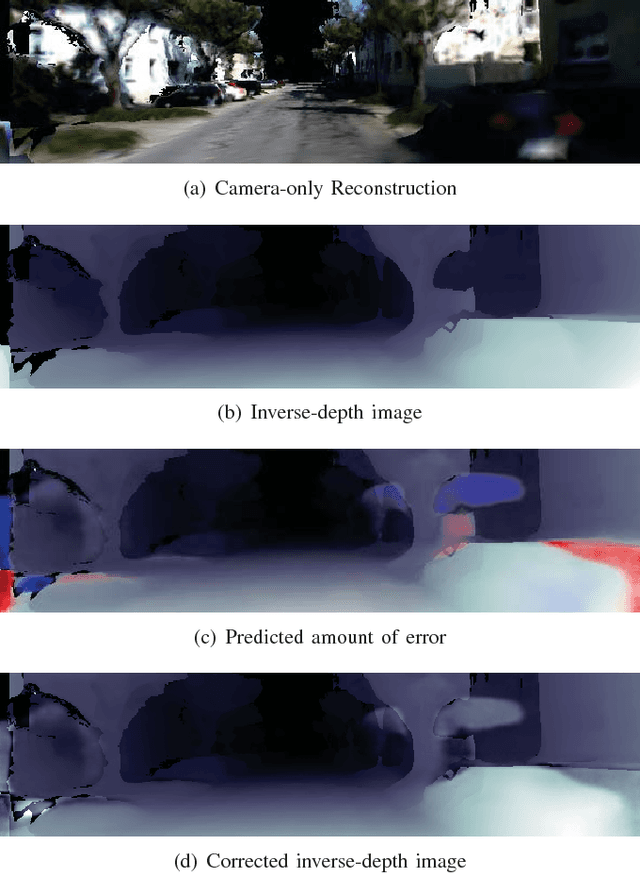

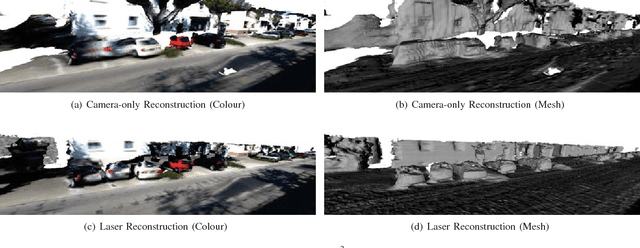

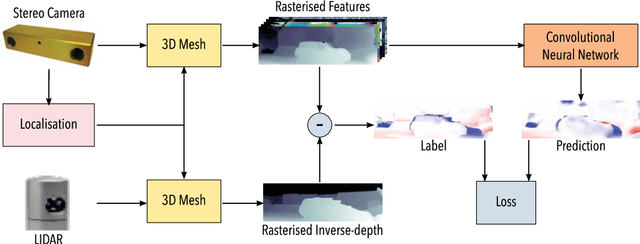

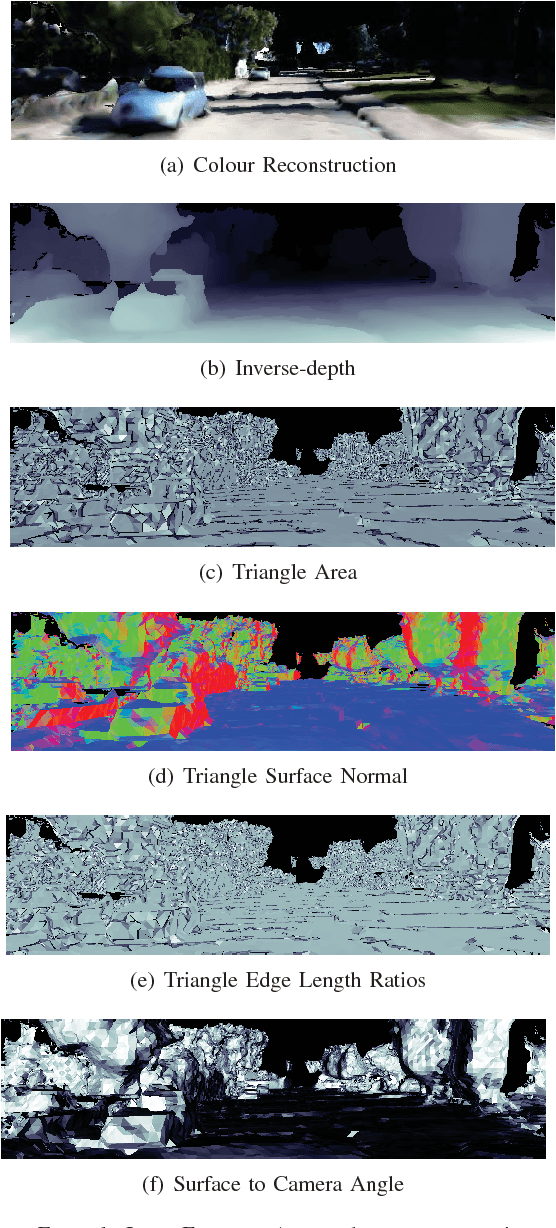

Abstract: Dense reconstructions often contain errors, which prior work has so far minimised by using high-quality sensors and regularising the output. Nevertheless, errors still persist. This paper proposes a machine learning technique to identify errors in three-dimensional (3D) meshes. Beyond simply identifying errors, our method quantifies both the magnitude and the direction of depth estimate errors when viewing the scene. This enables us to improve the reconstruction accuracy. We train a suitably deep network architecture with two 3D meshes: a high-quality laser reconstruction and a lower-quality stereo image reconstruction. The network predicts the amount of error in the lower-quality reconstruction with respect to the high-quality one, having only viewed the former through its input. We evaluate our approach by correcting two-dimensional (2D) inverse-depth images extracted from the 3D model, and show that our method improves the quality of these depth reconstructions, reducing their RMSE by up to 10% in relative terms.
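To make the evaluation metric concrete, the following is a minimal sketch of how a relative RMSE improvement on inverse-depth values could be computed. It is not the paper's implementation: the helper names (`rmse`, `correct`, `relative_improvement`), the toy values, and the sign convention (predicted error = stereo estimate minus laser reference) are all assumptions made for illustration.

```python
import math


def rmse(estimate, reference):
    """Root-mean-square error between two equally sized sequences."""
    if len(estimate) != len(reference):
        raise ValueError("inputs must have the same length")
    squared = ((e - r) ** 2 for e, r in zip(estimate, reference))
    return math.sqrt(sum(squared) / len(estimate))


def correct(inv_depth, predicted_error):
    """Subtract a signed per-pixel error prediction from inverse-depth values.

    Assumes the prediction approximates (estimate - reference).
    """
    return [d - e for d, e in zip(inv_depth, predicted_error)]


def relative_improvement(rmse_before, rmse_after):
    """Fraction by which the RMSE dropped, relative to the uncorrected RMSE."""
    return (rmse_before - rmse_after) / rmse_before


# Toy, flattened inverse-depth images in 1/m (hypothetical values).
laser = [0.10, 0.20, 0.25, 0.50]              # high-quality reference
stereo = [0.12, 0.18, 0.30, 0.46]             # lower-quality estimate
predicted_error = [0.01, -0.01, 0.03, -0.02]  # stand-in for a network output

corrected = correct(stereo, predicted_error)
before = rmse(stereo, laser)
after = rmse(corrected, laser)
print(f"RMSE before: {before:.4f}, after: {after:.4f}")
print(f"relative improvement: {relative_improvement(before, after):.1%}")
```

The toy numbers are chosen so that the correction helps; they say nothing about the results reported in the abstract.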

DENSER Cities: A System for Dense Efficient Reconstructions of Cities

Apr 13, 2016

Abstract: This paper is about the efficient generation of dense, colored models of city-scale environments from range data and, in particular, stereo cameras. Better maps make for better understanding; better understanding leads to better robots, but this comes at a cost. The computational and memory requirements of large dense models can be prohibitive. We provide the theory and the system needed to create city-scale dense reconstructions. To do so, we apply a regularizer over a compressed 3D data structure while dealing with the complex boundary conditions this induces during the data-fusion stage. We show that only with these considerations can we swiftly create neat, large, "well behaved" reconstructions. We evaluate our system using the KITTI dataset and provide statistics for the metric errors in all surfaces created compared to those measured with a 3D laser. Our regularizer reduces the median error by 40% in 3.4 km of dense reconstructions with a median accuracy of 6 cm. For subjective analysis, we provide a qualitative review of 6.1 km of our dense reconstructions in an attached video. These are the largest dense reconstructions from a single passive camera we are aware of in the literature.
