Meilian Chen

LoRA-PAR: A Flexible Dual-System LoRA Partitioning Approach to Efficient LLM Fine-Tuning

Jul 28, 2025

Abstract:Large-scale generative models like DeepSeek-R1 and OpenAI-O1 benefit substantially from chain-of-thought (CoT) reasoning, yet pushing their performance typically requires vast data, large model sizes, and full-parameter fine-tuning. While parameter-efficient fine-tuning (PEFT) helps reduce cost, most existing approaches primarily address domain adaptation or layer-wise allocation rather than explicitly tailoring data and parameters to different response demands. Inspired by "Thinking, Fast and Slow," which characterizes two distinct modes of thought, System 1 (fast, intuitive, often automatic) and System 2 (slower, more deliberative and analytic), we draw an analogy that different "subregions" of an LLM's parameters might similarly specialize for tasks that demand quick, intuitive responses versus those requiring multi-step logical reasoning. Therefore, we propose LoRA-PAR, a dual-system LoRA framework that partitions both data and parameters by System 1 or System 2 demands, using fewer yet more focused parameters for each task. Specifically, we classify task data via multi-model role-playing and voting, and partition parameters based on importance scoring. We then adopt a two-stage fine-tuning strategy: first training on System 1 tasks with supervised fine-tuning (SFT) to enhance knowledge and intuition, then refining on System 2 tasks with reinforcement learning (RL) to reinforce deeper logical deliberation. Extensive experiments show that the two-stage fine-tuning strategy, SFT and RL, lowers active parameter usage while matching or surpassing SOTA PEFT baselines.
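
The two partitioning steps named in the abstract (voting over model labels, and splitting parameters by importance score) can be illustrated with a minimal sketch. The abstract does not specify the voting rule, the importance formula, or the selection threshold, so everything here is an assumption made for illustration: simple majority voting, a per-system dictionary of precomputed scores, a hypothetical `keep_fraction` cutoff, and a "shared" group for parameters that rank highly for both systems.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label chosen by most voters (assumed rule: simple majority)."""
    return Counter(labels).most_common(1)[0][0]


def partition_by_importance(scores_s1, scores_s2, keep_fraction=0.5):
    """Split parameter names into System 1 only, System 2 only, and shared.

    scores_s1 / scores_s2 map parameter names to hypothetical importance
    scores computed on System 1 and System 2 data respectively.
    keep_fraction is an assumed cutoff, not a value from the paper.
    """
    def top(scores):
        # Keep at least one parameter, ranked by descending importance.
        k = max(1, int(len(scores) * keep_fraction))
        ranked = sorted(scores, key=scores.get, reverse=True)
        return set(ranked[:k])

    top1, top2 = top(scores_s1), top(scores_s2)
    return top1 - top2, top2 - top1, top1 & top2


# Three hypothetical "voter" models label one task sample.
label = majority_vote(["system1", "system2", "system1"])

# Toy importance scores for four hypothetical LoRA parameter groups.
s1_only, s2_only, shared = partition_by_importance(
    {"a": 0.9, "b": 0.5, "c": 0.1, "d": 0.05},
    {"a": 0.1, "b": 0.8, "c": 0.7, "d": 0.0},
)
```

Under these assumptions, a two-stage schedule would update the System 1 groups during SFT and the System 2 groups during RL; how shared parameters are treated is left open here because the abstract does not say.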

A Comprehensive Survey on Evaluating Large Language Model Applications in the Medical Industry

Apr 24, 2024

Abstract:Since the inception of the Transformer architecture in 2017, Large Language Models (LLMs) such as GPT and BERT have evolved significantly, impacting various industries with their advanced capabilities in language understanding and generation. These models have shown potential to transform the medical field, highlighting the necessity for specialized evaluation frameworks to ensure their effective and ethical deployment. This comprehensive survey delineates the extensive application and requisite evaluation of LLMs within healthcare, emphasizing the critical need for empirical validation to fully exploit their capabilities in enhancing healthcare outcomes. Our survey is structured to provide an in-depth analysis of LLM applications across clinical settings, medical text data processing, research, education, and public health awareness. We begin by exploring the roles of LLMs in different medical applications, detailing how they are evaluated based on their performance in tasks such as clinical application, medical text data processing, information retrieval, data analysis, medical scientific writing, and educational content generation. The subsequent sections delve into the methodologies employed in these evaluations, discussing the benchmarks and metrics used to assess the models' effectiveness, accuracy, and ethical alignment. Through this survey, we aim to equip healthcare professionals, researchers, and policymakers with a comprehensive understanding of the potential strengths and limitations of LLMs in medical applications. By providing detailed insights into the evaluation processes and the challenges faced in integrating LLMs into healthcare, this survey seeks to guide the responsible development and deployment of these powerful models, ensuring they are harnessed to their full potential while maintaining stringent ethical standards.

Training like Playing: A Reinforcement Learning And Knowledge Graph-based framework for building Automatic Consultation System in Medical Field

Jun 14, 2021

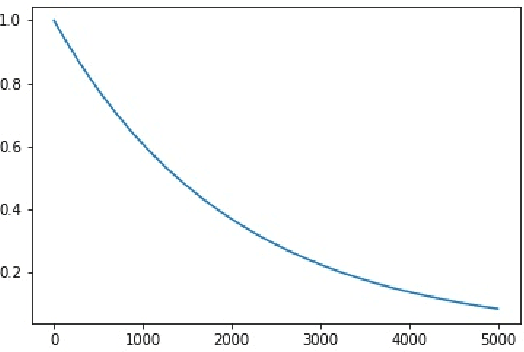

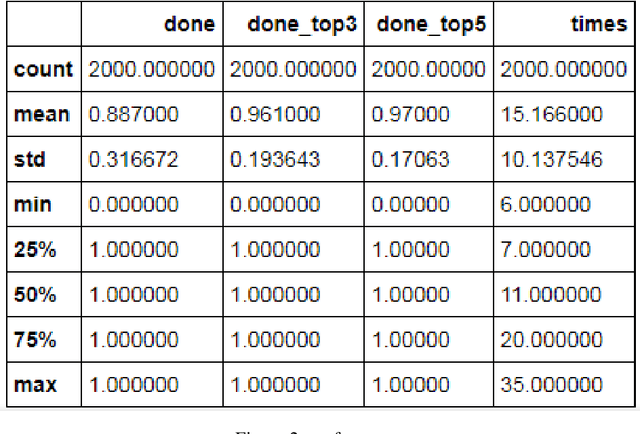

Abstract:We introduce a framework for an AI-based medical consultation system with knowledge graph embedding and reinforcement learning components, together with an implementation of it. Our implementation leverages knowledge organized as a graph to make diagnoses according to evidence collected from patients recurrently and dynamically. In the experiment we designed to evaluate its performance, it achieves a good result. More importantly, to obtain better performance, researchers can build on this framework with their own innovative ideas, well-designed experiments, and even clinical trials.
