Matthias A. F. Gsell

Non-Intrusive Parametrized-Background Data-Weak Reconstruction of Cardiac Displacement Fields from Sparse MRI-like Observations

Sep 18, 2025

Abstract: Personalized cardiac diagnostics require accurate reconstruction of myocardial displacement fields from sparse clinical imaging data, yet current methods often demand intrusive access to computational models. In this work, we apply the non-intrusive Parametrized-Background Data-Weak (PBDW) approach to three-dimensional (3D) cardiac displacement field reconstruction from limited Magnetic Resonance Image (MRI)-like observations. Our implementation requires only solution snapshots -- no governing equations, assembly routines, or solver access -- enabling immediate deployment across commercial and research codes using different constitutive models. Additionally, we introduce two enhancements: an H-size minibatch worst-case Orthogonal Matching Pursuit (wOMP) algorithm that improves Sensor Selection (SS) computational efficiency while maintaining reconstruction accuracy, and memory optimization techniques exploiting block matrix structures in vectorial problems. We demonstrate the effectiveness of the method through validation on a 3D left ventricular model with simulated scar tissue. Starting with noise-free reconstruction, we systematically incorporate Gaussian noise and spatial sparsity mimicking realistic MRI acquisition protocols. Results show exceptional accuracy in noise-free conditions (relative L2 error of order O(1e-5)), robust performance with 10% noise (relative L2 error of order O(1e-2)), and effective reconstruction from sparse measurements (relative L2 error of order O(1e-2)). The online reconstruction achieves a four-order-of-magnitude computational speed-up compared to full Finite Element (FE) simulations, with reconstruction times under one tenth of a second for sparse scenarios, demonstrating significant potential for integration into clinical cardiac modeling workflows.
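As a rough illustration of the snapshot-only reconstruction idea described in this abstract, the following is a minimal sketch of a noise-free PBDW state estimate. It is not the authors' implementation: it assumes a Euclidean inner product, pointwise sensors (so each sensor simply reads one entry of the state vector), a random orthonormal background basis in place of one built from simulation snapshots, and randomly placed sensors instead of wOMP-selected ones. The function name `pbdw_reconstruct` and all variable names are hypothetical.

```python
import numpy as np

def pbdw_reconstruct(Z, sensor_idx, y):
    """Noise-free PBDW estimate, assuming pointwise sensors and a Euclidean inner product.

    Z          : (n, N) background basis (would come from snapshots in practice)
    sensor_idx : (M,) unique indices of the observed entries, with M >= N
    y          : (M,) observed values
    """
    K = Z[sensor_idx, :]                              # sensors applied to the background basis
    z_coef, *_ = np.linalg.lstsq(K, y, rcond=None)    # background coefficients (least squares)
    eta = y - K @ z_coef                              # update coefficients: misfit at the sensors
    u = Z @ z_coef                                    # background component of the estimate
    u[sensor_idx] += eta                              # update component, supported on the sensors
    return u

# Synthetic stand-in data (assumption: no cardiac model is involved here).
rng = np.random.default_rng(0)
n, N, M = 200, 5, 20
Z, _ = np.linalg.qr(rng.standard_normal((n, N)))      # orthonormal background basis
u_true = Z @ rng.standard_normal(N)                   # a state lying in the background space
sensor_idx = rng.choice(n, size=M, replace=False)     # random sensor locations
y = u_true[sensor_idx]                                # noise-free observations

u_rec = pbdw_reconstruct(Z, sensor_idx, y)
rel_err = np.linalg.norm(u_rec - u_true) / np.linalg.norm(u_true)
print(f"relative L2 error: {rel_err:.2e}")
```

Under these assumptions the least-squares step and the sensor misfit together satisfy the PBDW saddle-point conditions, and the only online cost is a small dense solve, which is consistent in spirit with the fast online stage the abstract reports. With noisy data or a state outside the background space, the update term absorbs the sensor misfit rather than vanishing.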

CaRe-CNN: Cascading Refinement CNN for Myocardial Infarct Segmentation with Microvascular Obstructions

Dec 19, 2023

Abstract: Late gadolinium enhanced (LGE) magnetic resonance (MR) imaging is widely established to assess the viability of myocardial tissue of patients after acute myocardial infarction (MI). We propose the Cascading Refinement CNN (CaRe-CNN), which is a fully 3D, end-to-end trained, 3-stage CNN cascade that exploits the hierarchical structure of such labeled cardiac data. Throughout the three stages of the cascade, the label definition changes and CaRe-CNN learns to gradually refine its intermediate predictions accordingly. Furthermore, to obtain more consistent qualitative predictions, we propose a series of post-processing steps that take anatomical constraints into account. Our CaRe-CNN was submitted to the FIMH 2023 MYOSAIQ challenge, where it ranked second out of 18 participating teams. CaRe-CNN showed great improvements most notably when segmenting the difficult but clinically most relevant myocardial infarct tissue (MIT) as well as microvascular obstructions (MVO). When computing the average scores over all labels, our method obtained the best score in eight out of ten metrics. Thus, accurate cardiac segmentation after acute MI via our CaRe-CNN allows generating patient-specific models of the heart serving as an important step towards personalized medicine.

MedalCare-XL: 16,900 healthy and pathological 12 lead ECGs obtained through electrophysiological simulations

Nov 29, 2022

Abstract: Mechanistic cardiac electrophysiology models allow for personalized simulations of the electrical activity in the heart and the ensuing electrocardiogram (ECG) on the body surface. As such, synthetic signals possess known ground truth labels of the underlying disease and can be employed for validation of machine learning ECG analysis tools in addition to clinical signals. Recently, synthetic ECGs were used to enrich sparse clinical data or even replace them completely during training, leading to improved performance on real-world clinical test data. We thus generated a novel synthetic database comprising a total of 16,900 12-lead ECGs based on electrophysiological simulations equally distributed into healthy control and 7 pathology classes. The pathological case of myocardial infarction had 6 sub-classes. A comparison of extracted features between the virtual cohort and a publicly available clinical ECG database demonstrated that the synthetic signals represent clinical ECGs for healthy and pathological subpopulations with high fidelity. The ECG database is split into training, validation, and test folds for development and objective assessment of novel machine learning algorithms.

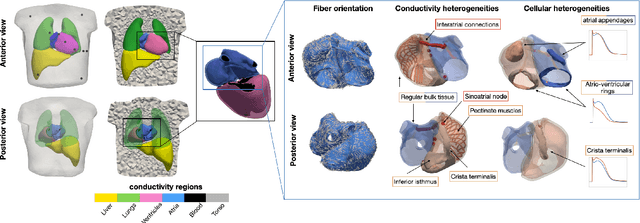

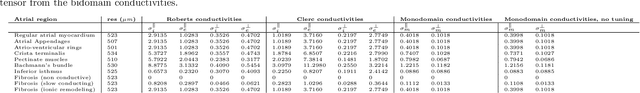

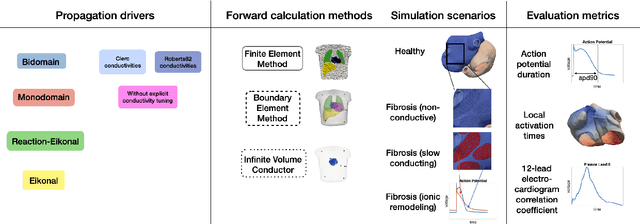

Comparison of propagation models and forward calculation methods on cellular, tissue and organ scale atrial electrophysiology

Mar 15, 2022

Abstract: Objective: The bidomain model and the finite element method are an established standard to mathematically describe cardiac electrophysiology, but are both suboptimal choices for fast and large-scale simulations due to high computational costs. We investigate to what extent simplified approaches for propagation models (monodomain, reaction-eikonal and eikonal) and forward calculation (boundary element and infinite volume conductor) deliver markedly accelerated, yet physiologically accurate simulation results in atrial electrophysiology. Methods: We compared action potential durations, local activation times (LATs), and electrocardiograms (ECGs) for sinus rhythm simulations on healthy and fibrotically infiltrated atrial models. Results: All simplified model solutions yielded LATs and P waves in close agreement with the bidomain results. Only for the eikonal model with pre-computed action potential templates shifted in time to derive transmembrane voltages did repolarization behavior notably deviate from the bidomain results. ECGs calculated with the boundary element method were characterized by correlation coefficients >0.9 compared to the finite element method. The infinite volume conductor method led to lower correlation coefficients caused predominantly by systematic overestimations of P wave amplitudes in the precordial leads. Conclusion: Our results demonstrate that the eikonal model yields accurate LATs and, combined with the boundary element method, precise ECGs compared to markedly more expensive full bidomain simulations. However, for an accurate representation of atrial repolarization dynamics, diffusion terms must be accounted for in simplified models. Significance: Simulations of atrial LATs and ECGs can be notably accelerated to clinically feasible time frames at high accuracy by resorting to the eikonal and boundary element methods.
