Answering while Summarizing: Multi-task Learning for Multi-hop QA with Evidence Extraction

May 29, 2019 · Kosuke Nishida, Kyosuke Nishida, Masaaki Nagata, Atsushi Otsuka, Itsumi Saito, Hisako Asano, Junji Tomita

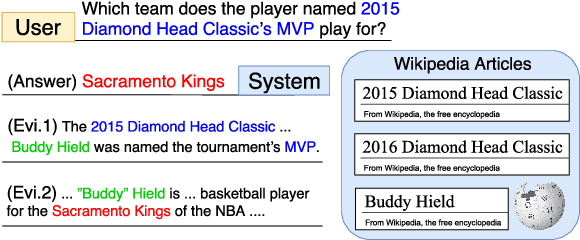

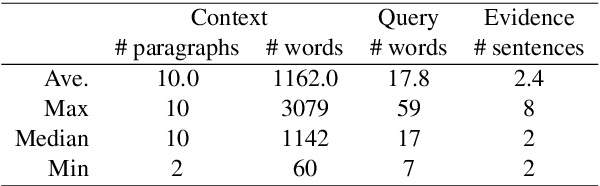

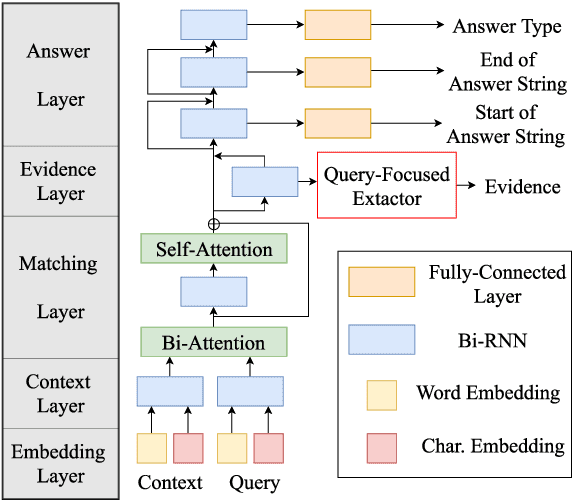

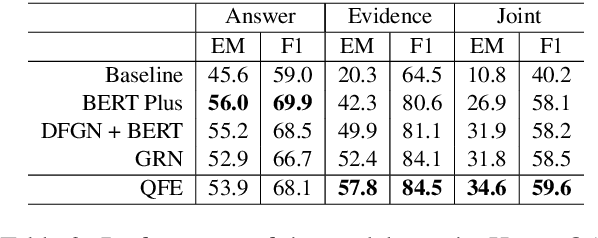

Question answering (QA) using textual sources for purposes such as reading comprehension (RC) has attracted much attention. This study focuses on the task of explainable multi-hop QA, which requires the system to return the answer with evidence sentences by reasoning and gathering disjoint pieces of the reference texts. It proposes the Query Focused Extractor (QFE) model for evidence extraction and uses multi-task learning with the QA model. QFE is inspired by extractive summarization models; whereas the existing method extracts each evidence sentence independently, QFE extracts evidence sentences sequentially by using an RNN with an attention mechanism on the question sentence. This enables QFE to consider the dependency among the evidence sentences and cover important information in the question sentence. Experimental results show that QFE with a simple RC baseline model achieves a state-of-the-art evidence extraction score on HotpotQA. Although designed for RC, it also achieves a state-of-the-art evidence extraction score on FEVER, which is a recognizing textual entailment task on a large textual database.

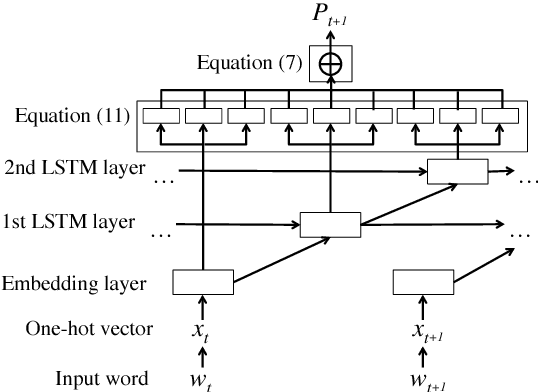

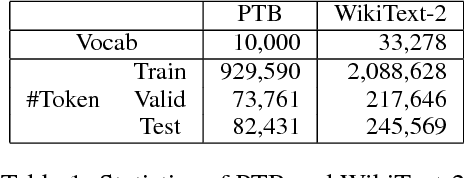

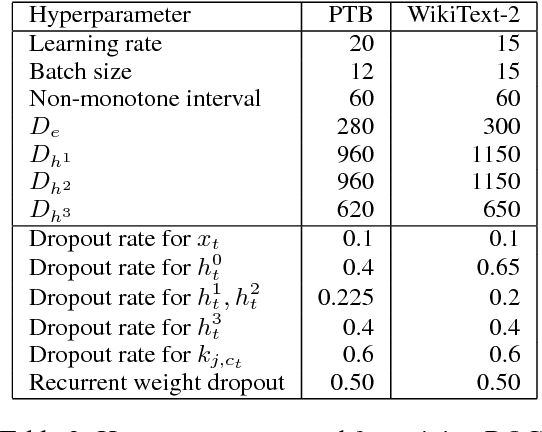

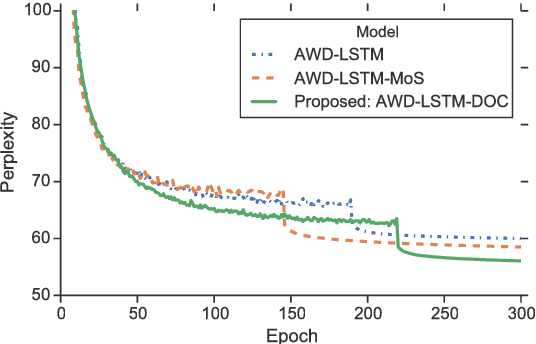

Direct Output Connection for a High-Rank Language Model

Aug 31, 2018 · Sho Takase, Jun Suzuki, Masaaki Nagata

This paper proposes a state-of-the-art recurrent neural network (RNN) language model that combines probability distributions computed not only from the final RNN layer but also from the middle layers. The proposed method raises the expressive power of a language model based on the matrix factorization interpretation of language modeling introduced by Yang et al. (2018). It improves on the current state-of-the-art language model and achieves the best score on the Penn Treebank and WikiText-2, which are the standard benchmark datasets. Moreover, we show that the proposed method also benefits two application tasks: machine translation and headline generation. Our code is publicly available at: https://github.com/nttcslab-nlp/doc_lm.
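The idea of combining distributions from several layers can be illustrated with a small sketch. It assumes each layer has already been projected to vocabulary-sized scores and that the mixture weights are fixed constants summing to one; both are simplifications for illustration, and the function names are hypothetical rather than taken from the released code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def combine_layer_distributions(layer_logits, mixture_weights):
    """Return the weighted sum of per-layer softmax distributions.

    layer_logits: one list of vocabulary scores per layer (assumed input).
    mixture_weights: non-negative weights summing to 1 (assumed fixed here).
    """
    if len(layer_logits) != len(mixture_weights):
        raise ValueError("need exactly one weight per layer")
    if abs(sum(mixture_weights) - 1.0) > 1e-9:
        raise ValueError("mixture weights must sum to 1")
    combined = [0.0] * len(layer_logits[0])
    for logits, weight in zip(layer_logits, mixture_weights):
        for i, prob in enumerate(softmax(logits)):
            combined[i] += weight * prob
    return combined


# Two layers, three-word vocabulary.
final_layer = [2.0, 0.5, -1.0]
middle_layer = [0.0, 1.5, 0.3]
mixed = combine_layer_distributions([final_layer, middle_layer], [0.7, 0.3])
```

Because every component is a valid distribution and the weights sum to one, the mixture is itself a valid distribution, while no longer being restricted to a single softmax over one hidden state.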

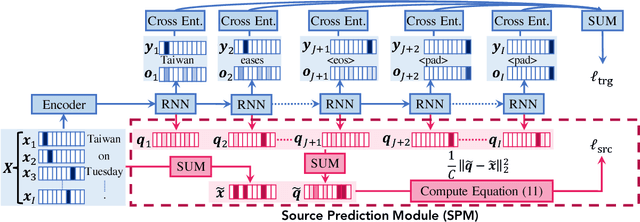

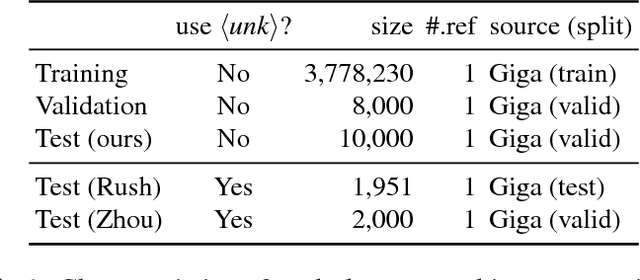

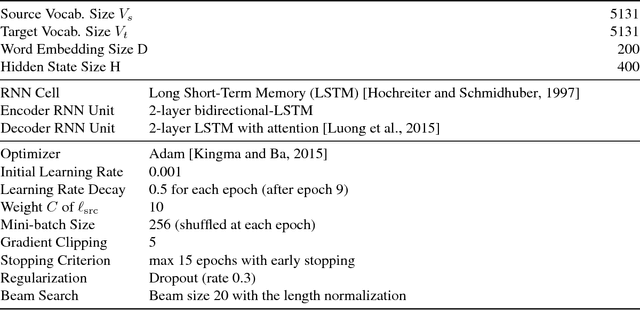

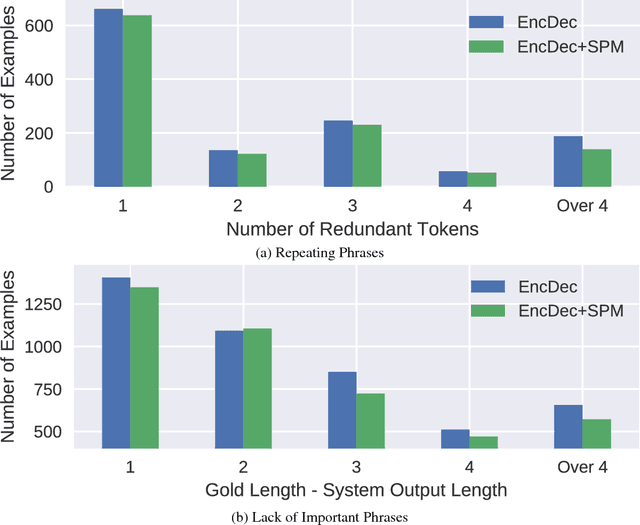

Source-side Prediction for Neural Headline Generation

Dec 22, 2017 · Shun Kiyono, Sho Takase, Jun Suzuki, Naoaki Okazaki, Kentaro Inui, Masaaki Nagata

The encoder-decoder model is widely used in natural language generation tasks. However, the model sometimes suffers from repeated redundant generation, misses important phrases, and includes irrelevant entities. To address these problems, we propose a novel source-side token prediction module. Our method jointly estimates the probability distributions over source and target vocabularies to capture a correspondence between source and target tokens. The experiments show that the proposed model outperforms the current state-of-the-art method in the headline generation task. Additionally, we show that our method can learn a reasonable token-wise correspondence without knowing any true alignments.

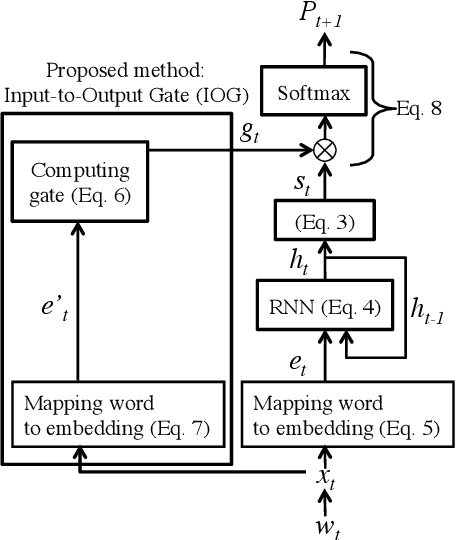

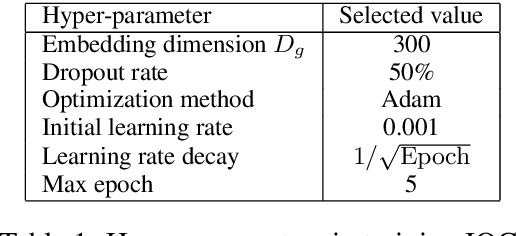

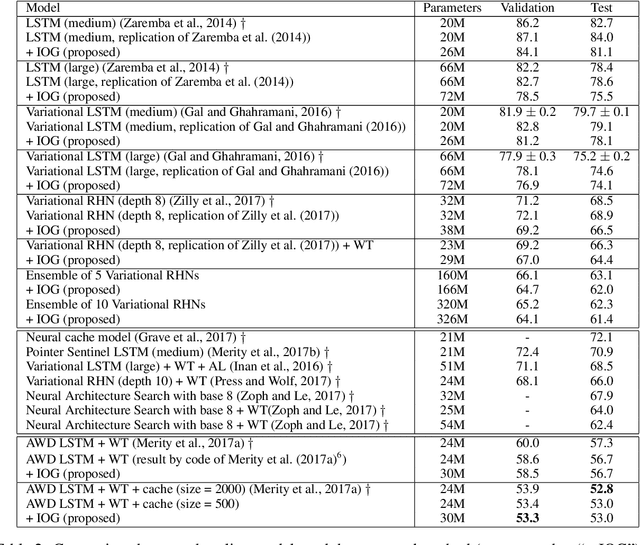

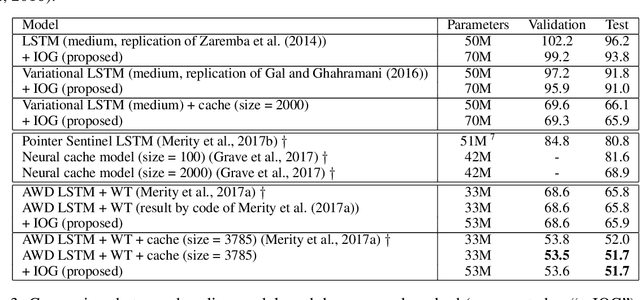

Input-to-Output Gate to Improve RNN Language Models

Sep 28, 2017 · Sho Takase, Jun Suzuki, Masaaki Nagata

This paper proposes a reinforcing method that refines the output layers of existing Recurrent Neural Network (RNN) language models. We refer to our proposed method as Input-to-Output Gate (IOG). IOG has an extremely simple structure, and thus, can be easily combined with any RNN language models. Our experiments on the Penn Treebank and WikiText-2 datasets demonstrate that IOG consistently boosts the performance of several different types of current topline RNN language models.
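The abstract does not spell out the gate's form, so the following is only one plausible reading of the name: a sigmoid gate computed from the input word embedding rescales the base model's output scores elementwise. The parameter shapes and function names are assumptions made for illustration.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def input_to_output_gate(input_embedding, gate_weights, gate_bias):
    """Compute one gate value in (0, 1) per output dimension.

    gate_weights has one row per output dimension (assumed shape).
    """
    return [
        sigmoid(sum(w * e for w, e in zip(row, input_embedding)) + b)
        for row, b in zip(gate_weights, gate_bias)
    ]


def apply_gate(base_scores, gate):
    """Rescale the base model's output scores elementwise by the gate."""
    return [g * s for g, s in zip(gate, base_scores)]


# Zero weights and biases give a gate of 0.5 everywhere.
embedding = [0.2, -0.4]
gate = input_to_output_gate(embedding, [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
refined = apply_gate([2.0, -4.0], gate)
```

Since the gate depends only on the current input and multiplies an existing output, a module of this kind can be attached to a trained language model without changing its recurrent part, which is consistent with the abstract's claim that IOG combines easily with existing models.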

Cutting-off Redundant Repeating Generations for Neural Abstractive Summarization

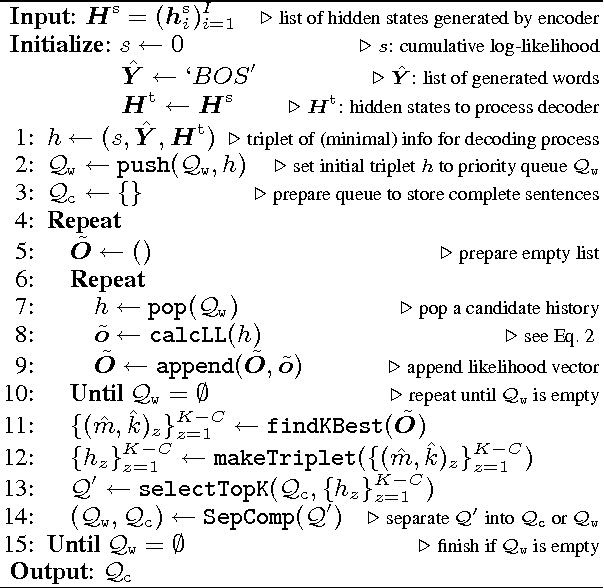

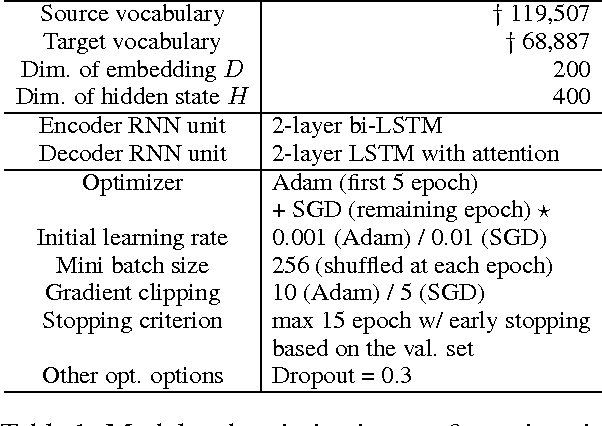

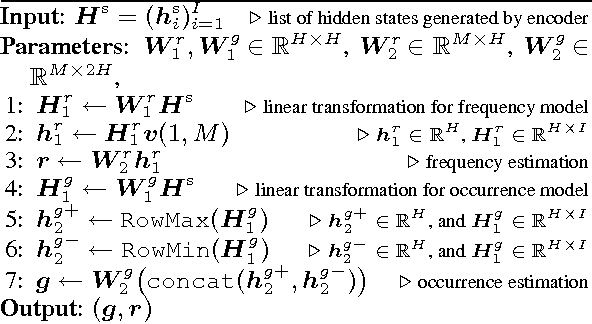

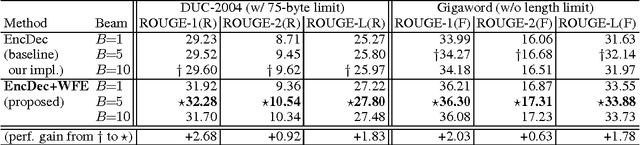

Feb 13, 2017Jun Suzuki, Masaaki Nagata

This paper tackles the reduction of redundant repeating generation that is often observed in RNN-based encoder-decoder models. Our basic idea is to jointly estimate the upper-bound frequency of each target vocabulary in the encoder and control the output words based on the estimation in the decoder. Our method shows significant improvement over a strong RNN-based encoder-decoder baseline and achieved its best results on an abstractive summarization benchmark.
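A toy sketch of the control step follows. It assumes the upper-bound frequencies are already given as integers and that the decoder simply forbids any word whose budget is used up; the paper's actual estimation and control mechanism may differ, and the function name is hypothetical.

```python
def constrained_greedy_decode(step_scores, frequency_upper_bound):
    """Greedy decoding that never exceeds a per-word frequency budget.

    step_scores: one dict of word -> score per decoding step (assumed input).
    frequency_upper_bound: word -> maximum allowed occurrences (assumed given).
    """
    remaining = dict(frequency_upper_bound)
    output = []
    for scores in step_scores:
        # Keep only words that still have budget left.
        allowed = {w: s for w, s in scores.items() if remaining.get(w, 0) > 0}
        if not allowed:
            break
        word = max(allowed, key=allowed.get)
        output.append(word)
        remaining[word] -= 1
    return output


steps = [
    {"tokyo": 0.9, "stocks": 0.1},
    {"tokyo": 0.8, "stocks": 0.2},
    {"tokyo": 0.7, "rise": 0.3},
]
bounds = {"tokyo": 1, "stocks": 1, "rise": 1}
headline = constrained_greedy_decode(steps, bounds)
```

Without the budget, plain greedy decoding would emit "tokyo" three times; with it, the repeated word is cut off after its first use.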

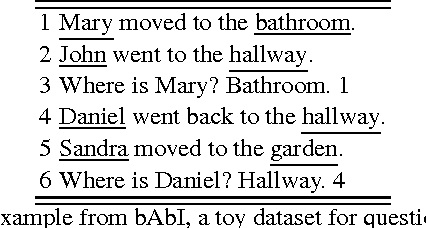

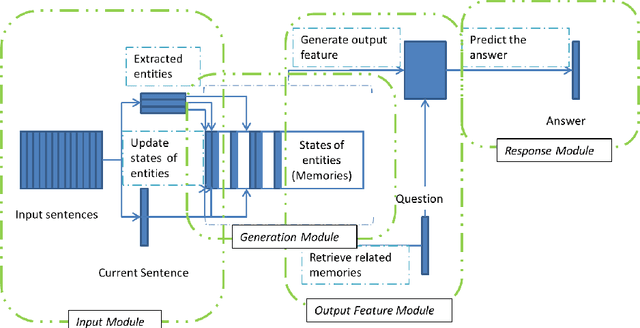

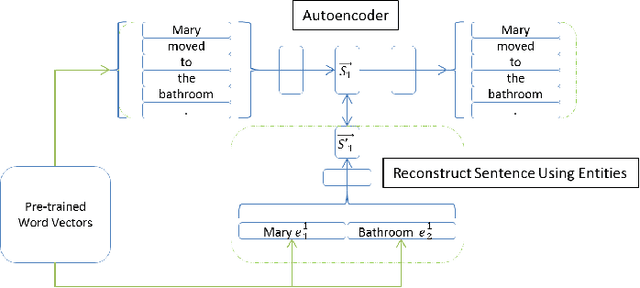

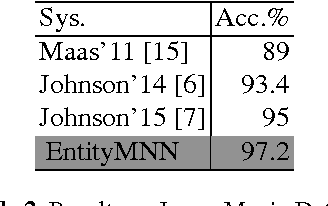

Reading Comprehension using Entity-based Memory Network

Feb 01, 2017 · Xun Wang, Katsuhito Sudoh, Masaaki Nagata, Tomohide Shibata, Daisuke Kawahara, Sadao Kurohashi

This paper introduces a novel neural network model for question answering, the *entity-based memory network*. It enhances a neural network's ability to represent and compute over information spanning a long period by keeping records of the entities contained in the text. The core component is a memory pool that comprises the entities' states, which are continuously updated according to the input text. Questions about the input text are used to search the memory pool for related entities, and answers are then predicted from the states of the retrieved entities. Compared with previous memory network models, the proposed model is capable of handling fine-grained information and more sophisticated relations based on entities. We formulated several different tasks as question answering problems and tested the proposed model. The experiments yielded satisfactory results.

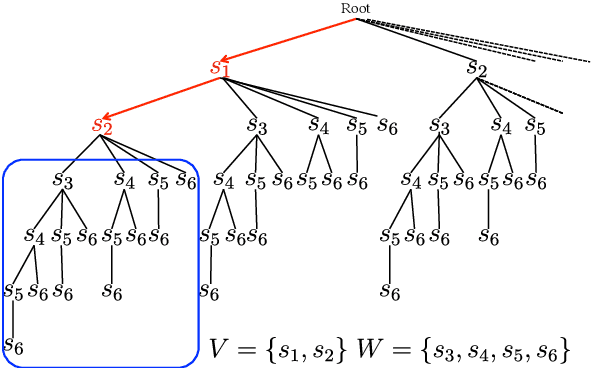

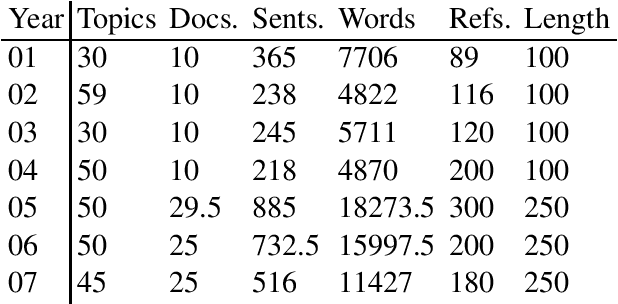

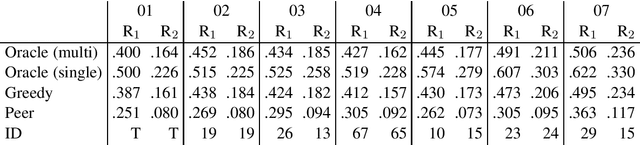

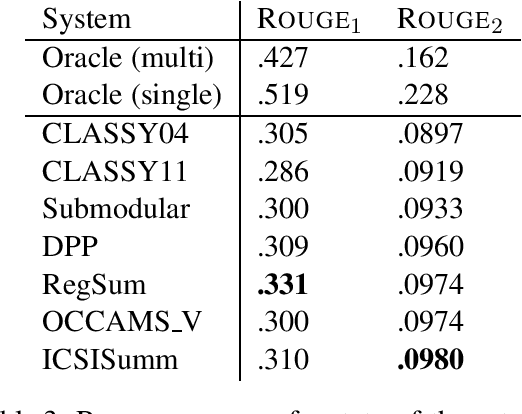

Enumeration of Extractive Oracle Summaries

Jan 06, 2017 · Tsutomu Hirao, Masaaki Nishino, Jun Suzuki, Masaaki Nagata

To analyze the limitations and the future directions of the extractive summarization paradigm, this paper proposes an Integer Linear Programming (ILP) formulation to obtain extractive oracle summaries in terms of ROUGE-N. We also propose an algorithm that enumerates all of the oracle summaries for a set of reference summaries to exploit F-measures that evaluate which system summaries contain how many sentences that are extracted as an oracle summary. Our experimental results obtained from Document Understanding Conference (DUC) corpora demonstrated the following: (1) room still exists to improve the performance of extractive summarization; (2) the F-measures derived from the enumerated oracle summaries have significantly stronger correlations with human judgment than those derived from single oracle summaries.
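To make the notion of an oracle summary concrete, here is a brute-force sketch: it scores every sentence subset within a word budget by ROUGE-N recall and returns all subsets that tie for the best score. Exhaustive search stands in for the ILP formulation and enumeration algorithm of the paper, so it is only practical for tiny inputs; the function names and the single-reference setup are assumptions.

```python
from collections import Counter
from itertools import combinations


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(summary_sentences, reference, n=1):
    """ROUGE-N recall: clipped n-gram overlap divided by reference n-grams.

    N-grams are counted within each summary sentence, not across sentences.
    """
    ref_counts = ngrams(reference, n)
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0
    summary_counts = Counter()
    for sentence in summary_sentences:
        summary_counts += ngrams(sentence, n)
    overlap = sum(min(c, summary_counts[g]) for g, c in ref_counts.items())
    return overlap / total


def enumerate_oracles(sentences, reference, max_words, n=1):
    """Return (best score, all index tuples achieving it) by exhaustive search."""
    best, oracles = -1.0, []
    for k in range(1, len(sentences) + 1):
        for idx in combinations(range(len(sentences)), k):
            chosen = [sentences[i] for i in idx]
            if sum(len(s) for s in chosen) > max_words:
                continue
            score = rouge_n(chosen, reference, n)
            if score > best + 1e-12:
                best, oracles = score, [idx]
            elif abs(score - best) <= 1e-12:
                oracles.append(idx)
    return (best, oracles) if oracles else (0.0, [])


document = [["the", "cat", "sat"], ["a", "cat", "sat"], ["dogs", "bark"]]
reference = ["the", "cat", "sat", "down"]
score, oracles = enumerate_oracles(document, reference, max_words=3)
```

When several subsets tie, as with duplicated sentences, all of them are returned, which is the situation that motivates evaluating against the full set of oracle summaries rather than a single one.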
