Maria Torres Vega

AI-assisted Automatic Jump Detection and Height Estimation in Volleyball Using a Waist-worn IMU

May 09, 2025

Abstract: The physical load of jumps plays a critical role in injury prevention for volleyball players. However, manual video analysis of jump activities is time-intensive and costly, requiring significant effort and expensive hardware setups. The advent of the inertial measurement unit (IMU) and machine learning algorithms offers a convenient and efficient alternative. Despite this, previous research has largely focused on either jump classification or physical load estimation, leaving a gap in integrated solutions. This study aims to present a pipeline to automatically detect jumps and predict heights using data from a waist-worn IMU. The pipeline leverages a Multi-Stage Temporal Convolutional Network (MS-TCN) to detect jump segments in time-series data and classify the specific jump category. Subsequently, jump heights are estimated using three downstream regression machine learning models based on the identified segments. Our method is verified on a dataset comprising 10 players and 337 jumps. Compared with VERT, a commercial device commonly used in jump landing tasks (R-squared=-1.53 in height estimation), our method not only accurately identifies jump activities and their specific types (F1-score=0.90) but also demonstrates superior performance in height prediction (R-squared=0.50). This integrated solution offers a promising tool for monitoring physical load and mitigating injury risk in volleyball players.
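The negative R-squared reported for VERT may look surprising, so a small illustration can help. The sketch below assumes the standard coefficient of determination, 1 - SS_res / SS_tot (the abstract does not state which definition was used), and the jump heights are made-up values, not data from the study. It shows that an estimator with a systematic offset can score below zero, meaning it does worse than always predicting the mean measured height.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Sum of squared errors of the estimator.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Sum of squared errors of the "always predict the mean" baseline.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical jump heights in centimetres (illustrative only).
measured = [40.0, 45.0, 50.0, 55.0, 60.0]
close_estimates = [42.0, 44.0, 51.0, 53.0, 61.0]   # small random-looking errors
biased_estimates = [55.0, 60.0, 65.0, 70.0, 75.0]  # constant +15 cm offset

print(r_squared(measured, close_estimates))   # close to 1
print(r_squared(measured, biased_estimates))  # below 0
```

In this toy case the biased estimator tracks the trend perfectly yet still gets a negative score, because R-squared penalises absolute error and not only correlation.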

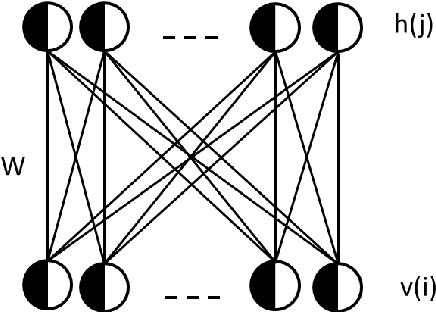

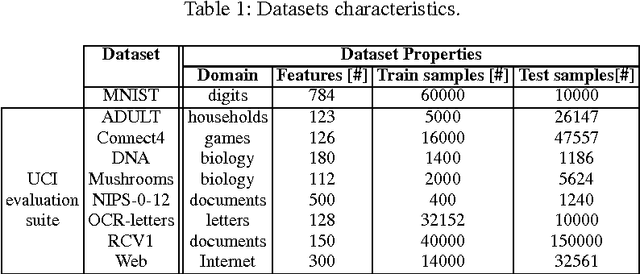

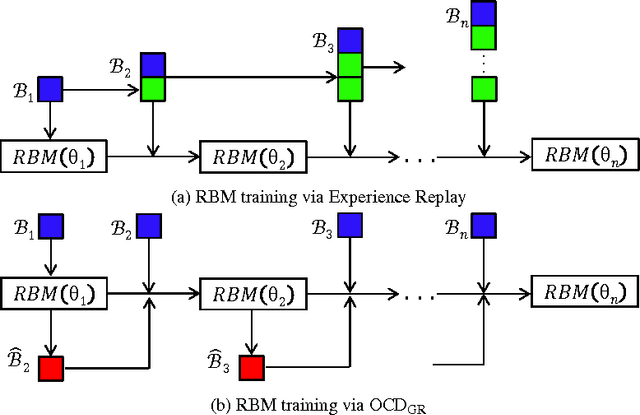

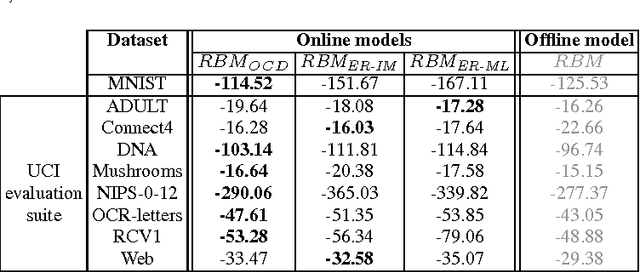

Online Contrastive Divergence with Generative Replay: Experience Replay without Storing Data

Oct 18, 2016

Abstract: Conceived in the early 1990s, Experience Replay (ER) has been shown to be a successful mechanism to allow online learning algorithms to reuse past experiences. Traditionally, ER can be applied to all machine learning paradigms (i.e., unsupervised, supervised, and reinforcement learning). Recently, ER has contributed to improving the performance of deep reinforcement learning. Yet, its application to many practical settings is still limited by the memory requirements of ER, necessary to explicitly store previous observations. To remedy this issue, we explore a novel approach, Online Contrastive Divergence with Generative Replay (OCD_GR), which uses the generative capability of Restricted Boltzmann Machines (RBMs) instead of recorded past experiences. The RBM is trained online, and does not require the system to store any of the observed data points. We compare OCD_GR to ER on 9 real-world datasets, considering a worst-case scenario (data points arriving in sorted order) as well as a more realistic one (sequential random-order data points). Our results show that in 64.28% of the cases OCD_GR outperforms ER and in the remaining 35.72% it has an almost equal performance, while having a considerably reduced space complexity (i.e., memory usage) at a comparable time complexity.

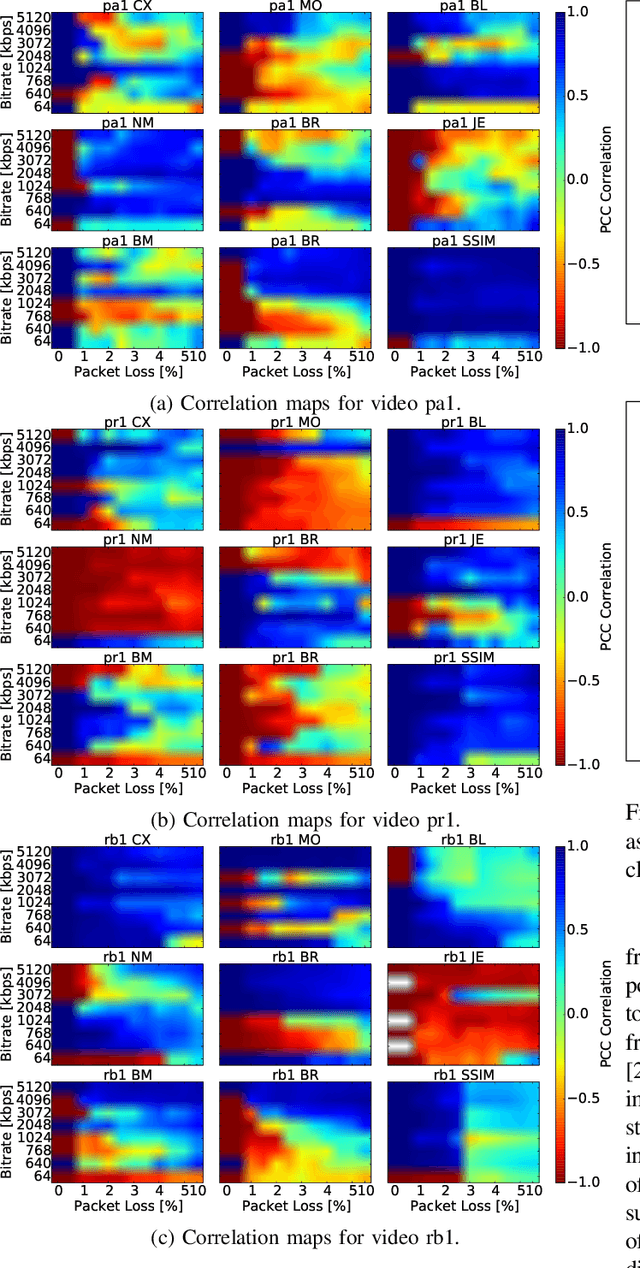

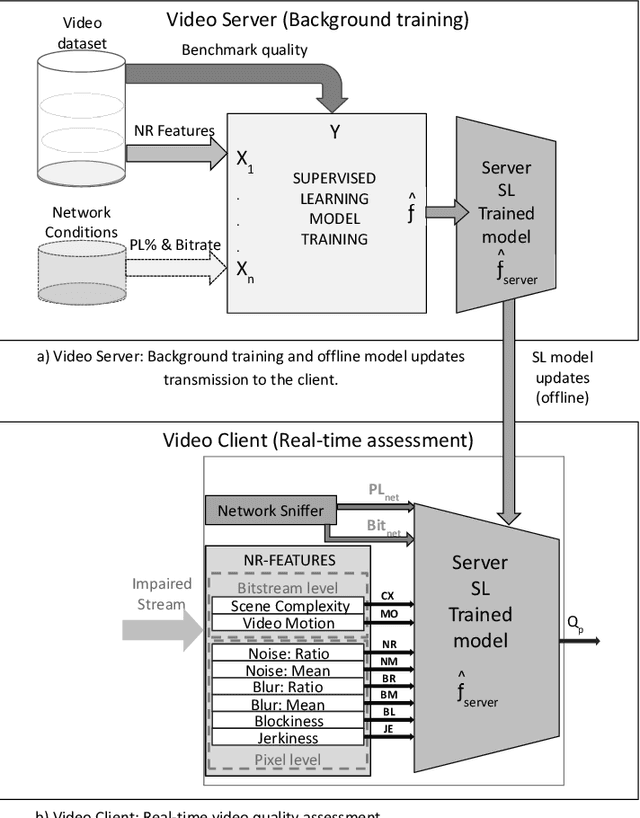

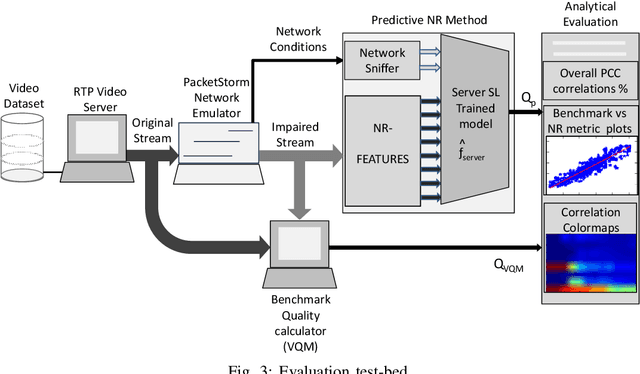

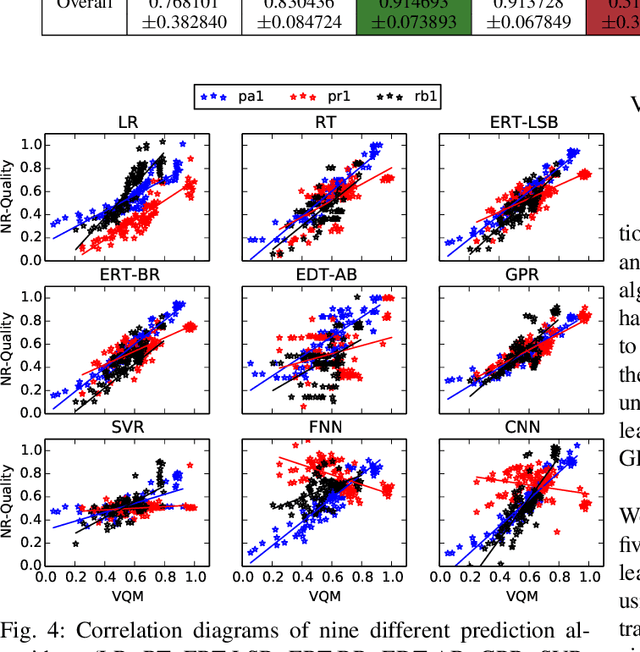

Predictive No-Reference Assessment of Video Quality

Apr 27, 2016

Abstract: Among the various means to evaluate the quality of video streams, No-Reference (NR) methods have low computational cost and may be executed on thin clients. Thus, NR algorithms would be perfect candidates in cases of real-time quality assessment, automated quality control and, particularly, in adaptive mobile streaming. Yet, existing NR approaches are often inaccurate in comparison to Full-Reference (FR) algorithms, especially under lossy network conditions. In this work, we present an NR method that combines machine learning with simple NR metrics to achieve a quality index comparable in accuracy to the Video Quality Metric (VQM) Full-Reference algorithm. Our method is tested on an extensive dataset (960 videos), under lossy network conditions and considering nine different machine learning algorithms. Overall, we achieve an over 97% correlation with VQM, while allowing real-time assessment of video quality of experience in realistic streaming scenarios.
