Luiz A. DaSilva

On the Robustness of RSMA to Adversarial BD-RIS-Induced Interference

May 26, 2025

Abstract:This article investigates the robustness of rate-splitting multiple access (RSMA) in multi-user multiple-input multiple-output (MIMO) systems to interference attacks against channel acquisition induced by beyond-diagonal RISs (BD-RISs). Two primary attack strategies, random and aligned interference, are proposed for fully connected and group-connected BD-RIS architectures. Valid random reflection coefficients are generated exploiting the Takagi factorization, while potent aligned interference attacks are achieved through optimization strategies based on a quadratically constrained quadratic program (QCQP) reformulation followed by projections onto the unitary manifold. Our numerical findings reveal that, when perfect channel state information (CSI) is available, RSMA behaves similarly to space-division multiple access (SDMA) and thus is highly susceptible to the attack, with BD-RIS inducing severe performance loss and significantly outperforming diagonal RIS. However, under imperfect CSI, RSMA consistently demonstrates significantly greater robustness than SDMA, particularly as the system's transmit power increases.
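The two constructions named in this abstract can be written compactly. The sketch below assumes the common model in which a reciprocal, lossless, fully connected BD-RIS has a symmetric and unitary scattering matrix; the abstract itself does not state this, and the symbols $\Theta$, $U$, $Z$, and $Q$ are notation introduced here for illustration only.

```latex
% Assumption (not from the abstract): \Theta \in \mathbb{C}^{N \times N},
% with \Theta = \Theta^{T} and \Theta^{H} \Theta = I_N.

% Takagi factorization of a complex symmetric matrix A:
A = U \Sigma U^{T}, \qquad U^{H} U = I_N, \qquad
\Sigma = \mathrm{diag}(\sigma_1, \dots, \sigma_N), \ \sigma_n \ge 0 .

% If A is also unitary, every \sigma_n = 1, so a valid random
% coefficient matrix can be drawn from a random unitary U as
\Theta = U U^{T}
\;\Rightarrow\;
\Theta^{T} = \Theta, \qquad
\Theta^{H} \Theta = U^{*} U^{H} U U^{T} = (U U^{H})^{*} = I_N .

% Generic projection of an arbitrary Z = U_Z \Sigma_Z V_Z^{H} (SVD)
% onto the set of unitary matrices:
\Pi(Z) = \arg\min_{Q^{H} Q = I_N} \lVert Z - Q \rVert_F = U_Z V_Z^{H} .
```

The last line is only the standard Frobenius-norm projection onto unitary matrices; the abstract does not say how symmetry or the group-connected block structure is enforced during the projection step.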

Reconfigurable Intelligent Surfaces: The New Frontier of Next G Security

Dec 09, 2022

Abstract:RIS is one of the significant technological advancements that will mark next-generation wireless. RIS technology also opens up the possibility of new security threats, since the reflection of impinging signals can be used for malicious purposes. This article introduces the basic concept for a RIS-assisted attack that re-uses the legitimate signal towards a malicious objective. Specific attacks are identified from this base scenario, and the RIS-assisted signal cancellation attack is selected for evaluation as an attack that inherently exploits RIS capabilities. The key takeaway from the evaluation is that an effective attack requires accurate channel information, a RIS deployed in a favorable location (from the point of view of the attacker), and it disproportionately affects legitimate links that already suffer from reduced path loss. These observations motivate specific security solutions and recommendations for future work.
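A minimal sketch of why the signal cancellation attack depends on accurate channel information, assuming a simple narrowband model in which the receiver sees the direct path plus the sum of the RIS-reflected paths. The model, the function names, and all numeric values are hypothetical and are not taken from the article.

```python
import cmath
import math
import random


def cancellation_phases(h_direct, cascaded_est):
    """Unit-modulus RIS coefficients that put every reflected path
    (as estimated by the attacker) in anti-phase with the direct path."""
    target = cmath.phase(h_direct) + math.pi
    return [cmath.exp(1j * (target - cmath.phase(c))) for c in cascaded_est]


def received_gain(h_direct, cascaded_true, coeffs):
    """Magnitude of the direct path plus all reflected paths."""
    reflected = sum(c * t for c, t in zip(cascaded_true, coeffs))
    return abs(h_direct + reflected)


rng = random.Random(0)
h_direct = cmath.exp(1j * 0.7)  # hypothetical direct-link channel
# Hypothetical cascaded (transmitter -> RIS element -> receiver) channels.
cascaded = [0.01 * cmath.exp(1j * rng.uniform(0, 2 * math.pi)) for _ in range(64)]

# Attacker with perfect channel knowledge.
gain_perfect = received_gain(h_direct, cascaded, cancellation_phases(h_direct, cascaded))

# Attacker whose phase estimates are off by Gaussian errors (std 0.5 rad).
noisy = [c * cmath.exp(1j * rng.gauss(0.0, 0.5)) for c in cascaded]
gain_noisy = received_gain(h_direct, cascaded, cancellation_phases(h_direct, noisy))
```

In this toy setting the perfectly informed attacker removes the full reflected amplitude (64 × 0.01) from the direct path, while the attacker with phase errors cancels less, which is consistent with the abstract's observation that an effective attack requires accurate channel information.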

Energy Aware Deep Reinforcement Learning Scheduling for Sensors Correlated in Time and Space

Nov 19, 2020

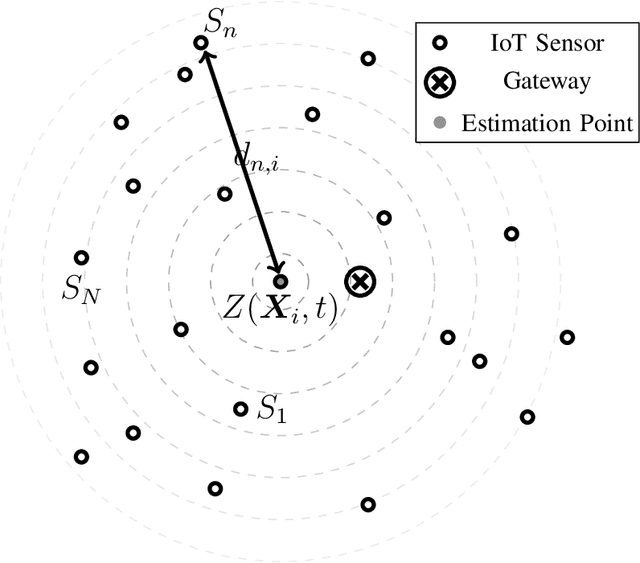

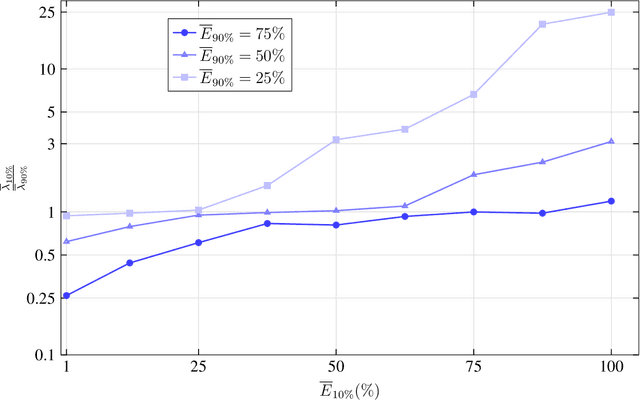

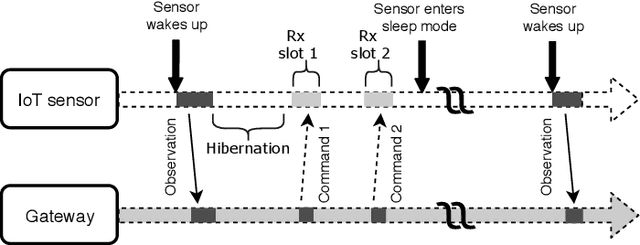

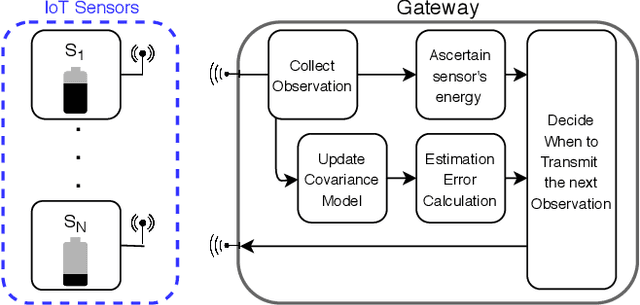

Abstract:Millions of battery-powered sensors deployed for monitoring purposes in a multitude of scenarios, e.g., agriculture, smart cities, industry, etc., require energy-efficient solutions to prolong their lifetime. When these sensors observe a phenomenon distributed in space and evolving in time, it is expected that collected observations will be correlated in time and space. In this paper, we propose a Deep Reinforcement Learning (DRL) based scheduling mechanism capable of taking advantage of correlated information. We design our solution using the Deep Deterministic Policy Gradient (DDPG) algorithm. The proposed mechanism is capable of determining the frequency with which sensors should transmit their updates, to ensure accurate collection of observations, while simultaneously considering the energy available. To evaluate our scheduling mechanism, we use multiple datasets containing environmental observations obtained in multiple real deployments. The real observations enable us to model the environment with which the mechanism interacts as realistically as possible. We show that our solution can significantly extend the sensors' lifetime. We compare our mechanism to an idealized, all-knowing scheduler to demonstrate that its performance is near-optimal. Additionally, we highlight the unique feature of our design, energy-awareness, by displaying the impact of sensors' energy levels on the frequency of updates.

Adaptive Height Optimisation for Cellular-Connected UAVs using Reinforcement Learning

Jul 27, 2020

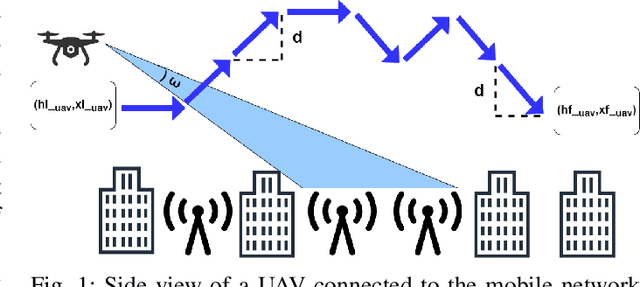

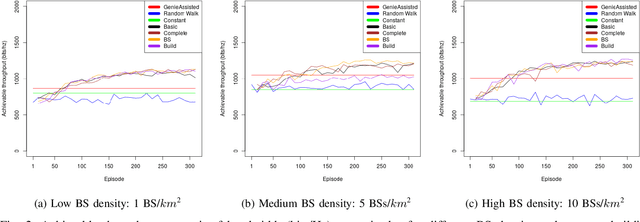

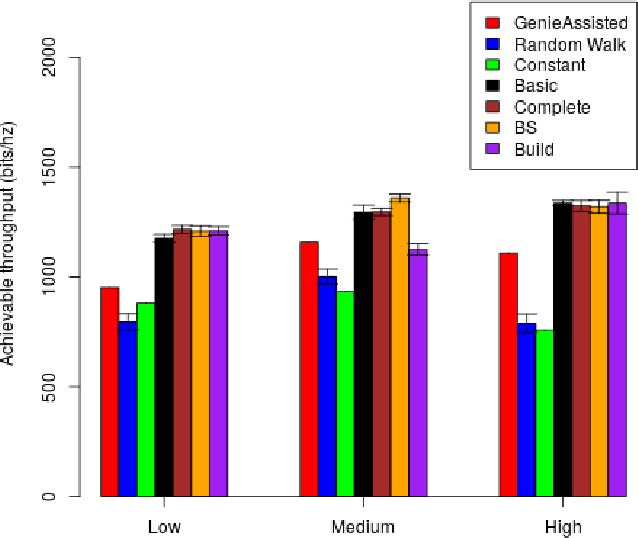

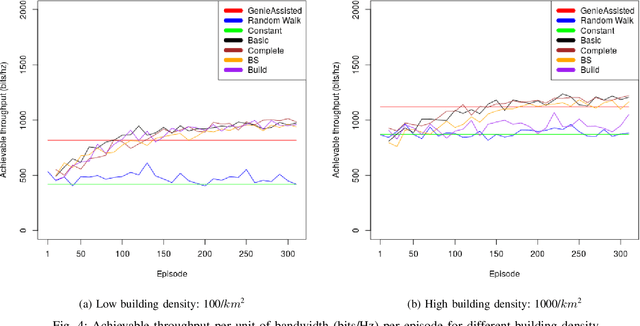

Abstract:With the increasing number of unmanned aerial vehicles (UAVs) as users of the cellular network, the research community faces particular challenges in providing reliable UAV connectivity. One challenge that has received limited research attention is understanding how the local building and base station (BS) density affects a UAV's connection to a cellular network, which at the physical layer is related to its spectral efficiency. With more BSs, UAV connectivity could be negatively affected because the UAV has line-of-sight (LoS) to most of them, which decreases its spectral efficiency. On the other hand, buildings could block interference from undesired BSs, improving the link between the UAV and its serving BS. This paper proposes a reinforcement learning (RL)-based algorithm to optimise the height of a UAV as it moves dynamically within a range of heights, with the aim of increasing the UAV's spectral efficiency. We evaluate the solution for different BS and building densities. Our results show that in most scenarios RL outperforms the baselines, achieving gains of up to 125% over a naive constant baseline and up to 20% over a greedy approach with up-front knowledge of the best UAV height in the next time step.

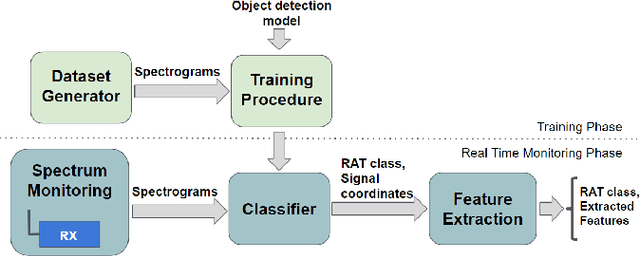

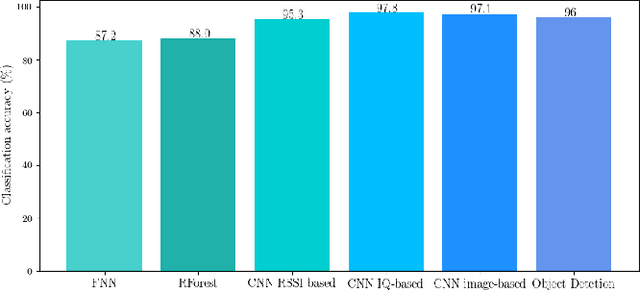

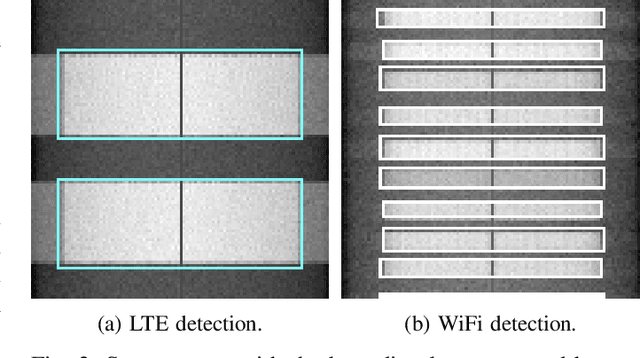

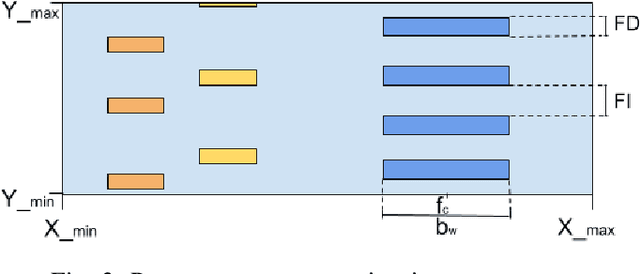

Radio Access Technology Characterisation Through Object Detection

Jul 27, 2020

Abstract:Radio access technology (RAT) classification and monitoring are essential for efficient coexistence of different communication systems in shared spectrum. Shared spectrum, including operation in license-exempt bands, is envisioned in the 5G standards (e.g., 3GPP Rel. 16). In this paper, we propose a machine learning (ML) approach to characterise spectrum utilisation and facilitate dynamic access to it. Recent advances in convolutional neural networks (CNNs) enable us to perform waveform classification by processing spectrograms as images. In contrast to other ML methods that can only provide the class of the monitored RATs, the solution we propose can not only recognise different RATs in shared spectrum, but also identify critical parameters such as inter-frame duration, frame duration, centre frequency, and signal bandwidth by using object detection and a feature extraction module to extract features from spectrograms. We have implemented and evaluated our solution using a dataset of commercial transmissions, as well as in a software-defined radio (SDR) testbed environment. The scenario evaluated was the coexistence of WiFi and LTE transmissions in shared spectrum. Our results show that our approach has an accuracy of 96% in the classification of RATs from a dataset that captures transmissions of regular user communications. They also show that the extracted features are precise to within a margin of 2%, and that the approach detects more than 94% of objects under a broad range of transmission power levels and interference conditions.
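As an illustration of how detected objects in a spectrogram could be turned into the parameters listed above, here is a minimal sketch. It assumes axis-aligned bounding boxes in pixel coordinates, with time along x and frequency increasing along y, and a known resolution per pixel; the `Box` type, the function names, and the numbers are hypothetical and do not describe the paper's feature extraction module.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detector output, in spectrogram pixels."""
    x_min: float  # start of the frame on the time axis
    x_max: float  # end of the frame on the time axis
    y_min: float  # lower edge on the frequency axis
    y_max: float  # upper edge on the frequency axis


def box_to_features(box, seconds_per_px, hz_per_px, f_start_hz):
    """Convert one bounding box to physical signal parameters."""
    return {
        "frame_duration_s": (box.x_max - box.x_min) * seconds_per_px,
        "bandwidth_hz": (box.y_max - box.y_min) * hz_per_px,
        "centre_frequency_hz": f_start_hz + 0.5 * (box.y_min + box.y_max) * hz_per_px,
    }


def inter_frame_gaps(boxes, seconds_per_px):
    """Idle time between consecutive boxes, ordered by start time."""
    ordered = sorted(boxes, key=lambda b: b.x_min)
    return [(nxt.x_min - cur.x_max) * seconds_per_px
            for cur, nxt in zip(ordered, ordered[1:])]


# Assumed resolution: 0.1 ms per pixel in time, 100 kHz per pixel in
# frequency, with the bottom row of the image at 2.4 GHz.
boxes = [Box(100, 120, 50, 250), Box(150, 170, 50, 250)]
features = box_to_features(boxes[0], 1e-4, 1e5, 2.4e9)
gaps = inter_frame_gaps(boxes, 1e-4)
```

With these assumed resolutions, the first box corresponds to a 2 ms frame of 20 MHz bandwidth centred at 2.415 GHz, and the gap between the two frames is 3 ms.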

Spectrum Monitoring for Radar Bands using Deep Convolutional Neural Networks

May 01, 2017

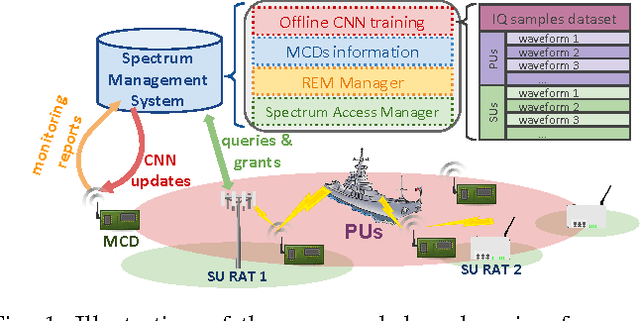

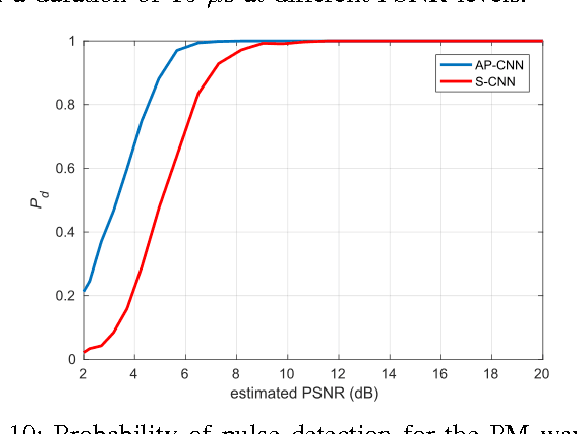

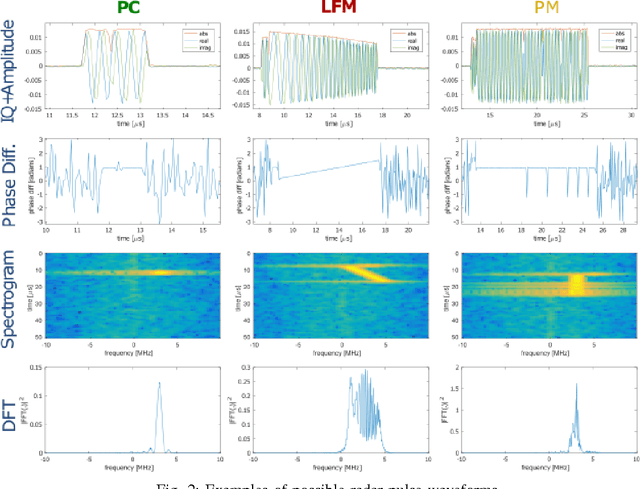

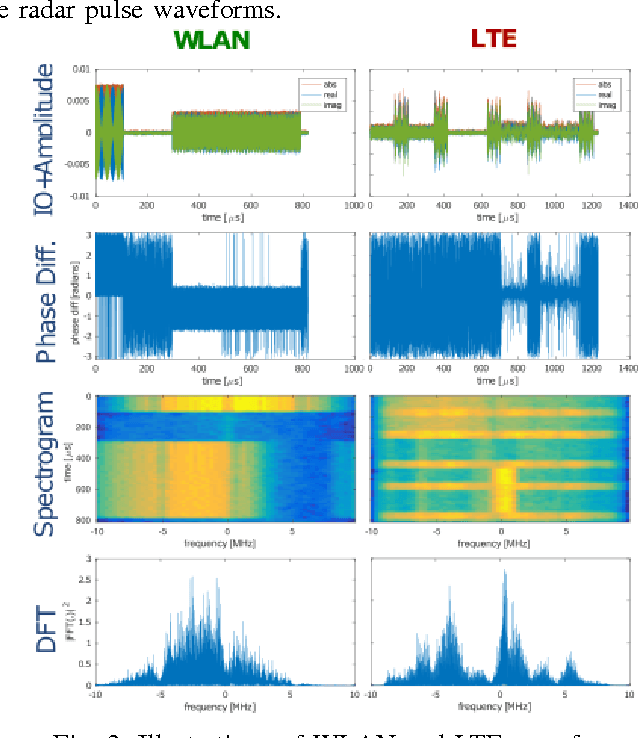

Abstract:In this paper, we present a spectrum monitoring framework for the detection of radar signals in spectrum sharing scenarios. The core of our framework is a deep convolutional neural network (CNN) model that enables Measurement Capable Devices to identify the presence of radar signals in the radio spectrum, even when these signals are overlapped with other sources of interference, such as commercial LTE and WLAN. We collected a large dataset of RF measurements, which include the transmissions of multiple radar pulse waveforms, downlink LTE, WLAN, and thermal noise. We propose a pre-processing data representation that leverages the amplitude and phase shifts of the collected samples. This representation allows our CNN model to achieve a classification accuracy of 99.6% on our testing dataset. The trained CNN model is then tested under various SNR values, outperforming other models, such as spectrogram-based CNN models.
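One plausible reading of a representation based on amplitude and phase shifts is sketched below, assuming the input is a sequence of complex baseband (IQ) samples and that "phase shift" means the phase difference between consecutive samples. The abstract does not specify the exact pre-processing, so the function name and this definition are assumptions.

```python
import cmath


def amplitude_phase_shift(iq):
    """Return per-sample amplitude and the phase change from the
    previous sample (0.0 for the first sample)."""
    amplitude = [abs(s) for s in iq]
    # phase(cur * conj(prev)) is the wrapped phase difference in (-pi, pi].
    phase_shift = [0.0] + [
        cmath.phase(cur * prev.conjugate()) for prev, cur in zip(iq, iq[1:])
    ]
    return amplitude, phase_shift


# Hypothetical test signal: a tone whose phase advances 0.3 rad per sample.
iq = [2.0 * cmath.exp(1j * 0.3 * n) for n in range(8)]
amplitude, phase_shift = amplitude_phase_shift(iq)
```

For the test tone, every amplitude is 2 and every phase shift after the first sample is 0.3 rad, so the two sequences could be stacked as input channels for a classifier.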
