Lorenzo Schena

Reinforcement Twinning for Hybrid Control of Flapping-Wing Drones

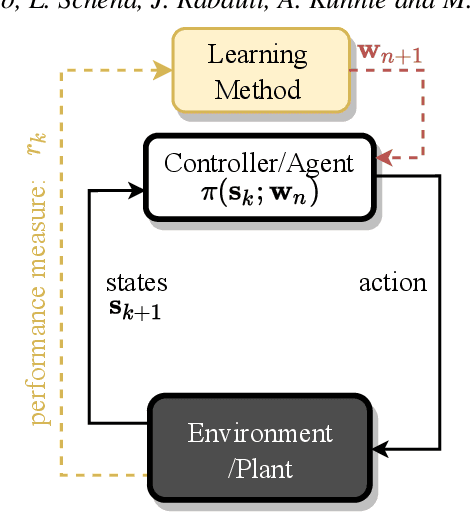

May 21, 2025

Abstract: Controlling the flight of flapping-wing drones requires versatile controllers that handle their time-varying, nonlinear, and underactuated dynamics from incomplete and noisy sensor data. Model-based methods struggle with accurate modeling, while model-free approaches falter in efficiently navigating very high-dimensional and nonlinear control objective landscapes. This article presents a novel hybrid model-free/model-based approach to flight control based on the recently proposed reinforcement twinning algorithm. The model-based (MB) approach relies on an adjoint formulation using an adaptive digital twin, continuously identified from live trajectories, while the model-free (MF) approach relies on reinforcement learning. The two agents collaborate through transfer learning, imitation learning, and experience sharing using the real environment, the digital twin and a referee. The latter selects the best agent to interact with the real environment based on performance within the digital twin and a real-to-virtual environment consistency ratio. The algorithm is evaluated for controlling the longitudinal dynamics of a flapping-wing drone, with the environment simulated as a nonlinear, time-varying dynamical system under the influence of quasi-steady aerodynamic forces. The hybrid control learning approach is tested with three types of initialization of the adaptive model: (1) offline identification using previously available data, (2) random initialization with full online identification, and (3) offline pre-training with an estimation bias, followed by online adaptation. In all three scenarios, the proposed hybrid learning approach demonstrates superior performance compared to purely model-free and model-based methods.

Comparative analysis of machine learning methods for active flow control

Feb 25, 2022

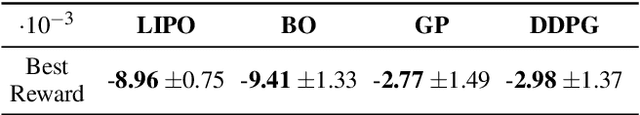

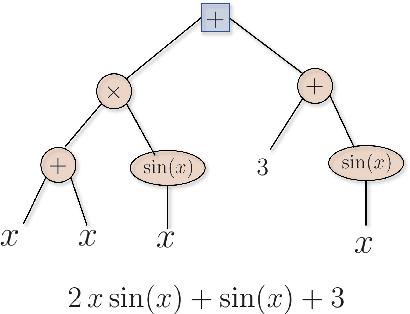

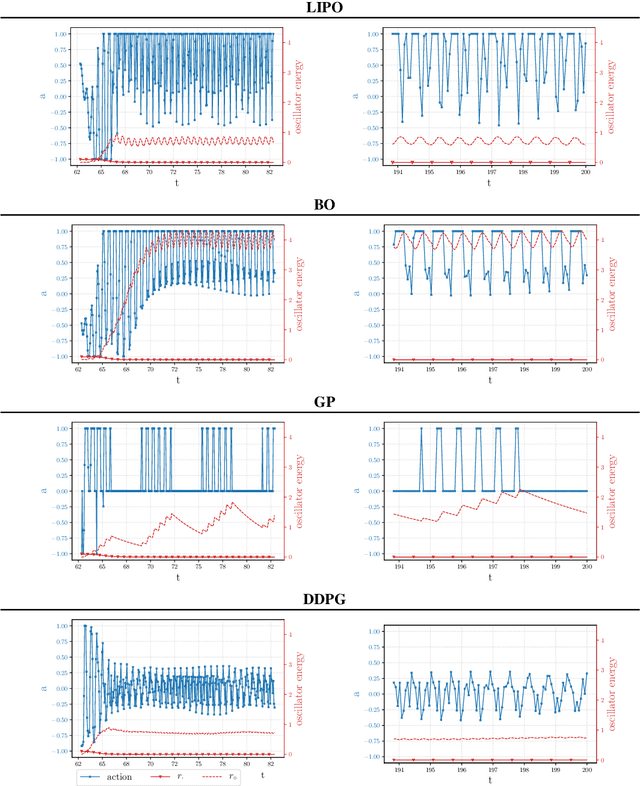

Abstract: Machine learning frameworks such as Genetic Programming (GP) and Reinforcement Learning (RL) are gaining popularity in flow control. This work presents a comparative analysis of the two, benchmarking some of their most representative algorithms against global optimization techniques such as Bayesian Optimization (BO) and Lipschitz global optimization (LIPO). First, we review the general framework of the flow control problem, linking optimal control theory with model-free machine learning methods. Then, we test the control algorithms on three test cases. These are (1) the stabilization of a nonlinear dynamical system featuring frequency cross-talk, (2) the wave cancellation from a Burgers' flow and (3) the drag reduction in a cylinder wake flow. Although the control of these problems has been tackled in the recent literature with one method or the other, we present a comprehensive comparison to illustrate their differences in exploration versus exploitation and their balance between 'model capacity' in the control law definition versus 'required complexity'. We believe that such a comparison opens the path towards hybridization of the various methods, and we offer some perspective on their future development in the literature of flow control problems.
