Liane Guillou

Incorporating Temporal Information in Entailment Graph Mining

Sep 20, 2021

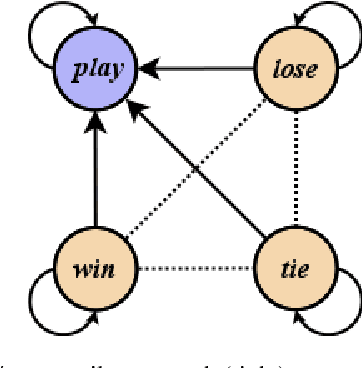

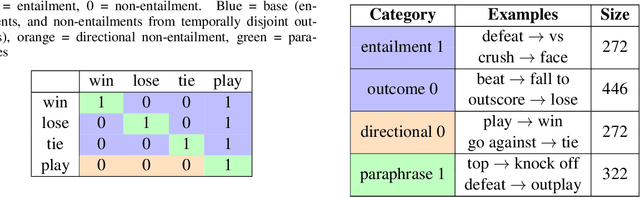

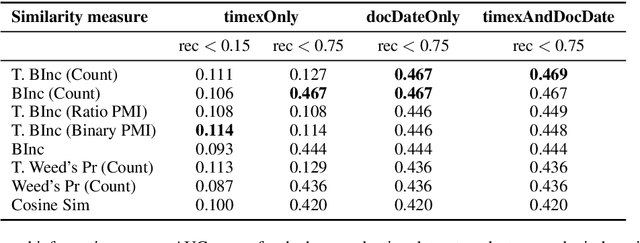

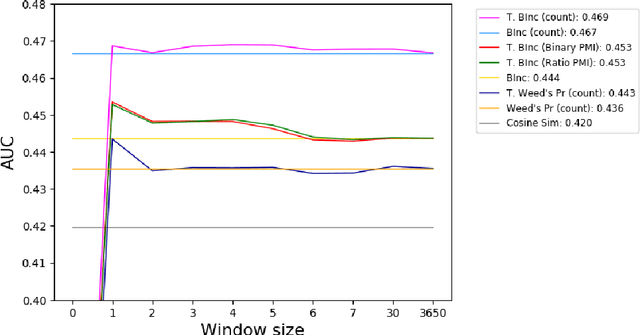

Abstract: We present a novel method for injecting temporality into entailment graphs to address the problem of spurious entailments, which may arise from similar but temporally distinct events involving the same pair of entities. We focus on the sports domain, in which the same pairs of teams play on different occasions, with different outcomes. We present an unsupervised model that aims to learn entailments such as win/lose $\rightarrow$ play, while avoiding the pitfall of learning non-entailments such as win $\not\rightarrow$ lose. We evaluate our model on a manually constructed dataset, showing that incorporating time intervals and applying a temporal window around them are effective strategies.

* L. Guillou, S. Bijl de Vroe, M.J. Hosseini, M. Johnson, and M. Steedman. 2020. Incorporating temporal information in entailment graph mining. In Proceedings of the Graph-based Methods for Natural Language Processing (TextGraphs), pages 60-71, Barcelona, Spain (Online). Association for Computational Linguistics.
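The idea of a temporal window from the abstract above can be illustrated with a small sketch. This is not the authors' model: the function names, the `(predicate, entity_pair, interval)` mention format, the team names, and the dates are all assumptions made for illustration. The sketch only counts two predicates as co-occurring when they share an entity pair and their time intervals overlap after padding.

```python
from collections import defaultdict
from datetime import date, timedelta


def overlaps(a, b, window_days=0):
    """True if intervals a and b overlap once each is padded by window_days on both sides."""
    pad = timedelta(days=window_days)
    return a[0] - pad <= b[1] + pad and b[0] - pad <= a[1] + pad


def temporal_cooccurrences(mentions, window_days=0):
    """Count ordered predicate pairs that share an entity pair at overlapping times."""
    by_pair = defaultdict(list)
    for predicate, entity_pair, interval in mentions:
        by_pair[entity_pair].append((predicate, interval))
    counts = defaultdict(int)
    for items in by_pair.values():
        for p, p_interval in items:
            for q, q_interval in items:
                if p != q and overlaps(p_interval, q_interval, window_days):
                    counts[(p, q)] += 1
    return dict(counts)


# Hypothetical mentions: the same two teams meet twice with different outcomes.
teams = ("TeamA", "TeamB")
match_1 = (date(2019, 1, 19), date(2019, 1, 19))
match_2 = (date(2019, 5, 29), date(2019, 5, 29))
mentions = [
    ("win", teams, match_1),
    ("play", teams, match_1),
    ("lose", teams, match_2),
    ("play", teams, match_2),
]

windowed = temporal_cooccurrences(mentions, window_days=1)
unfiltered = temporal_cooccurrences(mentions, window_days=365)
print(("win", "lose") in windowed)    # False: the two matches are months apart
print(("win", "lose") in unfiltered)  # True: a very wide window behaves like no temporal filter
```

With a narrow window, win and lose never count as evidence for each other, while both still co-occur with play. How wide the window should be is an empirical question that this sketch does not address.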

Modality and Negation in Event Extraction

Sep 20, 2021

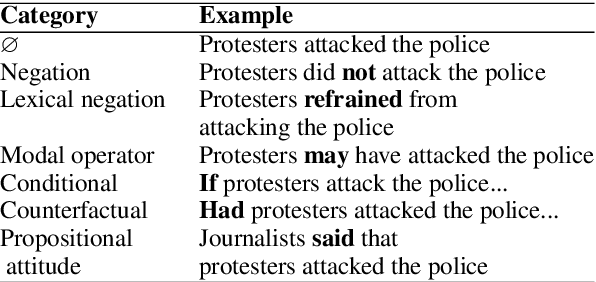

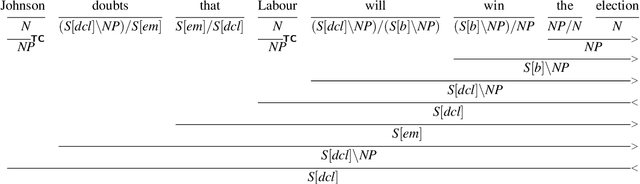

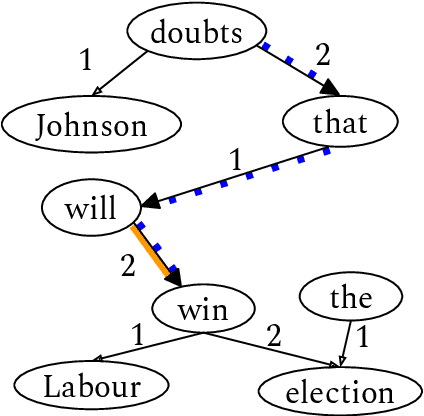

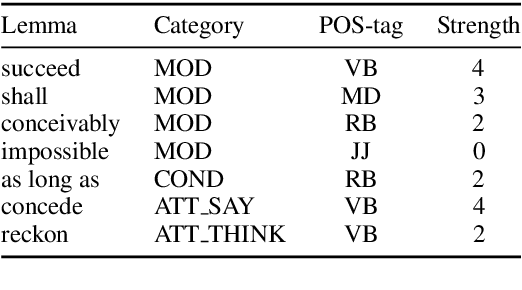

Abstract: Language provides speakers with a rich system of modality for expressing thoughts about events, without being committed to their actual occurrence. Modality is commonly used in the political news domain, where both actual and possible courses of events are discussed. NLP systems struggle with these semantic phenomena, often incorrectly extracting events which did not happen, which can lead to issues in downstream applications. We present an open-domain, lexicon-based event extraction system that captures various types of modality. This information is valuable for Question Answering, Knowledge Graph construction and Fact-checking tasks, and our evaluation shows that the system is sufficiently strong to be used in downstream applications.

* S. Bijl de Vroe, L. Guillou, M. Stanojević, N. McKenna, and M. Steedman. 2021. Modality and Negation in Event Extraction. In Proceedings of the 4th Workshop on Challenges and Applications of Automated Extraction of Socio-political Events from Text (CASE 2021), pages 31-42, online. Association for Computational Linguistics.
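As a rough illustration of what "lexicon-based" modality tagging can mean, the toy sketch below looks up trigger words that precede an event word. The lexicon entries, the tag names, and the `tag_event` function are hypothetical, and the simple "any earlier token" rule is an assumption; a real system would need syntactic scope rather than token order.

```python
# Hypothetical mini-lexicon mapping trigger words to modality tags.
MODALITY_LEXICON = {
    "might": "modal",
    "may": "modal",
    "could": "modal",
    "not": "negation",
    "never": "negation",
    "if": "conditional",
}


def tag_event(tokens, event_index, lexicon=MODALITY_LEXICON):
    """Return sorted modality tags triggered by tokens before the event word."""
    tags = {
        lexicon[token.lower()]
        for token in tokens[:event_index]
        if token.lower() in lexicon
    }
    # An event with no trigger is treated as asserted to have happened.
    return sorted(tags) or ["actual"]


hedged = tag_event("The senator might not resign".split(), 4)
plain = tag_event("The senator resigned".split(), 2)
print(hedged)  # ['modal', 'negation']
print(plain)   # ['actual']
```

A downstream application could then keep only events tagged `actual` when it needs events that are reported as having occurred.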

Multivalent Entailment Graphs for Question Answering

Apr 16, 2021

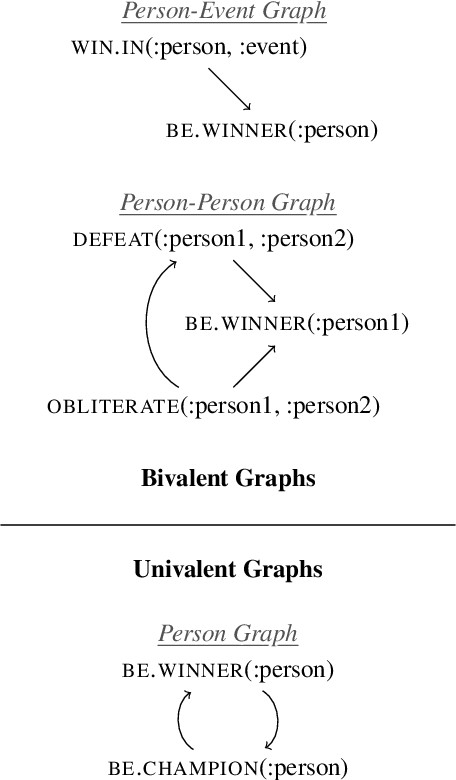

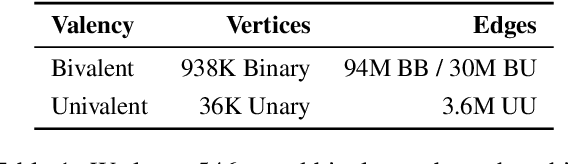

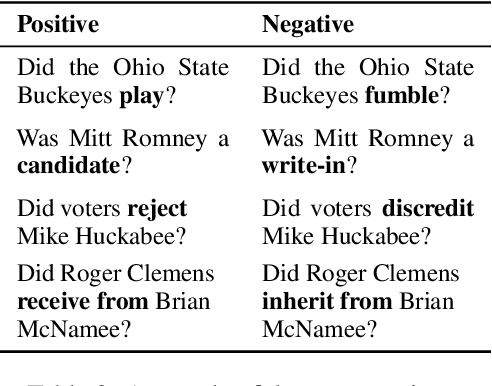

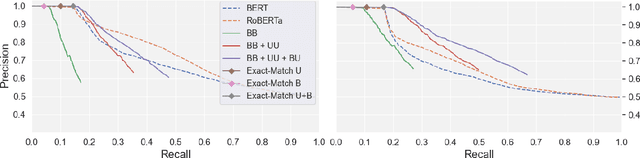

Abstract: Drawing inferences between open-domain natural language predicates is a necessity for true language understanding. There has been much progress in unsupervised learning of entailment graphs for this purpose. We make three contributions: (1) we reinterpret the Distributional Inclusion Hypothesis to model entailment between predicates of different valencies, like DEFEAT(Biden, Trump) entails WIN(Biden); (2) we actualize this theory by learning unsupervised Multivalent Entailment Graphs of open-domain predicates; and (3) we demonstrate the capabilities of these graphs on a novel question answering task. We show that directional entailment is more helpful for inference than bidirectional similarity on questions of fine-grained semantics. We also show that drawing on evidence across valencies answers more questions than using only same-valency evidence.
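To make the directional reading of the Distributional Inclusion Hypothesis concrete, here is a minimal sketch using Weeds precision, a standard directional inclusion measure. It is not necessarily the score used in the paper. The argument counts, the entity names, and the choice to project a binary predicate onto its first argument slot are assumptions for illustration.

```python
def weeds_precision(premise_feats, hypothesis_feats):
    """Share of the premise's feature weight that also occurs with the hypothesis."""
    total = sum(premise_feats.values())
    if total == 0:
        return 0.0
    shared = sum(w for f, w in premise_feats.items() if f in hypothesis_feats)
    return shared / total


def project_first_arg(binary_feats):
    """Collapse (arg1, arg2) counts of a binary predicate onto its first argument."""
    projected = {}
    for (arg1, _arg2), weight in binary_feats.items():
        projected[arg1] = projected.get(arg1, 0) + weight
    return projected


# Hypothetical counts: DEFEAT(x, y) seen with argument pairs, WIN(x) with single arguments.
defeat = {("A", "B"): 2, ("C", "D"): 1}
win = {"A": 3, "C": 1, "E": 2}

forward = weeds_precision(project_first_arg(defeat), win)   # DEFEAT(x, y) -> WIN(x)
backward = weeds_precision(win, project_first_arg(defeat))  # WIN(x) -> DEFEAT(x, y)
print(forward > backward)  # True
```

The asymmetry is the point: every first argument of DEFEAT also appears with WIN, but not the reverse, so the score favours DEFEAT(x, y) entailing WIN(x) over the converse. A symmetric similarity measure would not distinguish the two directions.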

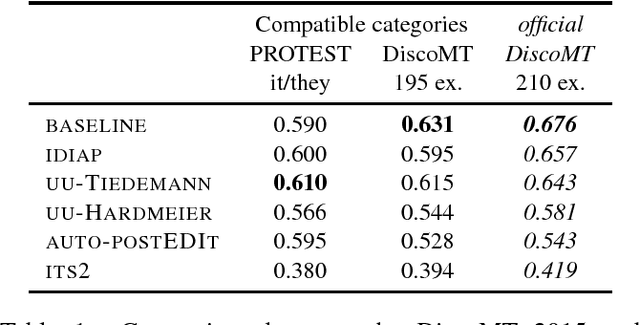

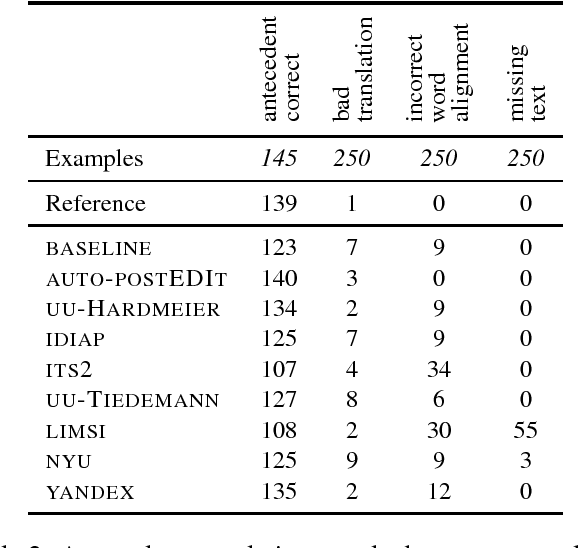

Findings of the 2016 WMT Shared Task on Cross-lingual Pronoun Prediction

Nov 27, 2019

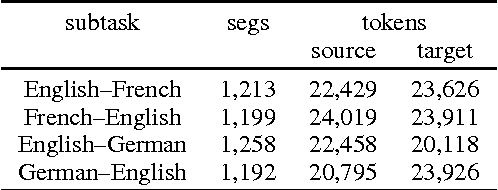

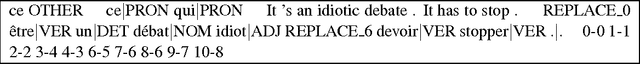

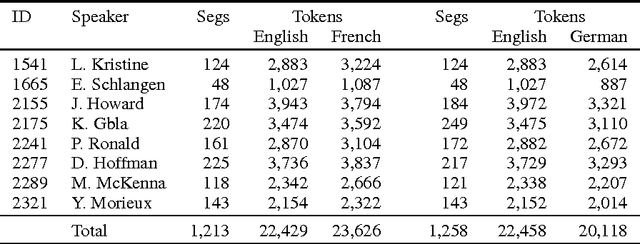

Abstract: We describe the design, the evaluation setup, and the results of the 2016 WMT shared task on cross-lingual pronoun prediction. This is a classification task in which participants are asked to predict which pronoun class label should replace a placeholder value in the target-language text, provided in lemmatised and PoS-tagged form. We provided four subtasks, for the English-French and English-German language pairs, in both directions. Eleven teams participated in the shared task: nine for the English-French subtask, five for French-English, nine for English-German, and six for German-English. Most of the submissions outperformed two strong language-model-based baseline systems, with systems using deep recurrent neural networks outperforming those using other architectures for most language pairs.

* cross-lingual pronoun prediction, WMT, shared task, English, German, French

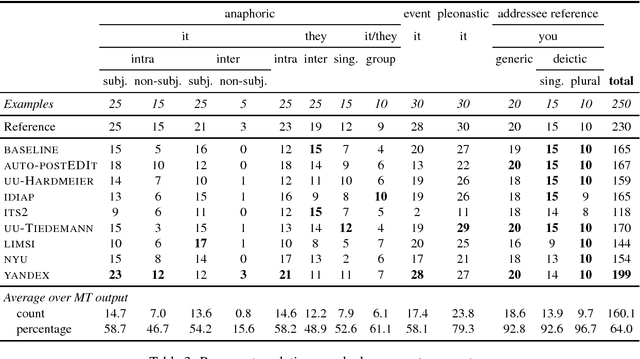

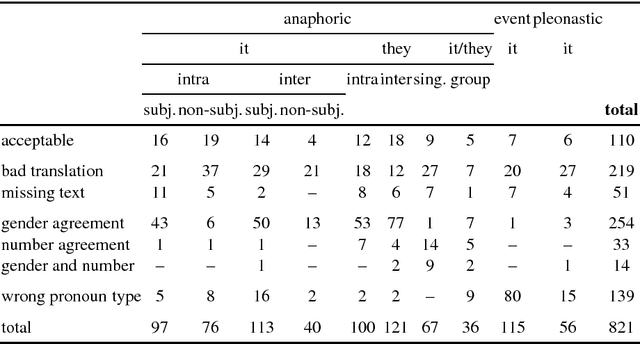

Pronoun Translation in English-French Machine Translation: An Analysis of Error Types

Aug 30, 2018

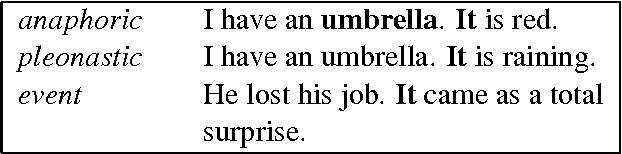

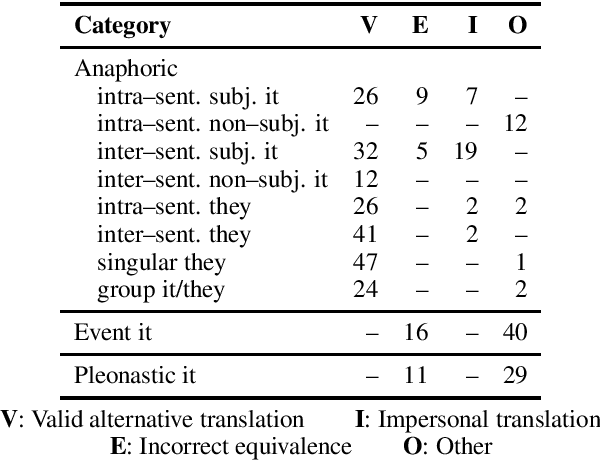

Abstract: Pronouns are a long-standing challenge in machine translation. We present a study of the performance of a range of rule-based, statistical and neural MT systems on pronoun translation, based on an extensive manual evaluation using the PROTEST test suite, which enables a fine-grained analysis of different pronoun types and sheds light on the difficulties of the task. We find that the rule-based approaches in our corpus perform poorly as a result of oversimplification, whereas SMT and early NMT systems exhibit significant shortcomings due to a lack of awareness of the functional and referential properties of pronouns. A recent Transformer-based NMT system with cross-sentence context shows very promising results on non-anaphoric pronouns and intra-sentential anaphora, but there is still considerable room for improvement in examples with cross-sentence dependencies.

Automatic Reference-Based Evaluation of Pronoun Translation Misses the Point

Aug 13, 2018

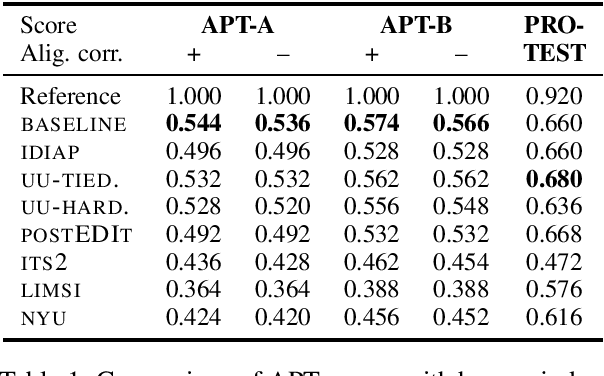

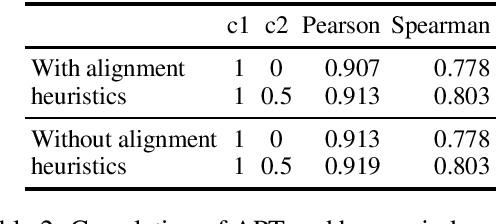

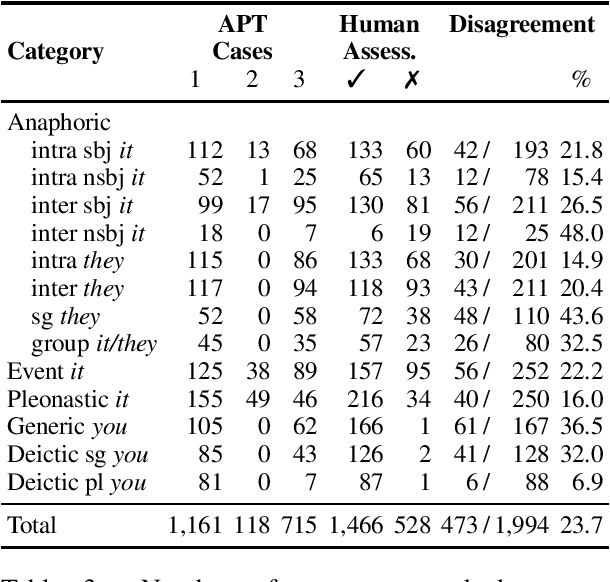

Abstract: We compare the performance of the APT and AutoPRF metrics for pronoun translation against a manually annotated dataset comprising human judgements as to the correctness of translations of the PROTEST test suite. Although there is some correlation with the human judgements, a range of issues limit the performance of the automated metrics. We therefore recommend the use of semi-automatic metrics and test suites in place of fully automatic metrics.
