Leonard Sunwoo

Unified Chest X-ray and Radiology Report Generation Model with Multi-view Chest X-rays

Mar 01, 2023

Abstract: Synthetic data generated for medical research can stand in for privacy- and security-sensitive data as a large-scale curated dataset, reducing data collection and annotation costs. As part of this effort, we propose UniXGen, a unified chest X-ray and report generation model, with the following contributions. First, we design a unified model for bidirectional chest X-ray and report generation by adopting a vector quantization method to discretize chest X-rays into visual tokens and formulating both tasks as sequence generation tasks. Second, we introduce several special tokens to generate chest X-rays with specific views, which can be useful when the desired views are unavailable. Furthermore, UniXGen can flexibly take various inputs, from single to multiple views, to take advantage of the additional findings available in other X-ray views. To handle the long input sequences produced by high-resolution multi-view chest X-rays and long paragraph reports, we adopt an efficient transformer for computational and memory efficiency. In extensive experiments, we show that our unified model has a synergistic effect on both generation tasks, in contrast to training task-specific models separately. We also find that view-specific special tokens can distinguish between different views and properly generate specific views even if they do not exist in the dataset, and that utilizing multi-view chest X-rays can faithfully capture the abnormal findings in the additional X-rays. The source code is publicly available at: https://github.com/ttumyche/UniXGen.
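The sequence formulation described in the abstract can be illustrated with a minimal sketch. It is not the UniXGen implementation: the nearest-neighbour codebook lookup, the view names (`PA`, `LATERAL`), the special-token ids, the vocabulary layout, and the ordering of views before the report are all assumptions chosen for illustration.

```python
import numpy as np

def quantize(patches, codebook):
    """Map each patch embedding (n, d) to the index of its nearest codebook row (k, d)."""
    dist2 = ((patches[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dist2.argmin(axis=1)

# Hypothetical vocabulary layout: visual codes first, then special tokens, then text.
CODEBOOK_SIZE = 4
SPECIAL = {"<PA>": 4, "</PA>": 5, "<LATERAL>": 6, "</LATERAL>": 7,
           "<REPORT>": 8, "</REPORT>": 9}
TEXT_OFFSET = 10  # report token ids are shifted past visual and special ids

def build_sequence(views, report_ids):
    """views: list of (view_name, visual_token_array); report_ids: list of ints."""
    seq = []
    for name, tokens in views:
        seq.append(SPECIAL[f"<{name}>"])      # view-specific opening token
        seq.extend(int(t) for t in tokens)    # discrete visual tokens for this view
        seq.append(SPECIAL[f"</{name}>"])     # view-specific closing token
    seq.append(SPECIAL["<REPORT>"])
    seq.extend(TEXT_OFFSET + r for r in report_ids)
    seq.append(SPECIAL["</REPORT>"])
    return seq

# Toy data: a 4-entry codebook and slightly perturbed patch embeddings.
codebook = np.eye(CODEBOOK_SIZE)
pa_tokens = quantize(codebook[[2, 0]] + 0.01, codebook)
lat_tokens = quantize(codebook[[3]] + 0.01, codebook)
sequence = build_sequence([("PA", pa_tokens), ("LATERAL", lat_tokens)], [0, 1])
```

Once images and text share one token sequence, a single autoregressive model can in principle generate either modality by conditioning on the other; which segments are treated as input and which as target is a training choice not shown here.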

Unpaired Deep Learning for Accelerated MRI using Optimal Transport Driven CycleGAN

Aug 29, 2020

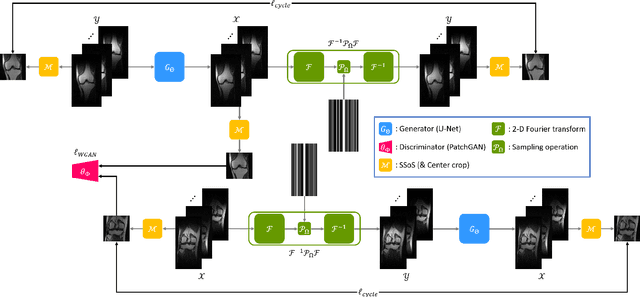

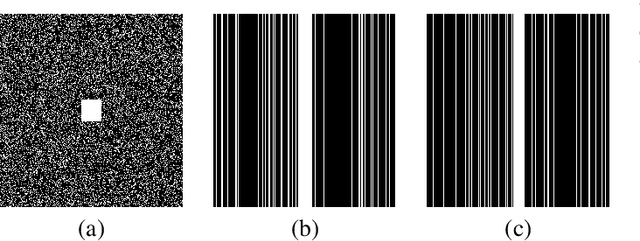

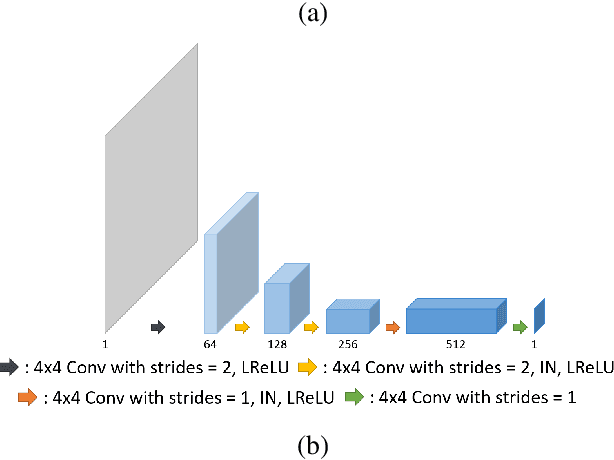

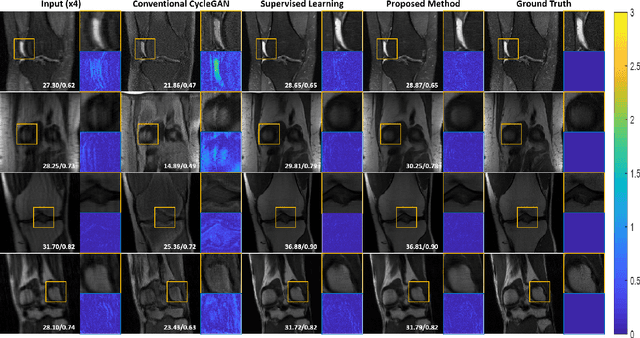

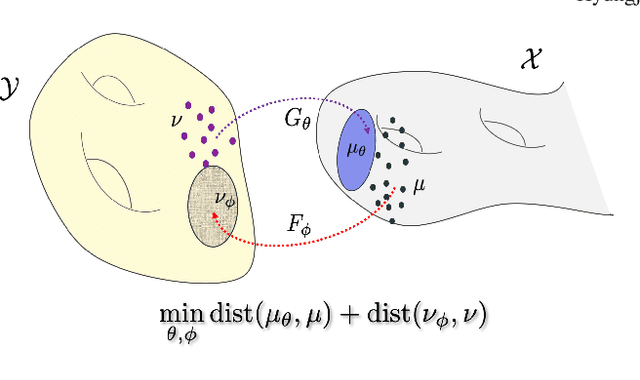

Abstract: Recently, deep learning approaches for accelerated MRI have been extensively studied thanks to their high-performance reconstruction in spite of significantly reduced runtime complexity. These neural networks are usually trained in a supervised manner, so matched pairs of subsampled and fully sampled k-space data are required. Unfortunately, matched fully sampled k-space data are often difficult to acquire, since their acquisition requires long scan times and often leads to a change of the acquisition protocol. Therefore, unpaired deep learning without matched label data has become a very important research topic. In this paper, we propose an unpaired deep learning approach using an optimal transport driven cycle-consistent generative adversarial network (OT-cycleGAN) that employs a single pair of generator and discriminator. The proposed OT-cycleGAN architecture is rigorously derived from a dual formulation of the optimal transport problem using a specially designed penalized least squares cost. The experimental results show that our method can reconstruct high-resolution MR images from accelerated k-space data from both single and multiple coil acquisitions, without requiring matched reference data.
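To make the distinction between fully sampled and subsampled k-space concrete, the following sketch simulates an accelerated acquisition with NumPy and forms a zero-filled reconstruction. It is only an illustration of the input such a network would receive, not the OT-cycleGAN method; the function name, the regular line-skipping pattern, and the `keep_every` and `center_lines` parameters are assumptions.

```python
import numpy as np

def undersample(image, keep_every=4, center_lines=8):
    """Simulate accelerated acquisition by keeping a subset of k-space rows."""
    # Fully sampled k-space, with the low frequencies shifted to the centre.
    kspace = np.fft.fftshift(np.fft.fft2(image))
    n_rows = image.shape[0]
    mask = np.zeros(n_rows, dtype=bool)
    mask[::keep_every] = True                 # regular undersampling of rows
    mid = n_rows // 2
    mask[mid - center_lines // 2: mid + center_lines // 2] = True  # keep low frequencies
    sub_kspace = kspace * mask[:, None]       # unsampled rows are set to zero
    # Zero-filled reconstruction: inverse FFT of the subsampled k-space.
    zero_filled = np.fft.ifft2(np.fft.ifftshift(sub_kspace))
    return sub_kspace, mask, zero_filled

rng = np.random.default_rng(0)
image = rng.random((32, 32))
sub_kspace, mask, zero_filled = undersample(image)
_, full_mask, full_recon = undersample(image, keep_every=1)
```

With `keep_every=1` every row is kept and the inverse transform returns the original image; with larger values the zero-filled image shows aliasing, which is the degradation a reconstruction network is trained to remove.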

Two-Stage Deep Learning for Accelerated 3D Time-of-Flight MRA without Matched Training Data

Aug 04, 2020

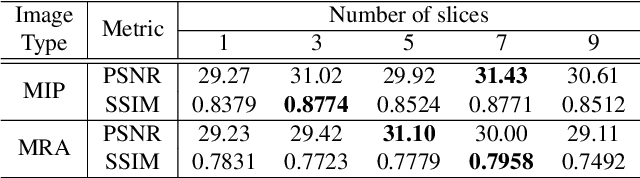

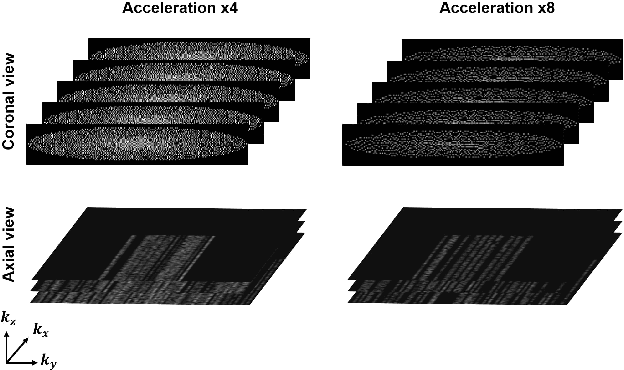

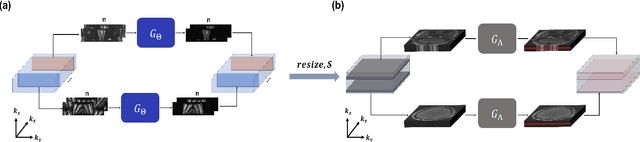

Abstract: Time-of-flight magnetic resonance angiography (TOF-MRA) is one of the most widely used non-contrast MR imaging methods for visualizing blood vessels, but because of its 3-D volume acquisition, highly accelerated acquisition is necessary. Accordingly, high-quality reconstruction from undersampled TOF-MRA is an important research topic for deep learning. However, most existing deep learning works require matched reference data for supervised training, which are often difficult to obtain. By extending the recent theoretical understanding of cycleGAN from optimal transport theory, we propose a novel two-stage unsupervised deep learning approach composed of a multi-coil reconstruction network along the coronal plane followed by a multi-planar refinement network along the axial plane. Specifically, the first network is trained in the square-root of sum of squares (SSoS) domain to achieve high-quality parallel image reconstruction, whereas the second refinement network is designed to efficiently learn the characteristics of highly activated blood flow using a double-headed max-pool discriminator. Extensive experiments demonstrate that the proposed learning process without matched reference data exceeds the performance of a state-of-the-art compressed sensing (CS)-based method and provides comparable or even better results than supervised learning approaches.
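The square-root of sum of squares (SSoS) combination mentioned above merges per-coil complex images into a single magnitude image. A minimal NumPy sketch follows; the function name and the choice of the first axis as the coil axis are assumptions, and the example covers only the coil combination, not the networks themselves.

```python
import numpy as np

def ssos(coil_images, coil_axis=0):
    """Combine complex coil images into one magnitude image via sqrt(sum_c |x_c|^2)."""
    return np.sqrt((np.abs(coil_images) ** 2).sum(axis=coil_axis))

# Two toy "coils" of shape (1, 2): magnitudes (3, 0) and (4, 1) per pixel.
coils = np.array([[[3 + 0j, 0 + 0j]],
                  [[0 + 4j, 1 + 0j]]])
combined = ssos(coils)
```

Because the result is real and non-negative, phase information is discarded; working in this domain means the network sees a single-channel magnitude image regardless of the number of coils.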
