Leandro Minku

MARLINE: Multi-Source Mapping Transfer Learning for Non-Stationary Environments

Sep 09, 2025

Abstract: Concept drift is a major problem in online learning due to its impact on the predictive performance of data stream mining systems. Recent studies have started exploring data streams from different sources as a strategy to tackle concept drift in a given target domain. These approaches make the assumption that at least one of the source models represents a concept similar to the target concept, which may not hold in many real-world scenarios. In this paper, we propose a novel approach called Multi-source mApping with tRansfer LearnIng for Non-stationary Environments (MARLINE). MARLINE can benefit from knowledge from multiple data sources in non-stationary environments even when source and target concepts do not match. This is achieved by projecting the target concept to the space of each source concept, enabling multiple source sub-classifiers to contribute towards the prediction of the target concept as part of an ensemble. Experiments on several synthetic and real-world datasets show that MARLINE was more accurate than several state-of-the-art data stream learning approaches.

Multi-Label Transfer Learning in Non-Stationary Data Streams

Sep 09, 2025

Abstract: Label concepts in multi-label data streams often experience drift in non-stationary environments, either independently or in relation to other labels. Transferring knowledge between related labels can accelerate adaptation, yet research on multi-label transfer learning for data streams remains limited. To address this, we propose two novel transfer learning methods: BR-MARLENE leverages knowledge from different labels in both source and target streams for multi-label classification; BRPW-MARLENE builds on this by explicitly modelling and transferring pairwise label dependencies to enhance learning performance. Comprehensive experiments show that both methods outperform state-of-the-art multi-label stream approaches in non-stationary environments, demonstrating the effectiveness of inter-label knowledge transfer for improved predictive performance.

Tackling Virtual and Real Concept Drifts: An Adaptive Gaussian Mixture Model

Feb 11, 2021

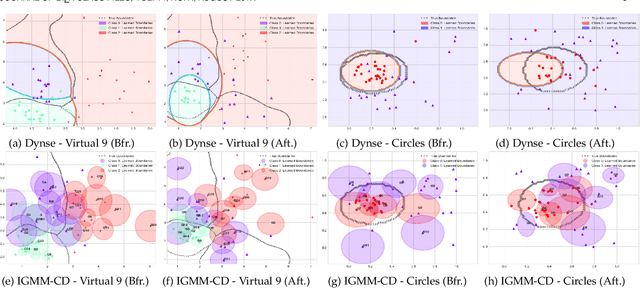

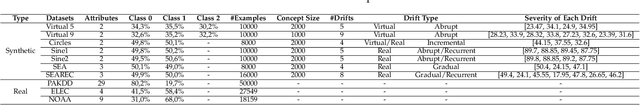

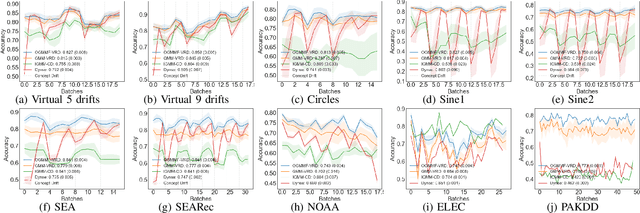

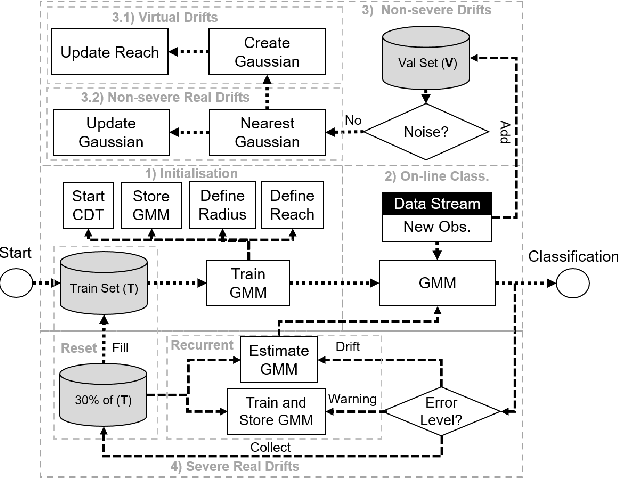

Abstract: Real-world applications have been dealing with large amounts of data that arrive over time and generally present changes in their underlying joint probability distribution, i.e., concept drift. Concept drift can be subdivided into two types: virtual drift, which affects the unconditional probability distribution p(x), and real drift, which affects the conditional probability distribution p(y|x). Existing work focuses on real drift. However, strategies to cope with real drift may not be the best suited for dealing with virtual drift, since the real class boundaries remain unchanged. We provide the first in-depth analysis of the differences between the impact of virtual and real drifts on classifiers' suitability. We propose an approach to handle both drifts called On-line Gaussian Mixture Model With Noise Filter For Handling Virtual and Real Concept Drifts (OGMMF-VRD). Experiments with 7 synthetic and 3 real-world datasets show that OGMMF-VRD obtained the best results in terms of average accuracy, G-mean and runtime compared to existing approaches. Moreover, its accuracy over time suffered less performance degradation in the presence of drifts.
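
The distinction between virtual drift (a change in p(x)) and real drift (a change in p(y|x)) can be illustrated with a toy one-dimensional stream. The sketch below is not OGMMF-VRD or any method from the paper. The `sample` and `accuracy` helpers, the Gaussian inputs, and the threshold classifier are all assumptions made purely for illustration.

```python
import random


def sample(n, x_mean, boundary, rng):
    """Draw n labelled points: x ~ N(x_mean, 1), y = 1 if x > boundary else 0.

    x_mean controls p(x); boundary controls p(y|x). (Hypothetical toy setup.)
    """
    xs = [rng.gauss(x_mean, 1.0) for _ in range(n)]
    return [(x, int(x > boundary)) for x in xs]


def accuracy(data, learned_boundary):
    """Accuracy of a fixed threshold classifier on labelled data."""
    correct = sum(int(x > learned_boundary) == y for x, y in data)
    return correct / len(data)


rng = random.Random(0)

# Assume the classifier has learned the original class boundary exactly.
learned_boundary = 0.0

# Original concept: inputs centred at 0, true boundary at 0.
before = sample(5000, x_mean=0.0, boundary=0.0, rng=rng)

# Virtual drift: p(x) shifts (inputs now centred at 2), p(y|x) is unchanged.
virtual = sample(5000, x_mean=2.0, boundary=0.0, rng=rng)

# Real drift: p(x) is unchanged, p(y|x) shifts (true boundary moves to 1).
real = sample(5000, x_mean=0.0, boundary=1.0, rng=rng)

acc_before = accuracy(before, learned_boundary)
acc_virtual = accuracy(virtual, learned_boundary)
acc_real = accuracy(real, learned_boundary)

print(f"before drift:  {acc_before:.3f}")
print(f"virtual drift: {acc_virtual:.3f}")
print(f"real drift:    {acc_real:.3f}")
```

In this idealised setting the classifier is unaffected by virtual drift because the true boundary has not moved, whereas real drift makes its learned boundary wrong. A classifier that has only approximated the boundary from limited data could still lose accuracy under virtual drift, since the input distribution may shift into regions where that approximation is poor.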
