Gustavo Oliveira

Partial Reasoning in Language Models: Search and Refinement Guided by Uncertainty

Jan 17, 2026

Abstract: The use of Large Language Models (LLMs) for reasoning and planning tasks has drawn increasing attention in Artificial Intelligence research. Despite their remarkable progress, these models still exhibit limitations in multi-step inference scenarios, particularly in mathematical and logical reasoning. We introduce PREGU (Partial Reasoning Guided by Uncertainty). PREGU monitors the entropy of the output distribution during autoregressive generation and halts the process whenever entropy exceeds a defined threshold, signaling uncertainty. From that point, a localized search is performed in the latent space to refine the partial reasoning and select the most coherent answer, using the Soft Reasoning method. Experiments conducted with LLaMA-3-8B, Mistral-7B, and Qwen2-7B across four reasoning benchmarks (GSM8K, GSM-Hard, SVAMP, and StrategyQA) showed performance greater than or similar to Soft Reasoning, indicating that entropy can serve as an effective signal to trigger selective refinement during reasoning.
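The entropy-based halting signal described in the abstract can be illustrated with a minimal sketch. This is not the PREGU implementation: the function names, the toy four-token distributions, and the threshold of 1.0 nats are all assumptions chosen for illustration, and the latent-space refinement step is not shown.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def first_uncertain_step(step_distributions, threshold):
    """Return the index of the first step whose entropy exceeds the
    threshold, or None if no step does (hypothetical helper)."""
    for step, probs in enumerate(step_distributions):
        if shannon_entropy(probs) > threshold:
            return step
    return None

# Made-up next-token distributions over a 4-token vocabulary.
steps = [
    [0.97, 0.01, 0.01, 0.01],  # confident prediction, low entropy
    [0.90, 0.05, 0.03, 0.02],  # still fairly confident
    [0.25, 0.25, 0.25, 0.25],  # uniform: entropy equals ln(4)
    [0.70, 0.10, 0.10, 0.10],
]

# Assumed threshold; the abstract does not state the value used.
halt_at = first_uncertain_step(steps, threshold=1.0)
print(halt_at)
```

In this toy run, generation would halt at the uniform distribution, which is the point where a method of this kind would hand the partial reasoning over to a refinement procedure.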

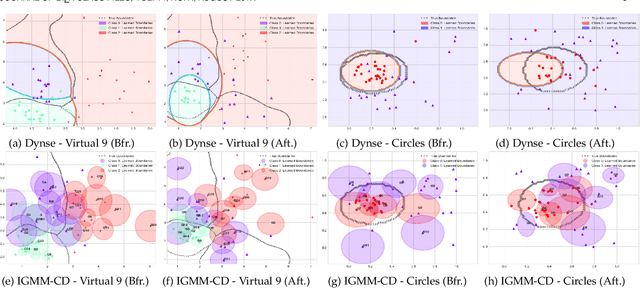

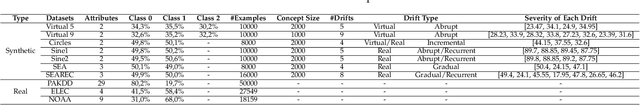

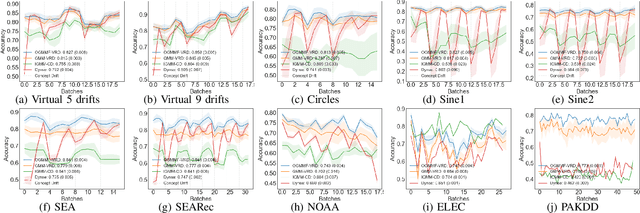

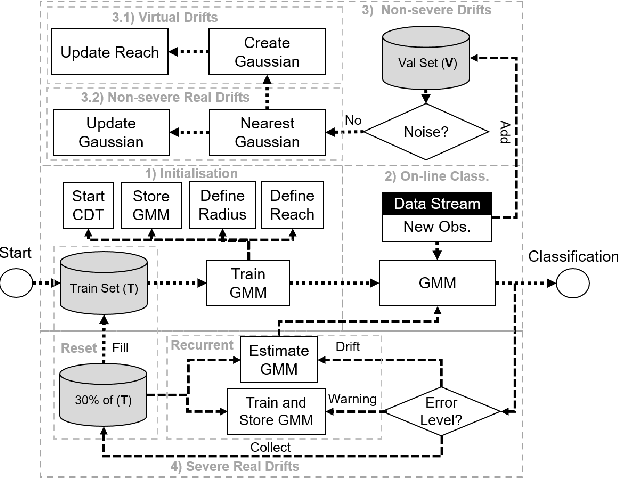

Tackling Virtual and Real Concept Drifts: An Adaptive Gaussian Mixture Model

Feb 11, 2021

Abstract: Real-world applications have been dealing with large amounts of data that arrive over time and generally present changes in their underlying joint probability distribution, i.e., concept drift. Concept drift can be subdivided into two types: virtual drift, which affects the unconditional probability distribution p(x), and real drift, which affects the conditional probability distribution p(y|x). Existing work focuses on real drift. However, strategies to cope with real drift may not be the best suited for dealing with virtual drift, since the real class boundaries remain unchanged. We provide the first in-depth analysis of the differences between the impact of virtual and real drifts on classifiers' suitability. We propose an approach to handle both drifts called On-line Gaussian Mixture Model With Noise Filter For Handling Virtual and Real Concept Drifts (OGMMF-VRD). Experiments with 7 synthetic and 3 real-world datasets show that OGMMF-VRD obtained the best results in terms of average accuracy, G-mean and runtime compared to existing approaches. Moreover, its accuracy over time suffered less performance degradation in the presence of drifts.
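The distinction between the two drift types can be made concrete with a small one-dimensional sketch. It is a toy illustration only, not OGMMF-VRD: the Gaussian inputs, the threshold concept, and all names are assumptions. The classifier here already matches the original boundary exactly, which is an idealization; a classifier learned from limited data could still be affected by virtual drift when inputs move into regions it has not seen.

```python
import random

def sample(n, x_mean, boundary, rng):
    """Draw n points x ~ N(x_mean, 1), labeled by the true concept
    y = 1 if x > boundary else 0 (hypothetical toy concept)."""
    xs = [rng.gauss(x_mean, 1.0) for _ in range(n)]
    return [(x, int(x > boundary)) for x in xs]

def accuracy(data, learned_boundary):
    """Accuracy of a fixed threshold classifier on labeled data."""
    hits = sum(int(x > learned_boundary) == y for x, y in data)
    return hits / len(data)

rng = random.Random(0)
learned = 0.0  # classifier that matches the original concept exactly

# Virtual drift: p(x) shifts (mean 0 -> 2), p(y|x) is unchanged.
virtual = sample(1000, x_mean=2.0, boundary=0.0, rng=rng)
# Real drift: p(x) is unchanged, p(y|x) changes (boundary 0 -> 1).
real = sample(1000, x_mean=0.0, boundary=1.0, rng=rng)

acc_virtual = accuracy(virtual, learned)
acc_real = accuracy(real, learned)
print(acc_virtual, acc_real)
```

Under these assumptions the fixed classifier stays correct after the virtual drift because the true boundary has not moved, while the real drift makes it mislabel the points that fall between the old and new boundaries.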
