Kyoung Mu Lee

Scene-Adaptive Video Frame Interpolation via Meta-Learning

Apr 02, 2020

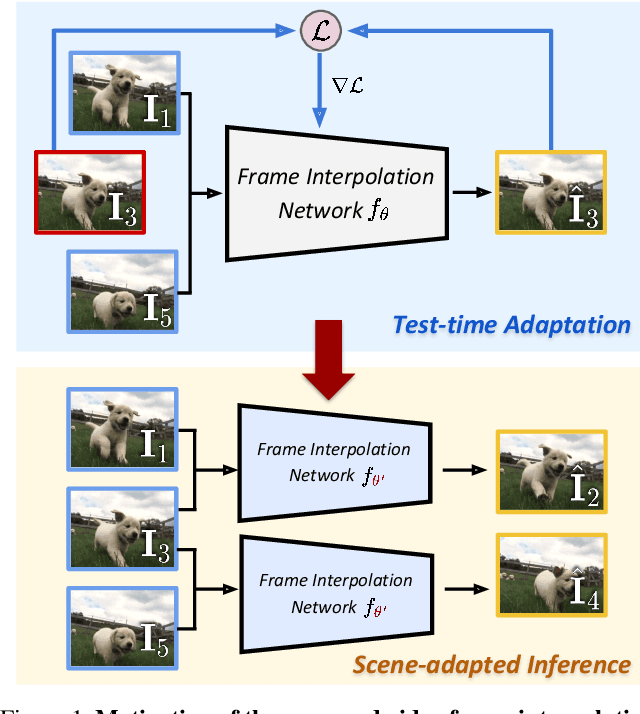

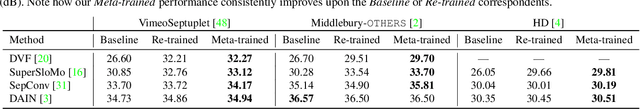

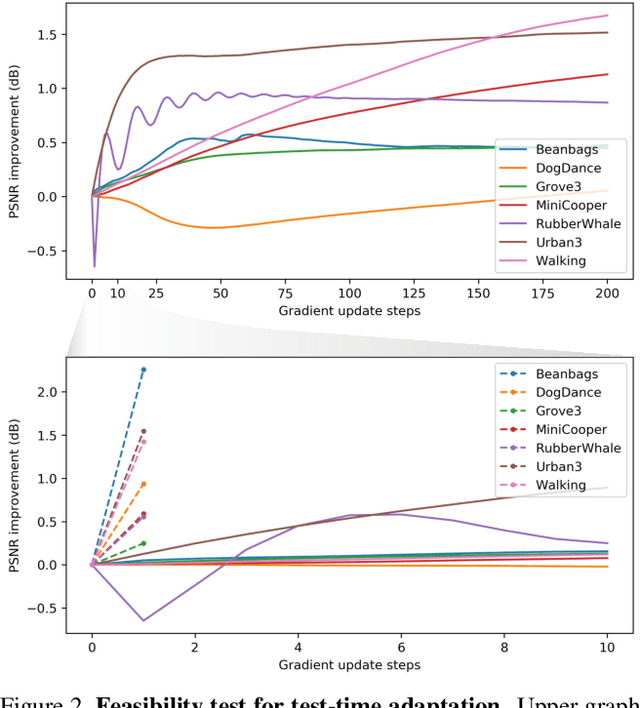

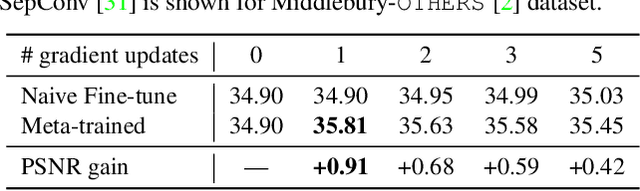

Abstract: Video frame interpolation is a challenging problem because there are different scenarios for each video depending on the variety of foreground and background motion, frame rate, and occlusion. It is therefore difficult for a single network with fixed parameters to generalize across different videos. Ideally, one could have a different network for each scenario, but this is computationally infeasible for practical applications. In this work, we propose to adapt the model to each video by making use of additional information that is readily available at test time and yet has not been exploited in previous works. We first show the benefits of 'test-time adaptation' through simple fine-tuning of a network, then we greatly improve its efficiency by incorporating meta-learning. We obtain significant performance gains with only a single gradient update without any additional parameters. Finally, we show that our meta-learning framework can be easily applied to any video frame interpolation network and can consistently improve its performance on multiple benchmark datasets.
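As a rough illustration of what a single gradient update at test time means, the toy sketch below adapts a one-parameter model to a handful of samples that are available at test time. Everything in it is hypothetical and chosen for brevity (the scalar weight `w`, the helpers `mse`, `mse_grad`, and `adapt_one_step`, the learning rate, and the sample pairs); it is not the paper's interpolation network. The meta-learning stage, which would train the starting weight so that this one step is effective, is not shown.

```python
def mse(w, pairs):
    """Mean squared error of the toy model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in pairs) / len(pairs)


def mse_grad(w, pairs):
    """Analytic gradient of mse(w, pairs) with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in pairs) / len(pairs)


def adapt_one_step(w, pairs, lr):
    """Return the weight after exactly one gradient-descent update."""
    return w - lr * mse_grad(w, pairs)


# Hypothetical samples observed at test time (generated by y = 2 * x).
test_time_pairs = [(1.0, 2.0), (2.0, 4.0)]
w_initial = 1.0  # stands in for the shared (meta-learned) initialization
w_adapted = adapt_one_step(w_initial, test_time_pairs, lr=0.1)

print(mse(w_initial, test_time_pairs), mse(w_adapted, test_time_pairs))
```

No parameters are added by the adaptation: the same weight is simply moved one step toward the test-time data.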

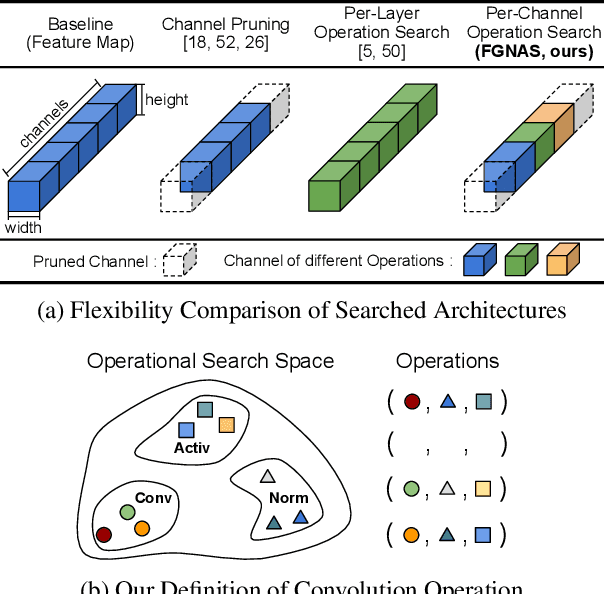

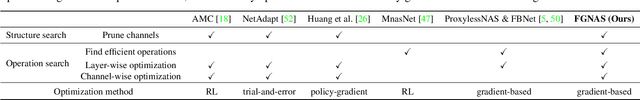

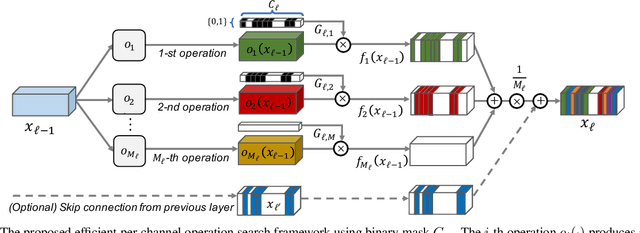

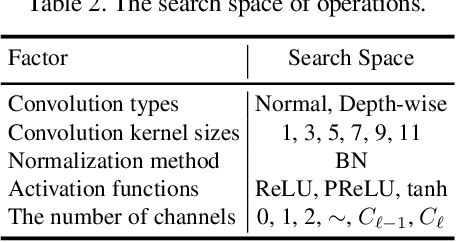

Fine-Grained Neural Architecture Search

Nov 18, 2019

Abstract: We present an elegant framework of fine-grained neural architecture search (FGNAS), which allows employing multiple heterogeneous operations within a single layer and can even generate compositional feature maps using several different base operations. FGNAS runs efficiently despite a significantly larger search space than other methods because it trains networks end-to-end by a stochastic gradient descent method. Moreover, the proposed framework allows optimizing the network under predefined resource constraints in terms of number of parameters, FLOPs, and latency. FGNAS has been applied to two crucial, resource-demanding computer vision tasks (large-scale image classification and image super-resolution) and demonstrates state-of-the-art performance through flexible operation search and channel pruning.

AbsPoseLifter: Absolute 3D Human Pose Lifting Network from a Single Noisy 2D Human Pose

Oct 26, 2019

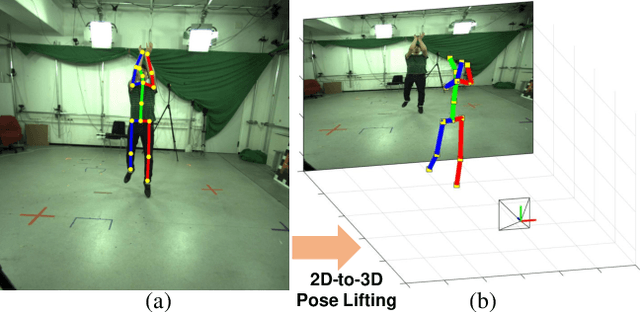

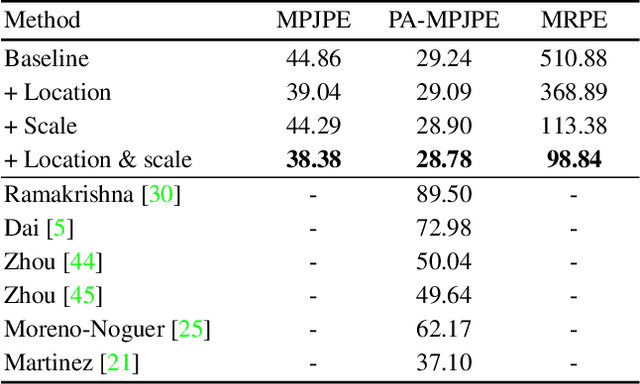

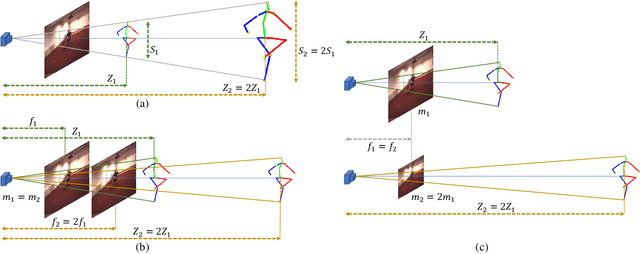

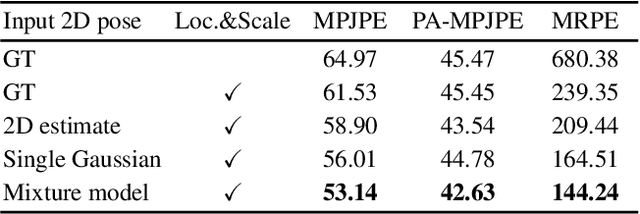

Abstract: This study presents a new network (i.e., AbsPoseLifter) that lifts a 2D human pose to an absolute 3D pose in a camera coordinate system. The proposed network estimates the absolute 3D location of a target subject and also outputs a considerably improved 3D relative pose estimation compared with those of existing pose lifting methods. We also propose using our AbsPoseLifter with a 2D pose estimator in a cascade fashion to estimate 3D human pose from a single RGB image. In this case, we empirically show that using realistic 2D poses synthesized with the real error distribution of 2D body joints considerably improves the performance of our AbsPoseLifter. The proposed method is applied to public datasets to achieve state-of-the-art 2D-to-3D pose lifting and 3D human pose estimation.

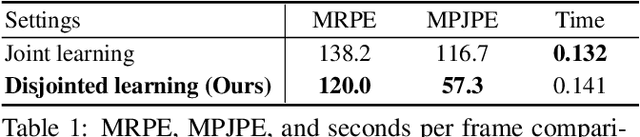

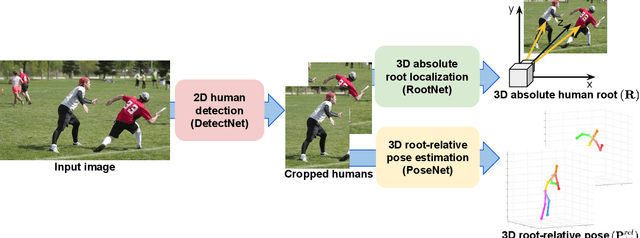

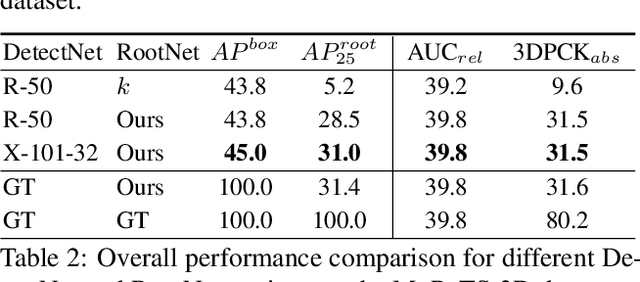

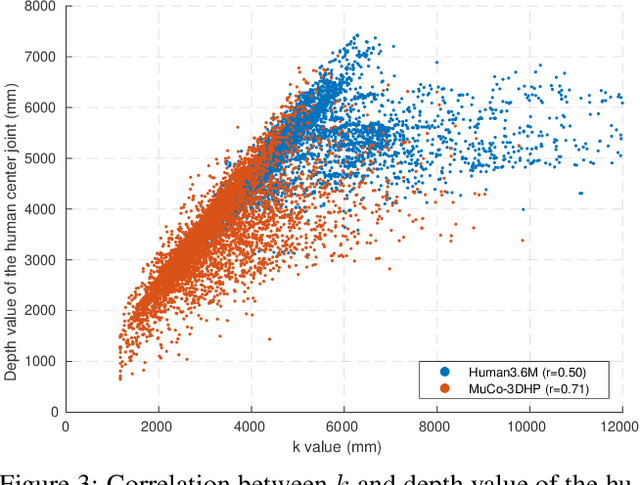

Camera Distance-aware Top-down Approach for 3D Multi-person Pose Estimation from a Single RGB Image

Aug 17, 2019

Abstract: Although significant improvement has been achieved recently in 3D human pose estimation, most of the previous methods only treat the single-person case. In this work, we propose, for the first time, a fully learning-based, camera distance-aware top-down approach for 3D multi-person pose estimation from a single RGB image. The pipeline of the proposed system consists of human detection, absolute 3D human root localization, and root-relative 3D single-person pose estimation modules. Our system achieves results comparable with the state-of-the-art 3D single-person pose estimation models without any groundtruth information and significantly outperforms previous 3D multi-person pose estimation methods on publicly available datasets. The code is available at https://github.com/mks0601/3DMPPE_ROOTNET_RELEASE and https://github.com/mks0601/3DMPPE_POSENET_RELEASE.
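To make the relationship between an absolute root location and a root-relative pose concrete, here is a minimal sketch that assumes a standard pinhole camera model. The function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the sample numbers are illustrative assumptions; the abstract does not specify how the released code combines the two module outputs.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Map a pixel (u, v) with a known depth to camera coordinates (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def to_absolute(root_xyz, relative_joints):
    """Add the absolute root position to each root-relative joint offset."""
    rx, ry, rz = root_xyz
    return [(rx + dx, ry + dy, rz + dz) for dx, dy, dz in relative_joints]


# Hypothetical intrinsics (pixels) and a root detected at the image center,
# 2000 mm from the camera.
root = back_project(u=320.0, v=240.0, depth=2000.0,
                    fx=1000.0, fy=1000.0, cx=320.0, cy=240.0)

# Hypothetical root-relative joints in millimeters: the root itself and a head.
relative = [(0.0, 0.0, 0.0), (0.0, -600.0, 50.0)]
absolute = to_absolute(root, relative)
print(absolute)
```

Separating the two steps mirrors the modular pipeline described above: one stage places each person in the camera frame, and another describes the pose around that root.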

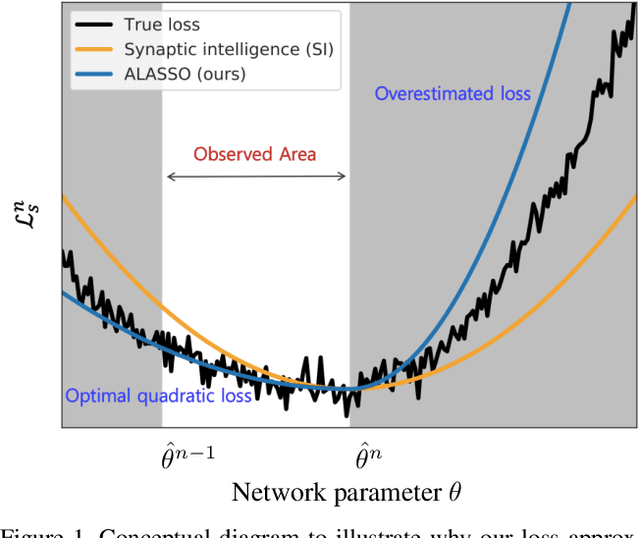

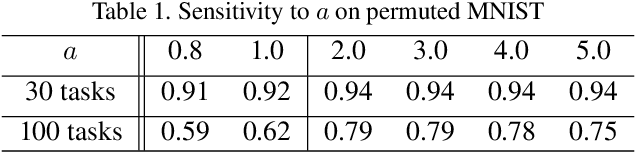

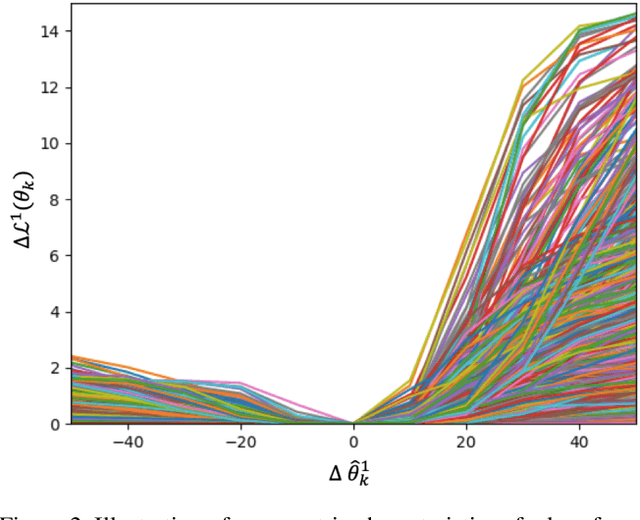

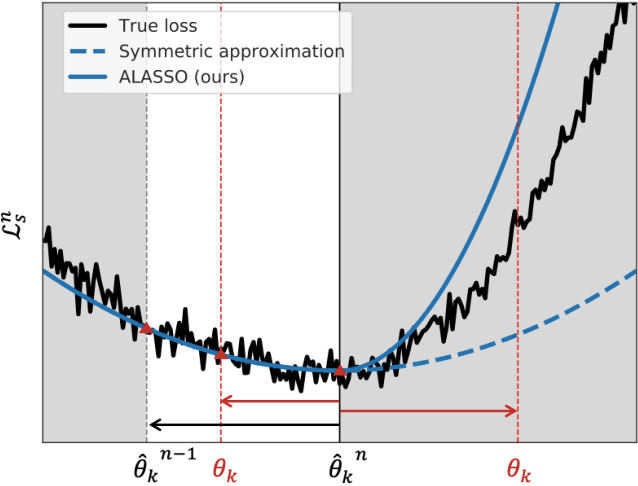

Continual Learning by Asymmetric Loss Approximation with Single-Side Overestimation

Aug 08, 2019

Abstract: Catastrophic forgetting is a critical challenge in training deep neural networks. Although continual learning has been investigated as a countermeasure to the problem, it often suffers from requirements of additional network components and weak scalability to a large number of tasks. We propose a novel approach to continual learning by approximating a true loss function based on an asymmetric quadratic function with one of its sides overestimated. Our algorithm is motivated by the empirical observation that updates of network parameters affect target loss functions asymmetrically. In the proposed continual learning framework, we estimate an asymmetric loss function for the tasks considered in the past through a proper overestimation of its unobserved side in training new tasks, while deriving the accurate model parameter for the observed side. In contrast to existing approaches, our method is free from side effects and achieves state-of-the-art results that are even close to the upper-bound performance on several challenging benchmark datasets.
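The idea of an asymmetric quadratic function with one side overestimated can be pictured with a one-dimensional sketch. The function below is only an illustration under assumptions: the name `asymmetric_quadratic`, the `overestimate` factor, and the convention that the observed side is the positive direction are all hypothetical and are not taken from the paper.

```python
def asymmetric_quadratic(theta, theta_star, curvature, overestimate, observed_side=1):
    """Quadratic penalty around theta_star whose unobserved side is scaled up.

    observed_side is +1 or -1 and marks the direction in which the old task's
    loss is assumed to be known; the opposite side is multiplied by
    `overestimate` (expected to be >= 1).
    """
    d = theta - theta_star
    base = 0.5 * curvature * d * d
    if d * observed_side >= 0:
        return base               # observed side: plain quadratic
    return overestimate * base    # unobserved side: deliberately pessimistic


# Moving the same distance in opposite directions costs different amounts.
print(asymmetric_quadratic(1.0, 0.0, curvature=2.0, overestimate=3.0))
print(asymmetric_quadratic(-1.0, 0.0, curvature=2.0, overestimate=3.0))
```

A penalty of this shape discourages parameter updates toward the side where the old task's loss was never measured, while leaving the measured side unchanged.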

Learning to Forget for Meta-Learning

Jun 13, 2019

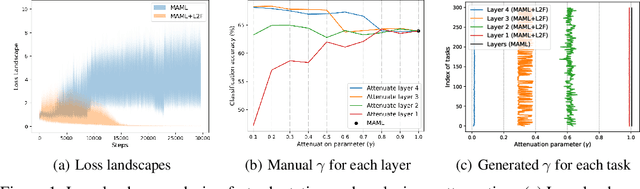

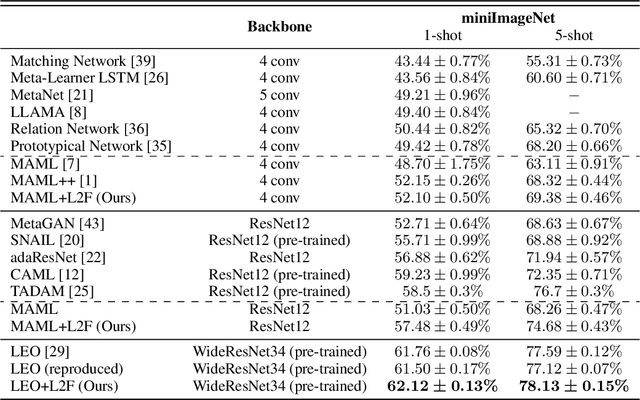

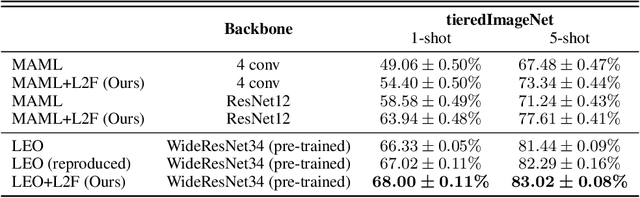

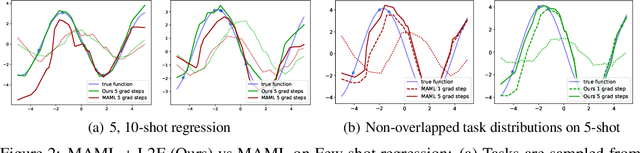

Abstract: Few-shot learning is a challenging problem where the system is required to achieve generalization from only a few examples. Meta-learning tackles the problem by learning prior knowledge shared across a distribution of tasks, which is then used to quickly adapt to unseen tasks. The model-agnostic meta-learning (MAML) algorithm formulates prior knowledge as a common initialization across tasks. However, forcibly sharing an initialization brings about conflicts between tasks and thus compromises the quality of the initialization. In this work, by observing that the extent of compromise differs among tasks and between layers of a neural network, we propose a new initialization idea that employs task-dependent layer-wise attenuation, which we call selective forgetting. The proposed attenuation scheme dynamically controls how much of the prior knowledge each layer will exploit for a given task. The experimental results demonstrate that the proposed method mitigates the conflicts and provides outstanding performance as a result. We further show that the proposed method, named L2F, can be applied to and improve other state-of-the-art MAML-based frameworks, illustrating its generalizability.
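Layer-wise attenuation of a shared initialization can be sketched as a per-layer scaling of the initial weights. In the sketch below, the layer names, the weights, and the attenuation factors are made-up values, and the factors are simply given as inputs; how they would be produced for each task is not described in the abstract and is not modeled here.

```python
def attenuate(initialization, gammas):
    """Scale each layer's initial weights by that layer's factor in [0, 1].

    A factor of 1.0 keeps the prior knowledge of a layer untouched,
    while 0.0 forgets it entirely.
    """
    attenuated = {}
    for layer, weights in initialization.items():
        gamma = gammas[layer]
        if not 0.0 <= gamma <= 1.0:
            raise ValueError(f"attenuation for {layer!r} must lie in [0, 1]")
        attenuated[layer] = [gamma * w for w in weights]
    return attenuated


# Hypothetical shared initialization and task-specific factors.
shared_init = {"conv1": [1.0, -2.0], "fc": [4.0]}
task_gammas = {"conv1": 1.0, "fc": 0.5}
task_init = attenuate(shared_init, task_gammas)
print(task_init)
```

The task-specific starting point `task_init` would then be fine-tuned on the few available examples in the usual MAML fashion.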

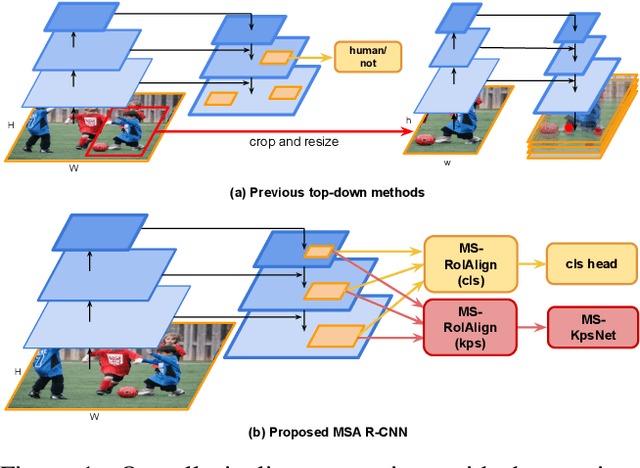

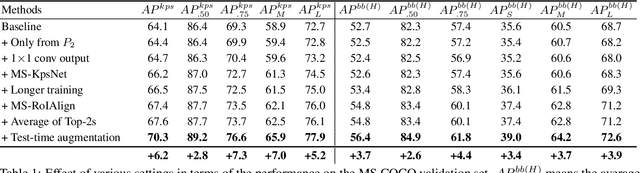

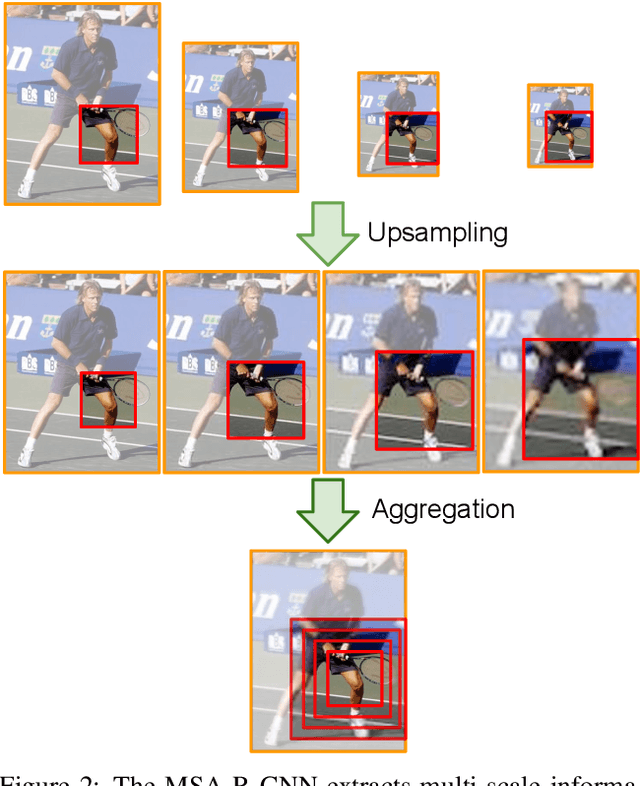

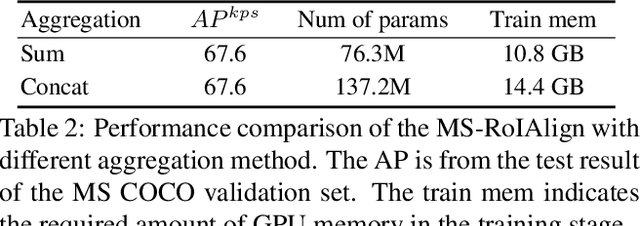

Multi-scale Aggregation R-CNN for 2D Multi-person Pose Estimation

May 10, 2019

Abstract: Multi-person pose estimation from a 2D image is challenging because it requires not only keypoint localization but also human detection. In state-of-the-art top-down methods, multi-scale information is a crucial factor for accurate pose estimation because it contains both local information around the keypoints and global information about the entire person. Although multi-scale information allows these methods to achieve state-of-the-art performance, the top-down methods still require a huge amount of computation because they need an additional human detector to feed the cropped human image to their pose estimation model. To effectively utilize multi-scale information with less computation, we propose a multi-scale aggregation R-CNN (MSA R-CNN). It consists of a multi-scale RoIAlign block (MS-RoIAlign) and a multi-scale keypoint head network (MS-KpsNet), which are designed to effectively utilize multi-scale information. Also, in contrast to previous top-down methods, the MSA R-CNN performs human detection and keypoint localization in a single model, which results in reduced computation. The proposed model achieved the best performance among single model-based methods, and its results are comparable to those of separated model-based methods with a smaller amount of computation on the publicly available 2D multi-person keypoint localization dataset.

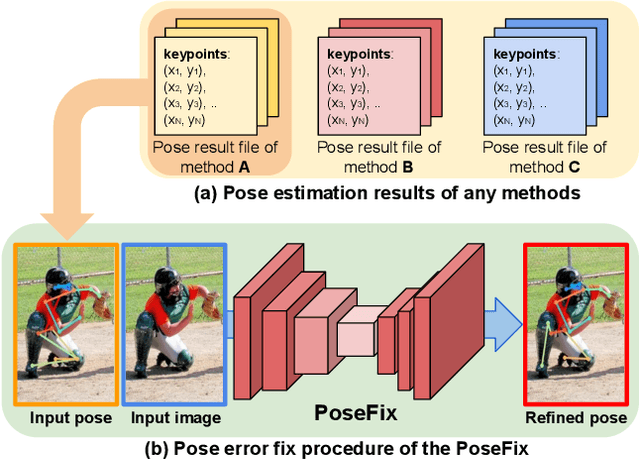

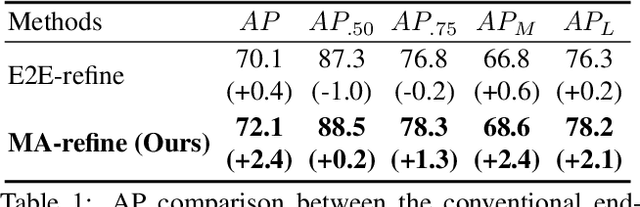

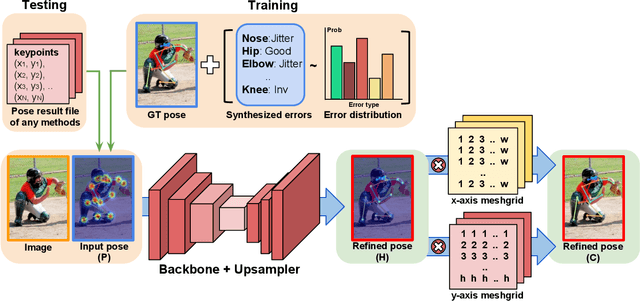

PoseFix: Model-agnostic General Human Pose Refinement Network

Dec 10, 2018

Abstract: Multi-person pose estimation from a 2D image is an essential technique for human behavior understanding. In this paper, we propose a human pose refinement network that estimates a refined pose from a tuple of an input image and an input pose. In previous methods, pose refinement was performed mainly through an end-to-end trainable multi-stage architecture. However, these methods are highly dependent on pose estimation models and require careful model design. By contrast, we propose a model-agnostic pose refinement method. According to a recent study, state-of-the-art 2D human pose estimation methods have similar error distributions. We use these error statistics as prior information to generate synthetic poses and use the synthesized poses to train our model. In the testing stage, pose estimation results of any other method can be input to the proposed method. Moreover, the proposed model does not require code or knowledge about other methods, which allows it to be easily used in the post-processing step. We show that the proposed approach achieves better performance than the conventional multi-stage refinement models and consistently improves the performance of various state-of-the-art pose estimation methods on the commonly used benchmark. We will release the code and pre-trained model for easy access.

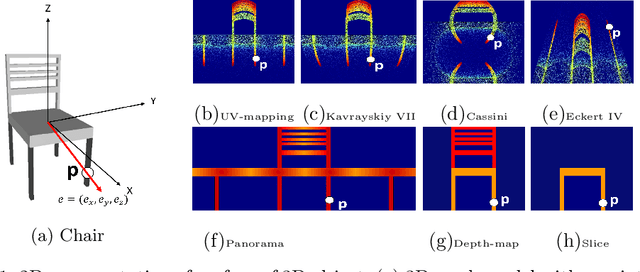

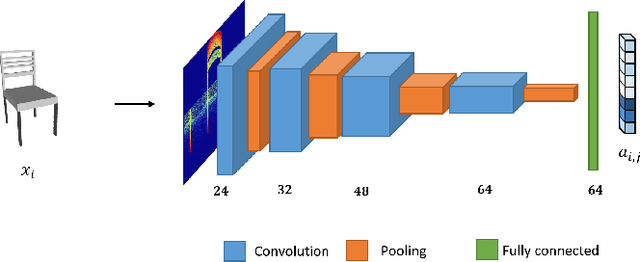

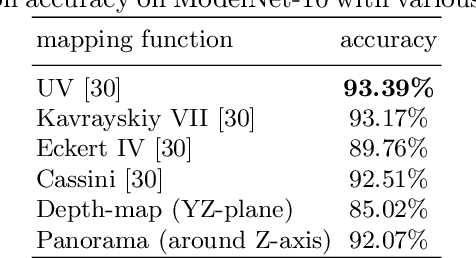

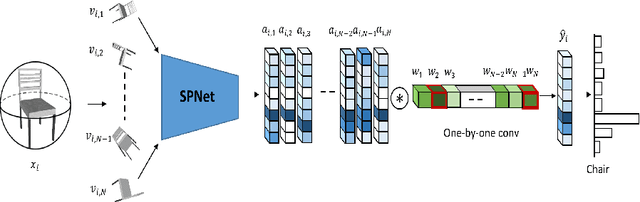

SPNet: Deep 3D Object Classification and Retrieval using Stereographic Projection

Nov 05, 2018

Abstract: We propose an efficient Stereographic Projection Neural Network (SPNet) for learning representations of 3D objects. We first transform a 3D input volume into a 2D planar image using stereographic projection. We then present a shallow 2D convolutional neural network (CNN) to estimate the object category, followed by view ensemble, which combines the responses from multiple views of the object to further enhance the predictions. Specifically, the proposed approach consists of four stages: (1) stereographic projection of a 3D object, (2) view-specific feature learning, (3) view selection, and (4) view ensemble. The proposed approach performs comparably to the state-of-the-art methods while requiring substantially less GPU memory and fewer network parameters. Despite its lightness, the experiments on 3D object classification and shape retrieval demonstrate the high performance of the proposed method.
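For readers unfamiliar with stereographic projection, the sketch below shows the textbook mapping of a point on the unit sphere onto the plane z = 0, projected from the north pole (0, 0, 1). It illustrates only this basic mapping; how SPNet places a 3D volume on a sphere and rasterizes the result into an image is not stated in the abstract, and the function name here is an assumption.

```python
import math


def stereographic_project(x, y, z):
    """Project a unit-sphere point onto the plane z = 0 from the pole (0, 0, 1)."""
    norm = math.sqrt(x * x + y * y + z * z)
    if abs(norm - 1.0) > 1e-9:
        raise ValueError("point must lie on the unit sphere")
    if abs(1.0 - z) < 1e-12:
        raise ValueError("the projection pole has no image on the plane")
    return (x / (1.0 - z), y / (1.0 - z))


# The south pole lands on the origin, and equator points stay where they are.
print(stereographic_project(0.0, 0.0, -1.0))
print(stereographic_project(1.0, 0.0, 0.0))
# Points near the north pole are pushed far from the origin.
print(stereographic_project(0.6, 0.0, 0.8))
```

Because the mapping sends the whole sphere (minus the pole) to a single plane, a 2D CNN can then operate on one planar image per view.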

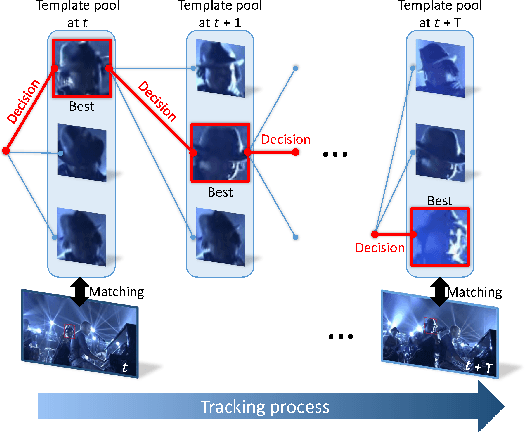

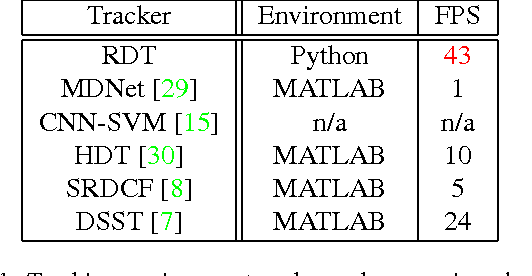

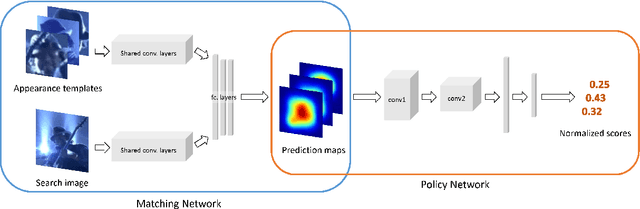

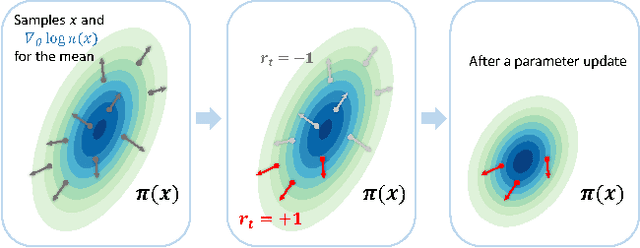

Real-time visual tracking by deep reinforced decision making

Aug 17, 2018

Abstract: One of the major challenges of the model-free visual tracking problem has been the difficulty originating from the unpredictable and drastic changes in the appearance of the target objects. Existing methods tackle this problem by updating the appearance model on-line in order to adapt to the changes in appearance. Despite the success of these methods, however, inaccurate and erroneous updates of the appearance model result in tracker drift. In this paper, we introduce a novel real-time visual tracking algorithm based on a template selection strategy constructed by deep reinforcement learning methods. The tracking algorithm utilizes this strategy to choose the appropriate template for tracking a given frame. The template selection strategy is self-learned by utilizing a simple policy gradient method on numerous training episodes randomly generated from a tracking benchmark dataset. Our proposed reinforcement learning framework is generally applicable to other confidence map based tracking algorithms. The experiment shows that our tracking algorithm runs at a real-time speed of 43 fps and that the proposed policy network effectively decides the appropriate template for successful visual tracking.
