Koji Inoue

Multilingual and Continuous Backchannel Prediction: A Cross-lingual Study

Dec 16, 2025

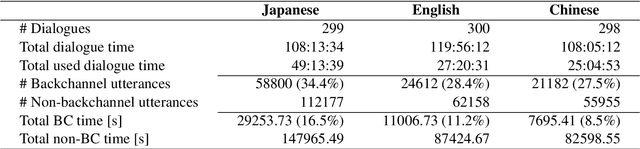

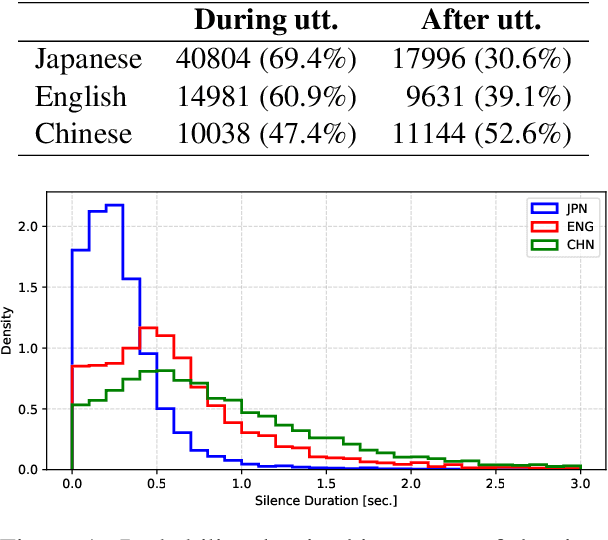

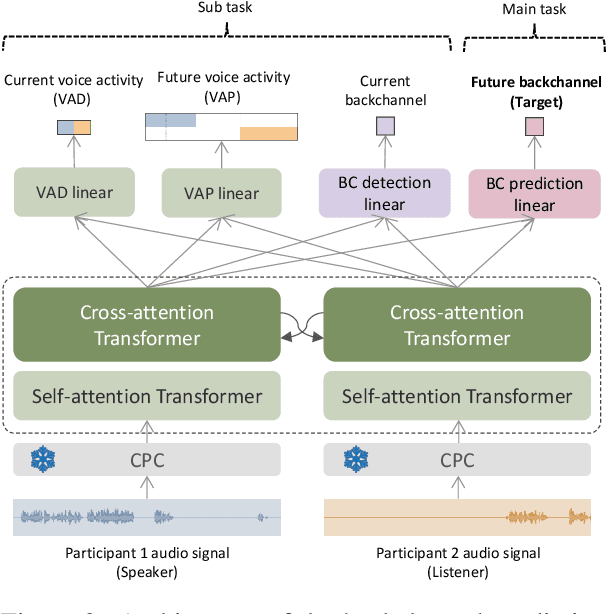

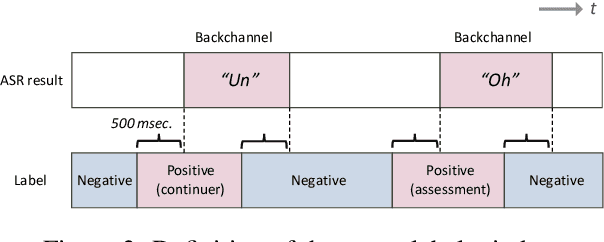

Abstract: We present a multilingual, continuous backchannel prediction model for Japanese, English, and Chinese, and use it to investigate cross-linguistic timing behavior. The model is Transformer-based and operates at the frame level, jointly trained with auxiliary tasks on approximately 300 hours of dyadic conversations. Across all three languages, the multilingual model matches or surpasses monolingual baselines, indicating that it learns both language-universal cues and language-specific timing patterns. Zero-shot transfer with two-language training remains limited, underscoring substantive cross-lingual differences. Perturbation analyses reveal distinct cue usage: Japanese relies more on short-term linguistic information, whereas English and Chinese are more sensitive to silence duration and prosodic variation; multilingual training encourages shared yet adaptable representations and reduces overreliance on pitch in Chinese. A context-length study further shows that Japanese is relatively robust to shorter contexts, while Chinese benefits markedly from longer contexts. Finally, we integrate the trained model into real-time processing software, demonstrating CPU-only inference. Together, these findings provide a unified model and empirical evidence for how backchannel timing differs across languages, informing the design of more natural, culturally aware spoken dialogue systems.

Triadic Multi-party Voice Activity Projection for Turn-taking in Spoken Dialogue Systems

Jul 10, 2025

Abstract: Turn-taking is a fundamental component of spoken dialogue; however, conventional studies mostly involve dyadic settings. This work focuses on applying voice activity projection (VAP) to predict upcoming turn-taking in triadic multi-party scenarios. The goal of VAP models is to predict the future voice activity for each speaker utilizing only acoustic data. This is the first study to extend VAP into triadic conversation. We trained multiple models on a Japanese triadic dataset where participants discussed a variety of topics. We found that the VAP trained on triadic conversation outperformed the baseline for all models but that the type of conversation affected the accuracy. This study establishes that VAP can be used for turn-taking in triadic dialogue scenarios. Future work will incorporate this triadic VAP turn-taking model into spoken dialogue systems.

Does the Appearance of Autonomous Conversational Robots Affect User Spoken Behaviors in Real-World Conference Interactions?

Mar 17, 2025

Abstract: We investigate the impact of robot appearance on users' spoken behavior during real-world interactions by comparing a human-like android, ERICA, with a less anthropomorphic humanoid, TELECO. Analyzing data from 42 participants at SIGDIAL 2024, we extracted linguistic features such as disfluencies and syntactic complexity from conversation transcripts. The results showed moderate effect sizes, suggesting that participants produced fewer disfluencies and employed more complex syntax when interacting with ERICA. Further analysis, including training classification models such as Naïve Bayes, which achieved an F1-score of 71.60%, and a feature importance analysis, highlighted the significant role of disfluencies and syntactic complexity in interactions with robots of varying human-like appearances. Discussing these findings within the frameworks of cognitive load and Communication Accommodation Theory, we conclude that designing robots to elicit more structured and fluent user speech can enhance their communicative alignment with humans.

A Noise-Robust Turn-Taking System for Real-World Dialogue Robots: A Field Experiment

Mar 08, 2025

Abstract: Turn-taking is a crucial aspect of human-robot interaction, directly influencing conversational fluidity and user engagement. While previous research has explored turn-taking models in controlled environments, their robustness in real-world settings remains underexplored. In this study, we propose a noise-robust voice activity projection (VAP) model, based on a Transformer architecture, to enhance real-time turn-taking in dialogue robots. To evaluate the effectiveness of the proposed system, we conducted a field experiment in a shopping mall, comparing the VAP system with a conventional cloud-based speech recognition system. Our analysis covered both subjective user evaluations and objective behavioral analysis. The results showed that the proposed system significantly reduced response latency, leading to a more natural conversation where both the robot and users responded faster. The subjective evaluations suggested that faster responses contribute to a better interaction experience.

An LLM Benchmark for Addressee Recognition in Multi-modal Multi-party Dialogue

Jan 28, 2025

Abstract: Handling multi-party dialogues represents a significant step for advancing spoken dialogue systems, necessitating the development of tasks specific to multi-party interactions. To address this challenge, we are constructing a multi-modal multi-party dialogue corpus of triadic (three-participant) discussions. This paper focuses on the task of addressee recognition, identifying who is being addressed to take the next turn, a critical component unique to multi-party dialogue systems. A subset of the corpus was annotated with addressee information, revealing that explicit addressees are indicated in approximately 20% of conversational turns. To evaluate the task's complexity, we benchmarked the performance of a large language model (GPT-4o) on addressee recognition. The results showed that GPT-4o achieved an accuracy only marginally above chance, underscoring the challenges of addressee recognition in multi-party dialogue. These findings highlight the need for further research to enhance the capabilities of large language models in understanding and navigating the intricacies of multi-party conversational dynamics.

Why Do We Laugh? Annotation and Taxonomy Generation for Laughable Contexts in Spontaneous Text Conversation

Jan 28, 2025

Abstract: Laughter serves as a multifaceted communicative signal in human interaction, yet its identification within dialogue presents a significant challenge for conversational AI systems. This study addresses this challenge by annotating laughable contexts in Japanese spontaneous text conversation data and developing a taxonomy to classify the underlying reasons for such contexts. Initially, multiple annotators manually labeled laughable contexts using a binary decision (laughable or non-laughable). Subsequently, an LLM was used to generate explanations for the binary annotations of laughable contexts, which were then categorized into a taxonomy comprising ten categories, including "Empathy and Affinity" and "Humor and Surprise," highlighting the diverse range of laughter-inducing scenarios. The study also evaluated GPT-4's performance in recognizing the majority labels of laughable contexts, achieving an F1 score of 43.14%. These findings contribute to the advancement of conversational AI by establishing a foundation for more nuanced recognition and generation of laughter, ultimately fostering more natural and engaging human-AI interactions.

Human-Like Embodied AI Interviewer: Employing Android ERICA in Real International Conference

Dec 13, 2024

Abstract: This paper introduces a human-like embodied AI interviewer that integrates android robots equipped with advanced conversational capabilities, including attentive listening, conversational repairs, and user fluency adaptation. Moreover, it can analyze and present results post-interview. We conducted a real-world case study at SIGDIAL 2024 with 42 participants, of whom 69% reported positive experiences. This study demonstrated the system's effectiveness in conducting interviews just like a human and marked the first employment of such a system at an international conference. The demonstration video is available at https://youtu.be/jCuw9g99KuE.

Yeah, Un, Oh: Continuous and Real-time Backchannel Prediction with Fine-tuning of Voice Activity Projection

Oct 21, 2024

Abstract: In human conversations, short backchannel utterances such as "yeah" and "oh" play a crucial role in facilitating smooth and engaging dialogue. These backchannels signal attentiveness and understanding without interrupting the speaker, making their accurate prediction essential for creating more natural conversational agents. This paper proposes a novel method for real-time, continuous backchannel prediction using a fine-tuned Voice Activity Projection (VAP) model. While existing approaches have relied on turn-based or artificially balanced datasets, our approach predicts both the timing and type of backchannels in a continuous and frame-wise manner on unbalanced, real-world datasets. We first pre-train the VAP model on a general dialogue corpus to capture conversational dynamics and then fine-tune it on a specialized dataset focused on backchannel behavior. Experimental results demonstrate that our model outperforms baseline methods in both timing and type prediction tasks, achieving robust performance in real-time environments. This research offers a promising step toward more responsive and human-like dialogue systems, with implications for interactive spoken dialogue applications such as virtual assistants and robots.

Analysis and Detection of Differences in Spoken User Behaviors between Autonomous and Wizard-of-Oz Systems

Oct 04, 2024

Abstract: This study examined users' behavioral differences in a large corpus of Japanese human-robot interactions, comparing interactions between a tele-operated robot and an autonomous dialogue system. We analyzed user spoken behaviors in both attentive listening and job interview dialogue scenarios. Results revealed significant differences in metrics such as speech length, speaking rate, fillers, backchannels, disfluencies, and laughter between operator-controlled and autonomous conditions. Furthermore, we developed predictive models to distinguish between operator and autonomous system conditions. Our models demonstrated higher accuracy and precision compared to the baseline model, with several models also achieving a higher F1 score than the baseline.

Robotic Backchanneling in Online Conversation Facilitation: A Cross-Generational Study

Sep 25, 2024

Abstract: Japan faces many challenges related to its aging society, including increasing rates of cognitive decline in the population and a shortage of caregivers. Efforts have begun to explore solutions using artificial intelligence (AI), especially socially embodied intelligent agents and robots that can communicate with people. Yet, there has been little research on the compatibility of these agents with older adults in various everyday situations. To this end, we conducted a user study to evaluate a robot that functions as a facilitator for a group conversation protocol designed to prevent cognitive decline. We modified the robot to use backchanneling, a natural human way of speaking, to increase receptiveness of the robot and enjoyment of the group conversation experience. We conducted a cross-generational study with young adults and older adults. Qualitative analyses indicated that younger adults perceived the backchanneling version of the robot as kinder, more trustworthy, and more acceptable than the non-backchanneling robot. Finally, we found that the robot's backchanneling elicited nonverbal backchanneling in older participants.
