Karl Löwenmark

Agent-based Condition Monitoring Assistance with Multimodal Industrial Database Retrieval Augmented Generation

Jun 10, 2025

Abstract: Condition monitoring (CM) plays a crucial role in ensuring reliability and efficiency in the process industry. Although computerised maintenance systems effectively detect and classify faults, tasks such as fault severity estimation and maintenance decisions still largely depend on human expert analysis. The analysis and decision making automatically performed by current systems typically exhibit considerable uncertainty and high false alarm rates, leading to increased workload and reduced efficiency. This work integrates large language model (LLM)-based reasoning agents with CM workflows to address analyst and industry needs, namely reducing false alarms, enhancing fault severity estimation, improving decision support, and offering explainable interfaces. We propose MindRAG, a modular framework combining multimodal retrieval-augmented generation (RAG) with novel vector store structures designed specifically for CM data. The framework leverages existing annotations and maintenance work orders as surrogates for labels in a supervised learning protocol, addressing the common challenge of training predictive models on unlabelled and noisy real-world datasets. The primary contributions are: (1) an approach for structuring industrial CM data into a semi-structured multimodal vector store compatible with LLM-driven workflows; (2) multimodal RAG techniques tailored for CM data; (3) practical reasoning agents capable of addressing real-world CM queries; and (4) an experimental framework for integrating and evaluating such agents in realistic industrial scenarios. Preliminary results, evaluated with the help of an experienced analyst, indicate that MindRAG provides meaningful decision support for more efficient management of alarms, thereby improving the interpretability of CM systems.

Technical Language Supervision for Intelligent Fault Diagnosis in Process Industry

Dec 11, 2021

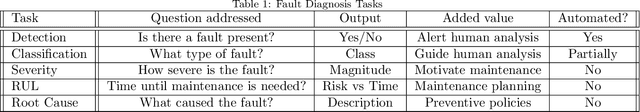

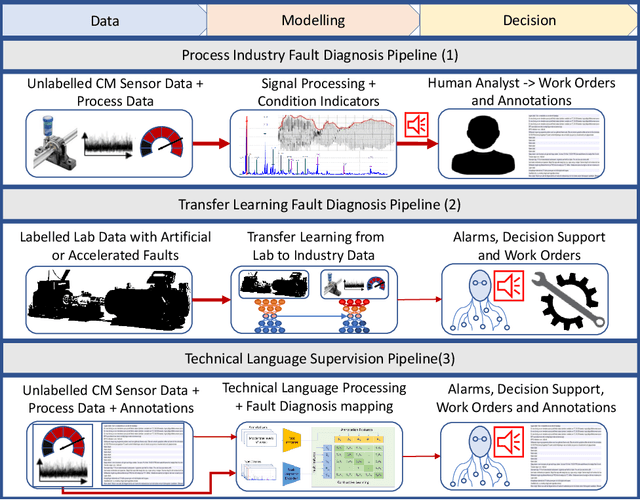

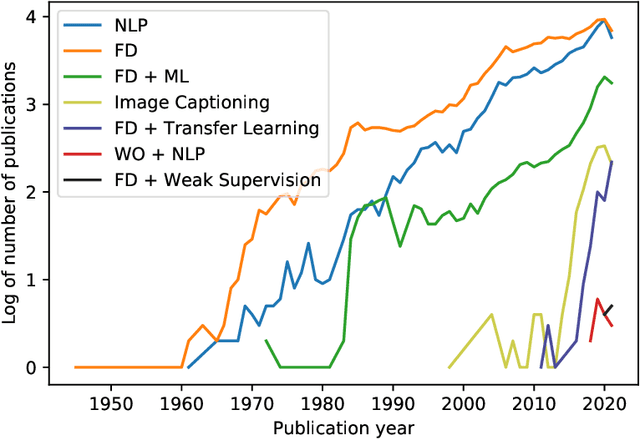

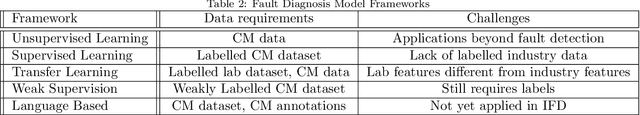

Abstract: In the process industry, condition monitoring systems with automated fault diagnosis methods assist human experts and thereby improve maintenance efficiency, process sustainability, and workplace safety. Improving the automated fault diagnosis methods using data and machine learning-based models is a central aspect of intelligent fault diagnosis (IFD). A major challenge in IFD is to develop realistic datasets with the accurate labels needed to train and validate models, and to transfer models trained with labeled lab data to heterogeneous process industry environments. However, fault descriptions and work orders written by domain experts are increasingly digitized in modern condition monitoring systems, for example in the context of rotating equipment monitoring. Thus, domain-specific knowledge about fault characteristics and severities exists as technical language annotations in industrial datasets. Furthermore, recent advances in natural language processing enable weakly supervised model optimization using natural language annotations, most notably in the form of natural language supervision (NLS). This creates a timely opportunity to develop technical language supervision (TLS) solutions for IFD systems grounded in industrial data, for example as a complement to pre-training with lab data to address problems like overfitting and inaccurate out-of-sample generalisation. We survey the literature and identify a considerable improvement in the maturity of NLS over the last two years, facilitating applications beyond natural language; a rapid development of weak supervision methods; and transfer learning as a current trend in IFD which can benefit from these developments. Finally, we describe a framework for the integration of TLS in IFD which is inspired by recent NLS innovations.