Kari Tammi

Trajectory-based Road Autolabeling with Lidar-Camera Fusion in Winter Conditions

Dec 03, 2024

Abstract: Robust road segmentation in all road conditions is required for safe autonomous driving and advanced driver assistance systems. Supervised deep learning methods provide accurate road segmentation in the domain of their training data but cannot be trusted in out-of-distribution scenarios. Including the whole distribution in the training set is challenging as each sample must be labeled by hand. Trajectory-based self-supervised methods offer a potential solution as they can learn from the traversed route without manual labels. However, existing trajectory-based methods use learning schemes that rely only on the camera or only on the lidar. In this paper, trajectory-based learning is implemented jointly with lidar and camera for increased performance. Our method outperforms recent standalone camera- and lidar-based methods when evaluated with a challenging winter driving dataset including countryside and suburb driving scenes. The source code is available at https://github.com/eerik98/lidar-camera-road-autolabeling.git

DynaHull: Density-centric Dynamic Point Filtering in Point Clouds

Jan 15, 2024

Abstract: In the field of indoor robotics, accurately navigating and mapping in dynamic environments using point clouds can be a challenging task due to the presence of dynamic points. These dynamic points are often represented by people in indoor environments, but in industrial settings with moving machinery, there can be various types of dynamic points. This study introduces DynaHull, a novel technique designed to enhance indoor mapping accuracy by effectively removing dynamic points from point clouds. DynaHull works by leveraging the observation that, over multiple scans, stationary points have a higher density compared to dynamic ones. Furthermore, DynaHull addresses mapping challenges related to unevenly distributed points by clustering the map into smaller sections. In each section, the density factor of each point is determined by dividing the number of neighbors by the volume these neighboring points occupy using a convex hull method. The algorithm removes the dynamic points using an adaptive threshold based on the point count of each cluster, thus reducing the false positives. The performance of DynaHull was compared to state-of-the-art techniques, such as ERASOR, Removert, OctoMap, and a baseline statistical outlier removal from Open3D, by comparing each method to the ground truth map created during a low activity period in which only a few dynamic points were present. The results indicated that DynaHull outperformed these techniques in various metrics, notably in the Earth Mover's Distance. This research contributes to indoor robotics by providing efficient methods for dynamic point removal, essential for accurate mapping and localization in dynamic environments.
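The density factor described in the DynaHull abstract (neighbor count divided by the convex hull volume of those neighbors) can be illustrated with a minimal sketch. This is not the authors' implementation: it is simplified to 2D, so the hull area stands in for the hull volume, and the fixed-radius neighbor search, the zero value for degenerate neighborhoods, and all function names are assumptions made for illustration.

```python
import math

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def hull_area(hull):
    """Shoelace formula; 2D stand-in for the 3D hull volume."""
    if len(hull) < 3:
        return 0.0
    total = 0.0
    for i in range(len(hull)):
        x1, y1 = hull[i]
        x2, y2 = hull[(i + 1) % len(hull)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def density_factor(point, cloud, radius):
    """Neighbor count divided by the area the neighbors occupy.

    The radius search is an assumption; the abstract does not say how
    neighbors are selected.
    """
    neighbors = [q for q in cloud if q != point and math.dist(point, q) <= radius]
    area = hull_area(convex_hull(neighbors))
    if area == 0.0:
        return 0.0  # assumption: too few or collinear neighbors count as sparse
    return len(neighbors) / area
```

In this sketch a point inside a densely sampled region receives a higher density factor than a point surrounded by only a few returns, which is the property the abstract relies on to separate stationary from dynamic points. The adaptive per-cluster threshold is not shown because the abstract does not specify it.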

TADAP: Trajectory-Aided Drivable area Auto-labeling with Pre-trained self-supervised features in winter driving conditions

Dec 20, 2023

Abstract: Detection of the drivable area in all conditions is crucial for autonomous driving and advanced driver assistance systems. However, the amount of labeled data in adverse driving conditions is limited, especially in winter, and supervised methods generalize poorly to conditions outside the training distribution. For easy adaptation to all conditions, the need for human annotation should be removed from the learning process. In this paper, Trajectory-Aided Drivable area Auto-labeling with Pre-trained self-supervised features (TADAP) is presented for automated annotation of the drivable area in winter driving conditions. A sample of the drivable area is extracted based on the trajectory estimate from the global navigation satellite system. Similarity with the sample area is determined based on pre-trained self-supervised visual features. Image areas similar to the sample area are considered to be drivable. These TADAP labels were evaluated with a novel winter-driving dataset, collected in varying driving scenes. A prediction model trained with the TADAP labels achieved a +9.6 improvement in intersection over union compared to the previous state-of-the-art of self-supervised drivable area detection.
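The labeling idea in the TADAP abstract (take a sample of the drivable area from the trajectory, then mark image areas with similar features as drivable) can be sketched roughly as below. The abstract does not state the similarity measure or the decision rule, so the cosine similarity, the mean-feature prototype, the fixed threshold, and the names `autolabel_patches` and `trajectory_ids` are all assumptions for illustration only.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def autolabel_patches(features, trajectory_ids, threshold=0.8):
    """Label each image patch as drivable (True) or not (False).

    features: dict mapping patch id -> feature vector (e.g. from a
        pre-trained self-supervised backbone).
    trajectory_ids: ids of patches covered by the projected trajectory.
    threshold: hypothetical similarity cut-off, not taken from the paper.
    """
    dim = len(next(iter(features.values())))
    # Prototype of the drivable area: mean feature of the trajectory patches.
    prototype = [
        sum(features[i][d] for i in trajectory_ids) / len(trajectory_ids)
        for d in range(dim)
    ]
    return {
        pid: cosine_similarity(vec, prototype) >= threshold
        for pid, vec in features.items()
    }
```

A call such as `autolabel_patches(features, ["a", "b"])` returns one boolean per patch; the resulting labels could then serve as training targets for a prediction model, as the abstract describes.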

Multi-Echo Denoising in Adverse Weather

May 23, 2023

Abstract: Adverse weather can introduce noise into light detection and ranging (LiDAR) data. This is a problem since LiDAR is used in many outdoor applications, e.g. object detection and mapping. We propose the task of multi-echo denoising, where the goal is to pick the echo that represents the objects of interest and discard other echoes. Thus, the idea is to pick points from alternative echoes that are not available in standard strongest echo point clouds due to the noise. In an intuitive sense, we are trying to see through the adverse weather. To achieve this goal, we propose a novel self-supervised deep learning method and the characteristics similarity regularization method to boost its performance. Based on extensive experiments on a semi-synthetic dataset, our method achieves superior performance compared to the state-of-the-art in self-supervised adverse weather denoising (23% improvement). Moreover, the experiments with a real multi-echo adverse weather dataset prove the efficacy of multi-echo denoising. Our work enables more reliable point cloud acquisition in adverse weather and thus promises safer autonomous driving and driving assistance systems in such conditions. The code is available at https://github.com/alvariseppanen/SMEDNet

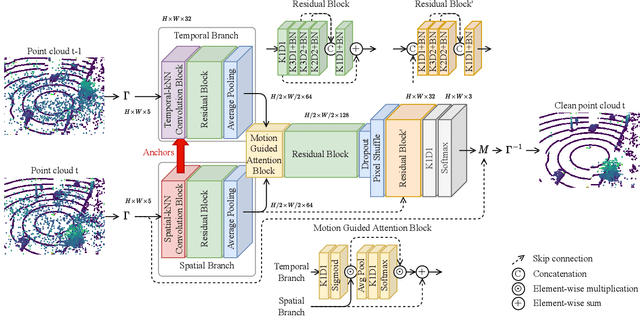

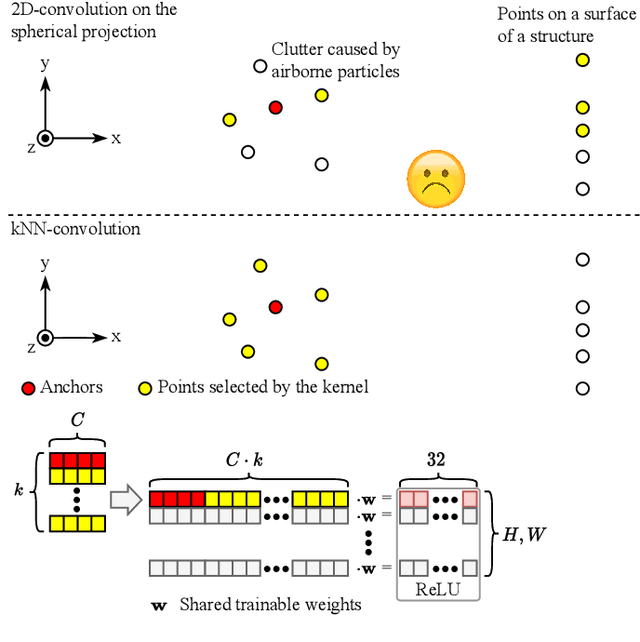

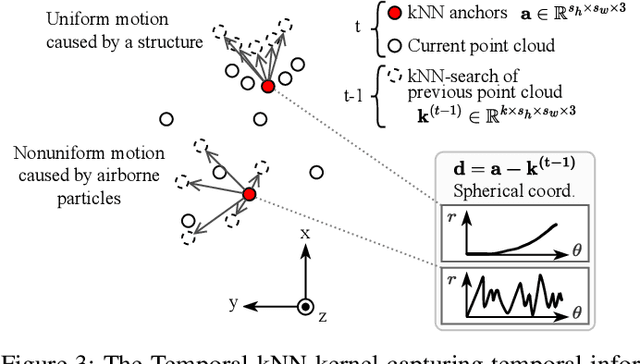

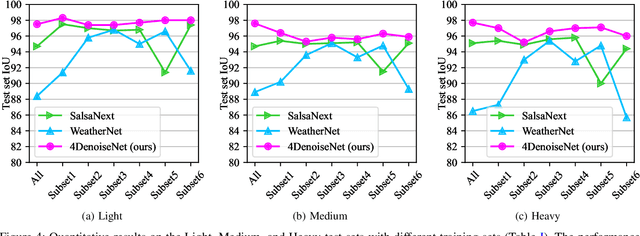

4DenoiseNet: Adverse Weather Denoising from Adjacent Point Clouds

Sep 15, 2022

Abstract: Reliable point cloud data is essential for perception tasks, e.g. in robotics and autonomous driving applications. Adverse weather causes a specific type of noise to light detection and ranging (LiDAR) sensor data, which degrades the quality of the point clouds significantly. To address this issue, this letter presents a novel point cloud adverse weather denoising deep learning algorithm (4DenoiseNet). Our algorithm takes advantage of the time dimension unlike deep learning adverse weather denoising methods in the literature. It performs about 10% better in terms of intersection over union metric compared to the previous work and is more computationally efficient. These results are achieved on our novel SnowyKITTI dataset, which has over 40000 adverse weather annotated point clouds. Moreover, strong qualitative results on the Canadian Adverse Driving Conditions dataset indicate good generalizability to domain shifts and to different sensor intrinsics.
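For reference, the intersection over union metric cited in this abstract can be computed for binary per-point labels as in the minimal sketch below. The flat-list label representation and the convention of returning 1.0 when both label sets are empty are assumptions, not details from the paper.

```python
def intersection_over_union(pred, target):
    """IoU between two equal-length sequences of binary labels.

    pred, target: per-point labels where a truthy value marks the
        positive class (e.g. a point classified as weather noise).
    """
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    # Assumed convention: two empty label sets agree perfectly.
    return intersection / union if union else 1.0
```

For example, `intersection_over_union([1, 1, 0, 0], [1, 0, 1, 0])` has one point in the intersection and three in the union.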

No GPU? No problem: an ultra fast 3D detection of road users with a simple proposal generator and energy-based out-of-distribution PointNets

Jun 06, 2022

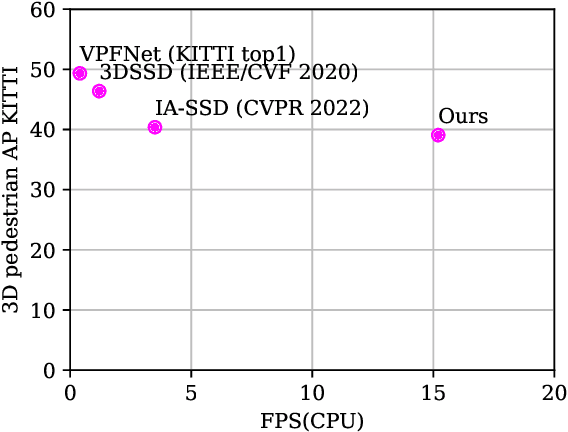

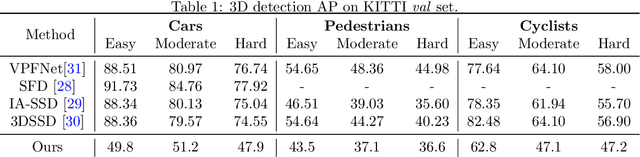

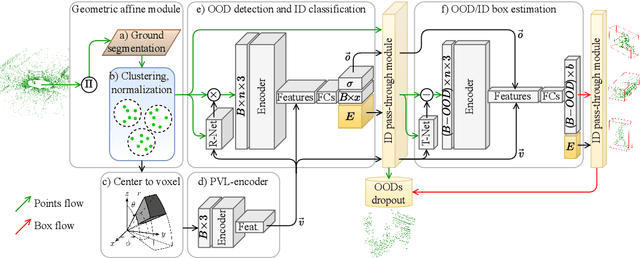

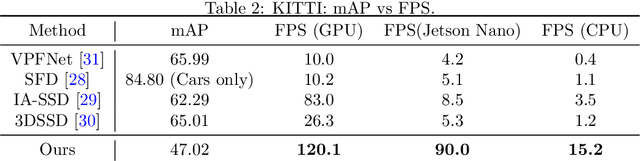

Abstract: This paper presents a novel architecture for point cloud road user detection, which is based on a classical point cloud proposal generator approach that utilizes simple geometrical rules. New methods are coupled with this technique to achieve extremely small computational requirements and an mAP that is comparable to the state-of-the-art. The idea is to specifically exploit geometrical rules in hopes of faster performance. The typical downsides of this approach, e.g. global context loss, are tackled in this paper, and solutions are presented. This approach allows real-time performance on a single-core CPU, which is not the case with end-to-end solutions presented in the state-of-the-art. We have evaluated the performance of the method with the public KITTI dataset, and with our own annotated dataset collected with a small mobile robot platform. Moreover, we also present a novel ground segmentation method, which is evaluated with the public SemanticKITTI dataset.
