Kannappan Palaniappan

Automated Feature Tracking for Real-Time Kinematic Analysis and Shape Estimation of Carbon Nanotube Growth

Aug 26, 2025

Abstract: Carbon nanotubes (CNTs) are critical building blocks in nanotechnology, yet the characterization of their dynamic growth is limited by the experimental challenges of measuring nanoscale motion with scanning electron microscopy (SEM) imaging. Existing ex situ methods offer only static analysis, while in situ techniques often require manual initialization and lack continuous per-particle trajectory decomposition. We present Visual Feature Tracking (VFTrack), an in situ real-time particle tracking framework that automatically detects and tracks individual CNT particles in SEM image sequences. VFTrack integrates handcrafted or deep feature detectors and matchers within a particle tracking framework to enable kinematic analysis of CNT micropillar growth. A systematic evaluation using 13,540 manually annotated trajectories identifies the ALIKED detector with the LightGlue matcher as an optimal combination (F1-score of 0.78, $\alpha$-score of 0.89). VFTrack motion vectors, decomposed into axial growth, lateral drift, and oscillations, facilitate the calculation of heterogeneous regional growth rates and the reconstruction of evolving CNT pillar morphologies. This work advances automated nanomaterial characterization, bridging the gap between physics-based models and experimental observation to enable real-time optimization of CNT synthesis.
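The abstract does not say how the motion vectors are decomposed, so the following is only a minimal sketch of one plausible reading. It assumes a known growth axis (here the upward image direction), treats lateral drift as the straight-line trend of the perpendicular displacement, and treats oscillation as the residual around that trend. The function name `decompose_track` and all of these definitions are illustrative assumptions, not VFTrack's actual method.

```python
from math import hypot


def decompose_track(points, axis=(0.0, -1.0)):
    """Split one 2D track into axial, lateral-drift, and oscillation series.

    points: list of (x, y) positions of a tracked particle, one per frame.
    axis:   assumed growth direction (hypothetical default: image "up").
    Returns three lists, each with one value per frame.
    """
    if len(points) < 2:
        raise ValueError("need at least two positions")
    norm = hypot(axis[0], axis[1])
    ax, ay = axis[0] / norm, axis[1] / norm   # unit growth axis
    px, py = -ay, ax                          # unit perpendicular axis
    x0, y0 = points[0]

    # Project the displacement from the first frame onto both axes.
    axial = [(x - x0) * ax + (y - y0) * ay for x, y in points]
    lateral = [(x - x0) * px + (y - y0) * py for x, y in points]

    # Assumption: drift is the linear trend from the first to the last frame;
    # oscillation is whatever lateral motion remains around that trend.
    n = len(points) - 1
    drift = [lateral[-1] * i / n for i in range(n + 1)]
    oscillation = [lat - d for lat, d in zip(lateral, drift)]
    return axial, drift, oscillation


track = [(0, 0), (1, -1), (0, -2), (1, -3), (2, -4)]
axial, drift, oscillation = decompose_track(track)
```

Under these assumptions, a per-region growth rate could be estimated by averaging the final axial values of the tracks in that region and dividing by the elapsed time.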

Predicting mechanical properties of Carbon Nanotube (CNT) images Using Multi-Layer Synthetic Finite Element Model Simulations

Jul 16, 2023

Abstract: We present a pipeline for predicting mechanical properties of vertically oriented carbon nanotube (CNT) forest images using a deep learning model for artificial intelligence (AI)-based materials discovery. Our approach incorporates an innovative data augmentation technique based on multi-layer synthetic (MLS), or quasi-2.5D, images generated by blending 2D synthetic images. The MLS images more closely resemble 3D synthetic and real scanning electron microscopy (SEM) images of CNTs, but without the computational cost of expensive 3D simulations or experiments. Mechanical properties such as stiffness and buckling load for the MLS images are estimated using a physics-based model. The proposed deep learning architecture, CNTNeXt, builds upon our previous CNTNet neural network, using a ResNeXt feature representation followed by a random forest regression estimator. Our machine learning approach, which predicts CNT physical properties from a blended set of synthetic images, is expected to outperform single synthetic image-based learning when predicting mechanical properties of real SEM images. This has the potential to accelerate understanding and control of CNT forest self-assembly for diverse applications.

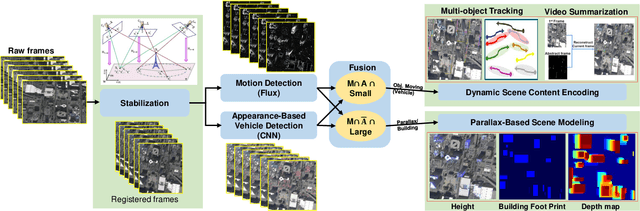

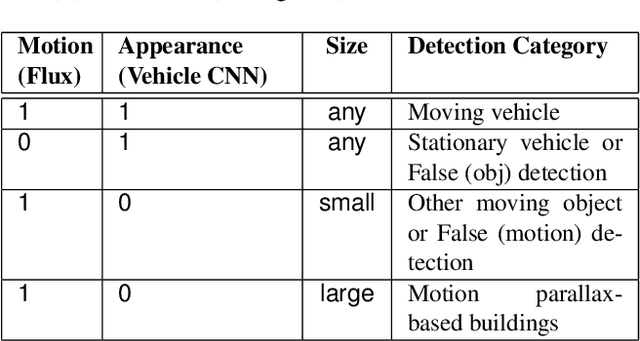

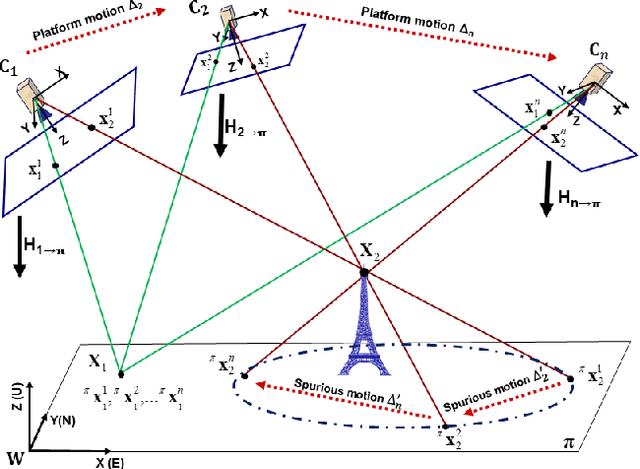

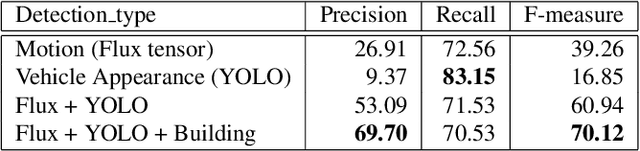

Multi-Cue Vehicle Detection for Semantic Video Compression In Georegistered Aerial Videos

Jul 02, 2019

Abstract: Detection of moving objects such as vehicles in videos acquired from an airborne camera is very useful for video analytics applications. Using fast, low-power algorithms for onboard moving object detection would also provide region-of-interest-based semantic information for scene-content-aware image compression. This would enable more efficient and flexible communication link utilization in low-bandwidth airborne cloud computing networks. Despite recent advances in both UAV or drone platforms and imaging sensor technologies, vehicle detection from aerial video remains challenging due to small object sizes, platform motion and camera jitter, obscurations, scene complexity, and degraded imaging conditions. This paper proposes an efficient moving vehicle detection pipeline that fuses appearance-based and motion-based detections in a complementary manner, using deep learning combined with flux tensor spatio-temporal filtering. Our proposed multi-cue pipeline is able to detect moving vehicles with high precision and recall, while filtering out false positives such as parked vehicles, through intelligent fusion. Experimental results show that incorporating contextual information about moving vehicles enables high semantic compression ratios of over 100:1 with high image fidelity, for better utilization of limited-bandwidth air-to-ground network links.

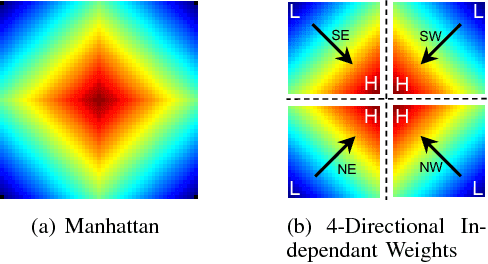

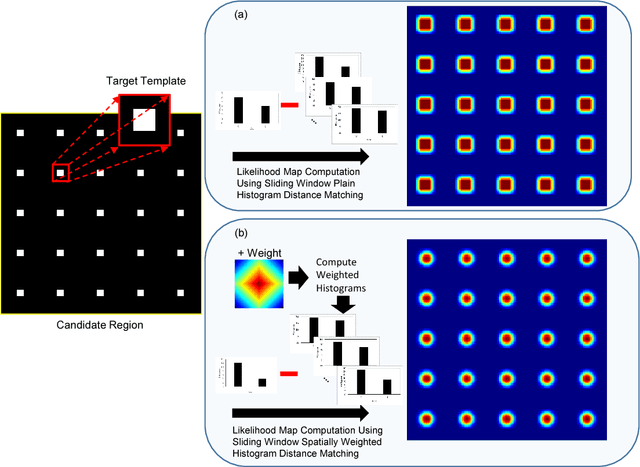

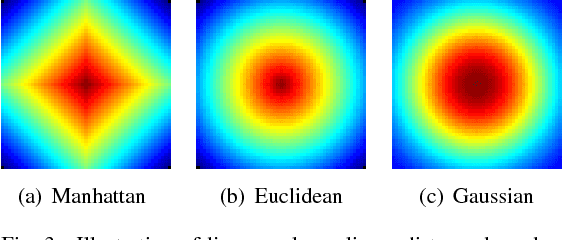

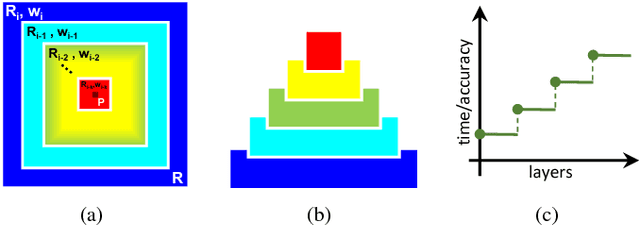

Multi-Scale Spatially Weighted Local Histograms in O(1)

May 09, 2017

Abstract: Weighting each pixel's contribution according to its location is a key feature in many fundamental image processing tasks, including filtering, object modeling, and distance matching. Several techniques have been proposed that incorporate spatial information to increase the accuracy and boost the performance of detection, tracking, and recognition systems, at the cost of speed. However, it is still not clear how to efficiently extract weighted local histograms in constant time using the integral histogram. This paper presents a novel algorithm to accurately compute multi-scale spatially weighted local histograms in constant time using the Spatially Weighted Integral Histogram (SWIH) for fast search. We applied our spatially weighted integral histogram approach to fast tracking and obtained more accurate and robust target localization results than with a plain histogram.
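For context, the plain (unweighted) integral histogram that the abstract builds on can be sketched as follows. This is not the SWIH algorithm itself, whose weighting scheme the abstract does not describe. The sketch assumes the image is a small grid of already-quantized bin indices, and the function names are illustrative. After one pass over the image, the histogram of any axis-aligned rectangle is obtained from four table lookups per bin, so the cost does not depend on the rectangle's size.

```python
def integral_histogram(image, bins):
    """Build a (rows+1) x (cols+1) table of cumulative per-bin counts.

    image: 2D list of integer bin indices in range(bins).
    Entry ih[r][c] holds the histogram of image[0:r][0:c].
    """
    rows, cols = len(image), len(image[0])
    ih = [[[0] * bins for _ in range(cols + 1)] for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            cell = ih[r + 1][c + 1]
            up, left, diag = ih[r][c + 1], ih[r + 1][c], ih[r][c]
            for b in range(bins):
                # Inclusion-exclusion over the three neighbouring prefixes.
                cell[b] = up[b] + left[b] - diag[b]
            cell[image[r][c]] += 1  # count the current pixel
    return ih


def region_histogram(ih, top, left, bottom, right):
    """Histogram of rows top..bottom-1 and columns left..right-1."""
    bins = len(ih[0][0])
    return [
        ih[bottom][right][b] - ih[top][right][b]
        - ih[bottom][left][b] + ih[top][left][b]
        for b in range(bins)
    ]


image = [[0, 1, 1],
         [2, 0, 1],
         [2, 2, 0]]
ih = integral_histogram(image, bins=3)
print(region_histogram(ih, 1, 0, 3, 2))  # rows 1-2, cols 0-1 -> [1, 0, 3]
```

Because every pixel in the queried rectangle contributes equally here, this baseline corresponds to the "plain histogram" that the abstract compares against.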
