Kai Ming Ting

Isolation Distributional Kernel: A New Tool for Point & Group Anomaly Detection

Sep 24, 2020

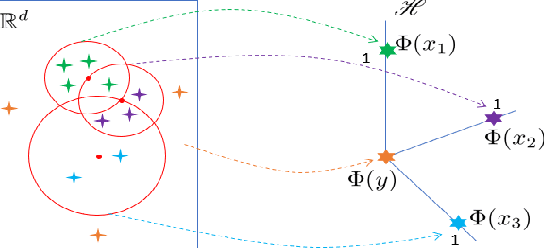

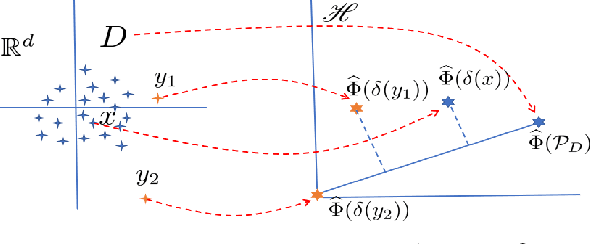

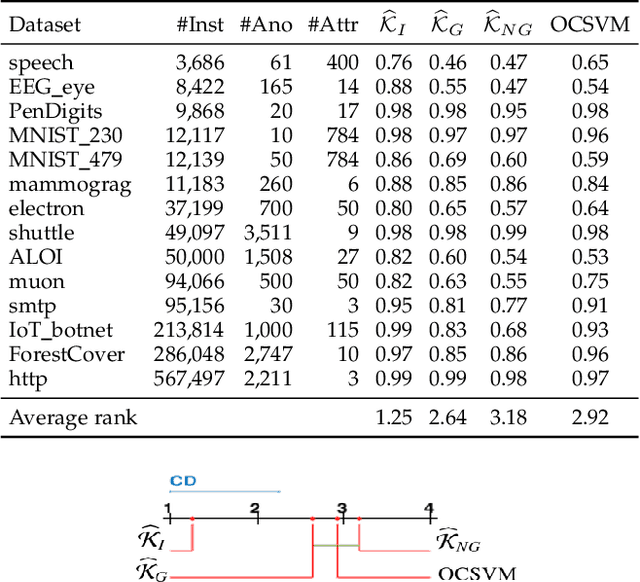

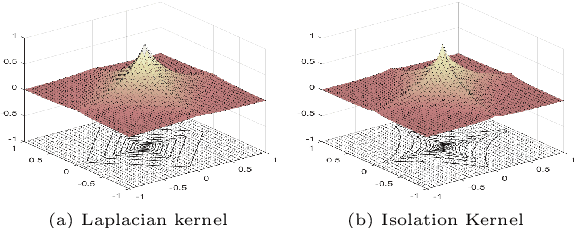

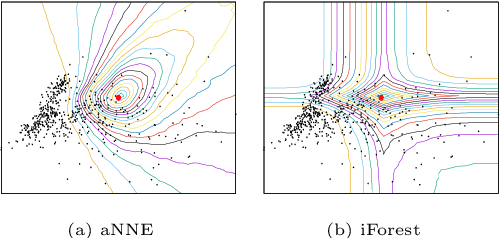

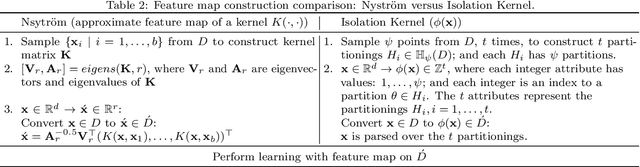

Abstract: We introduce Isolation Distributional Kernel as a new way to measure the similarity between two distributions. Existing approaches based on kernel mean embedding, which convert a point kernel to a distributional kernel, have two key issues: the point kernel employed has a feature map with intractable dimensionality; and it is *data independent*. This paper shows that Isolation Distributional Kernel (IDK), which is based on a *data dependent* point kernel, addresses both key issues. We demonstrate IDK's efficacy and efficiency as a new tool for kernel based anomaly detection for both point and group anomalies. Without explicit learning, using IDK alone outperforms the existing kernel based point anomaly detector OCSVM and other kernel mean embedding methods that rely on the Gaussian kernel. For group anomaly detection, we introduce an IDK based detector called IDK$^2$. It reformulates the problem of group anomaly detection in input space into the problem of point anomaly detection in Hilbert space, without the need for learning. IDK$^2$ runs orders of magnitude faster than the group anomaly detector OCSMM. We reveal for the first time that an effective kernel based anomaly detector based on kernel mean embedding must employ a characteristic kernel which is data dependent.
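The idea of turning a point kernel with a finite feature map into a distributional kernel via kernel mean embedding can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a nearest-neighbour (Voronoi) partitioning as the isolation mechanism, and the names `build_partitionings`, `feature_map`, `mean_embedding`, `idk`, and the parameters `psi` and `t` are hypothetical choices made for illustration.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def build_partitionings(data, psi, t, rng):
    """Draw t random subsamples of psi points; each one defines a Voronoi partitioning.
    Assumption: nearest-neighbour partitioning stands in for the isolation mechanism."""
    return [rng.sample(data, psi) for _ in range(t)]

def feature_map(x, partitionings):
    """Concatenate t one-hot vectors, one per partitioning, marking the cell x falls into."""
    phi = []
    for centres in partitionings:
        nearest = min(range(len(centres)), key=lambda i: sq_dist(x, centres[i]))
        phi.extend(1.0 if i == nearest else 0.0 for i in range(len(centres)))
    return phi

def mean_embedding(points, partitionings):
    """Kernel mean embedding: the average feature map over a sample of points."""
    maps = [feature_map(p, partitionings) for p in points]
    return [sum(col) / len(maps) for col in zip(*maps)]

def idk(sample_s, sample_t, partitionings):
    """Similarity of two samples: dot product of their mean embeddings, scaled to [0, 1]."""
    mu_s = mean_embedding(sample_s, partitionings)
    mu_t = mean_embedding(sample_t, partitionings)
    return sum(a * b for a, b in zip(mu_s, mu_t)) / len(partitionings)

rng = random.Random(0)
cluster_a = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
cluster_b = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
far_group = [(rng.gauss(8, 1), rng.gauss(8, 1)) for _ in range(200)]

parts = build_partitionings(cluster_a + cluster_b + far_group, psi=16, t=100, rng=rng)
sim_same = idk(cluster_a, cluster_b, parts)  # two samples from the same distribution
sim_diff = idk(cluster_a, far_group, parts)  # samples from well-separated distributions
print(sim_same, sim_diff)
```

Because the feature map has a fixed length of `t * psi`, each distribution is summarised by one vector of that length, and comparing two distributions costs a single dot product.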

A new effective and efficient measure for outlying aspect mining

May 27, 2020

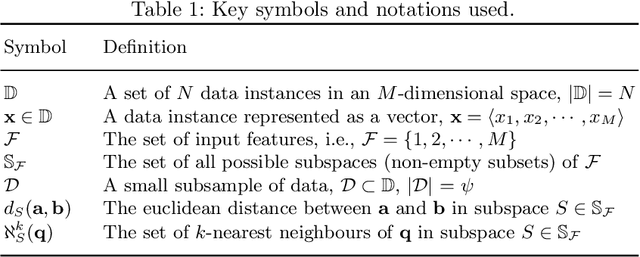

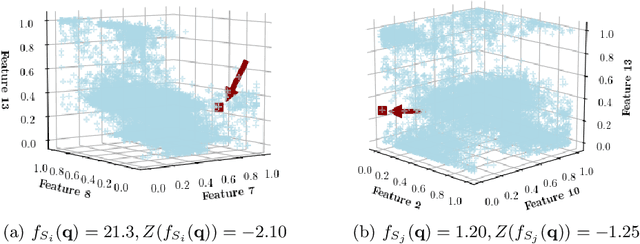

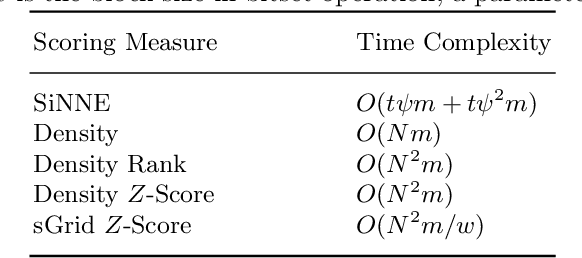

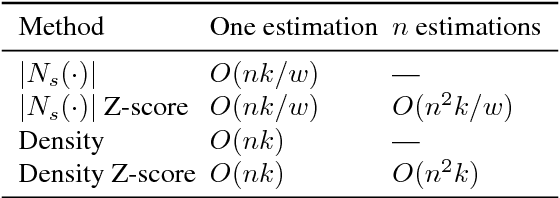

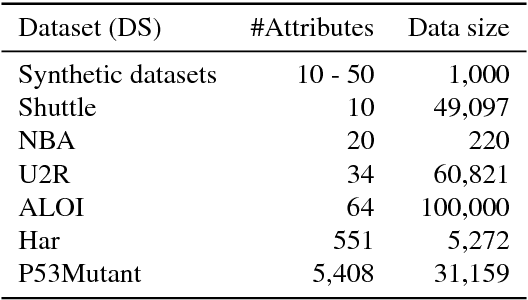

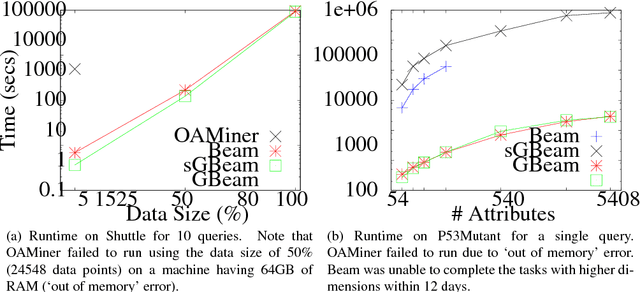

Abstract: Outlying Aspect Mining (OAM) aims to find the subspaces (a.k.a. aspects) in which a given query is an outlier with respect to a given dataset. Existing OAM algorithms use traditional distance/density-based outlier scores to rank subspaces. Because these distance/density-based scores depend on the dimensionality of subspaces, they cannot be compared directly between subspaces of different dimensionality. $Z$-score normalisation has been used to make them comparable. It requires computing the outlier scores of all instances in each subspace. This adds significant computational overhead on top of the already expensive density estimation, making OAM algorithms infeasible to run on large and/or high-dimensional datasets. We also discover that $Z$-score normalisation is inappropriate for OAM in some cases. In this paper, we introduce a new score called SiNNE, which is independent of the dimensionality of subspaces. This enables the scores in subspaces with different dimensionalities to be compared directly without any additional normalisation. Our experimental results reveal that SiNNE produces better or at least the same results as existing scores, and that it significantly improves the runtime of an existing OAM algorithm based on beam search.
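To make the cost of $Z$-score normalisation concrete, the following minimal sketch normalises a query's outlier score within one subspace. The function name `z_score_of_query` and the toy score lists are hypothetical; the point is only that the mean and standard deviation need the scores of every instance in the subspace, not just the query's.

```python
from statistics import mean, pstdev

def z_score_of_query(scores, query_index):
    """Z-score of the query's outlier score within one subspace.

    `scores` holds the raw outlier score of every instance in that subspace,
    which is why the normalisation costs one score computation per instance.
    """
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        return 0.0  # all instances score the same; the query does not stand out
    return (scores[query_index] - mu) / sigma

# Hypothetical raw scores on very different scales, as can happen when
# subspaces have different dimensionality; the query is the last instance.
scores_low_dim = [0.9, 1.0, 1.1, 1.0, 3.0]
scores_high_dim = [90.0, 100.0, 110.0, 100.0, 300.0]

z_low = z_score_of_query(scores_low_dim, 4)
z_high = z_score_of_query(scores_high_dim, 4)
print(z_low, z_high)  # comparable after normalisation despite the raw scale gap
```

A score that is independent of subspace dimensionality, as the abstract describes for SiNNE, would remove the need for the `mean` and `pstdev` passes over all instances.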

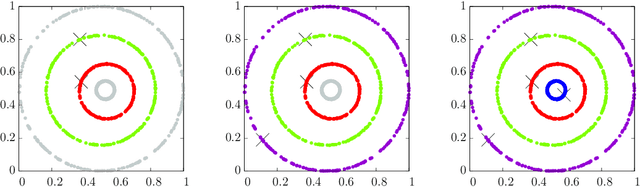

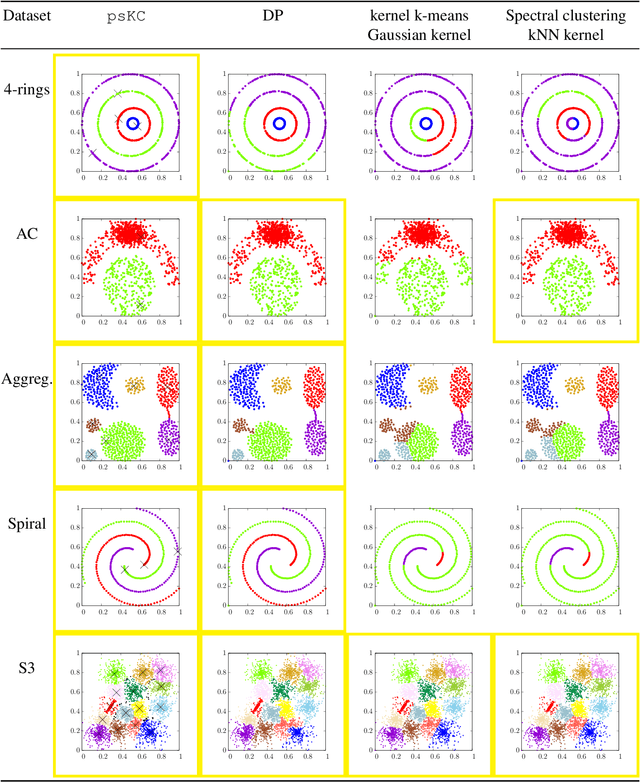

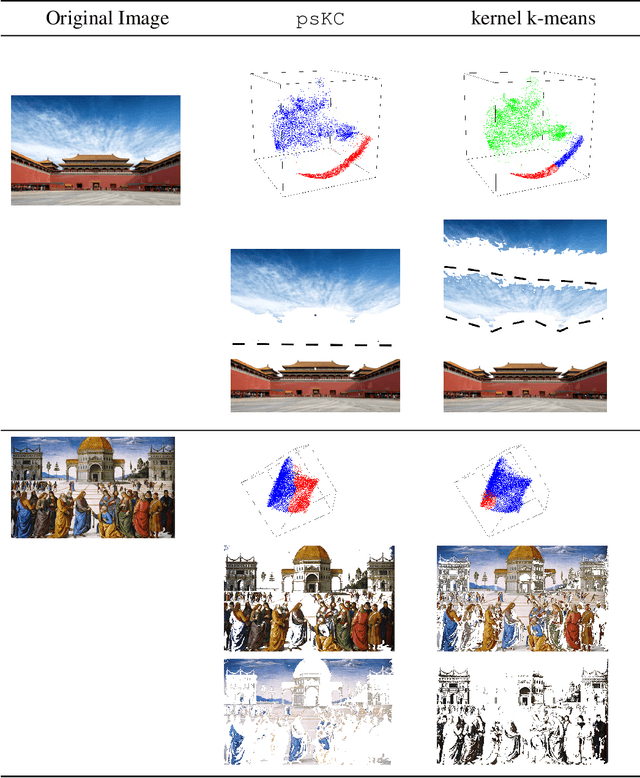

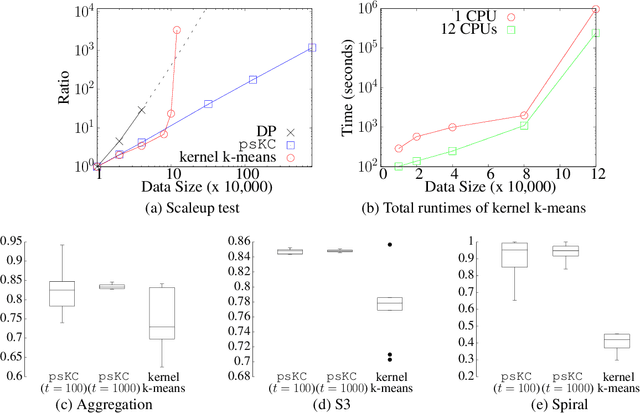

Clustering based on Point-Set Kernel

Feb 14, 2020

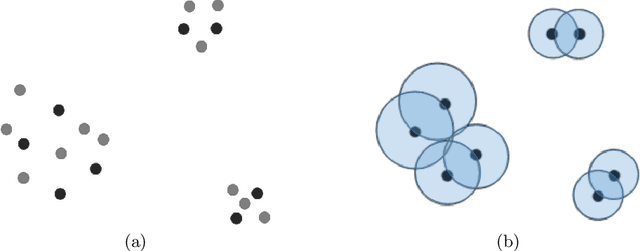

Abstract: Measuring similarity between two objects is the core operation that existing cluster analyses use to group similar objects into clusters. Cluster analyses have been applied to a number of applications, including image segmentation, social network analysis, and computational biology. This paper introduces a new similarity measure called point-set kernel which computes the similarity between an object and a sample of objects generated from an unknown distribution. The proposed clustering procedure utilizes this new measure to characterize both the typical point of every cluster and the cluster grown from the typical point. We show that the new clustering procedure is both effective and efficient such that it can deal with large scale datasets. In contrast, existing clustering algorithms are either efficient or effective; and even efficient ones have difficulty dealing with large scale datasets without special hardware. We show that the proposed algorithm is more effective and runs orders of magnitude faster than the state-of-the-art density-peak clustering and scalable kernel k-means clustering when applied to datasets of millions of data points, on commonly used computing machines.

Isolation Kernel: The X Factor in Efficient and Effective Large Scale Online Kernel Learning

Jul 02, 2019

Abstract: Large scale online kernel learning aims to build an efficient and scalable kernel-based predictive model incrementally from a sequence of potentially infinite data points. To achieve this aim, the method must be able to deal with a potentially infinite number of support vectors. The current state-of-the-art is unable to deal with even a moderate number of support vectors. This paper identifies the root cause of this limitation in current methods, i.e., the type of kernel used, which has a feature map of infinite dimensionality. With this revelation, together with our discovery that a recently introduced Isolation Kernel has a finite feature map, achieving the above aim of large scale online kernel learning becomes extremely simple: use Isolation Kernel instead of kernels having an infinite feature map. We show for the first time that online kernel learning is able to deal with a potentially infinite number of support vectors.
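Why a finite feature map helps can be sketched as follows: once every point maps to a fixed-length vector, an online learner can keep a single weight vector of that length instead of a growing set of support vectors. The sketch below is an illustration under stated assumptions, not the paper's method: it assumes a nearest-neighbour partitioning for the feature map, uses a plain perceptron as a stand-in learner, and the names `active_cells`, `OnlinePerceptron`, `draw`, `T`, and `PSI` are hypothetical.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def active_cells(x, partitionings):
    """Positions of the non-zero entries of x's feature map: one cell per partitioning."""
    psi = len(partitionings[0])
    cells = []
    for j, centres in enumerate(partitionings):
        nearest = min(range(psi), key=lambda i: sq_dist(x, centres[i]))
        cells.append(j * psi + nearest)
    return cells

class OnlinePerceptron:
    """Stand-in linear learner; the model is one weight vector of fixed length."""
    def __init__(self, dim):
        self.w = [0.0] * dim

    def score(self, cells):
        return sum(self.w[c] for c in cells)

    def learn(self, cells, label):
        # Mistake-driven update: touch only the active cells of this point.
        if label * self.score(cells) <= 0:
            for c in cells:
                self.w[c] += label

def draw(rng):
    """Hypothetical stream: two Gaussian blobs labelled -1 and +1."""
    label = rng.choice((-1, 1))
    return (rng.gauss(3 * label, 1), rng.gauss(3 * label, 1)), label

rng = random.Random(0)
T, PSI = 50, 8
warmup = [draw(rng)[0] for _ in range(200)]  # unlabelled buffer used to build partitionings
partitionings = [rng.sample(warmup, PSI) for _ in range(T)]

model = OnlinePerceptron(T * PSI)
for _ in range(1000):  # the stream could be arbitrarily long
    x, y = draw(rng)
    model.learn(active_cells(x, partitionings), y)

test_set = [draw(rng) for _ in range(200)]
correct = sum(1 for x, y in test_set if y * model.score(active_cells(x, partitionings)) > 0)
accuracy = correct / len(test_set)
print(len(model.w), accuracy)
```

However many points arrive, the model size stays at `T * PSI` weights, which is the property the abstract attributes to using a kernel with a finite feature map.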

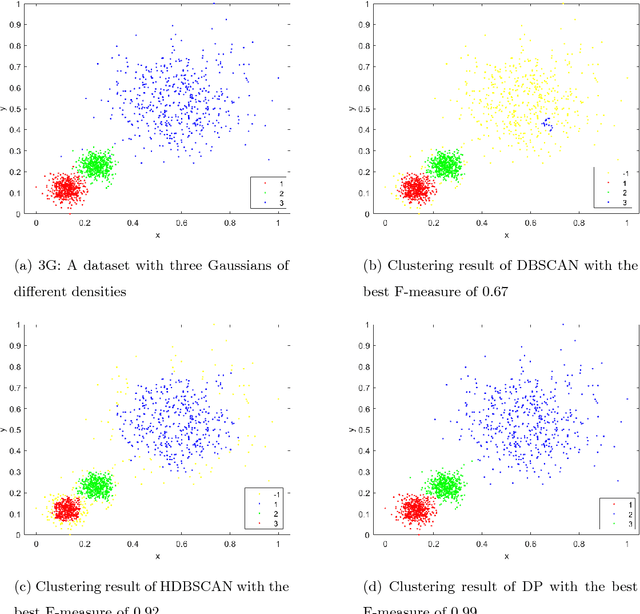

Nearest-Neighbour-Induced Isolation Similarity and its Impact on Density-Based Clustering

Jun 30, 2019

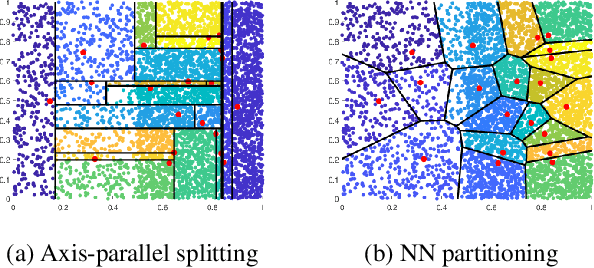

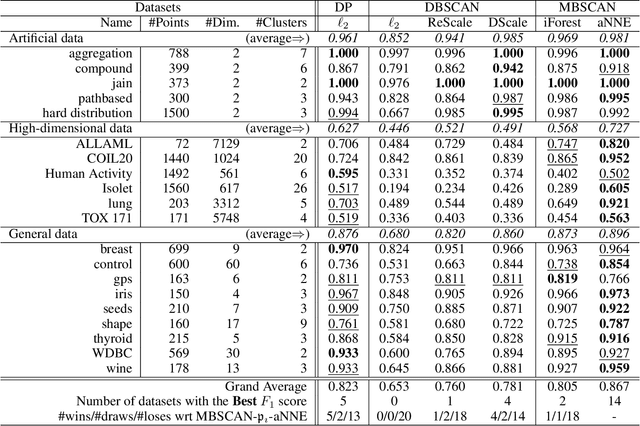

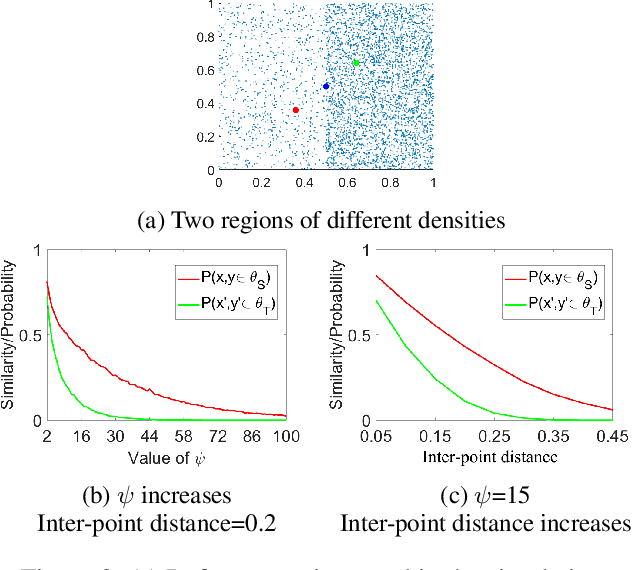

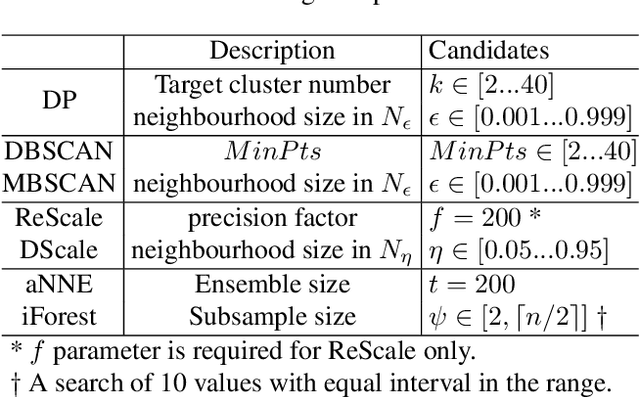

Abstract: A recent proposal of data dependent similarity called Isolation Kernel/Similarity has enabled SVM to produce better classification accuracy. We identify shortcomings of using a tree method to implement Isolation Similarity; and propose a nearest neighbour method instead. We formally prove the characteristic of Isolation Similarity with the use of the proposed method. The impact of Isolation Similarity on density-based clustering is studied here. We show for the first time that the clustering performance of the classic density-based clustering algorithm DBSCAN can be significantly uplifted to surpass that of the recent density-peak clustering algorithm DP. This is achieved by simply replacing the distance measure with the proposed nearest-neighbour-induced Isolation Similarity in DBSCAN, leaving the rest of the procedure unchanged. A new type of clusters called mass-connected clusters is formally defined. We show that DBSCAN, which detects density-connected clusters, becomes one which detects mass-connected clusters, when the distance measure is replaced with the proposed similarity. We also provide the condition under which mass-connected clusters can be detected, while density-connected clusters cannot.

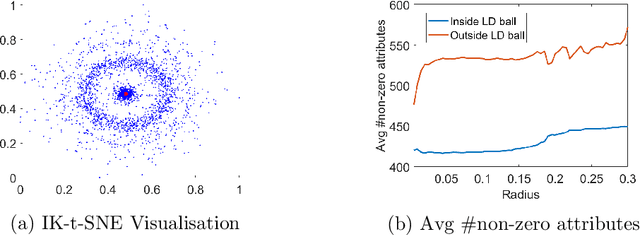

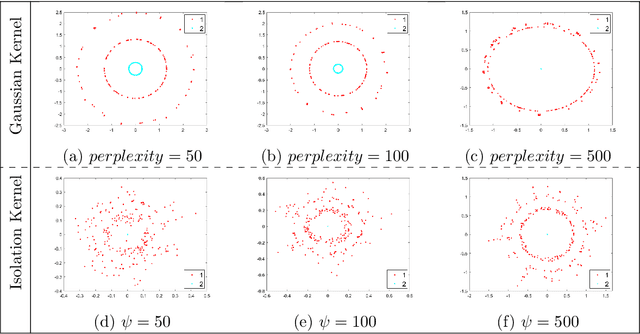

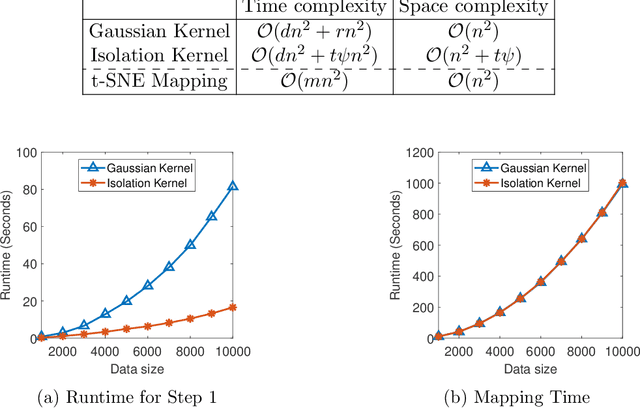

Improving Stochastic Neighbour Embedding fundamentally with a well-defined data-dependent kernel

Jun 25, 2019

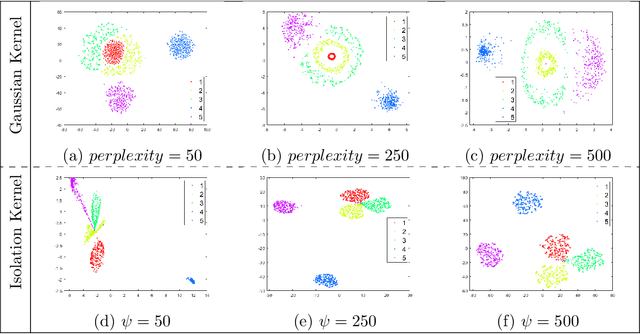

Abstract: We identify a fundamental issue in the popular Stochastic Neighbour Embedding (SNE and t-SNE), i.e., the "learned" similarity of any two points in high-dimensional space is not defined and cannot be computed. It underlies two previously unexplored issues in the algorithm which have undermined the quality of its final visualisation output and its ability to process large datasets. The issues are: (a) the reference probability in high-dimensional space is set based on entropy, which has an undefined relation with local density; and (b) the use of a data independent kernel, which leads to the need to determine n bandwidths for a dataset of n points. This paper establishes a principle to set the reference probability via a data-dependent kernel which has a well-defined kernel characteristic that is linked directly to local density. A solution based on a recent data-dependent kernel called Isolation Kernel addresses the fundamental issue as well as its two ensuing issues. As a result, it significantly improves the quality of the final visualisation output and removes one obstacle that prevents t-SNE from processing large datasets. The solution is extremely simple, i.e., simply replacing the existing data independent kernel with Isolation Kernel, leaving the rest of the t-SNE procedure unchanged.

Hierarchical clustering that takes advantage of both density-peak and density-connectivity

Oct 08, 2018

Abstract: This paper focuses on density-based clustering, particularly the Density Peak (DP) algorithm and the one based on density-connectivity, DBSCAN; and proposes a new method which takes advantage of the individual strengths of these two methods to yield a density-based hierarchical clustering algorithm. Our investigation begins with formally defining the types of clusters DP and DBSCAN are designed to detect; and then identifies the kinds of distributions in which DP and DBSCAN individually fail to detect all clusters in a dataset. These identified weaknesses inspire us to formally define a new kind of clusters and propose a new method called DC-HDP to overcome these weaknesses to identify clusters with arbitrary shapes and varied densities. In addition, the new method produces a richer clustering result in terms of hierarchy or dendrogram for a better understanding of cluster structures. Our empirical evaluation results show that DC-HDP produces the best clustering results on 14 datasets in comparison with 7 state-of-the-art clustering algorithms.

A simple efficient density estimator that enables fast systematic search

Sep 12, 2017

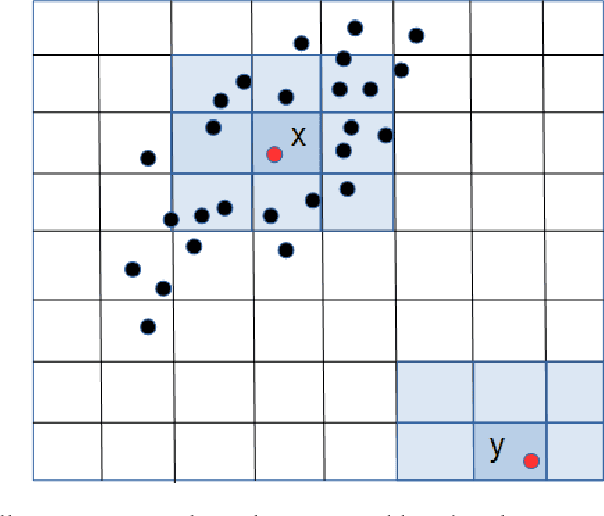

Abstract: This paper introduces a simple and efficient density estimator that enables fast systematic search. To show its advantage over the commonly used kernel density estimator, we apply it to outlying aspects mining. Outlying aspects mining discovers feature subsets (or subspaces) that describe how a query stands out from a given dataset. The task demands a systematic search of subspaces. We identify that existing outlying aspects miners are restricted to datasets with small data size and dimensions because they employ the kernel density estimator, which is computationally expensive, for subspace assessments. We show that a recent outlying aspects miner can run orders of magnitude faster by simply replacing its density estimator with the proposed density estimator, enabling it to deal with large datasets with thousands of dimensions that would otherwise be impossible.

Classification under Streaming Emerging New Classes: A Solution using Completely Random Trees

May 30, 2016

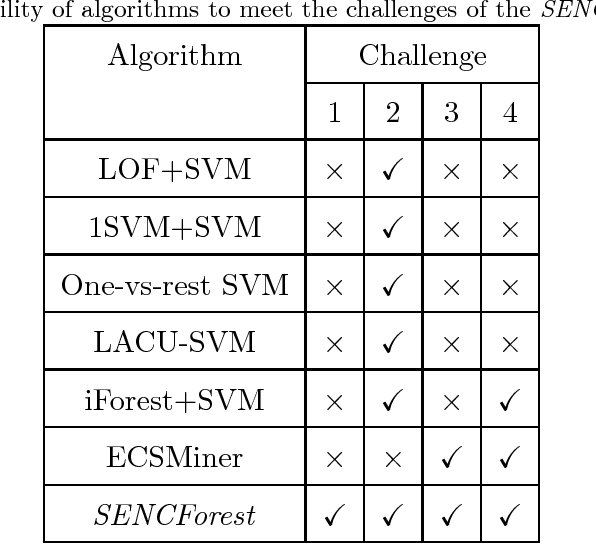

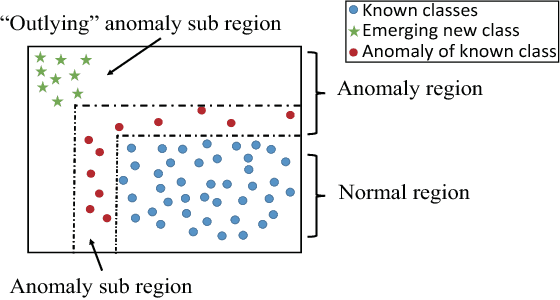

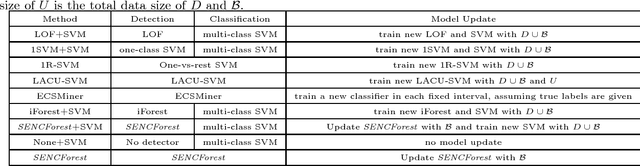

Abstract: This paper investigates an important problem in stream mining, i.e., classification under streaming emerging new classes or SENC. The common approach is to treat it as a classification problem and solve it using either a supervised learner or a semi-supervised learner. We propose an alternative approach by using unsupervised learning as the basis to solve this problem. The SENC problem can be decomposed into three sub-problems: detecting emerging new classes, classifying for known classes, and updating models to enable classification of instances of the new class and detection of more emerging new classes. The proposed method employs completely random trees which have been shown to work well in unsupervised learning and supervised learning independently in the literature. This is the first time, as far as we know, that completely random trees are used as a single common core to solve all three sub-problems: unsupervised learning, supervised learning and model update in data streams. We show that the proposed unsupervised-learning-focused method often achieves significantly better outcomes than existing classification-focused methods.
