Junyang Mo

Breast Ultrasound Tumor Generation via Mask Generator and Text-Guided Network: A Clinically Controllable Framework with Downstream Evaluation

Jul 10, 2025

Abstract: The development of robust deep learning models for breast ultrasound (BUS) image analysis is significantly constrained by the scarcity of expert-annotated data. To address this limitation, we propose a clinically controllable generative framework for synthesizing BUS images. This framework integrates clinical descriptions with structural masks to generate tumors, enabling fine-grained control over tumor characteristics such as morphology, echogenicity, and shape. Furthermore, we design a semantic-curvature mask generator, which synthesizes structurally diverse tumor masks guided by clinical priors. During inference, synthetic tumor masks serve as input to the generative framework, producing highly personalized synthetic BUS images with tumors that reflect real-world morphological diversity. Quantitative evaluations on six public BUS datasets demonstrate the significant clinical utility of our synthetic images, showing their effectiveness in enhancing downstream breast cancer diagnosis tasks. Furthermore, visual Turing tests conducted by experienced sonographers confirm the realism of the generated images, indicating the framework's potential to support broader clinical applications.

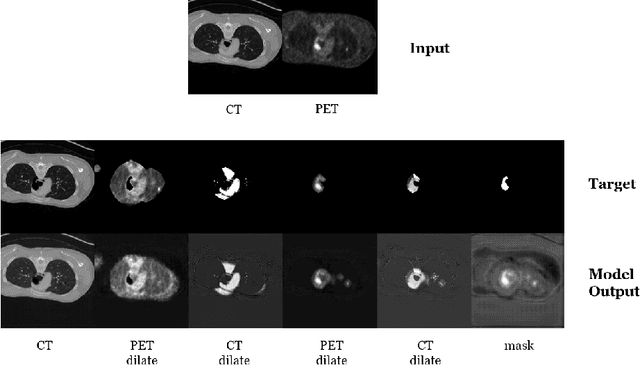

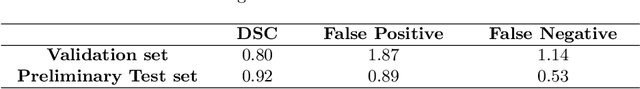

AutoPET Challenge 2022: Step-by-Step Lesion Segmentation in Whole-body FDG-PET/CT

Sep 04, 2022

Abstract: Automatic segmentation of tumor lesions is a critical initial processing step for quantitative PET/CT analysis. However, numerous tumor lesions with different shapes, sizes, and uptake intensities may be distributed in different anatomical contexts throughout the body, and there is also significant uptake in healthy organs. Therefore, building a systemic PET/CT tumor lesion segmentation model is a challenging task. In this paper, we propose a novel step-by-step 3D segmentation method to address this problem. We achieved a Dice score of 0.92, a false positive volume of 0.89, and a false negative volume of 0.53 on the preliminary test set. The code for our work is available at the following link: https://github.com/rightl/autopet.
