Jordan M. Malof

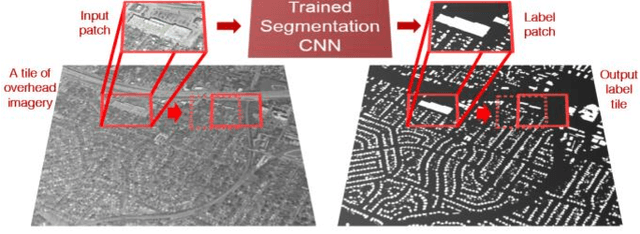

The Synthinel-1 dataset: a collection of high resolution synthetic overhead imagery for building segmentation

Jan 15, 2020

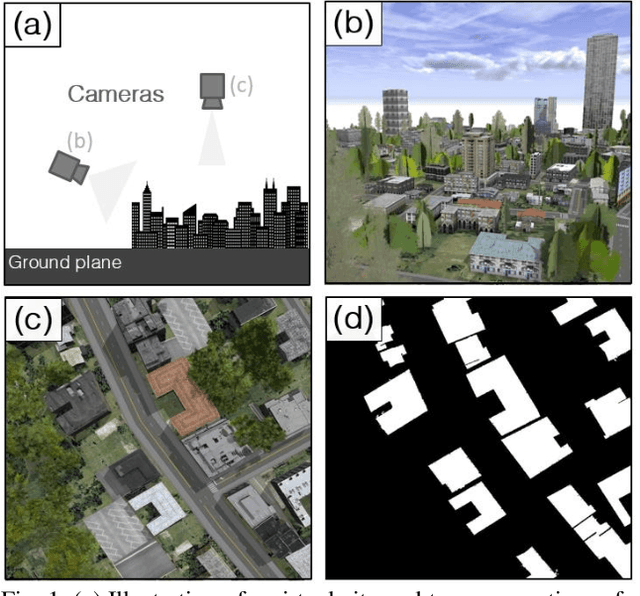

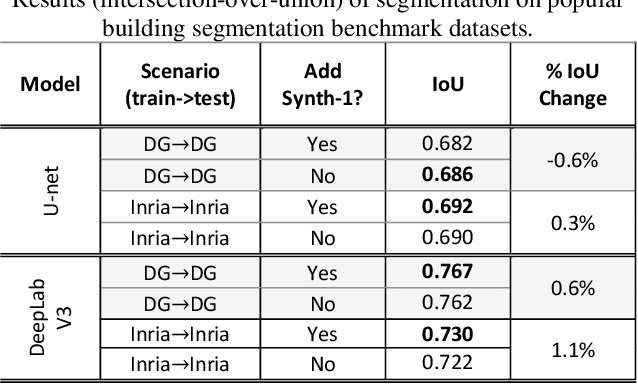

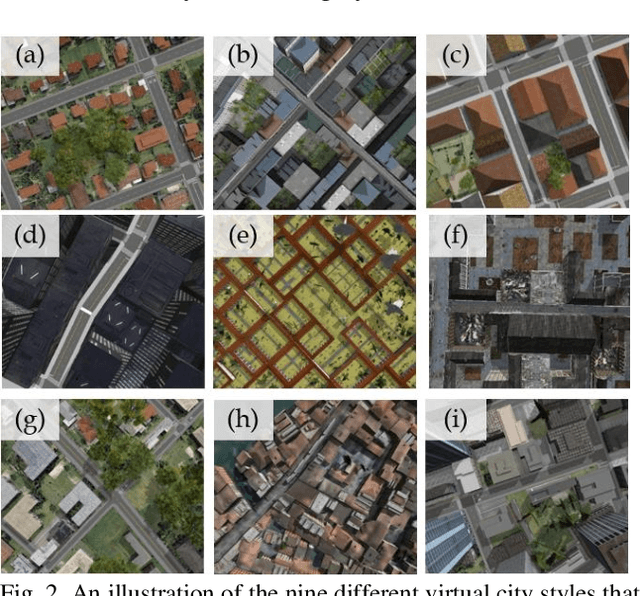

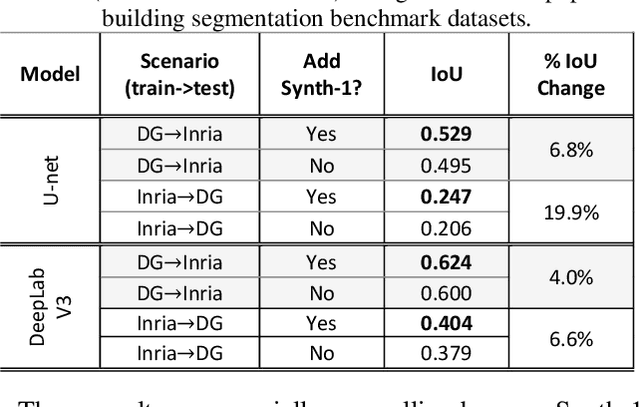

Abstract: Recently, deep learning models, namely convolutional neural networks (CNNs), have yielded impressive performance for the task of building segmentation on large overhead (e.g., satellite) imagery benchmarks. However, these benchmark datasets only capture a small fraction of the variability present in real-world overhead imagery, limiting the ability to properly train, or evaluate, models for real-world application. Unfortunately, developing a dataset that captures even a small fraction of real-world variability is typically infeasible due to the cost of imagery and of manual pixel-wise labeling of the imagery. In this work we develop an approach to rapidly and cheaply generate large and diverse virtual environments from which we can capture synthetic overhead imagery for training segmentation CNNs. Using this approach, we generate and publicly release a collection of synthetic overhead imagery, termed Synthinel-1, with full pixel-wise building labels. We use several benchmark datasets to demonstrate that Synthinel-1 is consistently beneficial when used to augment real-world training imagery, especially when CNNs are tested on novel geographic locations or conditions.

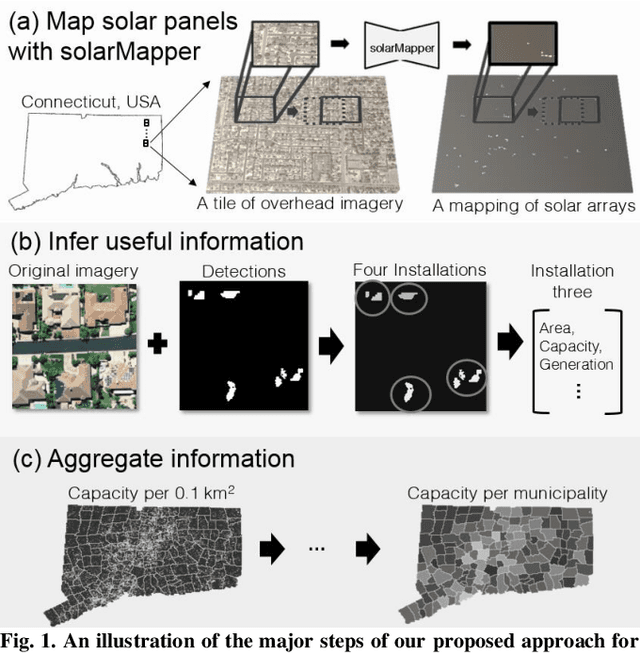

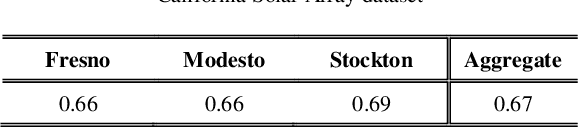

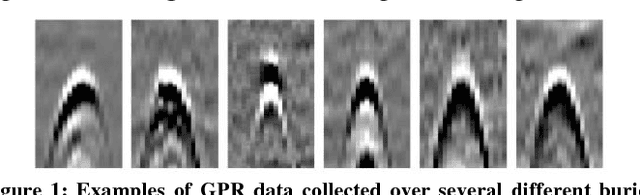

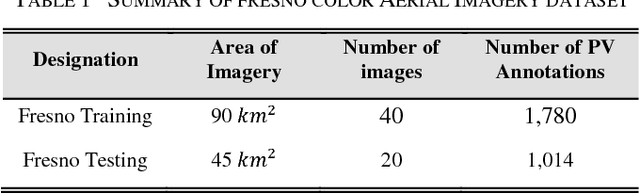

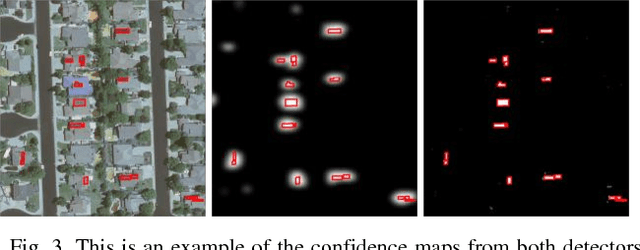

Mapping solar array location, size, and capacity using deep learning and overhead imagery

Feb 28, 2019

Abstract: The effective integration of distributed solar photovoltaic (PV) arrays into existing power grids will require access to high quality data: the location, power capacity, and energy generation of individual solar PV installations. Unfortunately, existing methods for obtaining this data are limited in their spatial resolution and completeness. We propose a general framework for accurately and cheaply mapping individual PV arrays, and their capacities, over large geographic areas. At the core of this approach is a deep learning algorithm called SolarMapper - which we make publicly available - that can automatically map PV arrays in high resolution overhead imagery. We estimate the performance of SolarMapper on a large dataset of overhead imagery across three US cities in California. We also describe a procedure for deploying SolarMapper to new geographic regions, so that it can be utilized by others. We demonstrate the effectiveness of the proposed deployment procedure by using it to map solar arrays across the entire US state of Connecticut (CT). Using these results, we demonstrate that we achieve highly accurate estimates of total installed PV capacity within each of CT's 168 municipal regions.

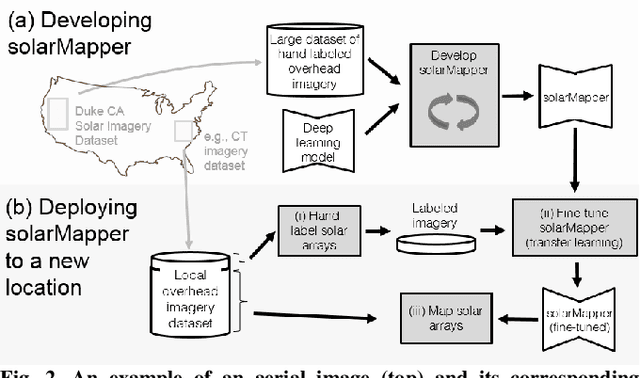

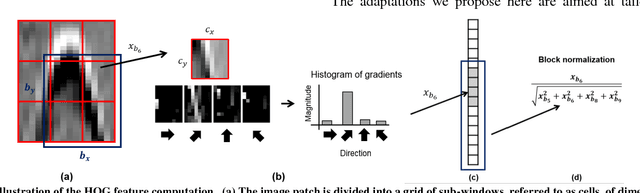

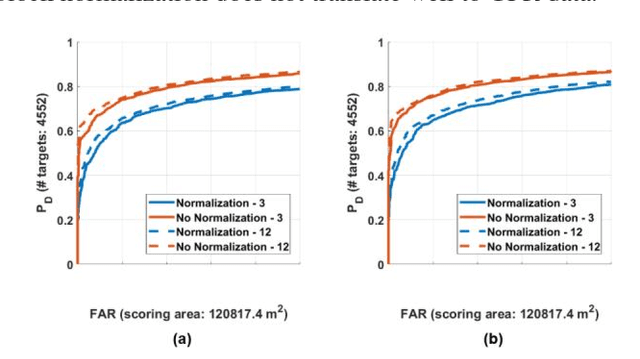

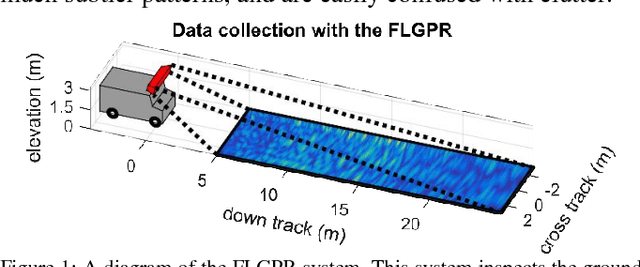

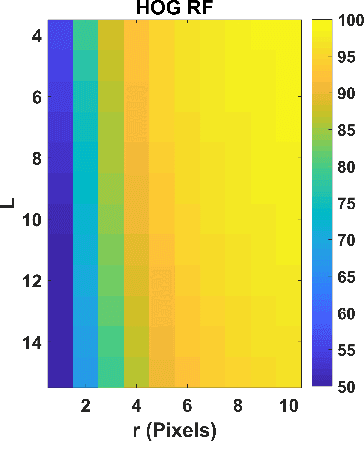

gprHOG and the popularity of Histogram of Oriented Gradients for Buried Threat Detection in Ground-Penetrating Radar

Oct 02, 2018

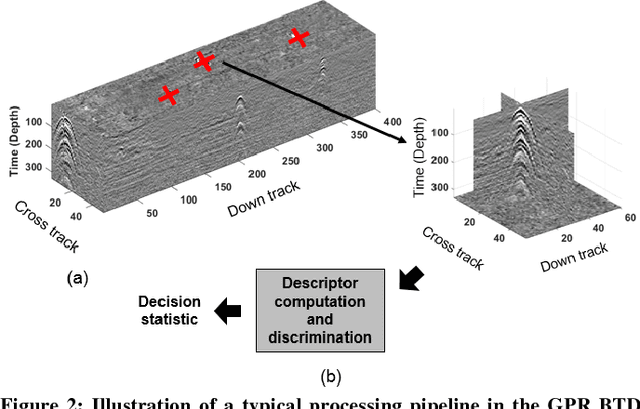

Abstract: Substantial research has been devoted to the development of algorithms that automate buried threat detection (BTD) with ground penetrating radar (GPR) data, resulting in a large number of proposed algorithms. One popular algorithm for GPR-based BTD, originally applied by Torrione et al., 2012, is the Histogram of Oriented Gradients (HOG) feature. In a recent large-scale comparison among five veteran institutions, a modified version of HOG, referred to here as "gprHOG", performed poorly compared to other modern algorithms. In this paper, we provide experimental evidence demonstrating that the modifications to HOG that comprise gprHOG result in a substantially better-performing algorithm. The results here, in conjunction with the large-scale algorithm comparison, suggest that HOG is not competitive with modern GPR-based BTD algorithms. Given HOG's popularity, these results raise some questions about many existing studies, and suggest gprHOG (and especially HOG) should be employed with caution in future studies.

A Large-Scale Multi-Institutional Evaluation of Advanced Discrimination Algorithms for Buried Threat Detection in Ground Penetrating Radar

Jun 07, 2018

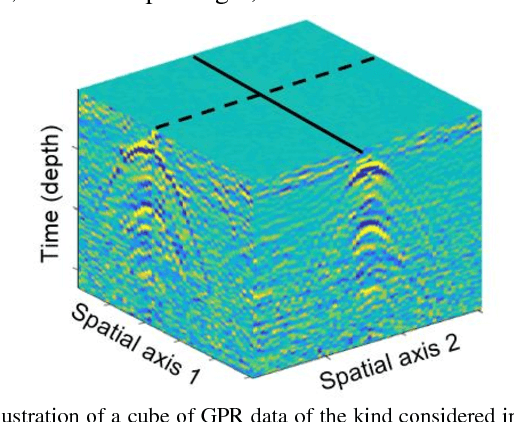

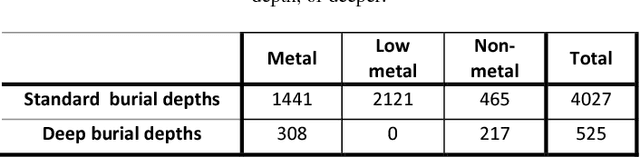

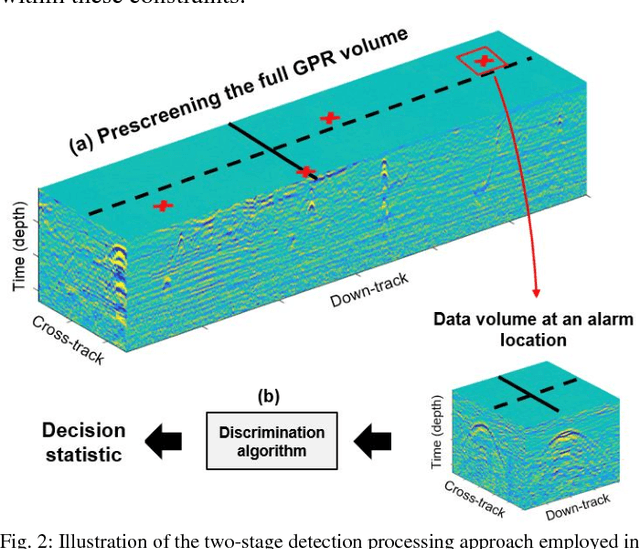

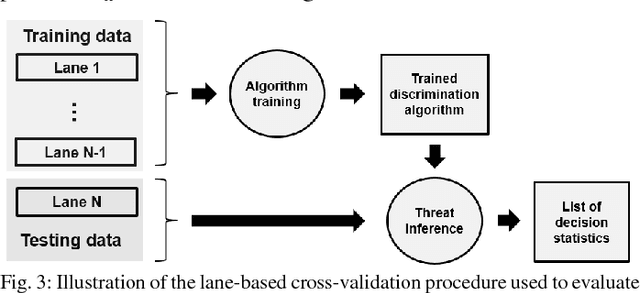

Abstract: In this paper we consider the development of algorithms for the automatic detection of buried threats using ground penetrating radar (GPR) measurements. GPR is one of the most studied and successful modalities for automatic buried threat detection (BTD), and a large variety of BTD algorithms have been proposed for it. Despite this, large-scale comparisons of GPR-based BTD algorithms are rare in the literature. In this work we report the results of a multi-institutional effort to develop advanced buried threat detection algorithms for a real-world GPR BTD system. The effort involved five institutions with substantial experience with the development of GPR-based BTD algorithms. In this paper we report the technical details of the advanced algorithms submitted by each institution, representing their latest technical advances, and many state-of-the-art GPR-based BTD algorithms. We also report the results of evaluating the algorithms from each institution on the large experimental dataset used for development. The experimental dataset comprised 120,000 m^2 of GPR data, measured by surface area, from 13 different lanes across two US test sites. The data was collected using a vehicle-mounted GPR system, the variants of which have supplied data for numerous publications. Using these results, we identify the most successful and common processing strategies among the submitted algorithms, and make recommendations for GPR-based BTD algorithm design.

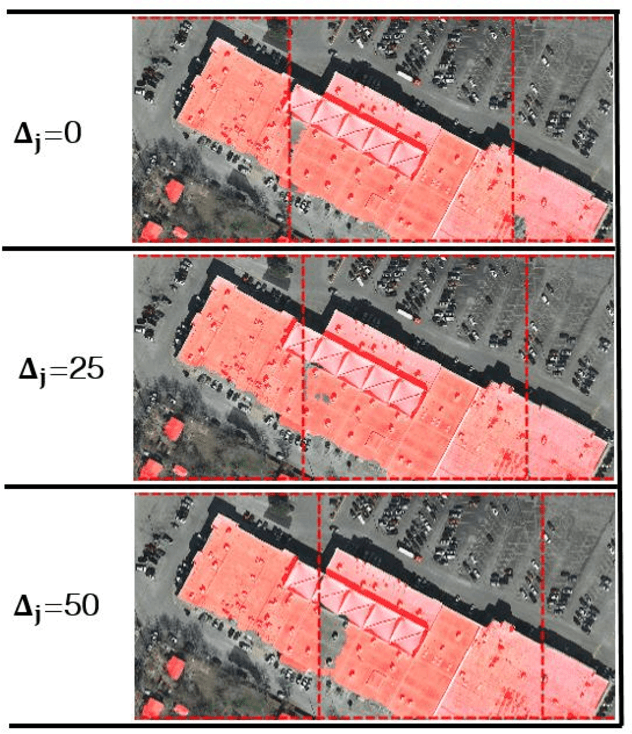

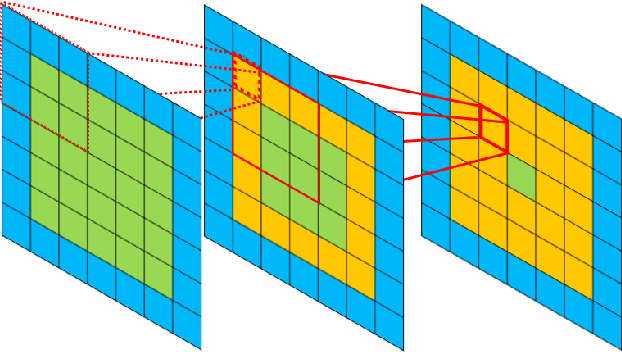

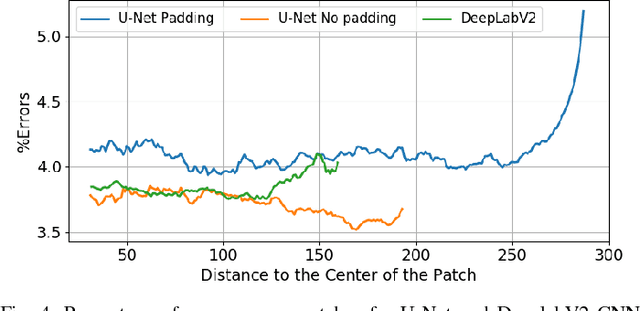

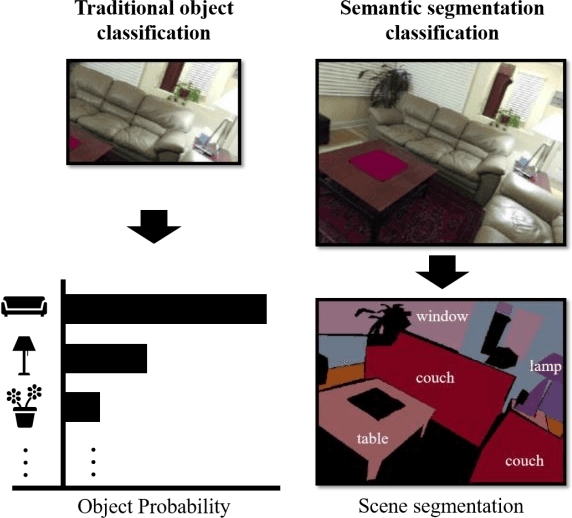

Dense labeling of large remote sensing imagery with convolutional neural networks: a simple and faster alternative to stitching output label maps

May 30, 2018

Abstract: In this work we consider the application of convolutional neural networks (CNNs) for pixel-wise labeling (a.k.a., semantic segmentation) of remote sensing imagery (e.g., aerial color or hyperspectral imagery). Remote sensing imagery is usually stored in the form of very large images, referred to as "tiles", which are too large to be segmented directly using most CNNs and their associated hardware. As a result, during label inference, smaller sub-images, called "patches", are processed individually and then "stitched" (concatenated) back together to create a tile-sized label map. This approach suffers from computational inefficiency and can result in discontinuities at output boundaries. We propose a simple alternative approach in which the input size of the CNN is dramatically increased only during label inference. This does not avoid stitching altogether, but substantially mitigates its limitations. We evaluate the performance of the proposed approach against a conventional stitching approach using two popular segmentation CNN models and two large-scale remote sensing imagery datasets. The results suggest that the proposed approach substantially reduces label inference time, while also yielding modest overall label accuracy increases. This approach contributed to our winning entry (overall performance) in the INRIA building labeling competition.
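The patch-and-stitch procedure described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: `toy_model` is a hypothetical stand-in for a segmentation CNN (it simply thresholds each pixel), `label_tile` is an illustrative helper name, and the tile and patch sizes are arbitrary. The sketch only shows how a larger inference-time patch size reduces the number of model calls and stitched seams.

```python
def toy_model(patch):
    """Hypothetical stand-in for a segmentation CNN: thresholds each pixel."""
    return [[1 if value > 0.5 else 0 for value in row] for row in patch]


def label_tile(tile, patch_size, model=toy_model):
    """Label a 2-D tile by running `model` on non-overlapping patches and
    stitching the outputs back into a tile-sized label map.

    Returns the label map and the number of model calls made.
    """
    height, width = len(tile), len(tile[0])
    labels = [[0] * width for _ in range(height)]
    calls = 0
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Cut out one patch (edge patches may be smaller than patch_size).
            patch = [row[left:left + patch_size]
                     for row in tile[top:top + patch_size]]
            output = model(patch)
            calls += 1
            # Stitch: copy the patch's labels into the matching tile region.
            for dy, out_row in enumerate(output):
                labels[top + dy][left:left + len(out_row)] = out_row
    return labels, calls


# Deterministic toy 64 x 64 "tile" with values in [0, 1).
tile = [[((x * 7 + y * 13) % 10) / 10 for x in range(64)] for y in range(64)]

small_labels, small_calls = label_tile(tile, patch_size=8)   # 64 model calls
large_labels, large_calls = label_tile(tile, patch_size=32)  # 4 model calls
```

Because the toy model labels each pixel independently, both patch sizes produce the same label map. A real CNN uses spatial context, so its outputs near patch borders can differ between neighbouring patches, which is the source of the boundary discontinuities the abstract mentions.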

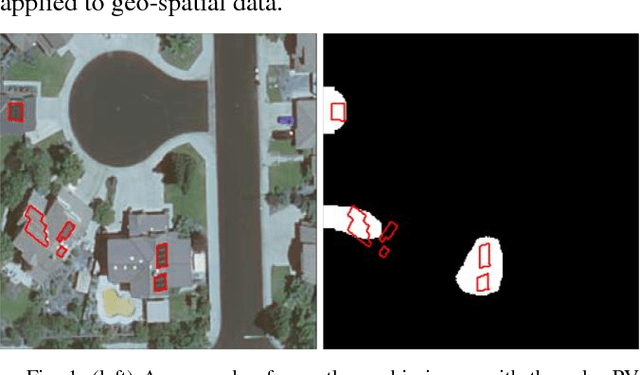

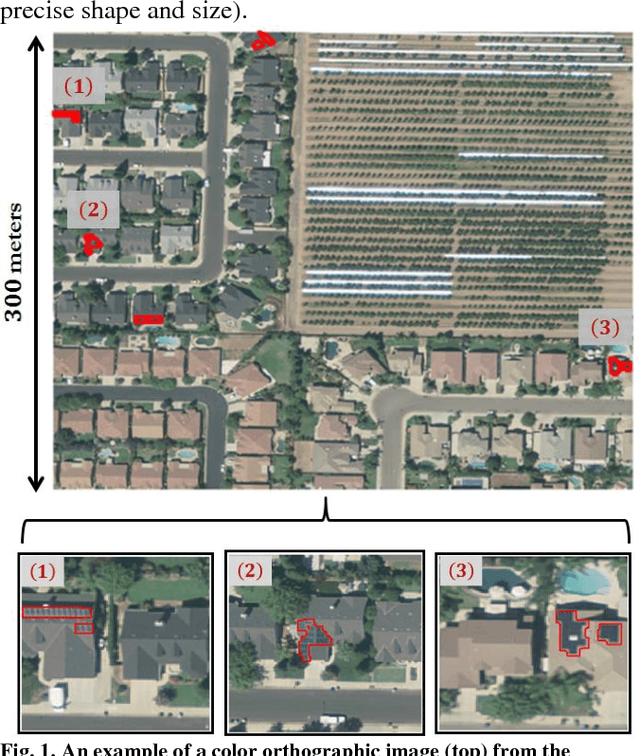

Application of a semantic segmentation convolutional neural network for accurate automatic detection and mapping of solar photovoltaic arrays in aerial imagery

Jan 11, 2018

Abstract: We consider the problem of automatically detecting small-scale solar photovoltaic arrays for behind-the-meter energy resource assessment in high resolution aerial imagery. Such algorithms offer a faster and more cost-effective solution to collecting information on distributed solar photovoltaic (PV) arrays, such as their location, capacity, and generated energy. The surface area of PV arrays, a characteristic which can be estimated from aerial imagery, provides an important proxy for array capacity and energy generation. In this work, we employ a state-of-the-art convolutional neural network architecture, called SegNet (Badrinarayanan et al., 2015), to semantically segment (or map) PV arrays in aerial imagery. This builds on previous work focused on identifying the locations of PV arrays, as opposed to their specific shapes and sizes. We measure the ability of our SegNet implementation to estimate the surface area of PV arrays on a large, publicly available dataset that has been employed in several previous studies. The results indicate that the SegNet model yields substantial performance improvements with respect to estimating shape and size as compared to a recently proposed convolutional neural network PV detection algorithm.
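As a rough illustration of how surface area can be read off a segmentation output, the sketch below counts the pixels labelled as PV in a binary mask and multiplies by the ground area covered by one pixel. This is an assumption about the general idea, not the paper's procedure: the function name, the toy mask, and the 0.3 m ground sample distance are all hypothetical.

```python
def estimate_array_area_m2(mask, ground_sample_distance_m):
    """Estimate surface area from a binary mask (1 = PV pixel, 0 = background).

    Each pixel covers ground_sample_distance_m ** 2 square metres, so the
    area is the count of PV pixels times the area of one pixel.
    """
    pv_pixel_count = sum(sum(row) for row in mask)
    return pv_pixel_count * ground_sample_distance_m ** 2


# Hypothetical 3 x 4 mask containing a 2 x 2 block of PV pixels.
mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

# Assumed image resolution of 0.3 m per pixel (illustrative value only).
area_m2 = estimate_array_area_m2(mask, ground_sample_distance_m=0.3)
```

With four PV pixels at 0.3 m per pixel, the estimate is about 0.36 m^2. Converting area to capacity would need a further, installation-specific factor that is not given here.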

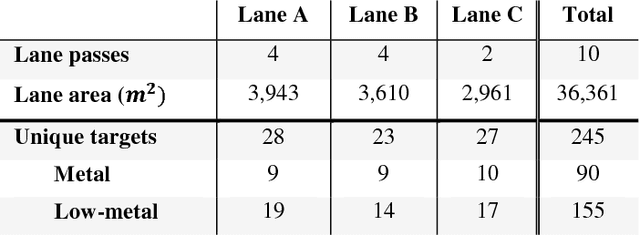

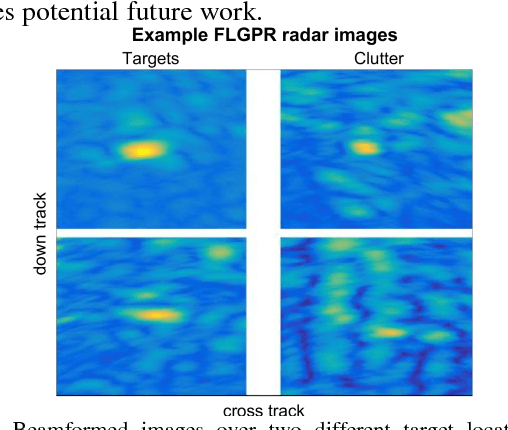

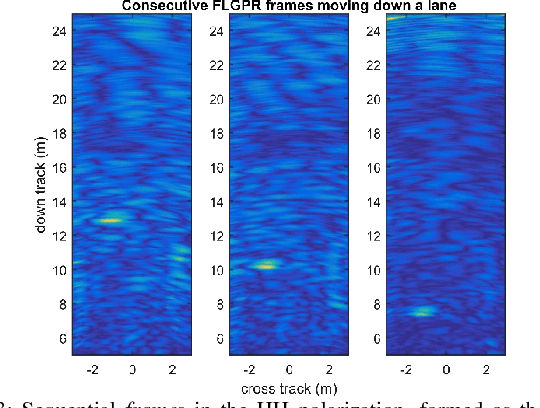

A large comparison of feature-based approaches for buried target classification in forward-looking ground-penetrating radar

Feb 09, 2017

Abstract: Forward-looking ground-penetrating radar (FLGPR) has recently been investigated as a remote sensing modality for buried target detection (e.g., landmines). In this context, raw FLGPR data is beamformed into images and then computerized algorithms are applied to automatically detect subsurface buried targets. Most existing algorithms are supervised, meaning they are trained to discriminate between labeled target and non-target imagery, usually based on features extracted from the imagery. A large number of features have been proposed for this purpose; however, thus far it is unclear which are the most effective. The first goal of this work is to provide a comprehensive comparison of detection performance using existing features on a large collection of FLGPR data. Fusion of the decisions resulting from processing each feature is also considered. The second goal of this work is to investigate two modern feature learning approaches from the object recognition literature: the bag-of-visual-words and the Fisher vector for FLGPR processing. The results indicate that the new feature learning approaches outperform existing methods. Results also show that fusion between existing features and new features yields little additional performance improvement.

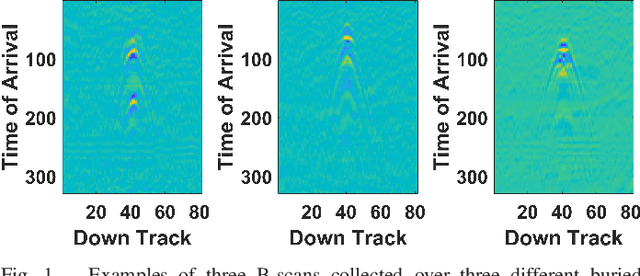

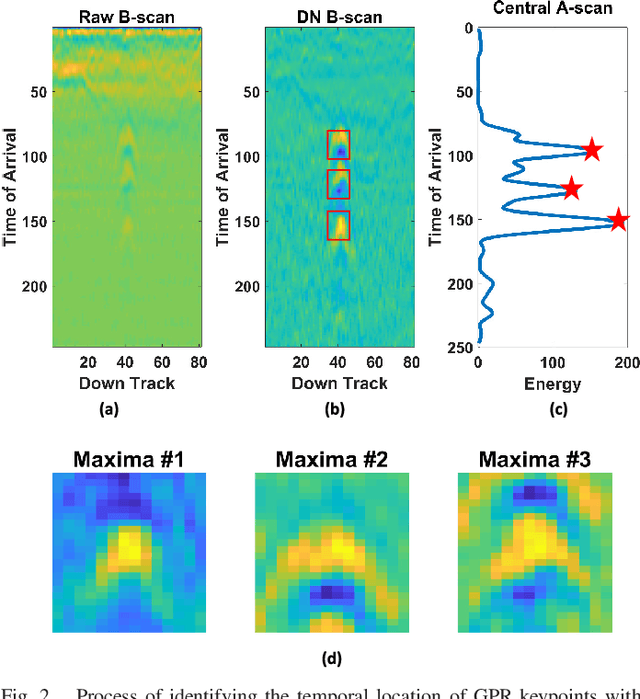

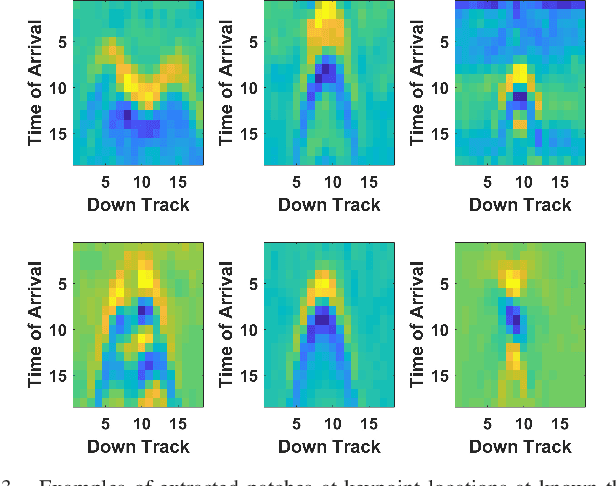

On Choosing Training and Testing Data for Supervised Algorithms in Ground Penetrating Radar Data for Buried Threat Detection

Dec 11, 2016

Abstract: Ground penetrating radar (GPR) is one of the most popular and successful sensing modalities that has been investigated for landmine and subsurface threat detection. Many of the detection algorithms applied to this task are supervised and therefore require labeled examples of target and non-target data for training. Training data most often consists of 2-dimensional images (or patches) of GPR data, from which features are extracted, and provided to the classifier during training and testing. Identifying desirable training and testing locations to extract patches, which we term "keypoints", is well established in the literature. In contrast, however, a large variety of strategies have been proposed regarding keypoint utilization (e.g., how many of the identified keypoints should be used at target, or non-target, locations). Given the variety of keypoint utilization strategies that are available, it is very unclear (i) which strategies are best, or (ii) whether the choice of strategy has a large impact on classifier performance. We address these questions by presenting a taxonomy of existing utilization strategies, and then evaluating their effectiveness on a large dataset using many different classifiers and features. We analyze the results and propose a new strategy, called PatchSelect, which outperforms other strategies across all experiments.

Automatic Detection of Solar Photovoltaic Arrays in High Resolution Aerial Imagery

Jul 20, 2016

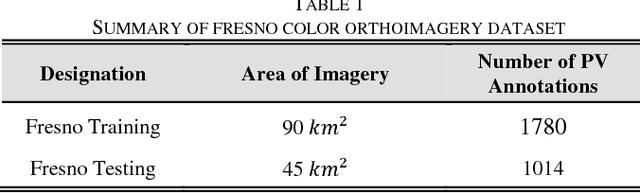

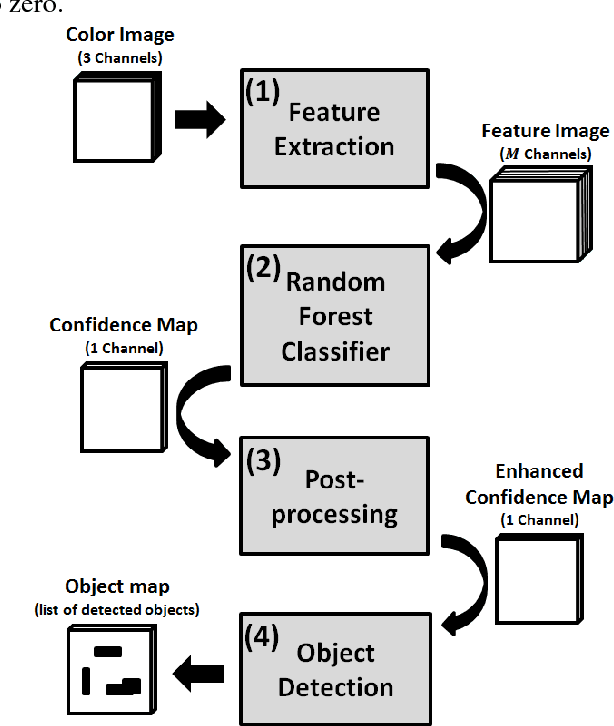

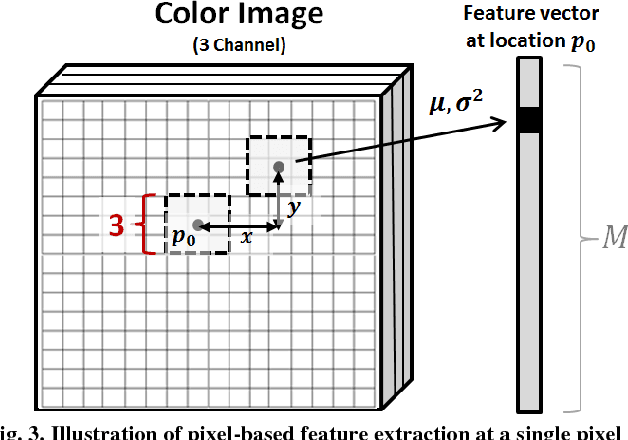

Abstract: The quantity of small-scale solar photovoltaic (PV) arrays in the United States has grown rapidly in recent years. As a result, there is substantial interest in high quality information about the quantity, power capacity, and energy generated by such arrays, including at a high spatial resolution (e.g., counties, cities, or even smaller regions). Unfortunately, existing methods for obtaining this information, such as surveys and utility interconnection filings, are limited in their completeness and spatial resolution. This work presents a computer algorithm that automatically detects PV panels using very high resolution color satellite imagery. The approach potentially offers a fast, scalable method for obtaining accurate information on PV array location and size, and at much higher spatial resolutions than are currently available. The method is validated using a very large (135 km^2) collection of publicly available [1] aerial imagery, with over 2,700 human-annotated PV array locations. The results demonstrate the algorithm is highly effective on a per-pixel basis. It is likewise effective at object-level PV array detection, but with significant potential for improvement in estimating the precise shape/size of the PV arrays. These results are the first of their kind for the detection of solar PV in aerial imagery, demonstrating the feasibility of the approach and establishing a baseline performance for future investigations.
