Joohyung Kim

CHILD (Controller for Humanoid Imitation and Live Demonstration): a Whole-Body Humanoid Teleoperation System

Jul 31, 2025

Abstract: Recent advances in teleoperation have demonstrated robots performing complex manipulation tasks. However, existing work rarely supports whole-body joint-level teleoperation for humanoid robots, limiting the diversity of tasks that can be accomplished. This work presents Controller for Humanoid Imitation and Live Demonstration (CHILD), a compact reconfigurable teleoperation system that enables joint-level control over humanoid robots. CHILD fits within a standard baby carrier, giving the operator control over all four limbs, and supports both direct joint mapping for full-body control and loco-manipulation. Adaptive force feedback is incorporated to enhance operator experience and prevent unsafe joint movements. We validate the capabilities of this system by conducting loco-manipulation and full-body control examples on a humanoid robot and multiple dual-arm systems. Lastly, we open-source the hardware design to promote accessibility and reproducibility. Additional details and open-source information are available at our project website: https://uiuckimlab.github.io/CHILD-pages.

PAPRAS: Plug-And-Play Robotic Arm System

Feb 19, 2023

Abstract: This paper presents a novel robotic arm system, named PAPRAS (Plug-And-Play Robotic Arm System). PAPRAS consists of one or more portable robotic arms, docking mounts, and a software architecture including a control system. The dimensions and configuration of PAPRAS are determined by analyzing the target task spaces at home. PAPRAS's arm is light (less than 6 kg) with an optimized 3D-printed structure, and it has a high payload (3 kg) for a human-arm-sized manipulator. A locking mechanism is embedded in the structure for better portability, and the 3D-printed docking mount can be installed easily. PAPRAS's software architecture is developed on an open-source framework and optimized for low-latency multiagent-based distributed manipulator control. A process to create new demonstrations is presented to show PAPRAS's ease of use and efficiency. Simulations and hardware experiments are presented in various demonstrations, including sink-to-dishwasher manipulation, coffee making, mobile manipulation on a quadruped, and a suit-up demo, to validate the hardware and software design.

Self-Supervised Motion Retargeting with Safety Guarantee

Mar 11, 2021

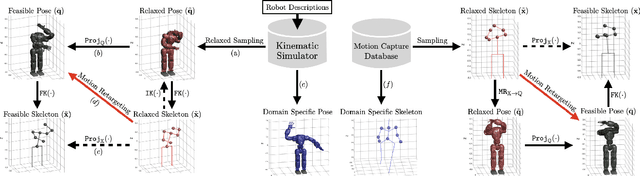

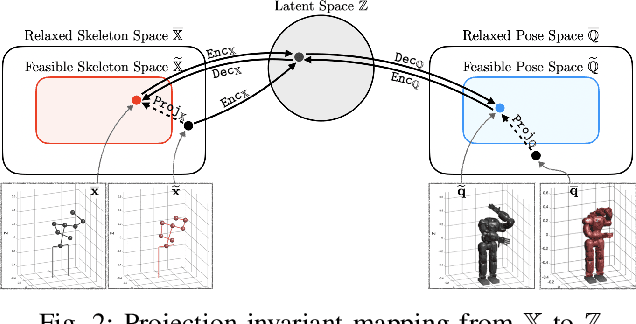

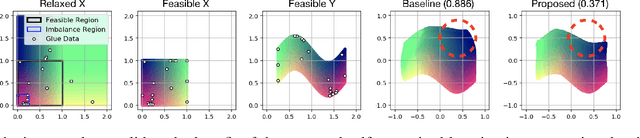

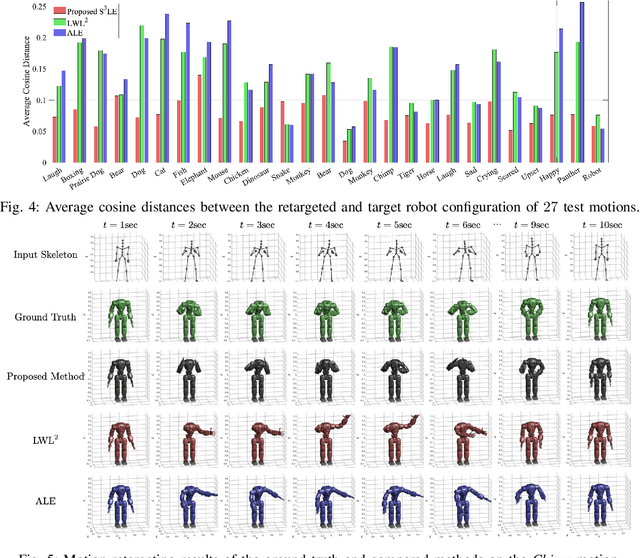

Abstract: In this paper, we present self-supervised shared latent embedding (S3LE), a data-driven motion retargeting method that enables the generation of natural motions in humanoid robots from motion capture data or RGB videos. While it requires paired data consisting of human poses and their corresponding robot configurations, it significantly alleviates the need for time-consuming data collection via novel paired-data generation processes. Our self-supervised learning procedure consists of two steps: automatically generating paired data to bootstrap the motion retargeting, and learning a projection-invariant mapping to handle the different expressivity of humans and humanoid robots. Furthermore, our method guarantees that the generated robot pose is collision-free and satisfies position limits by utilizing nonparametric regression in the shared latent space. We demonstrate that our method can generate expressive robotic motions from both the CMU motion capture database and YouTube videos.
