Jonathan Pan

Conversational Context Classification: A Representation Engineering Approach

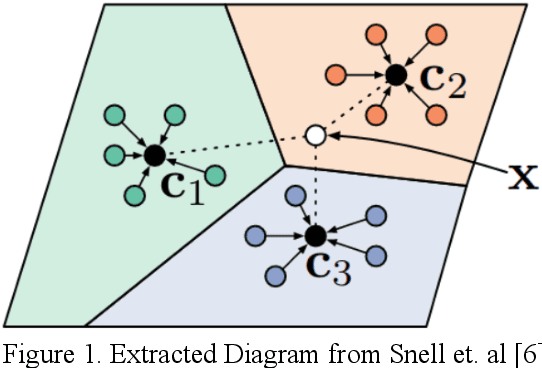

Jan 18, 2026

Abstract:The increasing prevalence of Large Language Models (LLMs) demands effective safeguards for their operation, particularly concerning their tendency to generate out-of-context responses. A key challenge is accurately detecting when LLMs stray from expected conversational norms, manifesting as topic shifts, factual inaccuracies, or outright hallucinations. Traditional anomaly detection methods are difficult to apply directly to contextual semantics. This paper outlines our experiment in exploring the use of Representation Engineering (RepE) and One-Class Support Vector Machine (OCSVM) to identify subspaces within the internal states of LLMs that represent a specific context. By training OCSVM on in-context examples, we establish a robust boundary within the LLM's hidden state latent space. We evaluate our study with two open-source LLMs, Llama and Qwen models, in a specific contextual domain. Our approach entailed identifying the optimal layers within the LLM's internal state subspaces that strongly associate with the context of interest. Our evaluation showed promising results in identifying the subspace for a specific context. Aside from being useful in detecting in-context or out-of-context conversation threads, this research work contributes to the study of better interpreting LLMs.
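The idea of fitting a one-class boundary on in-context examples can be illustrated with a minimal sketch. It assumes scikit-learn's `OneClassSVM` and uses synthetic 4-dimensional Gaussian vectors as a stand-in for LLM hidden states; the dimensionality, the `nu` value, and the data are illustrative assumptions and do not come from the paper.

```python
import numpy as np
from sklearn.svm import OneClassSVM

# Synthetic stand-ins for hidden-state vectors (assumption: real use would
# extract activations from a chosen LLM layer instead).
rng = np.random.default_rng(0)
DIM = 4  # toy dimensionality, far smaller than a real hidden state

in_context_train = rng.normal(0.0, 1.0, size=(400, DIM))
in_context_test = rng.normal(0.0, 1.0, size=(100, DIM))
out_of_context = rng.normal(4.0, 1.0, size=(100, DIM))  # shifted cluster

# Fit the boundary on in-context examples only.
# nu upper-bounds the fraction of training points treated as outliers.
detector = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05)
detector.fit(in_context_train)

# predict() returns +1 for points inside the boundary and -1 for outliers.
in_accept_rate = float(np.mean(detector.predict(in_context_test) == 1))
out_reject_rate = float(np.mean(detector.predict(out_of_context) == -1))

print(f"in-context accepted:    {in_accept_rate:.2f}")
print(f"out-of-context rejected: {out_reject_rate:.2f}")
```

In a real setting the same fit would be repeated per layer, and the layer whose boundary best separates held-out in-context from out-of-context examples would be kept.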

Automated Post-Incident Policy Gap Analysis via Threat-Informed Evidence Mapping using Large Language Models

Jan 04, 2026

Abstract:Cybersecurity post-incident reviews are essential for identifying control failures and improving organisational resilience, yet they remain labour-intensive, time-consuming, and heavily reliant on expert judgment. This paper investigates whether Large Language Models (LLMs) can augment post-incident review workflows by autonomously analysing system evidence and identifying security policy gaps. We present a threat-informed, agentic framework that ingests log data, maps observed behaviours to the MITRE ATT&CK framework, and evaluates organisational security policies for adequacy and compliance. Using a simulated brute-force attack scenario against a Windows OpenSSH service (MITRE ATT&CK T1110), the system leverages GPT-4o for reasoning, LangGraph for multi-agent workflow orchestration, and LlamaIndex for traceable policy retrieval. Experimental results indicate that the LLM-based pipeline can interpret log-derived evidence, identify insufficient or missing policy controls, and generate actionable remediation recommendations with explicit evidence-to-policy traceability. Unlike prior work that treats log analysis and policy validation as isolated tasks, this study integrates both into a unified end-to-end proof-of-concept post-incident review framework. The findings suggest that LLM-assisted analysis has the potential to improve the efficiency, consistency, and auditability of post-incident evaluations, while highlighting the continued need for human oversight in high-stakes cybersecurity decision-making.

Automating Security Audit Using Large Language Model based Agent: An Exploration Experiment

May 15, 2025

Abstract:In the current rapidly changing digital environment, businesses are under constant pressure to ensure that their systems are secured. Security audits help to maintain a strong security posture by ensuring that policies are in place, controls are implemented, and gaps are identified for cybersecurity risk mitigation. However, audits are usually manual, requiring much time and cost. This paper looks at the possibility of developing a framework to leverage Large Language Models (LLMs) as an autonomous agent to execute part of the security audit, namely the field audit of password policy compliance for the Windows operating system. In an exploration experiment using GPT-4 with Langchain, the agent executed the audit tasks by accurately flagging password policy violations and appeared to be more efficient than traditional manual audits. Despite its potential limitations in operational consistency in complex and dynamic environments, the framework suggests possibilities to extend further to real-time threat monitoring and compliance checks.

Probing Latent Subspaces in LLM for AI Security: Identifying and Manipulating Adversarial States

Mar 12, 2025

Abstract:Large Language Models (LLMs) have demonstrated remarkable capabilities across various tasks, yet they remain vulnerable to adversarial manipulations such as jailbreaking via prompt injection attacks. These attacks bypass safety mechanisms to generate restricted or harmful content. In this study, we investigated the underlying latent subspaces of safe and jailbroken states by extracting hidden activations from an LLM. Inspired by attractor dynamics in neuroscience, we hypothesized that LLM activations settle into semi-stable states that can be identified and perturbed to induce state transitions. Using dimensionality reduction techniques, we projected activations from safe and jailbroken responses to reveal latent subspaces in lower-dimensional spaces. We then derived a perturbation vector that, when applied to safe representations, shifted the model towards a jailbreak state. Our results demonstrate that this causal intervention results in statistically significant jailbreak responses in a subset of prompts. Next, we probed how these perturbations propagate through the model's layers, testing whether the induced state change remains localized or cascades throughout the network. Our findings indicate that targeted perturbations induced distinct shifts in activations and model responses. Our approach paves the way for potential proactive defenses, shifting from traditional guardrail-based methods to preemptive, model-agnostic techniques that neutralize adversarial states at the representation level.
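One common way to obtain a perturbation vector between two activation clusters is the difference of their means. The sketch below shows that construction on hand-made 2-dimensional toy vectors. The abstract does not state how the paper derives its vector, so the difference-of-means choice, the function names, and the data are all assumptions made for illustration.

```python
from statistics import fmean

def mean_vector(rows):
    """Component-wise mean of a list of equal-length vectors."""
    return [fmean(col) for col in zip(*rows)]

def perturbation_vector(safe, jailbroken):
    """Direction from the safe centroid to the jailbroken centroid
    (difference of means; an assumed stand-in, not the paper's method)."""
    mu_safe, mu_jb = mean_vector(safe), mean_vector(jailbroken)
    return [j - s for s, j in zip(mu_safe, mu_jb)]

def apply_perturbation(activation, direction, scale=1.0):
    """Shift one activation vector along the direction."""
    return [a + scale * d for a, d in zip(activation, direction)]

def nearest_state(activation, safe, jailbroken):
    """Label an activation by its closer centroid (squared distance)."""
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))
    d_safe = sq_dist(activation, mean_vector(safe))
    d_jb = sq_dist(activation, mean_vector(jailbroken))
    return "safe" if d_safe <= d_jb else "jailbroken"

# Toy 2-D "activations" (hypothetical values, not real model states).
safe_acts = [[0.0, 0.1], [0.2, -0.1], [-0.2, 0.0]]
jailbroken_acts = [[2.0, 1.0], [2.2, 0.8], [1.8, 1.2]]

direction = perturbation_vector(safe_acts, jailbroken_acts)
shifted = apply_perturbation(safe_acts[0], direction)

print(nearest_state(safe_acts[0], safe_acts, jailbroken_acts))
print(nearest_state(shifted, safe_acts, jailbroken_acts))
```

The `scale` parameter controls how far the activation is pushed. Negating the direction gives the reverse shift, which is the intuition behind neutralizing an adversarial state at the representation level.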

Audio Simulation for Sound Source Localization in Virtual Evironment

Apr 02, 2024

Abstract:Non-line-of-sight localization in signal-deprived environments is a challenging yet pertinent problem. Acoustic methods in such predominantly indoor scenarios encounter difficulty due to the reverberant nature of these environments. In this study, we aim to localize sound sources to specific locations within a virtual environment by leveraging physically grounded sound propagation simulations and machine learning methods. This process attempts to overcome the issue of data insufficiency when localizing sound sources to their location of occurrence, especially in post-event localization. We achieve an F1-score of 0.786 ± 0.0136 using an audio transformer spectrogram approach.

Enhancing Reasoning Capacity of SLM using Cognitive Enhancement

Apr 01, 2024

Abstract:Large Language Models (LLMs) have been applied to automate cyber security activities and processes including cyber investigation and digital forensics. However, the use of such models for cyber investigation and digital forensics should address accountability and security considerations. Accountability ensures models have the means to provide explainable reasoning and outcomes. This information can be extracted through explicit prompt requests. For security considerations, it is crucial to address privacy and confidentiality of the involved data during data processing as well. One approach to deal with this consideration is to have the data processed locally using a local instance of the model. Due to limitations of locally available resources, namely memory and GPU capacities, a Smaller Large Language Model (SLM) will typically be used. These SLMs have significantly fewer parameters compared to the LLMs. However, such size reductions come with a notable performance reduction, especially when the models are tasked to provide reasoning explanations. In this paper, we aim to mitigate this performance reduction through the integration of cognitive strategies that humans use for problem-solving. We term this cognitive enhancement through prompts. Our experiments showed significant performance gains for the SLMs when such enhancements were applied. We believe that our exploration study paves the way for further investigation into the use of cognitive enhancement to optimize SLMs for cyber security applications.

A Review on the effectiveness of Dimensional Reduction with Computational Forensics: An Application on Malware Analysis

Jan 15, 2023

Abstract:The Android operating system is pervasively adopted as the operating system platform of choice for smart devices. However, the strong adoption has also resulted in exponential growth in the number of Android-based malicious software, or malware. To deal with such cyber threats as part of cyber investigation and digital forensics, computational techniques in the form of machine learning algorithms are applied for malware identification, detection and forensic analysis. However, such Computational Forensics modelling techniques are constrained by the volume, velocity, variety and veracity of the malware landscape. This in turn affects their identification and detection effectiveness, which raises the question of how sustainable such a solution approach is. One approach to optimise effectiveness is to apply dimensional reduction techniques like Principal Component Analysis with the intent to enhance algorithmic performance. In this paper, we evaluate the effectiveness of applying Principal Component Analysis to the Computational Forensics task of detecting Android-based malware. We tested our research hypothesis on three different datasets with different machine learning algorithms. Our results showed that the dimensionally reduced datasets led to a measure of degradation in accuracy.

Active Meta-Learner for Log Analysis

Mar 18, 2022

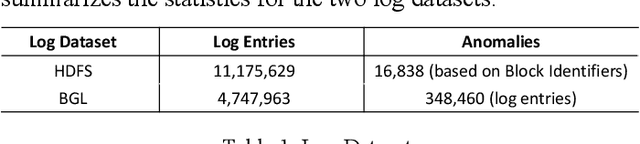

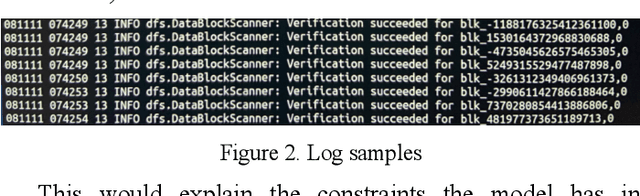

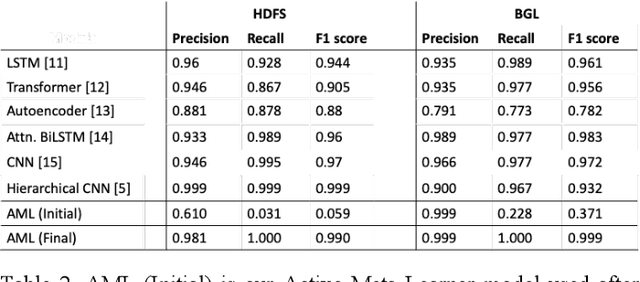

Abstract:The analysis of logs is a vital activity undertaken for cyber investigation, digital forensics and fault detection to enhance system and cyber resilience. However, performing log analysis is a complex task. It requires extensive knowledge of how the logs are generated and the format of the log entries used. It also requires extensive knowledge or expertise in identifying anomalous log entries from normal or benign log entries. This is especially complex when the forms of anomalous entries are constrained by the known forms of internal or external attack techniques or the varied forms of disruptions that may exist. New or evasive forms of such disruptions are difficult to define. The challenge of log analysis is further complicated by the volume of log entries. Even with the availability of such log data, labelling such log entries would be a massive undertaking. Hence this research seeks to address these challenges with its novel Deep Learning model that learns and improves itself progressively with inputs or corrections provided when available. The practical application of such a model construct facilitates log analysis or review with abilities to learn or incorporate new patterns to spot anomalies or ignore false positives.

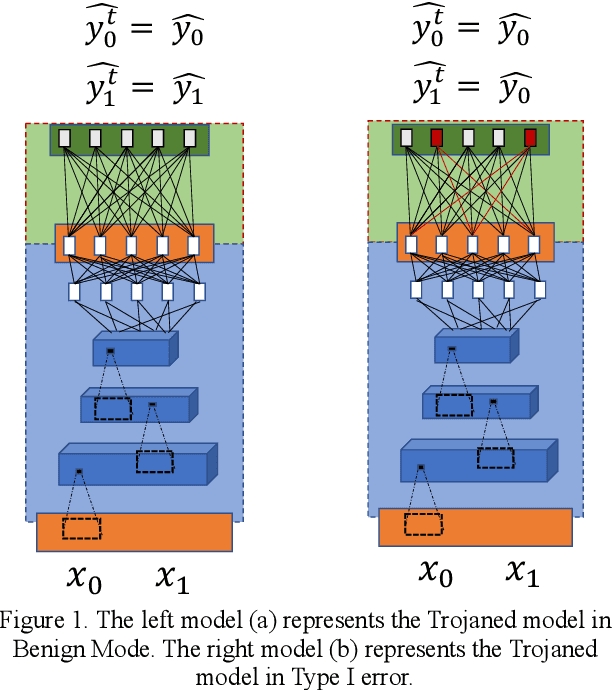

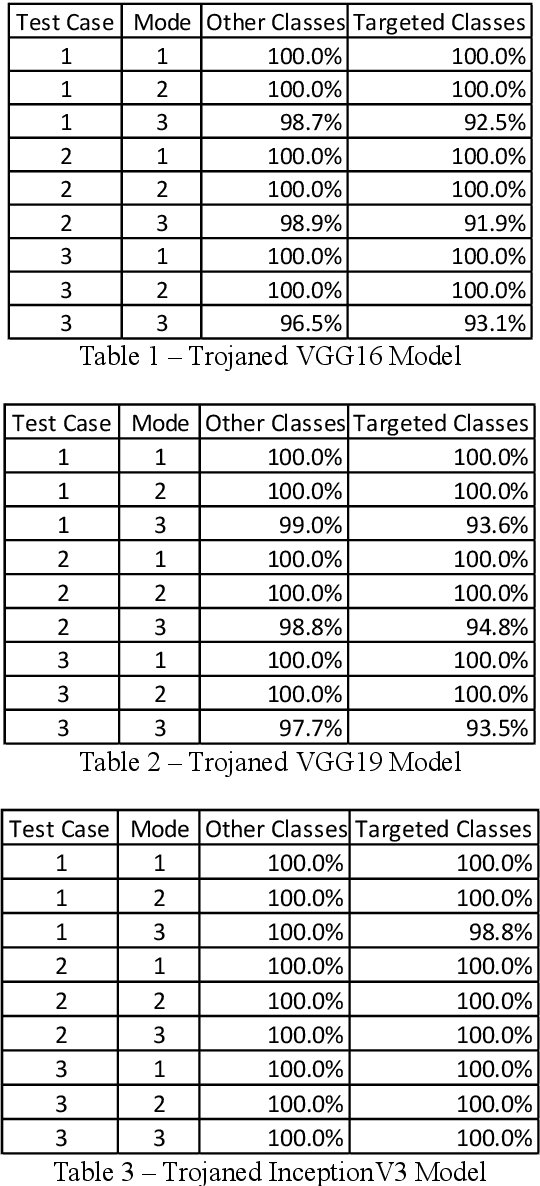

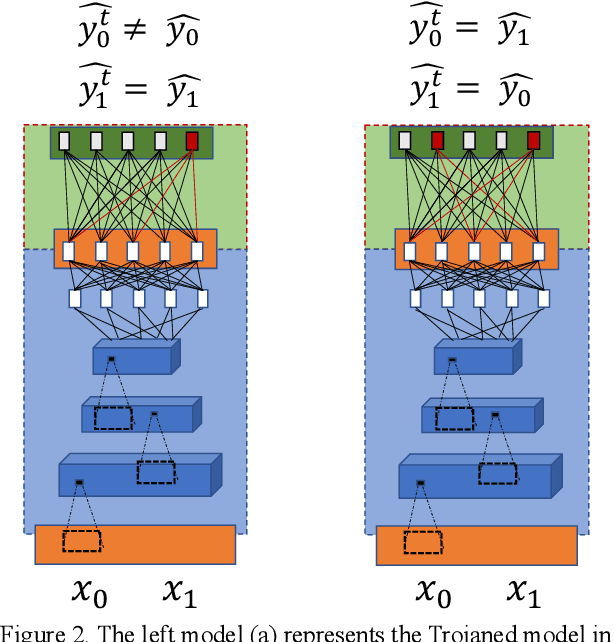

Blackbox Trojanising of Deep Learning Models : Using non-intrusive network structure and binary alterations

Aug 02, 2020

Abstract:Recent advancements in Artificial Intelligence, namely in Deep Learning, have heightened its adoption in many applications. Some are playing important roles to the extent that we are heavily dependent on them for our livelihood. However, as with all technologies, there are vulnerabilities that malicious actors could exploit. One form of exploitation is to turn these technologies, intended for good, into dual-purposed instruments that support deviant acts, like malicious software trojans. As part of proactive defense, researchers are identifying such vulnerabilities so that protective measures can be developed subsequently. This research explores a novel blackbox trojanising approach that applies a simple network structure modification to any deep learning image classification model, transforming a benign model into a deviant one through a simple manipulation of the weights to induce specific types of errors. Propositions to protect against the occurrence of such simple exploits are discussed in this research. This research highlights the importance of providing sufficient safeguards to these models so that the intended good of AI innovation and adoption may be protected.

IoT Network Behavioral Fingerprint Inference with Limited Network Trace for Cyber Investigation: A Meta Learning Approach

Feb 08, 2020

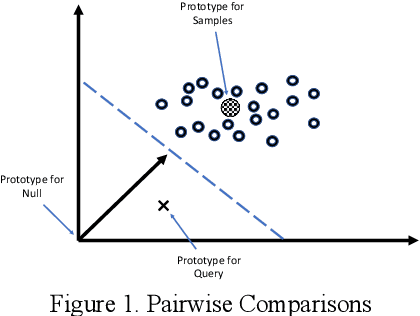

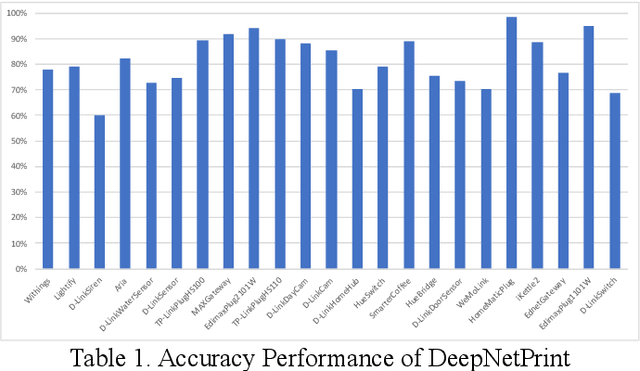

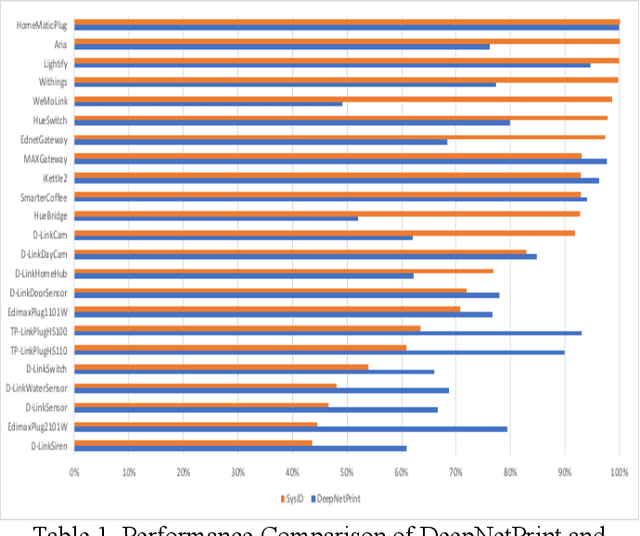

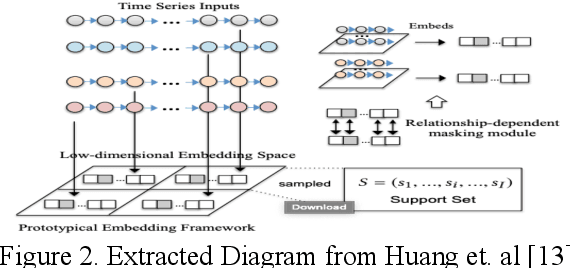

Abstract:The development and adoption of Internet of Things (IoT) devices will grow significantly in the coming years to enable Industry 4.0. Many forms of IoT devices will be developed and used across industry verticals. However, the euphoria of this technology adoption is shadowed by the solemn presence of cyber threats that will follow its growth trajectory. Cyber threats would either embed their malicious code or attack vulnerabilities in IoT devices, which could induce significant consequences in cyber and physical realms. In order to manage such destructive effects, incident responders and cyber investigators require the capabilities to find these rogue IoT devices and contain them quickly. Such online devices may only leave network activity traces. A collection of relevant traces could be used to infer the IoT device's network behavioral fingerprints, which in turn could facilitate the investigative search for these devices. However, the challenge is how to infer these fingerprints when there are limited network activity traces. This research proposes a novel model construct that learns to infer the network behavioral fingerprint of a specific IoT device based on limited network activity traces using a One-Card Time Series Meta-Learner called DeepNetPrint. Our research also demonstrates the application of DeepNetPrint to identify IoT devices, where it performs comparatively well against leading supervised learning models. Our solution would enable cyber investigators to identify specific IoT devices of interest while overcoming the constraint of having only limited network traces of those devices.
