John Roberts

Building Effective Safety Guardrails in AI Education Tools

Aug 07, 2025

Abstract: Generative AI tools have developed rapidly across the education sector, which in turn is leading to increased adoption by teachers. However, this raises concerns regarding the safety and age-appropriateness of the AI-generated content being created for use in classrooms. This paper explores Oak National Academy's approach to addressing these concerns in the development of the UK Government's first publicly available generative AI tool: our AI-powered lesson planning assistant (Aila). Aila is intended to support teachers in planning national curriculum-aligned lessons that are appropriate for pupils aged 5-16 years. To mitigate safety risks associated with AI-generated content, we have implemented four key safety guardrails: (1) prompt engineering to ensure AI outputs are generated within pedagogically sound and curriculum-aligned parameters, (2) input threat detection to mitigate attacks, (3) an Independent Asynchronous Content Moderation Agent (IACMA) to assess outputs against predefined safety categories, and (4) a human-in-the-loop approach that encourages teachers to review generated content before it is used in the classroom. Through our ongoing evaluation of these safety guardrails, we have identified several challenges and opportunities to take into account when implementing and testing them. This paper highlights ways to build more effective safety guardrails in generative AI education tools, including the ongoing iteration and refinement of guardrails and enabling cross-sector collaboration through sharing open-source code, datasets, and learnings.

* 9 pages, published in proceedings of International Conference on Artificial Intelligence in Education (AIED) 2025: Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium, Blue Sky, and WideAIED

Network-level Safety Metrics for Overall Traffic Safety Assessment: A Case Study

Jan 27, 2022

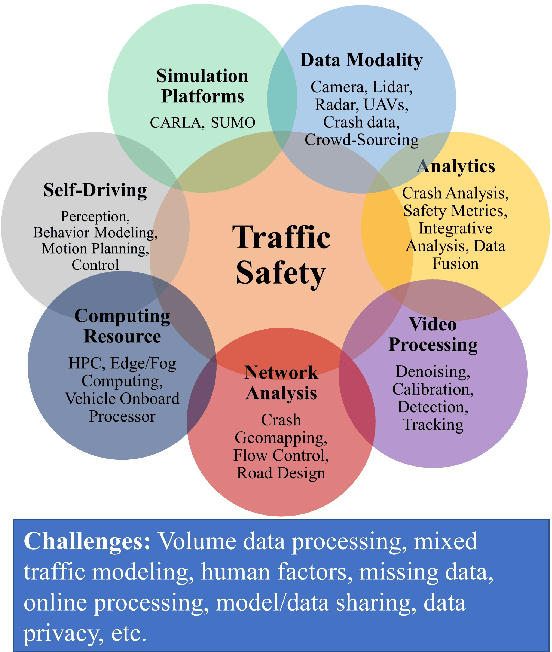

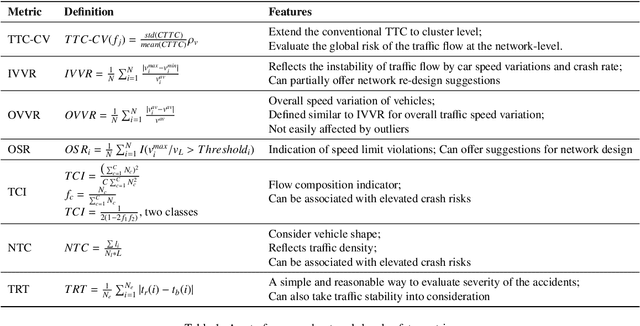

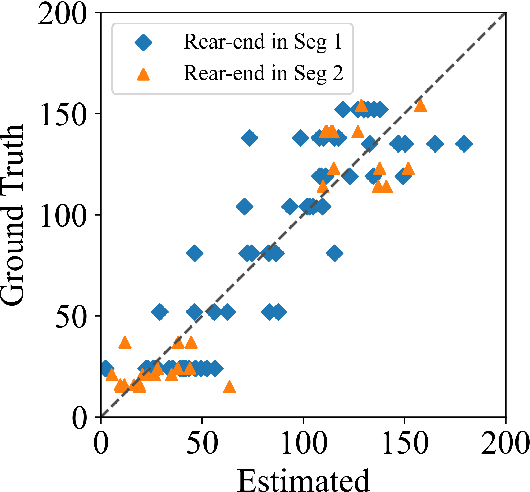

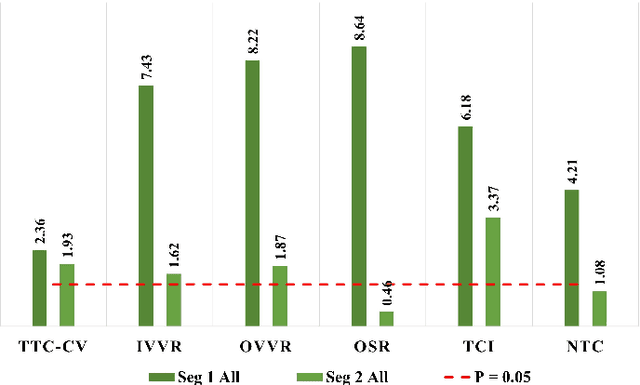

Abstract: Driving safety analysis has recently witnessed unprecedented results due to advances in computation frameworks, connected vehicle technology, new-generation sensors, and artificial intelligence (AI). In particular, recent advances in the performance of deep learning (DL) methods have enabled higher levels of safety for autonomous vehicles and large-volume imagery processing for driving safety analysis. An important application of DL methods is extracting driving safety metrics from traffic imagery. However, the majority of current methods use safety metrics for micro-scale analysis of individual crash incidents or near-crash events, which does not provide useful guidelines for overall network-level traffic management. On the other hand, large-scale safety assessment efforts mainly emphasize the spatial and temporal distributions of crashes, while not always revealing the safety violations that cause crashes. To bridge these two perspectives, we define a new set of network-level safety metrics for the overall safety assessment of traffic flow by processing imagery taken by roadside infrastructure sensors. An integrative analysis of the safety metrics and crash data reveals temporal and spatial correlation between the representative network-level safety metrics and the crash frequency. The analysis is performed using two video cameras in the state of Arizona along with a 5-year crash report obtained from the Arizona Department of Transportation. The results confirm that network-level safety metrics can be used by traffic management teams to equip traffic monitoring systems with advanced AI-based risk analysis and to make timely traffic flow control decisions.
