Jason Blocklove

Large Language Models (LLMs) for Electronic Design Automation (EDA)

Aug 27, 2025

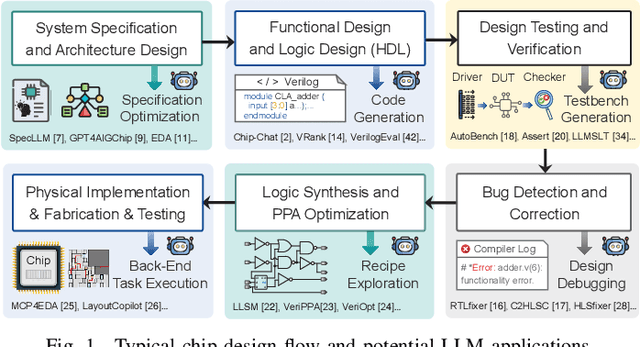

Abstract: With the growing complexity of modern integrated circuits, hardware engineers are required to devote more effort to the full design-to-manufacturing workflow. This workflow involves numerous iterations, making it both labor-intensive and error-prone. Therefore, there is an urgent demand for more efficient Electronic Design Automation (EDA) solutions to accelerate hardware development. Recently, large language models (LLMs) have shown remarkable advancements in contextual comprehension, logical reasoning, and generative capabilities. Since hardware designs and intermediate scripts can be represented as text, integrating LLMs into EDA offers a promising opportunity to simplify and even automate the entire workflow. Accordingly, this paper provides a comprehensive overview of incorporating LLMs into EDA, with emphasis on their capabilities, limitations, and future opportunities. Three case studies, along with their outlook, are introduced to demonstrate the capabilities of LLMs in hardware design, testing, and optimization. Finally, future directions and challenges are highlighted to further explore the potential of LLMs in shaping next-generation EDA, providing valuable insights for researchers interested in leveraging advanced AI technologies for EDA.

Evaluating LLMs for Hardware Design and Test

Apr 23, 2024

Abstract: Large Language Models (LLMs) have demonstrated capabilities for producing code in Hardware Description Languages (HDLs). However, most of the focus remains on their ability to write functional code, not test code. The hardware design process consists of both design and test, so eschewing validation and verification leaves considerable potential benefit unexplored, given that a design and test framework may allow for progress towards full automation of the digital design pipeline. In this work, we perform one of the first studies exploring how an LLM can both design and test hardware modules from provided specifications. Using a suite of 8 representative benchmarks, we examined the capabilities and limitations of state-of-the-art conversational LLMs when producing Verilog for functional and verification purposes. We taped out the benchmarks on a Skywater 130nm shuttle and received the functional chip.

Chip-Chat: Challenges and Opportunities in Conversational Hardware Design

May 22, 2023

Abstract: Modern hardware design starts with specifications provided in natural language. These are then translated by hardware engineers into appropriate Hardware Description Languages (HDLs) such as Verilog before synthesizing circuit elements. Automating this translation could reduce sources of human error from the engineering process. But it is only recently that artificial intelligence (AI) has demonstrated capabilities for machine-based end-to-end design translations. Commercially available instruction-tuned Large Language Models (LLMs) such as OpenAI's ChatGPT and Google's Bard claim to be able to produce code in a variety of programming languages, but studies examining them for hardware are still lacking. In this work, we thus explore the challenges faced and opportunities presented when leveraging these recent advances in LLMs for hardware design. Using a suite of 8 representative benchmarks, we examined the capabilities and limitations of state-of-the-art conversational LLMs when producing Verilog for functional and verification purposes. Given that the LLMs performed best when used interactively, we then performed a longer, fully conversational case study where a hardware engineer co-designed a novel 8-bit accumulator-based microprocessor architecture. We sent the benchmarks and processor to tapeout in a Skywater 130nm shuttle, meaning that these 'Chip-Chats' resulted in what we believe to be the world's first wholly-AI-written HDL for tapeout.
