James Noeckel

View2CAD: Reconstructing View-Centric CAD Models from Single RGB-D Scans

Apr 05, 2025

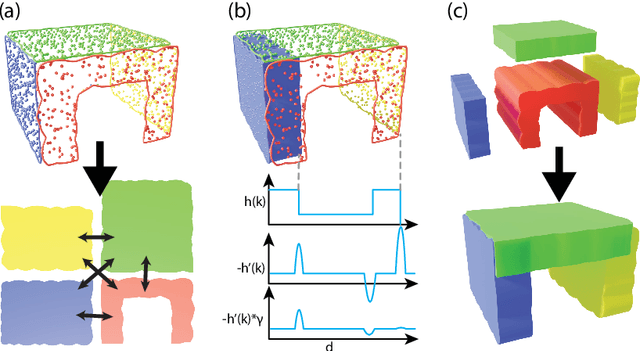

Abstract: Parametric CAD models, represented as Boundary Representations (B-Reps), are foundational to modern design and manufacturing workflows, offering the precision and topological breakdown required for downstream tasks such as analysis, editing, and fabrication. However, B-Reps are often inaccessible due to conversion to more standardized, less expressive geometry formats. Existing methods to recover B-Reps from measured data require complete, noise-free 3D data, which are laborious to obtain. We alleviate this difficulty by enabling the precise reconstruction of CAD shapes from a single RGB-D image. We propose a method that addresses the challenge of reconstructing only the observed geometry from a single view. To allow for these partial observations, and to avoid hallucinating incorrect geometry, we introduce a novel view-centric B-Rep (VB-Rep) representation, which incorporates structures to handle visibility limits and encode geometric uncertainty. We combine panoptic image segmentation with iterative geometric optimization to refine and improve the reconstruction process. Our results demonstrate high-quality reconstruction on synthetic and real RGB-D data, showing that our method can bridge the reality gap.

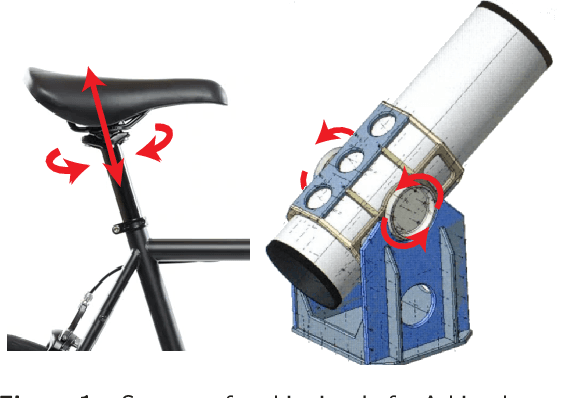

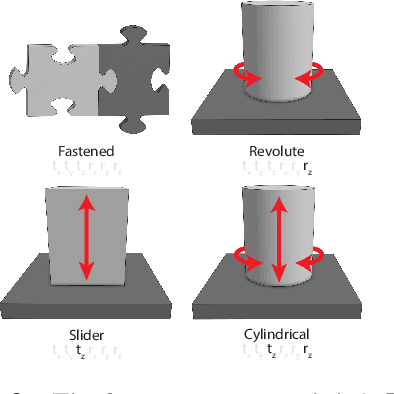

Mates2Motion: Learning How Mechanical CAD Assemblies Work

Aug 02, 2022

Abstract: We describe our work on inferring the degrees of freedom between mated parts in mechanical assemblies using deep learning on CAD representations. We train our model using a large dataset of real-world mechanical assemblies consisting of CAD parts and mates joining them together. We present methods for re-defining these mates to make them better reflect the motion of the assembly, as well as narrowing down the possible axes of motion. We also conduct a user study to create a motion-annotated test set with more reliable labels.

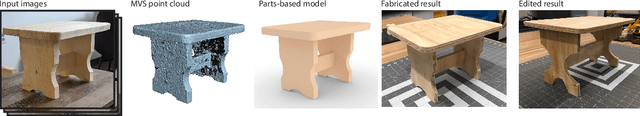

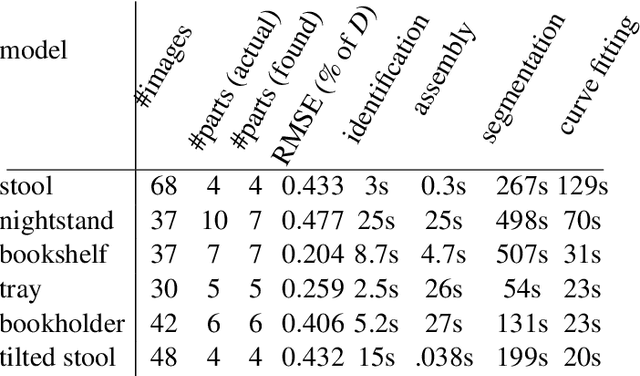

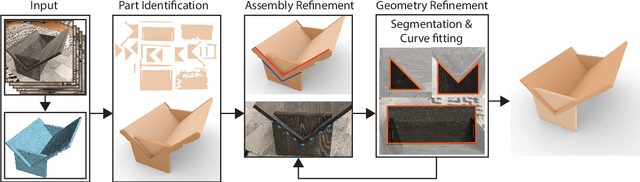

Fabrication-Aware Reverse Engineering for Carpentry

Jul 21, 2021

Abstract: We propose a novel method to generate fabrication blueprints from images of carpentered items. While 3D reconstruction from images is a well-studied problem, typical approaches produce representations that are ill-suited for computer-aided design and fabrication applications. Our key insight is that fabrication processes define and constrain the design space for carpentered objects, and can be leveraged to develop novel reconstruction methods. Our method makes use of domain-specific constraints to recover not just valid geometry, but a semantically valid assembly of parts, using a combination of image-based and geometric optimization techniques. We demonstrate our method on a variety of wooden objects and furniture, and show that we can automatically obtain designs that are both easy to edit and accurate recreations of the ground truth. We further illustrate how our method can be used to fabricate a physical replica of the captured object as well as a customized version, which can be produced by directly editing the reconstructed model in CAD software.
