Henry Z. Lo

Count-ception: Counting by Fully Convolutional Redundant Counting

Jul 23, 2017

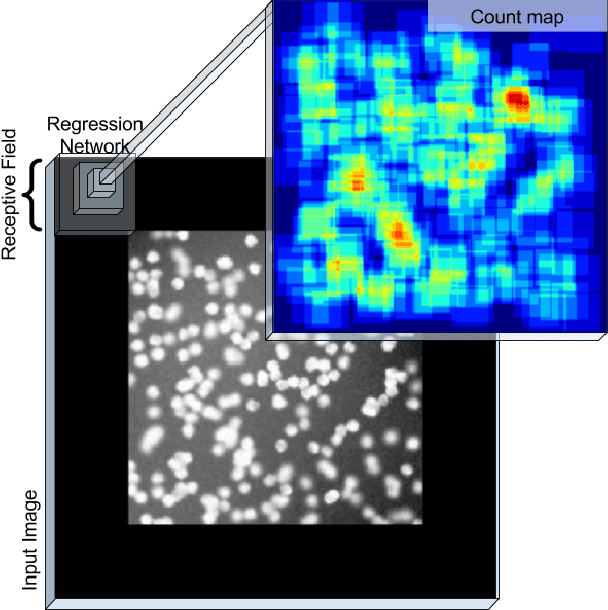

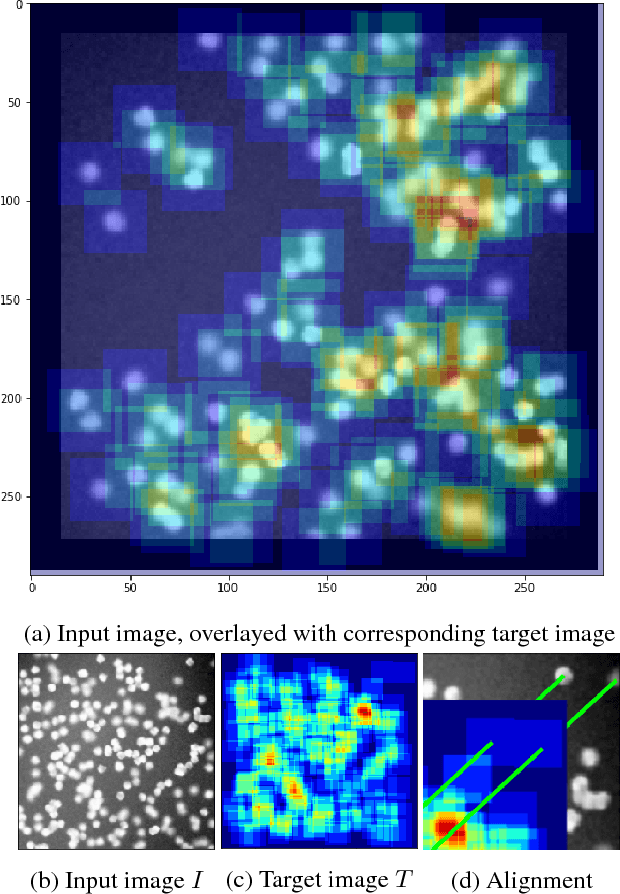

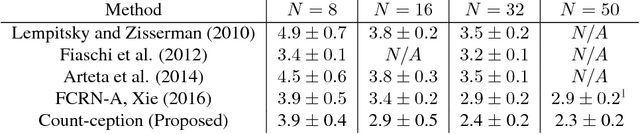

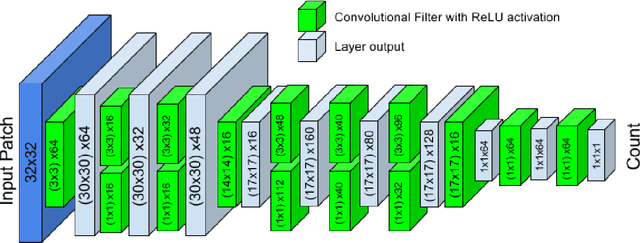

Abstract: Counting objects in digital images is a process that should be replaced by machines. This tedious task is time consuming and prone to errors due to fatigue of human annotators. The goal is to have a system that takes as input an image and returns a count of the objects inside and justification for the prediction in the form of object localization. We repose a problem, originally posed by Lempitsky and Zisserman, to instead predict a count map which contains redundant counts based on the receptive field of a smaller regression network. The regression network predicts a count of the objects that exist inside this frame. By processing the image in a fully convolutional way, each pixel is accounted for some number of times: the number of windows that include it, which equals the number of pixels in each window (e.g., 32x32 = 1024). To recover the true count we take the average over the redundant predictions. Our contribution is redundant counting instead of predicting a density map in order to average over errors. We also propose a novel deep neural network architecture adapted from the Inception family of networks called the Count-ception network. Together our approach results in a 20% relative improvement (2.9 to 2.3 MAE) over the state of the art method by Xie, Noble, and Zisserman in 2016.
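
The redundant-counting arithmetic can be illustrated with a small sketch. This is not the Count-ception network: exact per-window counts stand in for the regression network's predictions, and the function names `redundant_count_map` and `recover_count`, the zero-padding scheme, and the toy dot map are assumptions made for illustration. With a window of side `r` and enough padding that every pixel falls inside exactly `r * r` windows, summing the count map and dividing by `r * r` gives back the object count.

```python
def redundant_count_map(dots, r):
    """Count objects in every r x r window of a zero-padded dot map.

    `dots` is a list of rows with 1 at each annotated object, 0 elsewhere.
    The map has (h + r - 1) x (w + r - 1) entries, one per window position,
    so each original pixel is covered by exactly r * r windows.
    """
    h, w = len(dots), len(dots[0])
    count_map = [[0] * (w + r - 1) for _ in range(h + r - 1)]
    for y in range(h):
        for x in range(w):
            if dots[y][x]:
                # A dot at (y, x) lies inside the r * r windows whose
                # indices are (y + dy, x + dx) for 0 <= dy, dx < r.
                for dy in range(r):
                    for dx in range(r):
                        count_map[y + dy][x + dx] += dots[y][x]
    return count_map


def recover_count(count_map, r):
    """Average out the redundancy: each object was counted r * r times."""
    return sum(sum(row) for row in count_map) / (r * r)


# Hypothetical 4 x 5 annotation with three objects.
dots = [
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1],
]
count_map = redundant_count_map(dots, r=3)
print(recover_count(count_map, r=3))  # 3.0
```

In the setting the abstract describes, the entries of the count map would be noisy network outputs rather than exact counts, and dividing the sum by the window area is what averages those errors across the overlapping windows.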

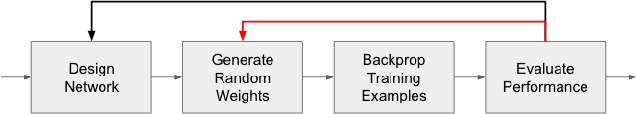

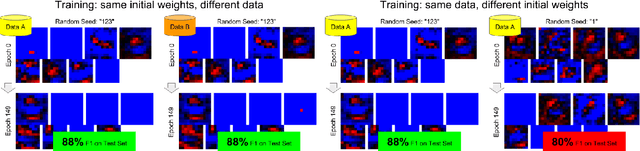

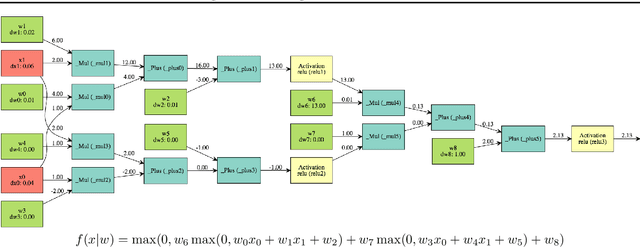

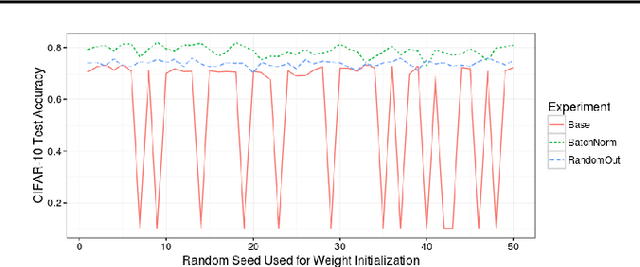

RandomOut: Using a convolutional gradient norm to rescue convolutional filters

May 29, 2017

Abstract: Filters in convolutional neural networks are sensitive to their initialization. The random numbers used to initialize filters are a bias and determine if you will "win" and converge to a satisfactory local minimum, so we call this the Filter Lottery. We observe that the 28x28 Inception-V3 model without Batch Normalization fails to train 26% of the time when varying the random seed alone. This is a problem that affects the trial and error process of designing a network. Because random seeds have a large impact, it is hard to evaluate a network design without trying many different random starting weights. This work aims to reduce the bias imposed by the initial weights so a network converges more consistently. We propose to evaluate and replace specific convolutional filters that have little impact on the prediction. We use the gradient norm to evaluate the impact of a filter on error, and re-initialize a filter when the gradient norm of its weights falls below a specific threshold. This consistently improves accuracy on the 28x28 Inception-V3 with a median increase of +3.3%. In effect our method RandomOut increases the number of filters explored without increasing the size of the network. We observe that the RandomOut method has more consistent generalization performance, having a standard deviation of 1.3% instead of 2% when varying random seeds, and does so faster and with fewer parameters.
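
The re-initialization rule described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: each filter is represented as a flat list of weights, the L2 norm is assumed as the gradient norm, the replacement weights are drawn from a zero-mean Gaussian, and the names `grad_norm` and `random_out`, the `scale` parameter, and all numbers are hypothetical.

```python
import math
import random


def grad_norm(grad):
    """L2 norm of one filter's weight gradient (choice of norm is an assumption)."""
    return math.sqrt(sum(g * g for g in grad))


def random_out(filters, grads, threshold, rng, scale=0.1):
    """Re-initialize every filter whose gradient norm is below `threshold`.

    Returns the updated filters and the indices that were replaced.
    Gaussian re-initialization with std `scale` is an illustrative choice.
    """
    new_filters, replaced = [], []
    for i, (weights, grad) in enumerate(zip(filters, grads)):
        if grad_norm(grad) < threshold:
            new_filters.append([rng.gauss(0.0, scale) for _ in weights])
            replaced.append(i)
        else:
            new_filters.append(list(weights))
    return new_filters, replaced


# Hypothetical example: the second filter receives almost no gradient.
rng = random.Random(0)
filters = [[0.5, -0.2, 0.1], [0.3, 0.3, 0.3], [-0.4, 0.0, 0.2]]
grads = [[0.1, 0.2, -0.1], [1e-6, 0.0, -1e-6], [0.05, -0.3, 0.2]]
new_filters, replaced = random_out(filters, grads, threshold=1e-3, rng=rng)
print(replaced)  # [1]
```

Because only low-impact filters are redrawn, a rule of this kind explores more candidate filters over the course of training while the number of filters in the network stays fixed.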

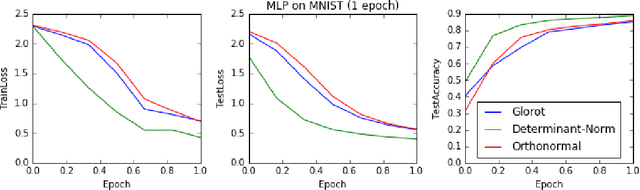

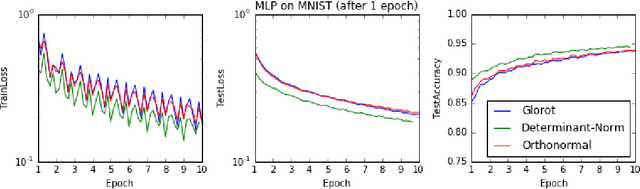

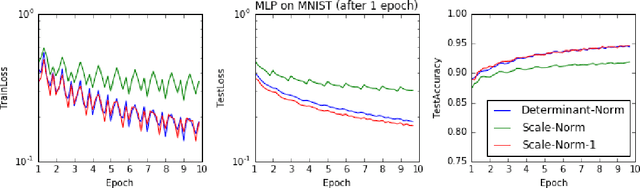

Scale Normalization

Apr 26, 2016

Abstract: One of the difficulties of training deep neural networks is caused by improper scaling between layers. Scaling issues introduce exploding/vanishing gradient problems, and have typically been addressed by careful scale-preserving initialization. We investigate the value of preserving scale, or isometry, beyond the initial weights. We propose two methods of maintaining isometry, one exact and one stochastic. Preliminary experiments show that both determinant normalization and scale normalization effectively speed up learning. Results suggest that isometry is important in the beginning of learning, and maintaining it leads to faster learning.

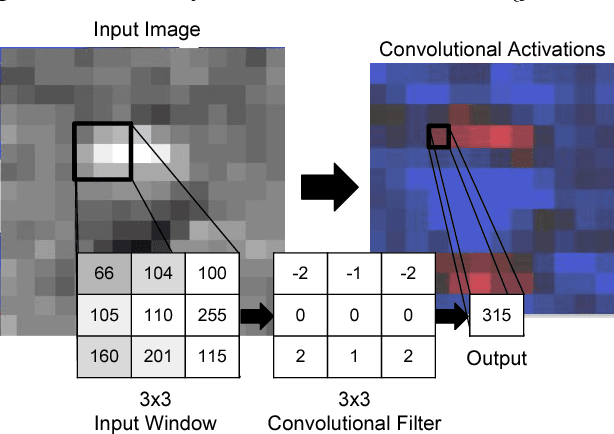

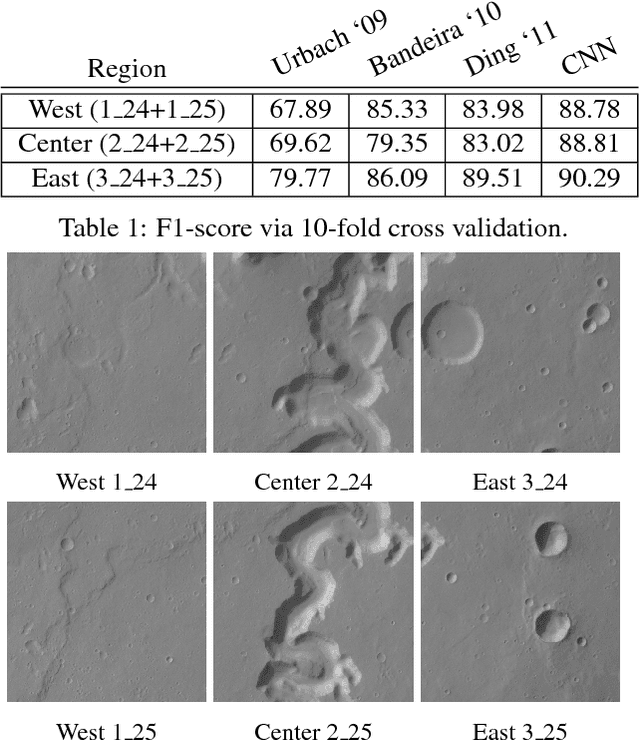

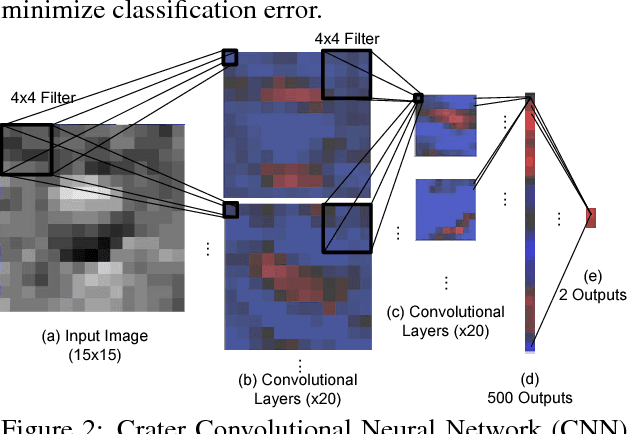

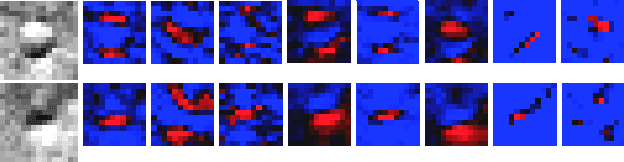

Crater Detection via Convolutional Neural Networks

Jan 05, 2016

Abstract: Craters are among the most studied geomorphic features in the Solar System because they yield important information about the past and present geological processes and provide information about the relative ages of observed geologic formations. We present a method for automatic crater detection using advanced machine learning to deal with the large amount of satellite imagery collected. The challenge of automatically detecting craters comes from their complex surface, because their shape erodes over time to blend into the surface. Bandeira provided a seminal dataset that embodies this challenge, which remains an unsolved pattern recognition problem to this day. There has been work to solve this challenge based on extracting shape and contrast features and then applying classification models on those features. The limiting factor in this existing work is the use of hand-crafted filters on the image, such as Gabor or Sobel filters or Haar features. These hand-crafted methods rely on domain knowledge to construct. We would like to learn the optimal filters and features based on training examples. In order to dynamically learn filters and features, we look to Convolutional Neural Networks (CNNs), which have shown their dominance in computer vision. The power of CNNs is that they can learn image filters which generate features for high accuracy classification.
