Hengyi Li

AVC-DPO: Aligned Video Captioning via Direct Preference Optimization

Jul 02, 2025Abstract:Although video multimodal large language models (video MLLMs) have achieved substantial progress in video captioning tasks, it remains challenging to adjust the focal emphasis of video captions according to human preferences. To address this limitation, we propose Aligned Video Captioning via Direct Preference Optimization (AVC-DPO), a post-training framework designed to enhance captioning capabilities in video MLLMs through preference alignment. Our approach designs enhanced prompts that specifically target temporal dynamics and spatial information-two key factors that humans care about when watching a video-thereby incorporating human-centric preferences. AVC-DPO leverages the same foundation model's caption generation responses under varied prompt conditions to conduct preference-aware training and caption alignment. Using this framework, we have achieved exceptional performance in the LOVE@CVPR'25 Workshop Track 1A: Video Detailed Captioning Challenge, achieving first place on the Video Detailed Captioning (VDC) benchmark according to the VDCSCORE evaluation metric.

Recognition of Oracle Bone Inscriptions by using Two Deep Learning Models

May 04, 2021

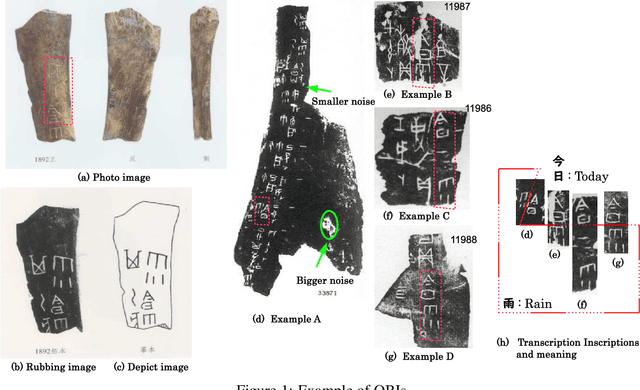

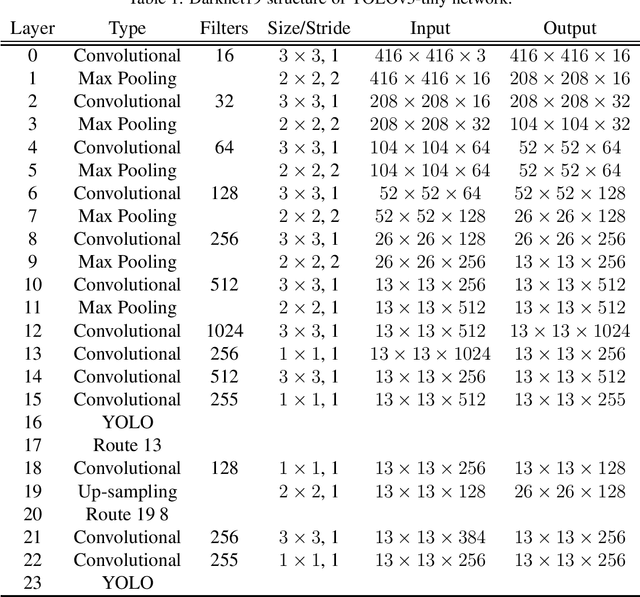

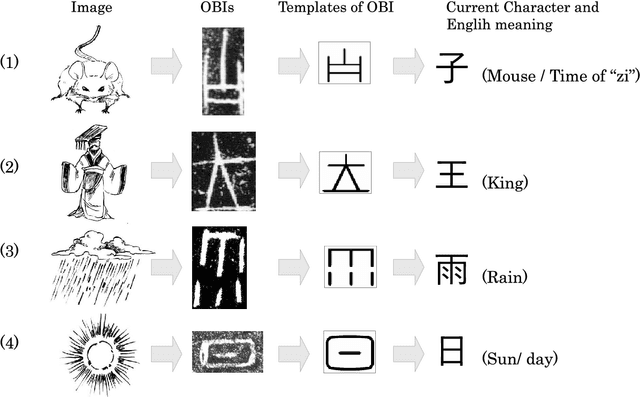

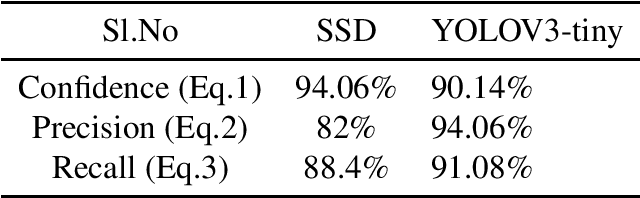

Abstract:Oracle bone inscriptions (OBIs) contain some of the oldest characters in the world and were used in China about 3000 years ago. As an ancient form of literature, OBIs store a lot of information that can help us understand the world history, character evaluations, and more. However, as OBIs were found only discovered about 120 years ago, few studies have described them, and the aging process has made the inscriptions less legible. Hence, automatic character detection and recognition has become an important issue. This paper aims to design a online OBI recognition system for helping preservation and organization the cultural heritage. We evaluated two deep learning models for OBI recognition, and have designed an API that can be accessed online for OBI recognition. In the first stage, you only look once (YOLO) is applied for detecting and recognizing OBIs. However, not all of the OBIs can be detected correctly by YOLO, so we next utilize MobileNet to recognize the undetected OBIs by manually cropping the undetected OBI in the image. MobileNet is used for this second stage of recognition as our evaluation of ten state-of-the-art models showed that it is the best network for OBI recognition due to its superior performance in terms of accuracy, loss and time consumption. We installed our system on an application programming interface (API) and opened it for OBI detection and recognition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge