Heesung Kwon

Semantics to Space(S2S): Embedding semantics into spatial space for zero-shot verb-object query inferencing

Jun 13, 2019

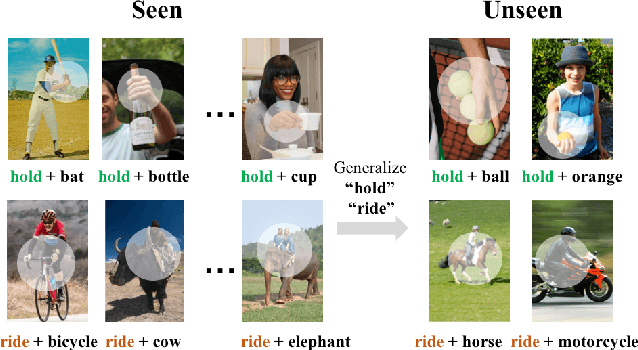

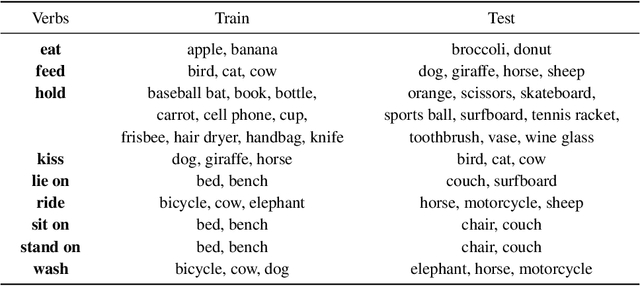

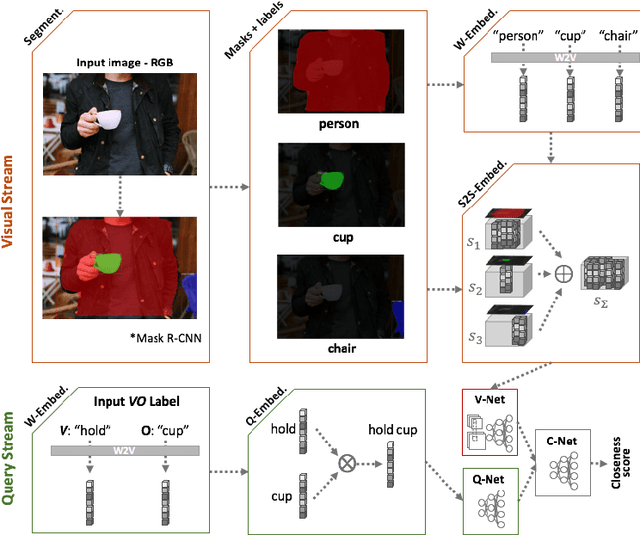

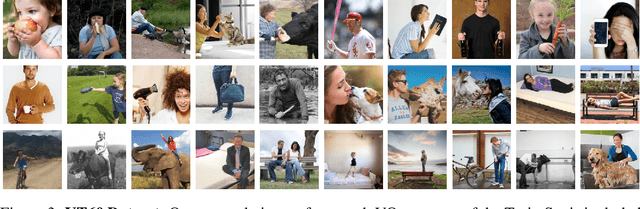

Abstract: We present a novel deep zero-shot learning (ZSL) model for inferring human-object interactions from verb-object (VO) queries. Whereas previous ZSL approaches feed semantic/textual information only into the query stream, we also embed the semantics into the visual representation stream. Our approach is powered by the Semantics-to-Space (S2S) architecture, in which semantics derived from the objects present in the image are embedded into a spatial space. This architecture captures the semantic attributes of the human and the objects together with their location, size, and silhouette information. As this is the first attempt to address zero-shot human-object-interaction inference with VO queries, we have constructed a new dataset, Verb-Transferability 60 (VT60). VT60 provides 60 different VO pairs with overlapping verbs, tailored for testing ZSL approaches with VO queries. Experimental evaluations show that our approach not only outperforms the state of the art but also consistently improves performance regardless of which ZSL baseline architecture is used.

Integrating Propositional and Relational Label Side Information for Hierarchical Zero-Shot Image Classification

Feb 14, 2019

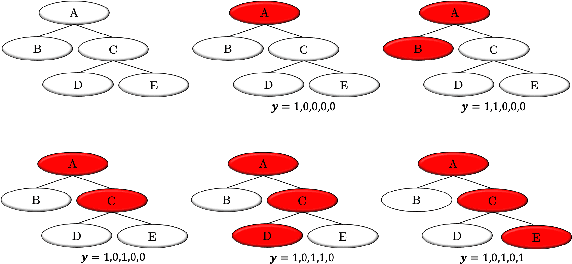

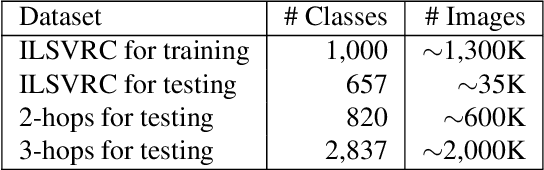

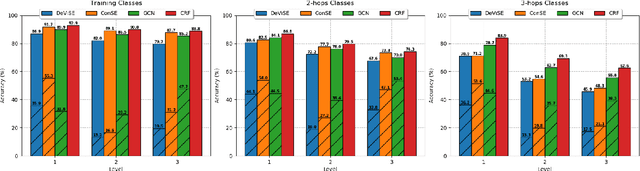

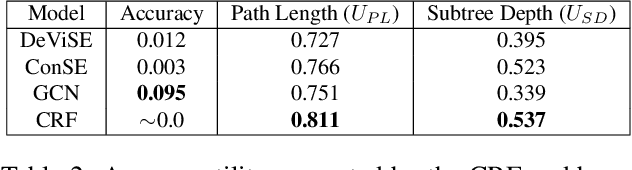

Abstract: Zero-shot learning (ZSL) is one of the most extreme forms of learning from scarce labeled data. It enables predicting that images belong to classes for which no labeled training instances are available. In this paper, we present a new ZSL framework that leverages both label attribute side information and a semantic label hierarchy. We present two methods, lifted zero-shot prediction and a custom conditional random field (CRF) model, that integrate both forms of side information. We propose benchmark tasks for this framework that focus on making predictions across a range of semantic levels. We show that lifted zero-shot prediction can dramatically outperform baseline methods when making predictions within specified semantic levels, and that the probability distribution provided by the CRF model can be leveraged to yield further performance improvements when making unconstrained predictions over the hierarchy.

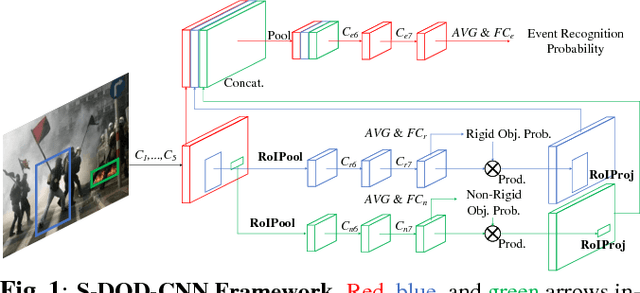

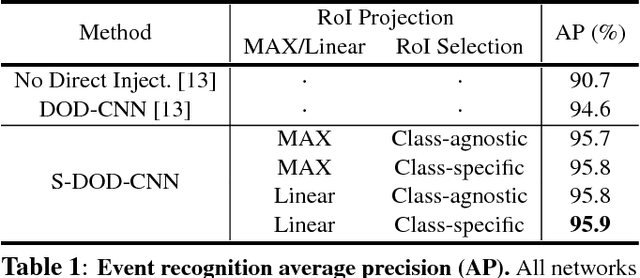

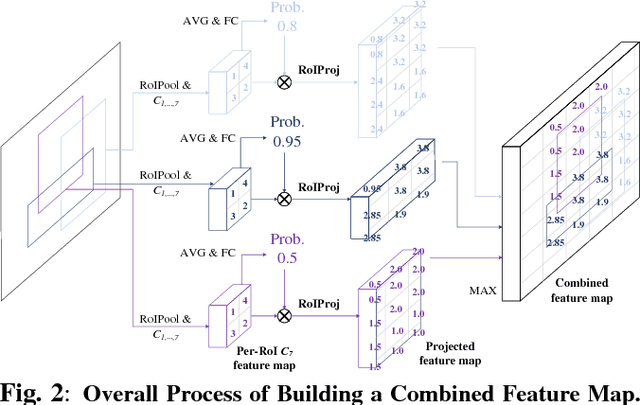

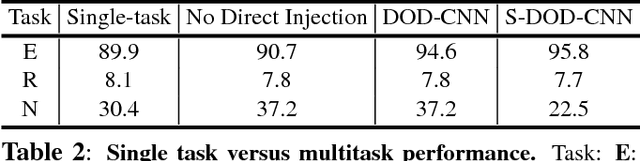

S-DOD-CNN: Doubly Injecting Spatially-Preserved Object Information for Event Recognition

Feb 11, 2019

Abstract: We present a novel event recognition approach called Spatially-preserved Doubly-injected Object Detection CNN (S-DOD-CNN), which incorporates spatially preserved object detection information in both a direct and an indirect way. Indirect injection is carried out by simply sharing the weights between the object detection modules and the event recognition module. Our novelty lies in preserving the spatial information for the direct injection. Once multiple regions of interest (RoIs) are acquired, their feature maps are computed and then projected onto a spatially-preserving combined feature map using one of the four RoI Projection approaches we present. In our architecture, the combined feature maps generated for object detection are directly injected into the event recognition module. Our method provides state-of-the-art accuracy for malicious event recognition.
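As a rough illustration of projecting per-RoI feature maps onto a single spatially-preserving map, the sketch below pastes each RoI's feature patch back into its box location and merges overlaps with an element-wise maximum. The abstract does not describe the four RoI Projection approaches, so the max merge, the nearest-neighbour resizing, the function name `project_rois`, and the assumption of non-negative (post-ReLU) features are all illustrative choices rather than the paper's method.

```python
def project_rois(rois, height, width):
    """Paste RoI feature patches onto one (height x width) map.

    rois: list of ((x0, y0, x1, y1), patch), where the box uses
          half-open pixel coordinates and patch is a 2D list of
          non-negative floats (assumed, e.g. post-ReLU features).
    Overlapping RoIs are merged with an element-wise max (an assumption).
    """
    combined = [[0.0] * width for _ in range(height)]
    for (x0, y0, x1, y1), patch in rois:
        patch_h, patch_w = len(patch), len(patch[0])
        box_h, box_w = y1 - y0, x1 - x0
        # Clip the box to the map so out-of-range boxes are handled safely.
        for y in range(max(y0, 0), min(y1, height)):
            for x in range(max(x0, 0), min(x1, width)):
                # Nearest-neighbour lookup from box coordinates into the patch.
                py = (y - y0) * patch_h // box_h
                px = (x - x0) * patch_w // box_w
                combined[y][x] = max(combined[y][x], patch[py][px])
    return combined


# Two overlapping RoIs on a 3x3 map.
example_rois = [
    ((0, 0, 2, 2), [[1.0]]),
    ((1, 1, 3, 3), [[2.0, 3.0], [4.0, 5.0]]),
]
for row in project_rois(example_rois, height=3, width=3):
    print(row)
```

The point of the sketch is only that each RoI's features stay at the location they came from, in contrast to pooling them into a location-free vector.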

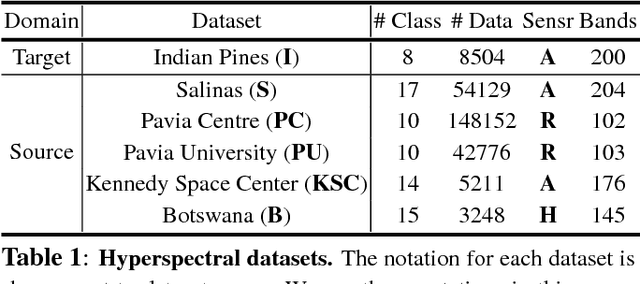

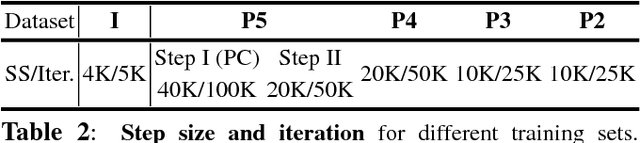

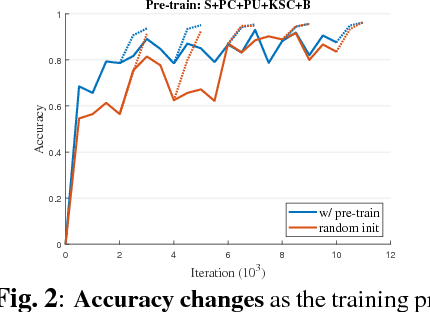

Is Pretraining Necessary for Hyperspectral Image Classification?

Jan 24, 2019

Abstract: We address two questions about training a convolutional neural network (CNN) for hyperspectral image classification: i) is it possible to build a pre-trained network? and ii) does pre-training improve performance? To answer the first question, we have devised an approach that pre-trains a network on multiple source datasets that differ in their hyperspectral characteristics and fine-tunes it on a target dataset. This approach effectively resolves the architectural issue that arises when transferring meaningful information between the source and the target networks. To answer the second question, we carried out several ablation experiments. Based on the experimental results, a network trained from scratch performs as well as a network fine-tuned from a pre-trained network. However, we observed that pre-training has its own advantage in achieving better performance when deeper networks are required.

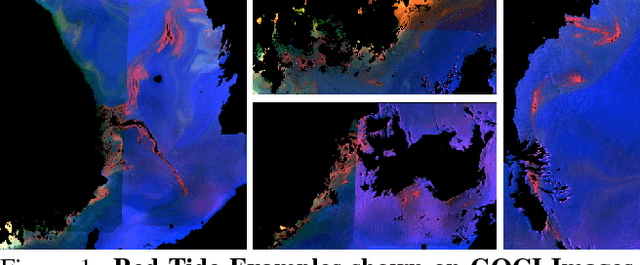

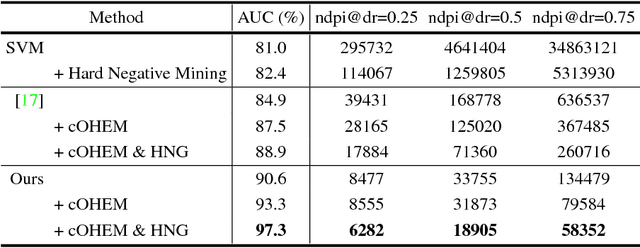

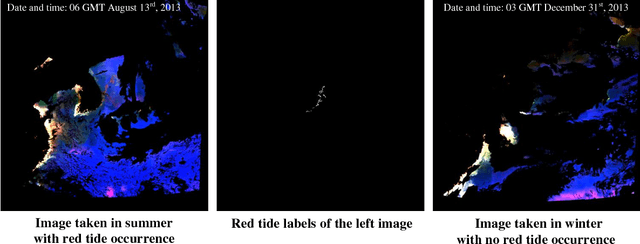

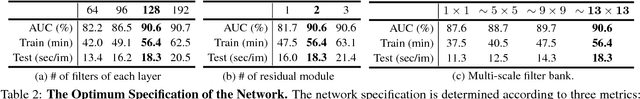

Discovering and Generating Hard Examples for Training a Red Tide Detector

Dec 13, 2018

Abstract: Satellite images are increasingly used for accurate detection of natural phenomena, such as red tide, that adversely affect wildlife and humans. However, red tide detection on satellite images remains a very hard task due to the unpredictable nature of red tide occurrence, the extreme sparsity of red tide samples, difficulties in accurate groundtruthing, etc. In this paper, we aim to tackle both the data sparsity and groundtruthing issues by primarily addressing two challenges: i) extreme data imbalance between red tide and non-red tide examples and ii) a significant lack of hard non-red tide examples that can enhance detection performance. We devise a 9-layer fully convolutional network jointly optimized with two plug-in modules tailored to overcoming the two challenges: i) cascaded online hard example mining (cOHEM) to ease the data imbalance and ii) a hard negative example generator (HNG) to supplement the hard negative (non-red tide) examples. Our proposed network, jointly trained with cOHEM and HNG, provides state-of-the-art red tide detection accuracy on GOCI satellite images.
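For readers unfamiliar with online hard example mining, the following minimal sketch shows the basic selection step: rank the examples in a batch by their current loss and keep only the hardest fraction for the weight update. It illustrates plain single-stage hard example selection, not the cascaded variant (cOHEM) proposed in the paper, whose details the abstract does not give. The function name `select_hard_examples` and the `keep_ratio` parameter are hypothetical.

```python
def select_hard_examples(losses, keep_ratio=0.25):
    """Return indices of the highest-loss examples, hardest first.

    losses: per-example loss values from the current forward pass.
    keep_ratio: fraction of the batch to keep (hypothetical parameter).
    """
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    keep = max(1, int(len(losses) * keep_ratio))
    # Sort example indices by loss, largest (hardest) first.
    ranked = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return ranked[:keep]


batch_losses = [0.05, 1.20, 0.30, 2.10, 0.01, 0.90, 0.02, 0.15]
print(select_hard_examples(batch_losses, keep_ratio=0.25))  # [3, 1]
```

With heavily imbalanced data, most easy negatives have near-zero loss, so a selection of this kind concentrates the update on the few informative examples.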

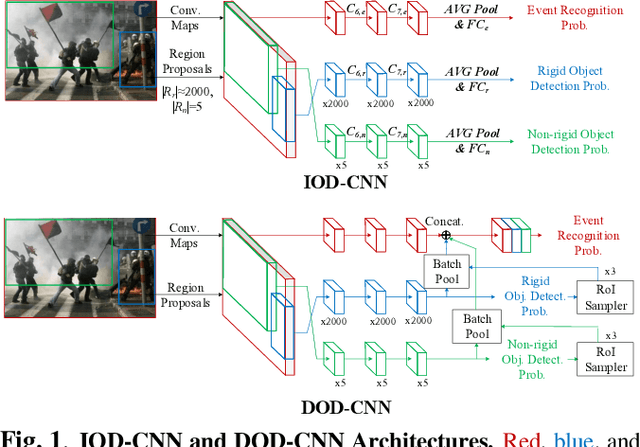

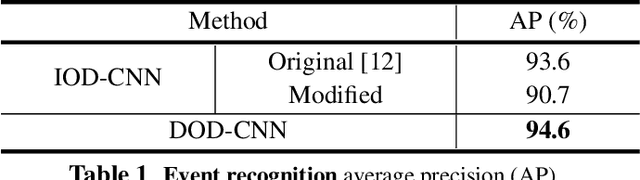

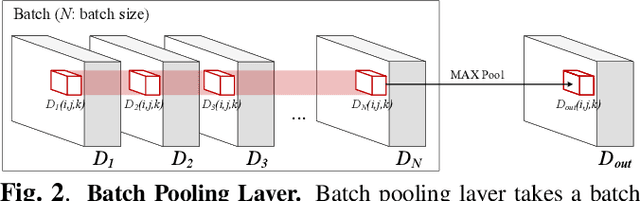

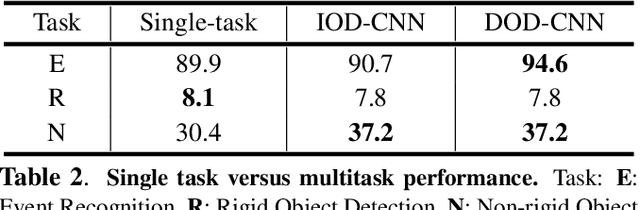

DOD-CNN: Doubly-injecting Object Information for Event Recognition

Nov 07, 2018

Abstract: Recognizing an event in an image can be enhanced by detecting relevant objects in two ways: 1) indirectly utilizing object detection information within a unified architecture or 2) directly making use of the object detection outputs. We introduce a novel approach, referred to as Doubly-injected Object Detection CNN (DOD-CNN), that exploits the object information in both ways for the task of event recognition. The structure of this network is inspired by the Integrated Object Detection CNN (IOD-CNN), where object information is indirectly exploited by the event recognition module through the shared portion of the network. In the DOD-CNN architecture, the intermediate object detection outputs are directly injected into the event recognition network while keeping the indirect sharing structure inherited from the IOD-CNN; the object information is thus "doubly injected". We also introduce a batch pooling layer that constructs one representative feature map from multiple object hypotheses. We demonstrate the effectiveness of injecting the object detection information in two different ways on the task of malicious event recognition.
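One way to picture a layer that builds a single representative feature map from several object hypotheses is an element-wise reduction across the hypotheses. The sketch below uses an element-wise maximum; the abstract does not state which pooling operator the batch pooling layer uses, so the max, the function name `batch_pool`, and the plain 2D-list representation are assumptions made for illustration.

```python
def batch_pool(feature_maps):
    """Reduce several equally sized 2D feature maps to one map.

    feature_maps: list of 2D lists, one per object hypothesis.
    Uses an element-wise max across hypotheses (an assumed operator).
    """
    if not feature_maps:
        raise ValueError("need at least one feature map")
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [max(fmap[r][c] for fmap in feature_maps) for c in range(cols)]
        for r in range(rows)
    ]


hypotheses = [
    [[1, 0], [0, 2]],
    [[0, 3], [1, 1]],
]
print(batch_pool(hypotheses))  # [[1, 3], [1, 2]]
```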

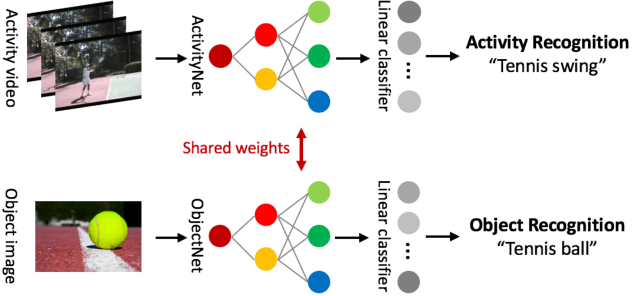

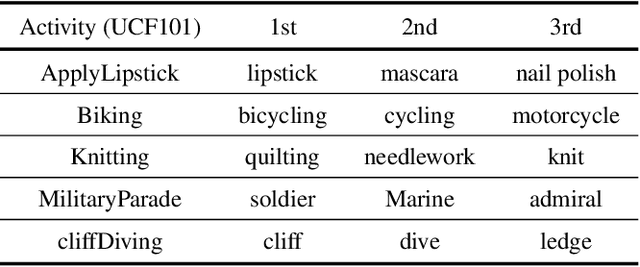

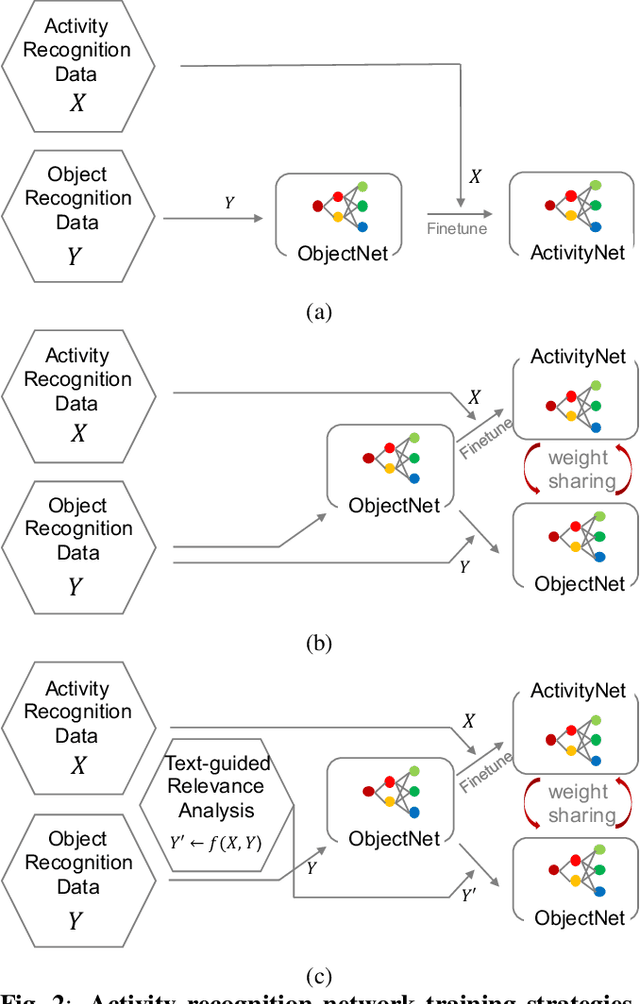

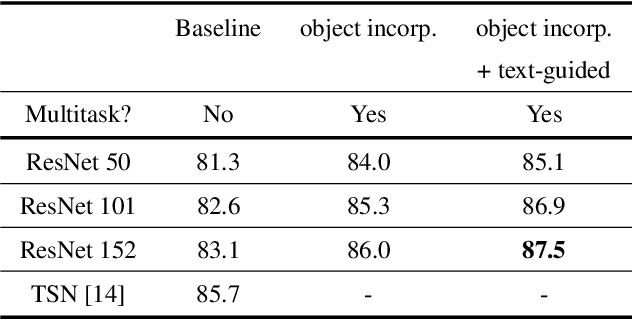

Object and Text-guided Semantics for CNN-based Activity Recognition

May 04, 2018

Abstract: Many previous methods have demonstrated the importance of considering semantically relevant objects for video-based human activity recognition, yet none has harnessed large text corpora to relate objects to activities and transfer that knowledge into learning a unified deep convolutional neural network. We present a novel activity recognition CNN that co-learns the object recognition task in an end-to-end multitask learning scheme to improve upon the baseline activity recognition performance. We further improve upon the multitask learning approach by exploiting a text-guided semantic space to select the most relevant objects with respect to the target activities. To the best of our knowledge, we are the first to investigate this approach.
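Selecting the objects most relevant to an activity in a text-derived semantic space can be sketched as a nearest-neighbour search over word vectors. The example below ranks objects by cosine similarity to an activity vector. The three-dimensional vectors are made-up toy values, not real embeddings, and the use of cosine similarity and the function names are assumptions; the abstract does not specify the similarity measure or embedding model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_objects(activity_vec, object_vecs, k=2):
    """Return the k object names closest to the activity vector."""
    ranked = sorted(
        object_vecs,
        key=lambda name: cosine(activity_vec, object_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy vectors for illustration only (hypothetical values).
activity = [0.9, 0.1, 0.0]  # e.g. "playing guitar"
objects = {
    "guitar": [1.0, 0.0, 0.0],
    "microphone": [0.7, 0.3, 0.1],
    "horse": [0.0, 0.1, 1.0],
}
print(top_objects(activity, objects, k=2))  # ['guitar', 'microphone']
```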

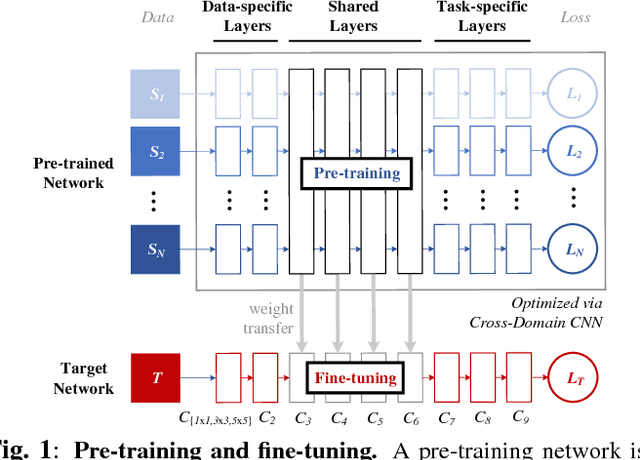

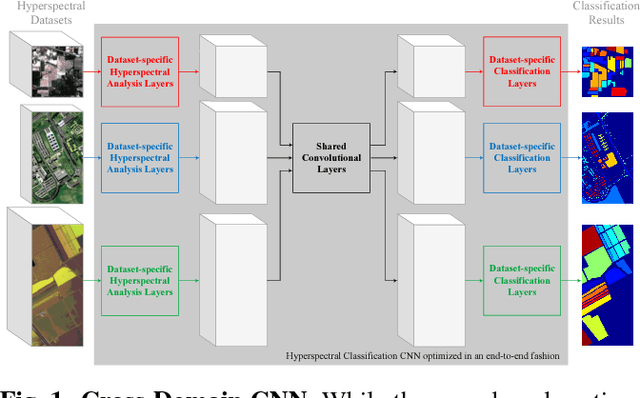

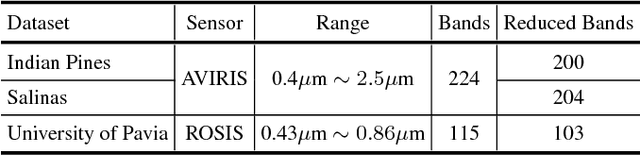

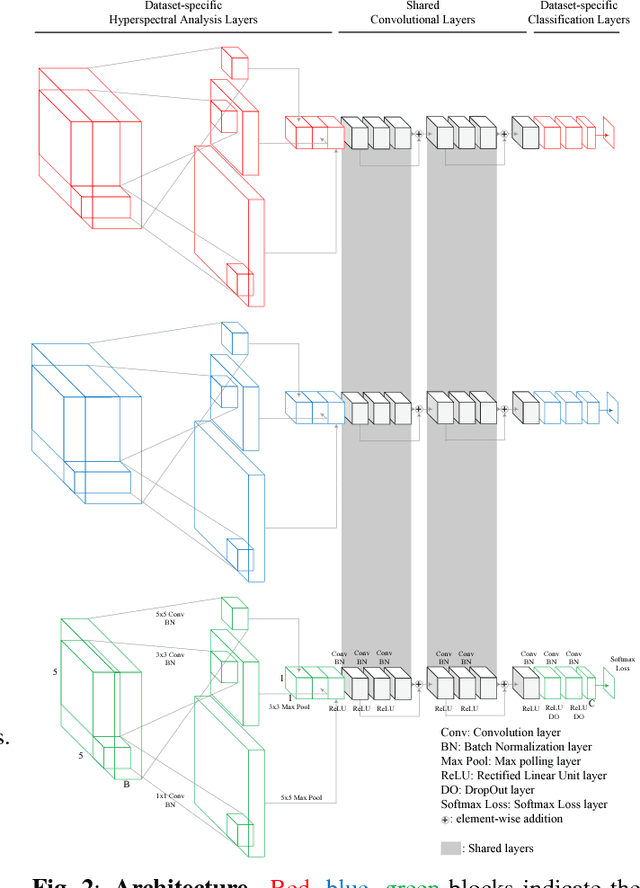

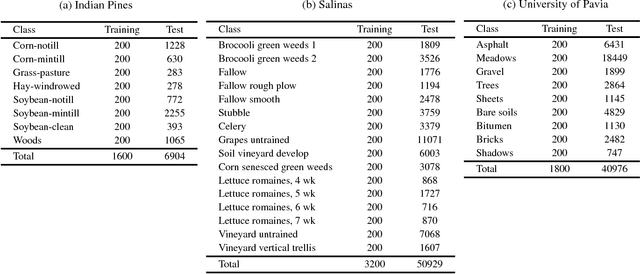

Cross-domain CNN for Hyperspectral Image Classification

May 02, 2018

Abstract: In this paper, we address the dataset scarcity issue in hyperspectral image classification. As only a few thousand pixels are available for training, it is difficult to effectively learn high-capacity convolutional neural networks (CNNs). To cope with this problem, we propose a novel cross-domain CNN containing shared parameters that can co-learn across multiple hyperspectral datasets. The network also contains non-shared portions designed to handle the dataset-specific spectral characteristics and the associated classification tasks. Our approach is the first attempt to learn a CNN for multiple hyperspectral datasets in an end-to-end fashion. Moreover, we have experimentally shown that the proposed network trained on three widely used datasets outperforms all the baseline networks trained on a single dataset.

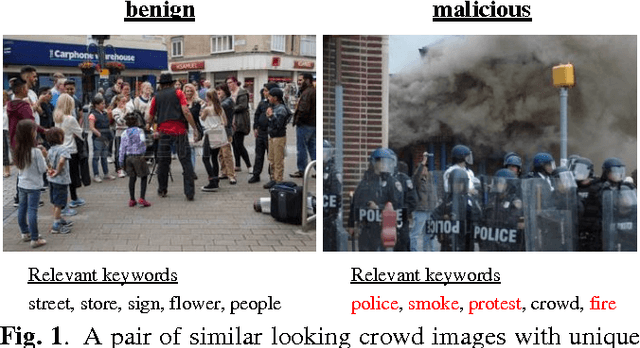

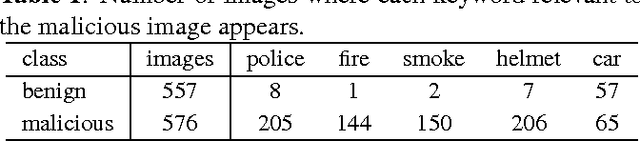

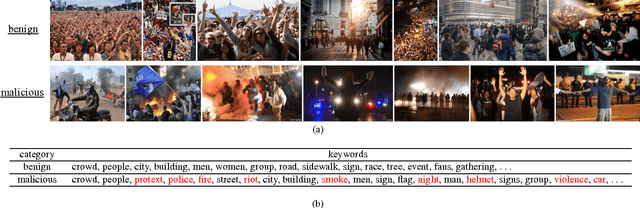

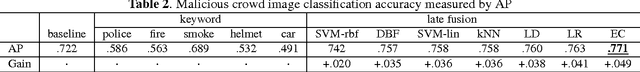

Exploitation of Semantic Keywords for Malicious Event Classification

Dec 01, 2017

Abstract: Learning an event classifier is challenging when the scenes are semantically different but visually similar. However, as humans, we typically handle such tasks painlessly by drawing on our background semantic knowledge. Motivated by this observation, we provide an empirical study of how additional information such as semantic keywords can improve discrimination between such events. To demonstrate the validity of this study, we first construct a novel Malicious Crowd Dataset containing crowd images of two events, benign and malicious, which look visually similar. Note that the primary focus of this paper is not to provide state-of-the-art performance on this dataset but to show the beneficial aspects of using semantically driven keyword information. By leveraging crowd-sourcing platforms, such as Amazon Mechanical Turk, we collect semantic keywords associated with images and then identify a subset of keywords (e.g., police, fire) unique to specific events. We first show, using recently introduced attention models, that a naive CNN-based event classifier actually learns to focus primarily on local attributes associated with the discriminant semantic keywords identified by the Mechanical Turk workers. We further show that incorporating the keyword-driven information into early- and late-fusion approaches can significantly enhance malicious event classification.

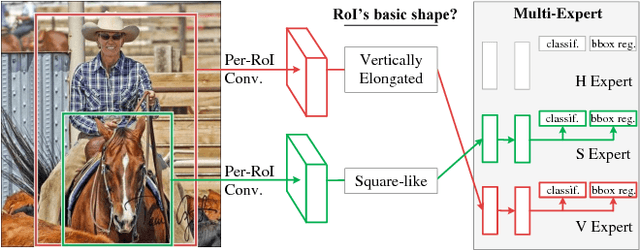

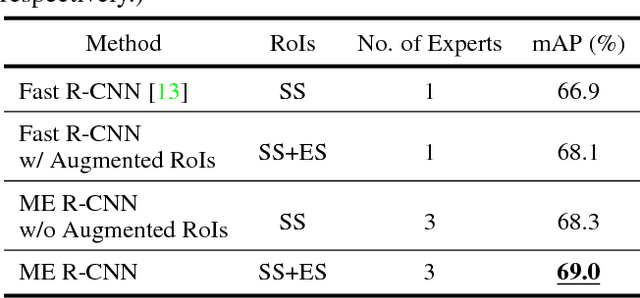

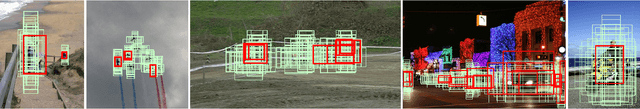

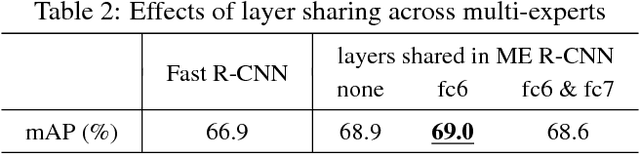

ME R-CNN: Multi-Expert R-CNN for Object Detection

Dec 01, 2017

Abstract: We introduce Multi-Expert Region-based CNN (ME R-CNN), which is equipped with multiple experts and built on top of the R-CNN framework, known to be one of the state-of-the-art object detection methods. ME R-CNN focuses on better capturing the appearance variations caused by different shapes, poses, and viewing angles. The proposed approach consists of three experts, each responsible for objects with particular shapes: horizontally elongated, square-like, and vertically elongated. In addition to using selective search, which provides a compact yet effective set of regions of interest (RoIs) for object detection, we augment the set by also employing exhaustive search for training only. Incorporating the exhaustive search provides complementary advantages: i) it captures the multitude of neighboring RoIs missed by the selective search, and thus ii) it provides a significantly larger number of training examples. We show that the ME R-CNN architecture provides a considerable performance increase over the baselines on the PASCAL VOC 07, VOC 12, and MS COCO datasets.
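The three shape categories can be illustrated with a simple rule that routes each RoI to an expert according to its aspect ratio. This is a hand-written sketch: the abstract does not say how RoIs are assigned to experts, so the thresholding rule, the `ratio_threshold` value of 1.5, and the function name `assign_expert` are all hypothetical.

```python
def assign_expert(width, height, ratio_threshold=1.5):
    """Pick a shape category for an RoI from its width/height ratio.

    ratio_threshold is a hypothetical cut-off: boxes at least this many
    times wider than tall go to the horizontal expert, and the reverse
    for the vertical expert.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    aspect = width / height
    if aspect >= ratio_threshold:
        return "horizontal"
    if aspect <= 1 / ratio_threshold:
        return "vertical"
    return "square"


for box in [(200, 50), (50, 200), (100, 110)]:
    print(box, assign_expert(*box))
```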
