Hao Xia

STEP: Warm-Started Visuomotor Policies with Spatiotemporal Consistency Prediction

Feb 09, 2026

Abstract: Diffusion policies have recently emerged as a powerful paradigm for visuomotor control in robotic manipulation due to their ability to model the distribution of action sequences and capture multimodality. However, iterative denoising leads to substantial inference latency, limiting control frequency in real-time closed-loop systems. Existing acceleration methods either reduce sampling steps, bypass diffusion through direct prediction, or reuse past actions, but often struggle to jointly preserve action quality and achieve consistently low latency. In this work, we propose STEP, a lightweight spatiotemporal consistency prediction mechanism that constructs high-quality warm-start actions that are both distributionally close to the target action and temporally consistent, without compromising the generative capability of the original diffusion policy. We then propose a velocity-aware perturbation injection mechanism that adaptively modulates actuation excitation based on temporal action variation to prevent execution stalls, especially in real-world tasks. We further provide a theoretical analysis showing that the proposed prediction induces a locally contractive mapping, ensuring convergence of action errors during diffusion refinement. We conduct extensive evaluations on nine simulated benchmarks and two real-world tasks. Notably, STEP with 2 steps achieves an average success rate 21.6% and 27.5% higher than BRIDGER and DDIM on the RoboMimic benchmark and real-world tasks, respectively. These results demonstrate that STEP consistently advances the Pareto frontier of inference latency and success rate over existing methods.

Unmixing based PAN guided fusion network for hyperspectral imagery

Jan 27, 2022

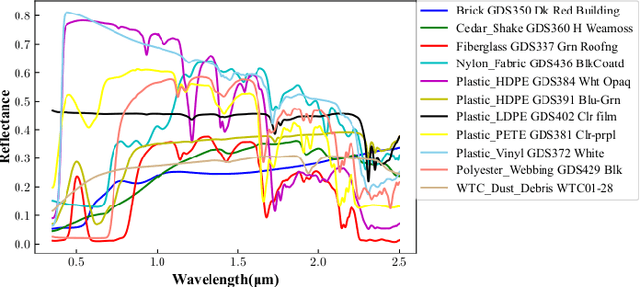

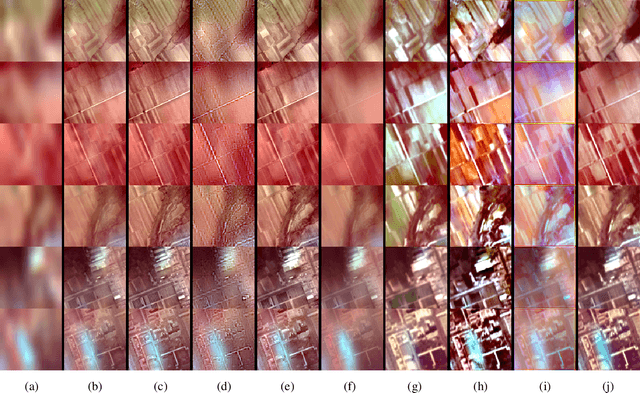

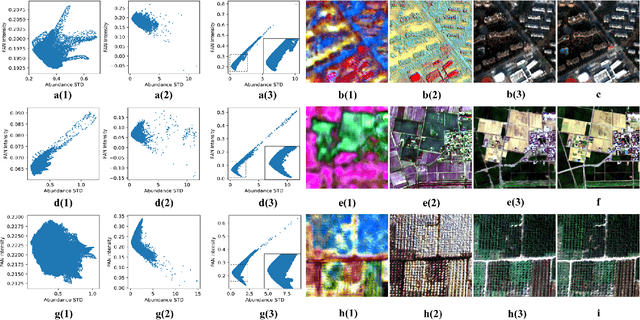

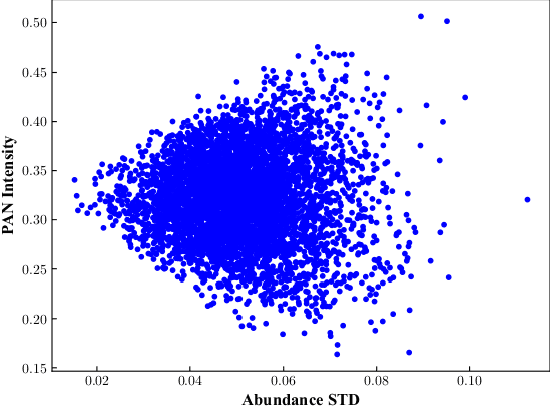

Abstract: The hyperspectral image (HSI) has been widely used in many applications due to its rich spectral information. However, the limitations of imaging sensors reduce its spatial resolution, which causes detail loss. One solution is to fuse the low spatial resolution hyperspectral image (LR-HSI) with the panchromatic image (PAN), which has complementary features, to obtain the high-resolution hyperspectral image (HR-HSI). Most existing fusion methods focus only on small fusion ratios such as 4 or 6, which may be impractical for HSI and PAN image pairs with large ratios. Moreover, the ill-posedness of restoring detail information in an HSI with hundreds of bands from a PAN image with only one band has not been solved effectively, especially under large fusion ratios. Therefore, a lightweight unmixing-based pan-guided fusion network (Pgnet) is proposed to mitigate this ill-posedness and improve the fusion performance significantly. Note that the fusion process of the proposed network operates in the projected low-dimensional abundance subspace with an extremely large fusion ratio of 16. Furthermore, based on the linear and nonlinear relationships between the PAN intensity and abundance, an interpretable PAN detail inject network (PDIN) is designed to inject the PAN details into the abundance feature efficiently. Comprehensive experiments on simulated and real datasets demonstrate the superiority and generality of our method over several state-of-the-art (SOTA) methods, both qualitatively and quantitatively. (The code, in PyTorch and Paddle versions, and the dataset are available at https://github.com/rs-lsl/Pgnet.) (This is an improved version of the publication in TGRS, with a modification to the derivation of the PDIN block.)
