Guang Cheng

Purdue

Unlabeled Data Help: Minimax Analysis and Adversarial Robustness

Feb 14, 2022

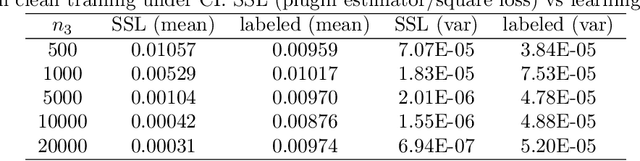

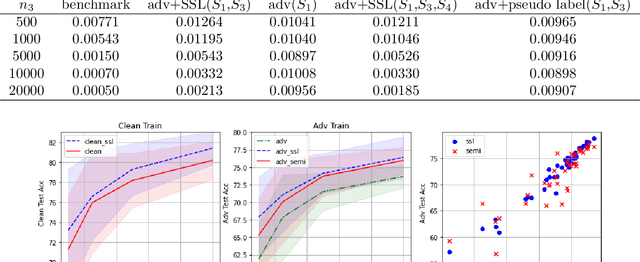

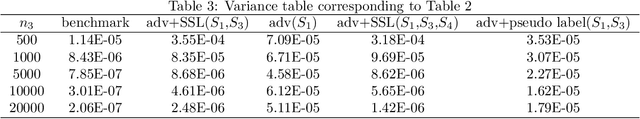

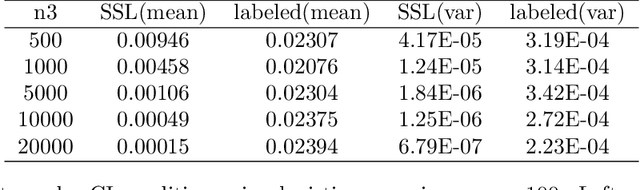

Abstract: Recently proposed self-supervised learning (SSL) approaches have demonstrated the great potential of supplementing learning algorithms with additional unlabeled data. However, it is still unclear whether existing SSL algorithms can fully utilize the information in both labeled and unlabeled data. This paper gives an affirmative answer for the reconstruction-based SSL algorithm \citep{lee2020predicting} under several statistical models. While the existing literature focuses only on establishing upper bounds on the convergence rate, we provide a rigorous minimax analysis and justify the rate-optimality of the reconstruction-based SSL algorithm under different data generation models. Furthermore, we incorporate reconstruction-based SSL into existing adversarial training algorithms and show that learning from unlabeled data helps improve robustness.

High-Dimensional Inference over Networks: Linear Convergence and Statistical Guarantees

Jan 21, 2022

Abstract: We study sparse linear regression over a network of agents, modeled as an undirected graph with no server node. The estimation of the $s$-sparse parameter is formulated as a constrained LASSO problem wherein each agent owns a subset of the $N$ total observations. We analyze the convergence rate and statistical guarantees of a distributed projected gradient tracking-based algorithm under high-dimensional scaling, allowing the ambient dimension $d$ to grow with (and possibly exceed) the sample size $N$. Our theory shows that, under standard notions of restricted strong convexity and smoothness of the loss functions, and suitable conditions on the network connectivity and algorithm tuning, the distributed algorithm converges globally at a {\it linear} rate to an estimate that is within the centralized {\it statistical precision} of the model, $O(s\log d/N)$. When $s\log d/N=o(1)$, a condition necessary for statistical consistency, an $\varepsilon$-optimal solution is attained after $O(\kappa \log (1/\varepsilon))$ gradient computations and $O(\kappa/(1-\rho) \log (1/\varepsilon))$ communication rounds, where $\kappa$ is the restricted condition number of the loss function and $\rho$ measures the network connectivity. The computation cost matches that of the centralized projected gradient algorithm despite the data being distributed, whereas the number of communication rounds decreases as the network connectivity improves. Overall, our study reveals interesting connections between statistical efficiency, network connectivity \& topology, and convergence rate in high dimensions.

Online Bootstrap Inference For Policy Evaluation in Reinforcement Learning

Aug 08, 2021

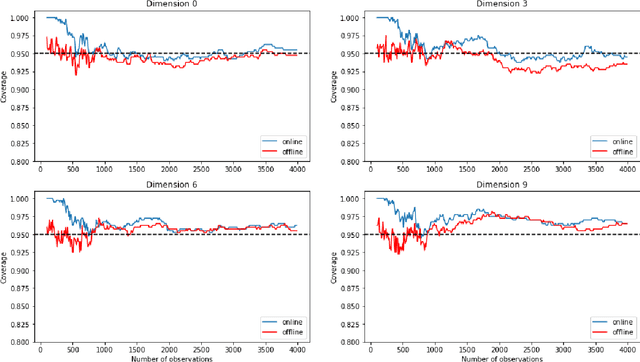

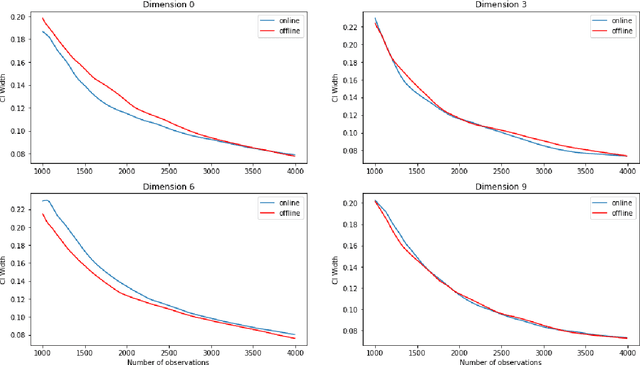

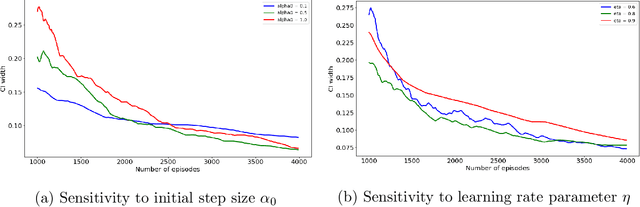

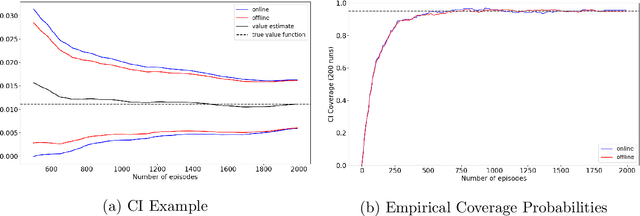

Abstract: The recent emergence of reinforcement learning has created a demand for robust statistical inference methods for the parameter estimates computed using these algorithms. Existing methods for statistical inference in online learning are restricted to settings involving independently sampled observations, while existing statistical inference methods in reinforcement learning (RL) are limited to the batch setting. The online bootstrap is a flexible and efficient approach for statistical inference in linear stochastic approximation algorithms, but its efficacy in settings involving Markov noise, such as RL, has yet to be explored. In this paper, we study the use of the online bootstrap method for statistical inference in RL. In particular, we focus on the temporal difference (TD) learning and Gradient TD (GTD) learning algorithms, which are themselves special instances of linear stochastic approximation under Markov noise. The method is shown to be distributionally consistent for statistical inference in policy evaluation, and numerical experiments are included to demonstrate the effectiveness of this algorithm at statistical inference tasks across a range of real RL environments.
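To make the idea in the abstract above concrete, here is a minimal sketch of an online multiplier bootstrap wrapped around tabular TD(0). Everything specific is an assumption for illustration, not taken from the paper: the two-state Markov reward process, the step-size schedule, the exponential multiplier weights (mean 1, variance 1), and the use of last iterates rather than averaged ones. The function name `td_online_bootstrap` is hypothetical.

```python
import random


def td_online_bootstrap(steps=5000, replicates=50, gamma=0.5, seed=0):
    """Tabular TD(0) plus perturbed copies for bootstrap inference.

    Assumed toy environment: two states, the next state is uniform on {0, 1},
    and the reward equals the index of the current state.
    """
    rng = random.Random(seed)
    theta = [0.0, 0.0]                                  # main TD(0) iterate
    boot = [[0.0, 0.0] for _ in range(replicates)]      # perturbed iterates
    state = 0
    for t in range(1, steps + 1):
        next_state = rng.randrange(2)
        reward = float(state)
        eta = 0.5 * (t + 1) ** -0.7                     # assumed decaying step size

        # Standard TD(0) update on the main iterate.
        delta = reward + gamma * theta[next_state] - theta[state]
        theta[state] += eta * delta

        # Each replicate sees the same transition but scales its update
        # by an independent random weight with mean 1 and variance 1.
        for rep in boot:
            weight = rng.expovariate(1.0)
            delta_b = reward + gamma * rep[next_state] - rep[state]
            rep[state] += eta * weight * delta_b

        state = next_state
    return theta, boot


theta, boot = td_online_bootstrap()

# Percentile interval for the value of state 1 from the bootstrap replicates.
values = sorted(rep[1] for rep in boot)
lower = values[int(0.025 * len(values))]
upper = values[int(0.975 * len(values))]
```

In this toy chain the true values are 0.5 and 1.5 for states 0 and 1, so `theta[1]` should land near 1.5 and the spread of `values` gives a rough sense of its sampling variability. Because the replicates are updated in the same pass as the main iterate, no stored trajectory is needed.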

Optimum-statistical collaboration towards efficient black-box optimization

Jun 17, 2021

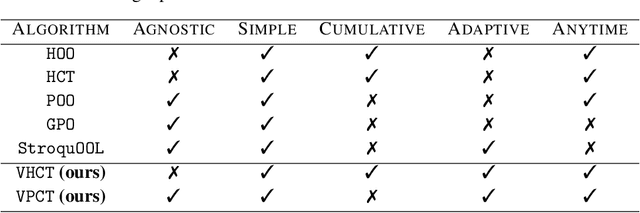

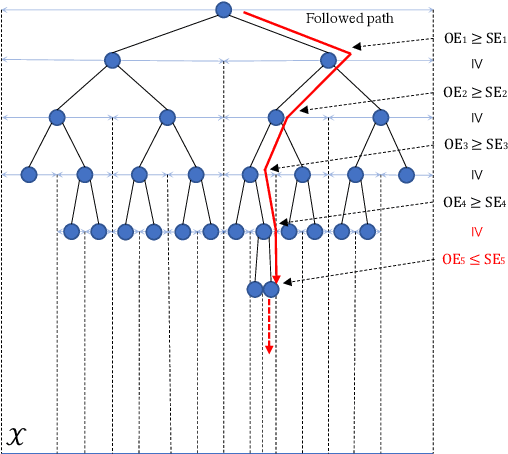

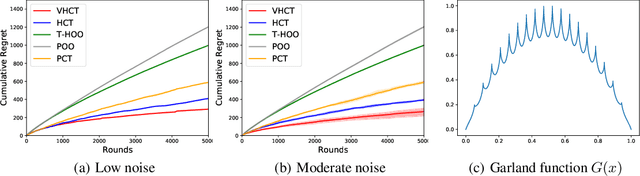

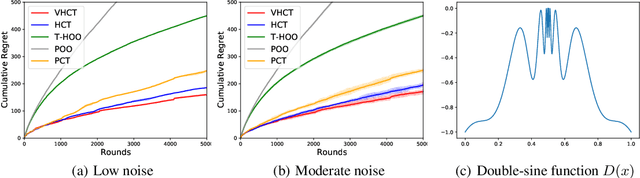

Abstract: With increasingly more hyperparameters involved in their training, machine learning systems demand a better understanding of hyperparameter tuning automation. This has raised interest in studies of provable black-box optimization, which is made more practical by better exploration mechanisms implemented in algorithm design, managing the flux of both optimization and statistical errors. Prior efforts focus on delineating optimization errors, but this is deficient: black-box optimization algorithms can be inefficient without considering heterogeneity among reward samples. In this paper, we delineate the role of statistical uncertainty in black-box optimization, guiding a more efficient algorithm design. We introduce \textit{optimum-statistical collaboration}, a framework for managing the interaction between the optimization error flux and the statistical error flux evolving in the optimization process. Inspired by this framework, we propose the \texttt{VHCT} algorithms for objective functions with only local-smoothness assumptions. In theory, we prove that our algorithm enjoys rate-optimal regret bounds; in experiments, we show that the algorithm outperforms prior efforts across a wide range of settings.

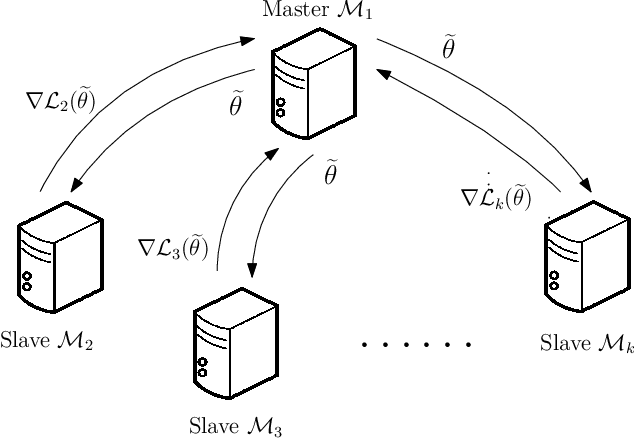

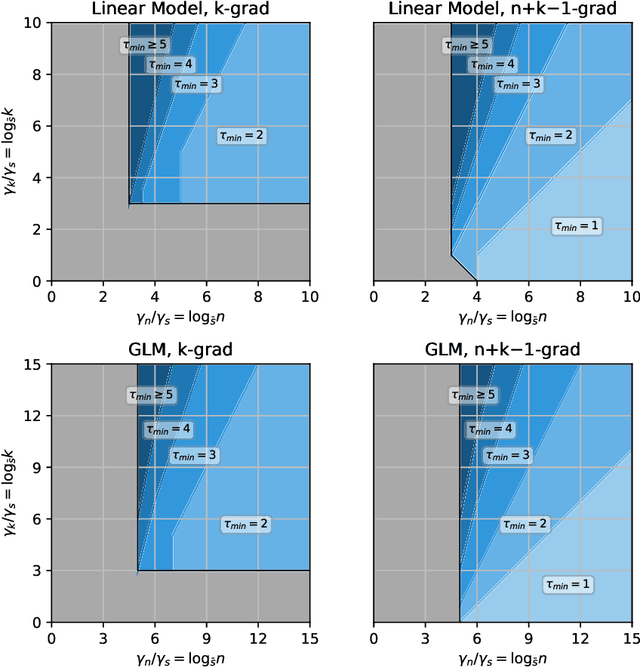

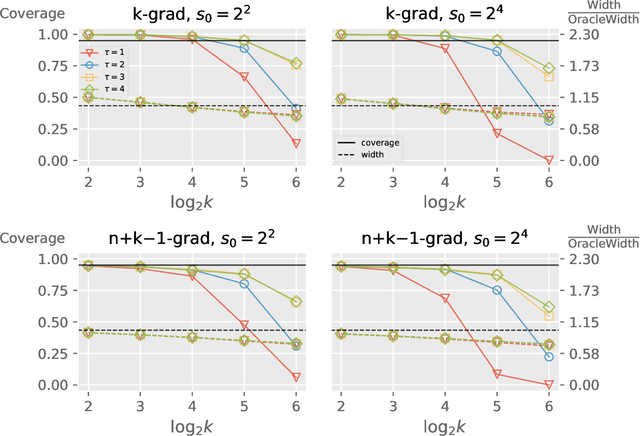

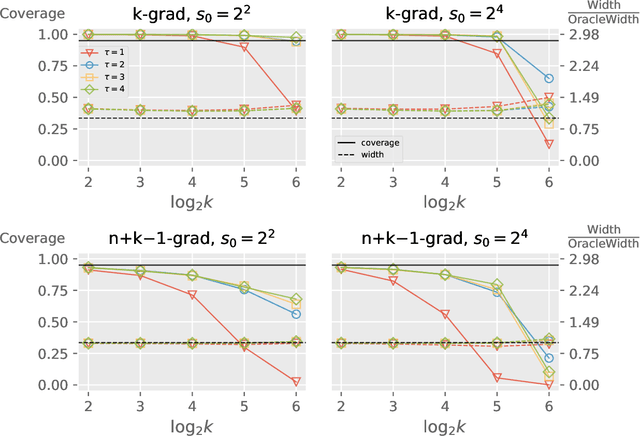

Distributed Bootstrap for Simultaneous Inference Under High Dimensionality

Feb 19, 2021

Abstract: We propose a distributed bootstrap method for simultaneous inference on high-dimensional massive data that are stored and processed with many machines. The method produces an $\ell_\infty$-norm confidence region based on a communication-efficient de-biased lasso, and we propose an efficient cross-validation approach to tune the method at every iteration. We theoretically prove a lower bound on the number of communication rounds $\tau_{\min}$ that warrants the statistical accuracy and efficiency. Furthermore, $\tau_{\min}$ only increases logarithmically with the number of workers and the intrinsic dimensionality, while being nearly invariant to the nominal dimensionality. We test our theory with extensive simulation studies and a variable screening task on a semi-synthetic dataset based on the US Airline On-time Performance dataset. The code to reproduce the numerical results is available at GitHub: https://github.com/skchao74/Distributed-bootstrap.

Variance Reduction on Adaptive Stochastic Mirror Descent

Dec 26, 2020

Abstract: We study the idea of variance reduction applied to adaptive stochastic mirror descent algorithms in nonsmooth nonconvex finite-sum optimization problems. We propose a simple yet generalized adaptive mirror descent algorithm with variance reduction named SVRAMD and provide its convergence analysis in different settings. We prove that variance reduction reduces the gradient complexity of most adaptive mirror descent algorithms and boosts their convergence. In particular, our general theory implies that variance reduction can be applied to algorithms using time-varying step sizes and to self-adaptive algorithms such as AdaGrad and RMSProp. Moreover, our convergence rates recover the best existing rates of non-adaptive algorithms. We check the validity of our claims using experiments in deep learning.
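The variance-reduction idea mentioned in the abstract above can be illustrated with plain SVRG on a tiny finite-sum problem. This is not SVRAMD: it is a Euclidean, fixed-step sketch on a smooth one-dimensional least-squares objective, chosen only to show the control-variate gradient estimator. The data, the step size, and the function name `svrg_least_squares` are assumptions made for the example.

```python
import random


def svrg_least_squares(a, b, step=0.02, epochs=30, inner_steps=8, seed=0):
    """Plain SVRG for f(w) = (1/n) * sum_i 0.5 * (a[i] * w - b[i]) ** 2."""
    rng = random.Random(seed)
    n = len(a)

    def grad_i(w, i):
        # Gradient of the i-th component function at w.
        return a[i] * (a[i] * w - b[i])

    w = 0.0
    for _ in range(epochs):
        # Take a snapshot and compute the full gradient there once per epoch.
        snapshot = w
        full_grad = sum(grad_i(snapshot, i) for i in range(n)) / n
        for _ in range(inner_steps):
            i = rng.randrange(n)
            # Variance-reduced estimator: unbiased for the full gradient at w,
            # with variance that shrinks as w and the snapshot approach the optimum.
            g = grad_i(w, i) - grad_i(snapshot, i) + full_grad
            w -= step * g
    return w


# Toy data with exact solution w = 3 (every residual vanishes there).
a = [1.0, 2.0, 3.0, 4.0]
b = [3.0 * ai for ai in a]
w_hat = svrg_least_squares(a, b)
```

The correction term `grad_i(snapshot, i) - full_grad` has zero mean over `i`, so subtracting it leaves the estimator unbiased while cancelling most of the sampling noise. An adaptive or mirror-descent variant would replace the final update line with its own step rule.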

Union-net: A deep neural network model adapted to small data sets

Dec 24, 2020

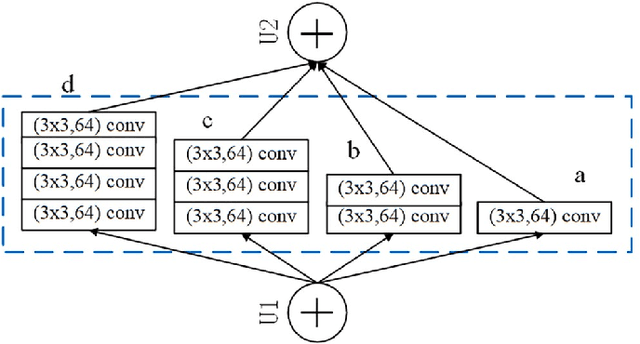

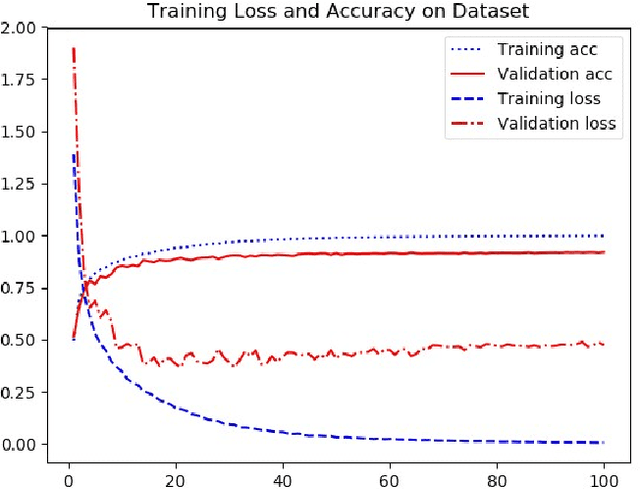

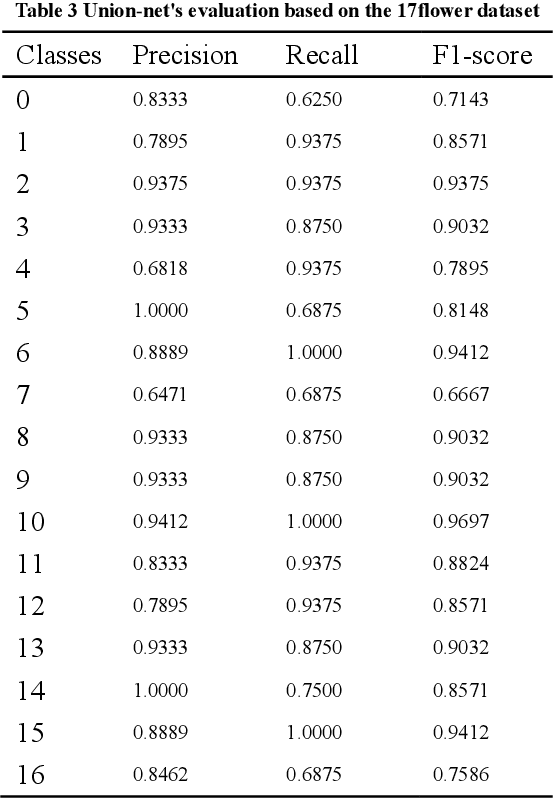

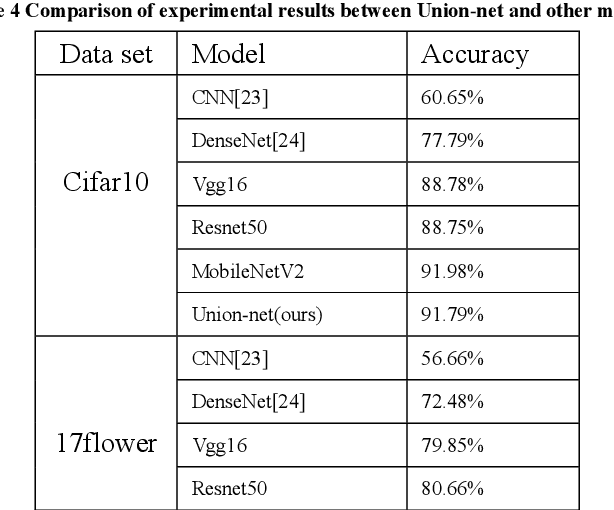

Abstract: In real applications, often only small data sets can be obtained. At present, most practical applications of machine learning use classic models designed for big data to solve small-data problems. However, deep neural network models have complex structures and a huge number of parameters, and training them requires advanced equipment, which makes them difficult to apply. Therefore, this paper proposes the concept of union convolution and designs union-net, a light deep network model with a shallow structure that is adapted to small data sets. The model combines convolutional network units with different combinations of the same input to form a union module. Each union module is equivalent to a convolutional layer. The serial input and output between the 3 modules constitute a "3-layer" neural network. The outputs of the union modules are fused and added as the input of the last convolutional layer, forming a complex network with a 4-layer structure. This addresses the loss of low-level information that occurs when a deep network is too deep and its transmission path is too long. Because the model has fewer parameters and fewer channels, it adapts better to small data sets, which mitigates the tendency of deep network models to overfit when trained on small data sets. We use the public data sets CIFAR-10 and 17flowers to conduct multi-class classification experiments. The experiments show that the Union-net model performs well in classification on both large and small data sets. It has high practical value in everyday application scenarios. The model code is published at https://github.com/yeaso/union-net

Adversarially Robust Estimate and Risk Analysis in Linear Regression

Dec 18, 2020

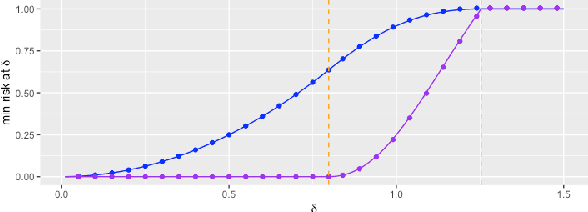

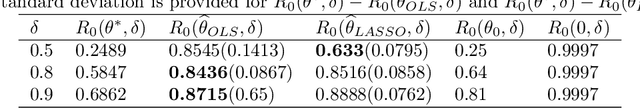

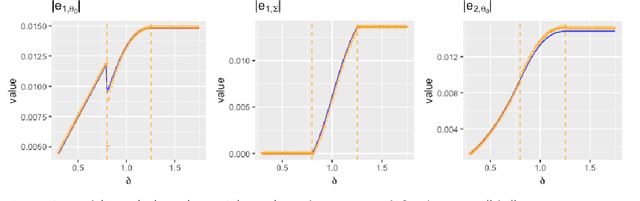

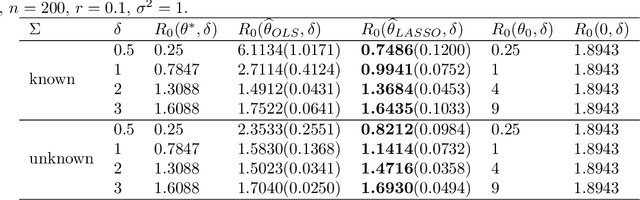

Abstract: Adversarially robust learning aims to design algorithms that are robust to small adversarial perturbations on input variables. Beyond the existing studies on predictive performance on adversarial samples, our goal is to understand the statistical properties of adversarially robust estimates and to analyze adversarial risk in the setup of linear regression models. By discovering the statistical minimax rate of convergence of adversarially robust estimators, we emphasize the importance of incorporating model information, e.g., sparsity, in adversarially robust learning. Further, we reveal an explicit connection between adversarial and standard estimates, and propose a straightforward two-stage adversarial learning framework, which facilitates the use of model structure information to improve adversarial robustness. In theory, the consistency of the adversarially robust estimator is proven, and its Bahadur representation is also developed for the purpose of statistical inference. The proposed estimator converges at a sharp rate in both the low-dimensional and the sparse scenarios. Moreover, our theory confirms two phenomena in adversarially robust learning: adversarial robustness hurts generalization, and unlabeled data help improve generalization. Finally, we conduct numerical simulations to verify our theory.

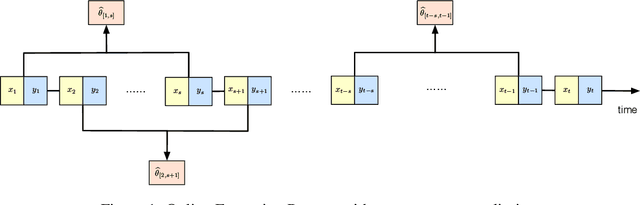

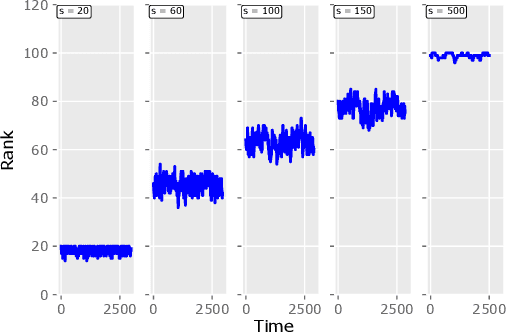

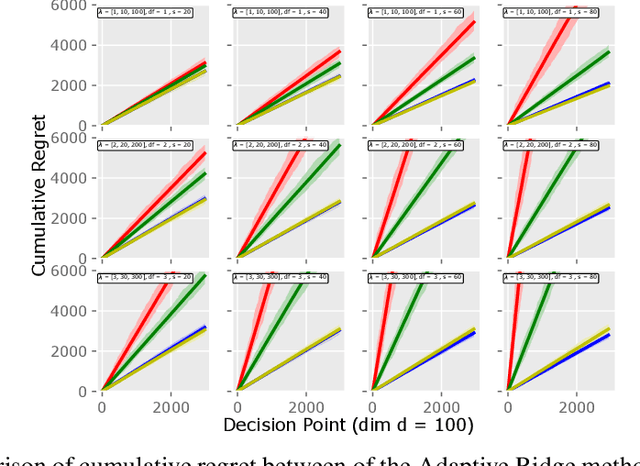

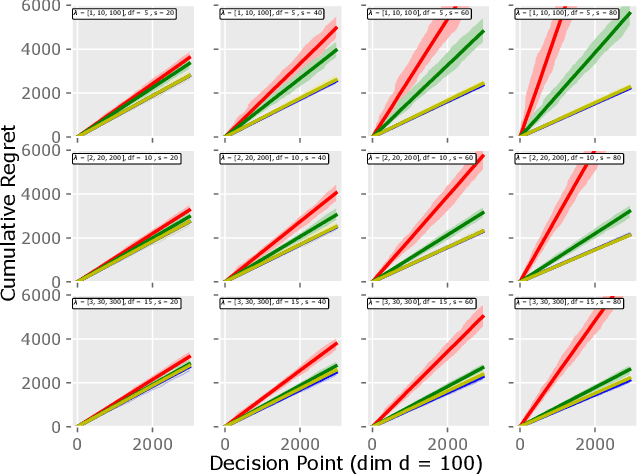

Online Forgetting Process for Linear Regression Models

Dec 03, 2020

Abstract: Motivated by the EU's "Right To Be Forgotten" regulation, we initiate a study of statistical data deletion problems where users' data are accessible only for a limited period of time. This setting is formulated as an online supervised learning task with a \textit{constant memory limit}. We propose a deletion-aware algorithm, \texttt{FIFD-OLS}, for the low-dimensional case, and observe a catastrophic rank swinging phenomenon due to the data deletion operation, which leads to statistical inefficiency. As a remedy, we propose the \texttt{FIFD-Adaptive Ridge} algorithm with a novel online regularization scheme that effectively offsets the uncertainty from deletion. In theory, we provide cumulative regret upper bounds for both online forgetting algorithms. In experiments, we show that \texttt{FIFD-Adaptive Ridge} outperforms the ridge regression algorithm with a fixed regularization level, which we hope sheds some light on more complex statistical models.
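As a rough illustration of the constant-memory setting described in the abstract above, the sketch below fits a scalar regression over a sliding window in which the oldest observation is deleted whenever a new one arrives (first in, first deleted). It is not the paper's `FIFD-OLS` or `FIFD-Adaptive Ridge`: the class name `SlidingWindowRidge`, the one-dimensional model, and the fixed penalty `lam` are all assumptions made for the example.

```python
from collections import deque


class SlidingWindowRidge:
    """Scalar ridge fit y ~ theta * x using only the latest `window` points."""

    def __init__(self, window, lam=0.0):
        self.window = window      # constant memory limit
        self.lam = lam            # fixed ridge penalty (assumed, not adaptive)
        self.buffer = deque()     # the only data retained
        self.sxx = 0.0            # running sum of x * x over the buffer
        self.sxy = 0.0            # running sum of x * y over the buffer

    def update(self, x, y):
        # Add the new observation to the sufficient statistics.
        self.buffer.append((x, y))
        self.sxx += x * x
        self.sxy += x * y
        # Delete the oldest observation once the memory limit is exceeded.
        if len(self.buffer) > self.window:
            old_x, old_y = self.buffer.popleft()
            self.sxx -= old_x * old_x
            self.sxy -= old_x * old_y

    def estimate(self):
        denom = self.sxx + self.lam
        if denom == 0.0:
            return 0.0            # no information yet
        return self.sxy / denom


model = SlidingWindowRidge(window=3, lam=0.0)
for x in [1.0, 2.0, 3.0, 4.0, 5.0]:
    model.update(x, 2.0 * x)      # noiseless data with slope 2
theta_hat = model.estimate()
```

With `lam=0.0` the estimate reduces to least squares on the retained window. When the retained inputs are all close to zero the denominator becomes tiny and the estimate unstable, and a positive `lam` guards against that kind of instability.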

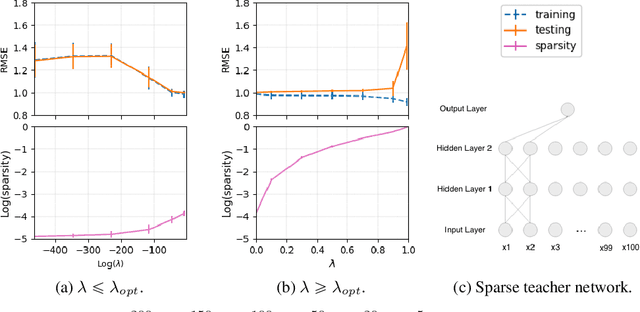

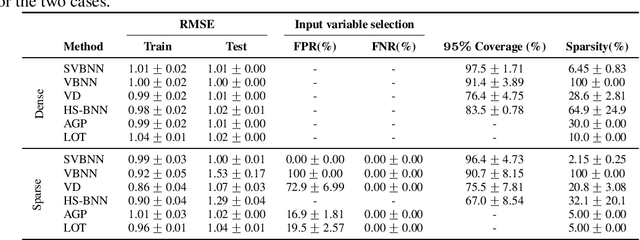

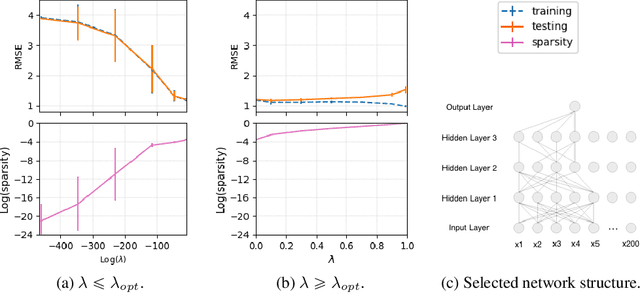

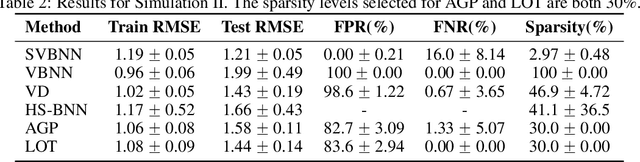

Efficient Variational Inference for Sparse Deep Learning with Theoretical Guarantee

Nov 15, 2020

Abstract: Sparse deep learning aims to address the challenge of huge storage consumption by deep neural networks, and to recover the sparse structure of target functions. Although tremendous empirical successes have been achieved, most sparse deep learning algorithms lack theoretical support. On the other hand, another line of work has proposed theoretical frameworks that are computationally infeasible. In this paper, we train sparse deep neural networks with a fully Bayesian treatment under spike-and-slab priors, and develop a set of computationally efficient variational inference procedures via continuous relaxation of the Bernoulli distribution. The variational posterior contraction rate is provided, which justifies the consistency of the proposed variational Bayes method. Notably, our empirical results demonstrate that this variational procedure provides uncertainty quantification in terms of the Bayesian predictive distribution and is also capable of accomplishing consistent variable selection by training a sparse multi-layer neural network.
