Giusy Spacone

Wearable and Ultra-Low-Power Fusion of EMG and A-Mode US for Hand-Wrist Kinematic Tracking

Oct 02, 2025

Abstract: Hand gesture recognition based on biosignals has shown strong potential for developing intuitive human-machine interaction strategies that closely mimic natural human behavior. In particular, sensor fusion approaches have gained attention for combining complementary information and overcoming the limitations of individual sensing modalities, thereby enabling more robust and reliable systems. Among them, the fusion of surface electromyography (EMG) and A-mode ultrasound (US) is particularly promising. However, prior solutions rely on power-hungry platforms unsuitable for multi-day use and are limited to discrete gesture classification. In this work, we present an ultra-low-power (sub-50 mW) system for concurrent acquisition of 8-channel EMG and 4-channel A-mode US signals, integrating two state-of-the-art platforms into fully wearable, dry-contact armbands. We propose a framework for continuous tracking of 23 degrees of freedom (DoFs), 20 for the hand and 3 for the wrist, using a kinematic glove for ground-truth labeling. Our method employs lightweight encoder-decoder architectures with multi-task learning to simultaneously estimate hand and wrist joint angles. Experimental results under realistic sensor repositioning conditions demonstrate that EMG-US fusion achieves a root mean squared error of $10.6^\circ\pm2.0^\circ$, compared to $12.0^\circ\pm1^\circ$ for EMG and $13.1^\circ\pm2.6^\circ$ for US, and an R$^2$ score of $0.61\pm0.1$, compared to $0.54\pm0.03$ for EMG and $0.38\pm0.20$ for US.
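For readers unfamiliar with the two metrics reported above, the following is a minimal sketch of how a root mean squared error (in degrees) and a per-DoF R$^2$ score could be computed from glove-measured and predicted joint angles. The function names `rmse_deg` and `r2_per_dof` are hypothetical, and the abstract does not state how errors are aggregated across DoFs, sessions, or subjects, so the pooling used here is an assumption.

```python
import math

def rmse_deg(y_true, y_pred):
    """RMSE pooled over all samples and DoFs (pooling is an assumption).

    y_true, y_pred: lists of rows, one row per time sample,
    one joint angle in degrees per DoF.
    """
    total, count = 0.0, 0
    for row_t, row_p in zip(y_true, y_pred):
        for t, p in zip(row_t, row_p):
            total += (t - p) ** 2
            count += 1
    return math.sqrt(total / count)

def r2_per_dof(y_true, y_pred):
    """Coefficient of determination computed separately for each DoF."""
    scores = []
    for j in range(len(y_true[0])):
        t = [row[j] for row in y_true]
        p = [row[j] for row in y_pred]
        mean_t = sum(t) / len(t)
        ss_res = sum((a - b) ** 2 for a, b in zip(t, p))   # residual sum of squares
        ss_tot = sum((a - mean_t) ** 2 for a in t)         # total sum of squares
        scores.append(1.0 - ss_res / ss_tot)
    return scores

# Toy data: 3 time samples, 2 DoFs, predictions offset by a constant 2 degrees.
truth = [[0.0, 10.0], [10.0, 20.0], [20.0, 30.0]]
pred = [[a + 2.0 for a in row] for row in truth]
error = rmse_deg(truth, pred)
scores = r2_per_dof(truth, pred)
```

A single R$^2$ value can then be obtained by averaging the per-DoF scores, which is one common convention but not necessarily the one used in the paper.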

A Parallel Ultra-Low Power Silent Speech Interface based on a Wearable, Fully-dry EMG Neckband

Sep 26, 2025

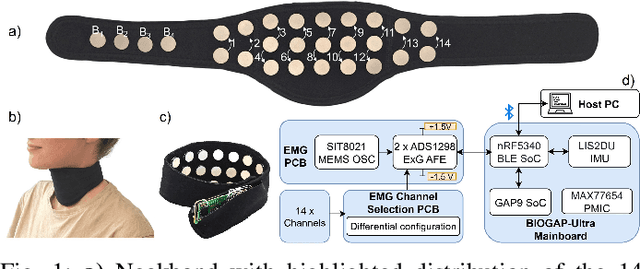

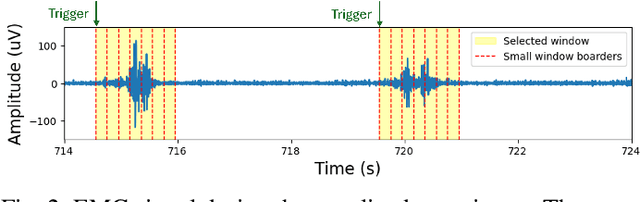

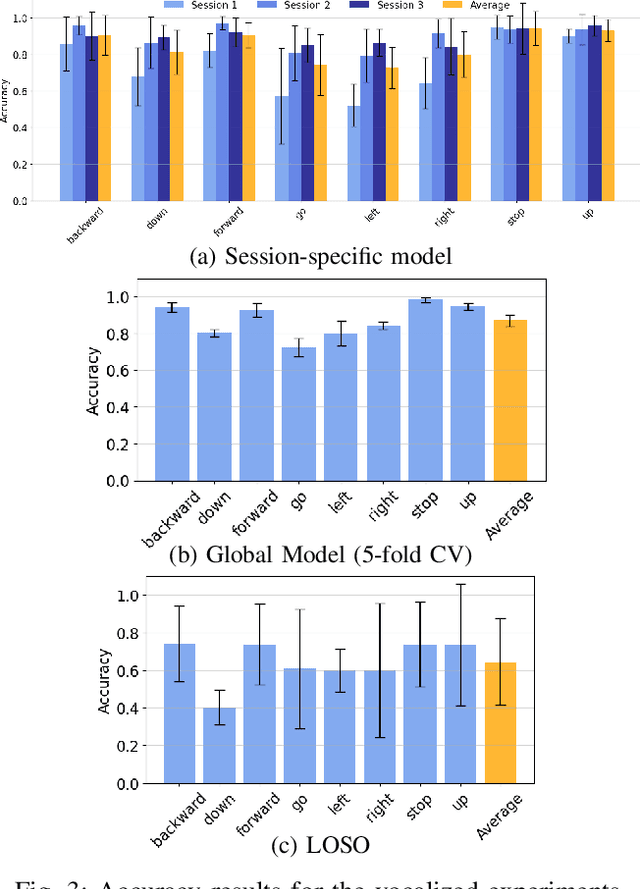

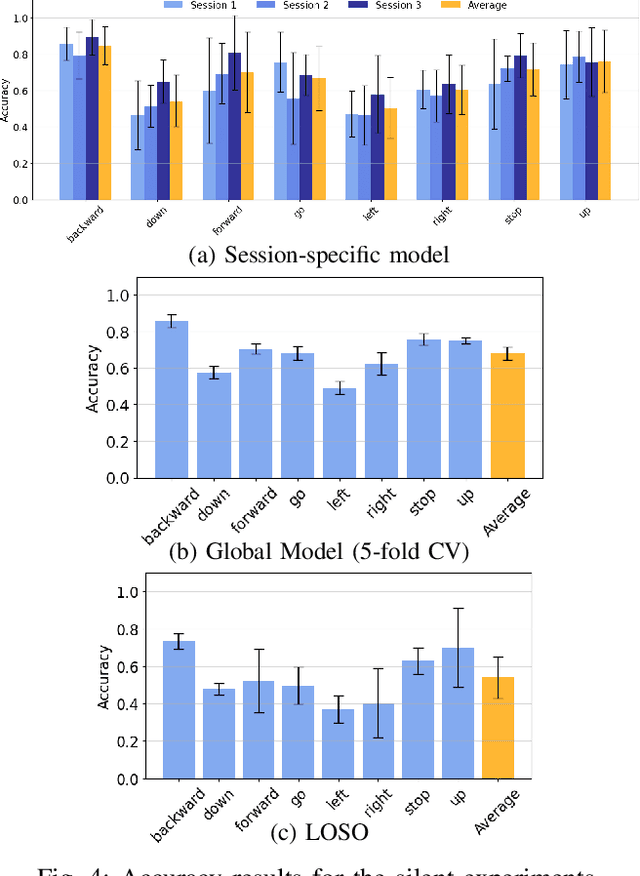

Abstract: We present a wearable, fully-dry, and ultra-low-power EMG system for silent speech recognition, integrated into a textile neckband to enable comfortable, non-intrusive use. The system features 14 fully-differential EMG channels and is based on the BioGAP-Ultra platform for ultra-low-power (22 mW) biosignal acquisition and wireless transmission. We evaluate its performance on eight speech commands under both vocalized and silent articulation, achieving average classification accuracies of 87$\pm$3% and 68$\pm$3%, respectively, with 5-fold cross-validation. To mimic everyday-life conditions, we introduce session-to-session variability by repositioning the neckband between sessions, achieving leave-one-session-out accuracies of 64$\pm$18% and 54$\pm$7% for the vocalized and silent experiments, respectively. These results highlight the robustness of the proposed approach and the promise of energy-efficient silent-speech decoding.
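The leave-one-session-out protocol mentioned above can be sketched as follows: each recording session is held out once for testing while the remaining sessions are used for training, so the classifier is always evaluated on data recorded after the neckband was repositioned. The function name `leave_one_session_out` and the toy session labels are hypothetical; the abstract does not specify the number of sessions or the classifier.

```python
def leave_one_session_out(session_ids):
    """Yield (held_out_session, train_indices, test_indices) per session.

    session_ids: one session label per recorded trial.
    """
    for held_out in sorted(set(session_ids)):
        train = [i for i, s in enumerate(session_ids) if s != held_out]
        test = [i for i, s in enumerate(session_ids) if s == held_out]
        yield held_out, train, test

# Toy example: five trials recorded over three sessions.
sessions = [0, 0, 1, 1, 2]
folds = list(leave_one_session_out(sessions))
```

Unlike 5-fold cross-validation over shuffled trials, no fold mixes training and test trials from the same electrode placement, which is consistent with the lower accuracies reported for this setting.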
