Gelareh Hajian

Dynamics of Affective States During Takeover Requests in Conditionally Automated Driving Among Older Adults with and without Cognitive Impairment

May 23, 2025

Abstract: Driving is a key component of independence and quality of life for older adults. However, cognitive decline associated with conditions such as mild cognitive impairment and dementia can compromise driving safety and often leads to premature driving cessation. Conditionally automated vehicles, which require drivers to take over control when automation reaches its operational limits, offer a potential assistive solution. Their effectiveness, however, depends on the driver's ability to respond to takeover requests (TORs) in a timely and appropriate manner. Understanding emotional responses during TORs can provide insight into drivers' engagement, stress levels, and readiness to resume control, particularly in cognitively vulnerable populations. This study investigated affective responses, measured via facial expression analysis of valence and arousal, during TORs among cognitively healthy older adults and those with cognitive impairment. Facial affect data were analyzed across different road geometries and speeds to evaluate within- and between-group differences in affective states. Within-group comparisons using the Wilcoxon signed-rank test revealed significant changes in valence and arousal during TORs for both groups. Cognitively healthy individuals showed adaptive increases in arousal under higher-demand conditions, while those with cognitive impairment exhibited reduced arousal and more positive valence in several scenarios. Between-group comparisons using the Mann-Whitney U test indicated that cognitively impaired individuals displayed lower arousal and higher valence than controls across different TOR conditions. These findings suggest reduced emotional response and awareness in cognitively impaired drivers, highlighting the need for adaptive vehicle systems that detect affective states and support safe handovers for vulnerable users.
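For readers unfamiliar with the between-group test named in the abstract, the following is a minimal sketch of how the Mann-Whitney U statistic is computed from two independent samples. The arousal scores are made up for illustration and are not data from the study, and the function name `mann_whitney_u` is hypothetical. The sketch returns only the U statistic; a library routine such as `scipy.stats.mannwhitneyu` would normally be used to obtain a p-value.

```python
def mann_whitney_u(group_a, group_b):
    """Return the U statistic for group_a.

    U counts the (a, b) pairs in which a exceeds b; tied pairs count as 0.5.
    """
    u = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


# Made-up arousal scores, for illustration only (not data from the study).
impaired = [0.21, 0.18, 0.25, 0.30]
healthy = [0.35, 0.28, 0.40, 0.33]

u_impaired = mann_whitney_u(impaired, healthy)
u_healthy = mann_whitney_u(healthy, impaired)

# The two U values always sum to len(group_a) * len(group_b).
print(u_impaired, u_healthy)
```

A small U for one group relative to the number of pairs indicates that its values tend to fall below those of the other group, which is the pattern the abstract describes for arousal in the cognitively impaired group.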

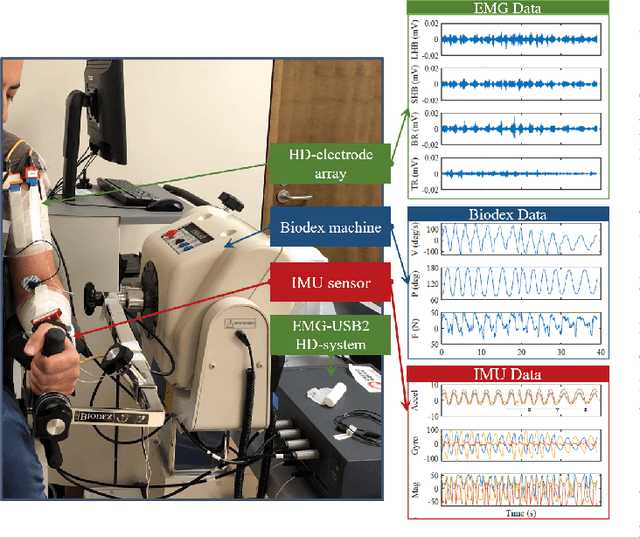

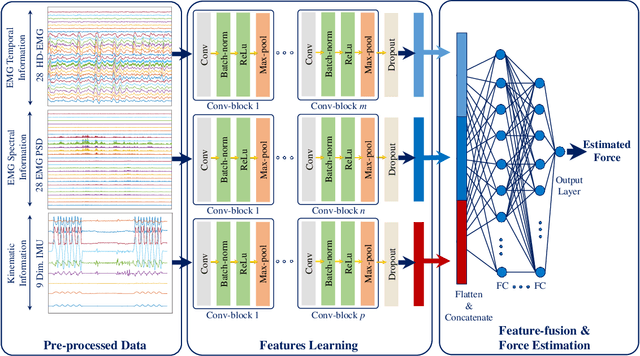

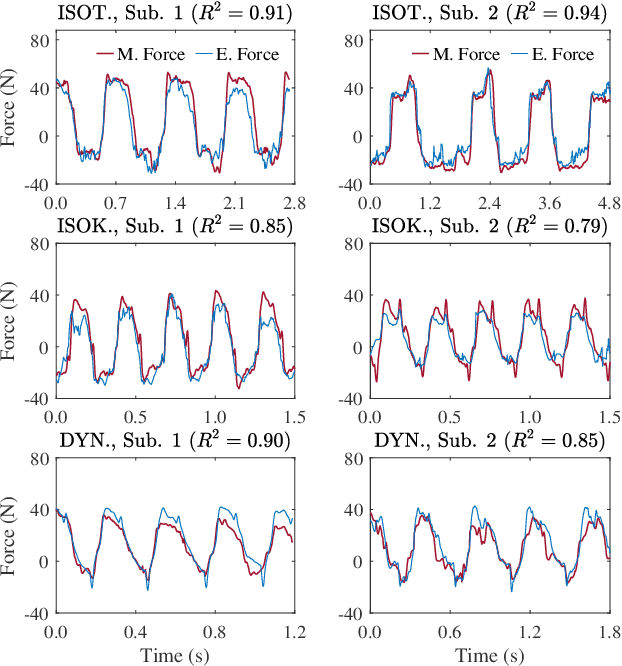

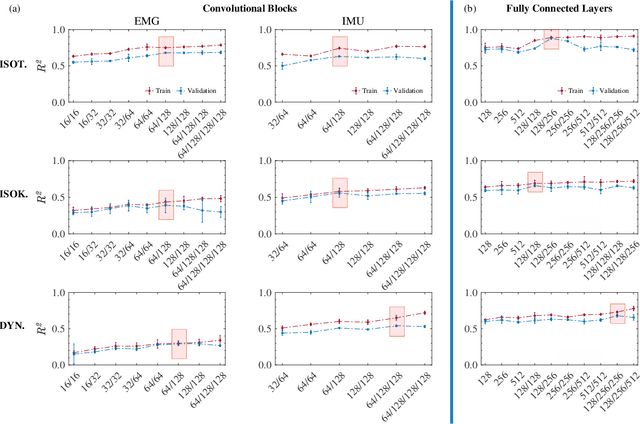

Multimodal Estimation of End Point Force During Quasi-dynamic and Dynamic Muscle Contractions Using Deep Learning

Jul 20, 2022

Abstract: Accurate force/torque estimation is essential for applications such as powered exoskeletons, robotics, and rehabilitation. However, force/torque estimation under dynamic conditions is challenging due to changing joint angles, force levels, muscle lengths, and movement speeds. We propose a novel method to accurately model the generated force under isotonic, isokinetic (quasi-dynamic), and fully dynamic conditions. Our solution uses a deep multimodal CNN to learn from multimodal EMG-IMU data and estimate the generated force for elbow flexion and extension, for both intra- and inter-subject schemes. The proposed deep multimodal CNN extracts representations from EMG (in time and frequency domains) and IMU (in time domain) and aggregates them to obtain an effective embedding for force estimation. We describe a new dataset containing EMG, IMU, and output force data, collected under a number of different experimental conditions, and use this dataset to evaluate our proposed method. The results show the robustness of our approach in comparison to other baseline methods as well as those in the literature, in different experimental setups and validation schemes. The obtained $R^2$ values are $0.91 \pm 0.034$, $0.87 \pm 0.041$, and $0.81 \pm 0.037$ for the intra-subject scheme and $0.81 \pm 0.048$, $0.64 \pm 0.037$, and $0.59 \pm 0.042$ for the inter-subject scheme, during isotonic, isokinetic, and dynamic contractions, respectively. Additionally, our results indicate that force estimation improves significantly when the kinematic information (IMU data) is included. Average improvements of 13.95%, 118.18%, and 50.0% (intra-subject) and 28.98%, 41.18%, and 137.93% (inter-subject) are achieved for isotonic, isokinetic, and dynamic contractions, respectively.
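As a reading aid for the reported metrics, here is a minimal sketch of the standard coefficient of determination and of a relative percentage improvement. The abstract does not state exactly how either figure was computed, so both formulas are assumptions. The function names and the sample values are hypothetical and are not taken from the paper's dataset.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def relative_improvement(baseline, improved):
    """Percentage change of `improved` relative to `baseline` (assumed definition)."""
    return 100.0 * (improved - baseline) / baseline


# Made-up force values, for illustration only.
measured_force = [1.0, 2.0, 3.0, 4.0]
estimated_force = [1.0, 2.0, 3.0, 5.0]

score = r_squared(measured_force, estimated_force)

# Hypothetical R^2 without and with kinematic input.
gain = relative_improvement(0.5, 0.75)

print(score, gain)
```

Under this definition, an improvement above 100% means the score with IMU data is more than double the score without it.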
