Gadi Pinkas

Source-Free Domain Adaptation for Speaker Verification in Data-Scarce Languages and Noisy Channels

Jun 09, 2024

Abstract: Domain adaptation is often hampered by exceedingly small target datasets and inaccessible source data. These conditions are prevalent in speech verification, where privacy policies and/or languages with scarce speech resources limit the availability of sufficient data. This paper explored techniques of source-free domain adaptation onto a limited target speech dataset for speaker verification in data-scarce languages. Both language and channel mismatch between source and target were investigated. Fine-tuning methods were evaluated and compared across different sizes of labeled target data. A novel iterative cluster-learn algorithm was studied for unlabeled target datasets.

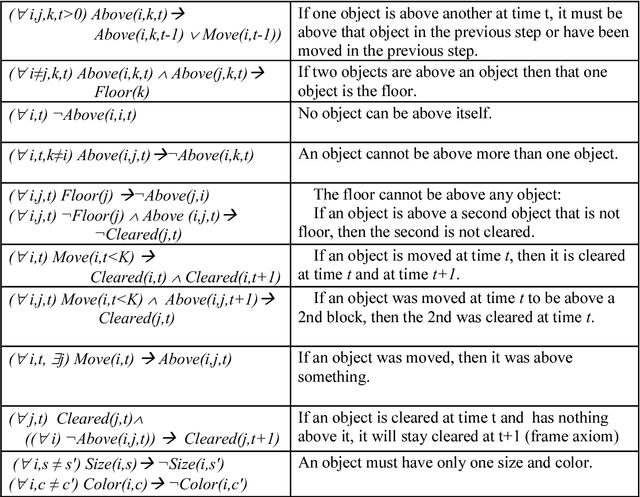

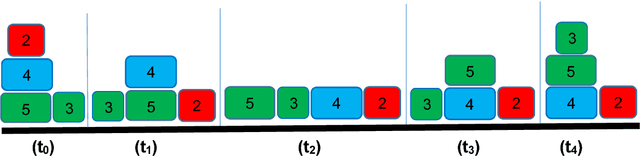

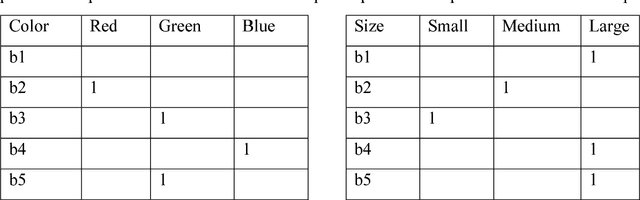

Artificial Neural Networks that Learn to Satisfy Logic Constraints

Dec 08, 2017

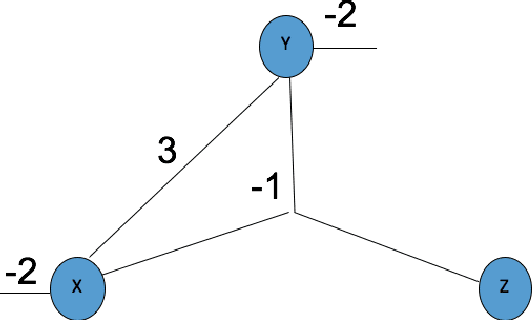

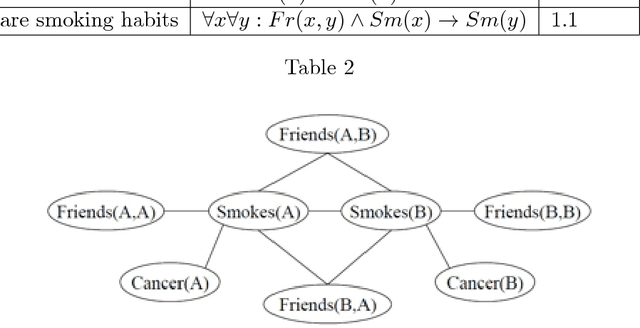

Abstract: Logic-based problems such as planning, theorem proving, or puzzles typically involve combinatoric search and structured knowledge representation. Artificial neural networks are very successful statistical learners; however, for many years they have been criticized for their weaknesses in representing and processing complex structured knowledge, which is crucial for combinatoric search and symbol manipulation. Two neural architectures are presented, which can encode structured relational knowledge in neural activation and store bounded First Order Logic constraints in connection weights. Both architectures learn to search for a solution that satisfies the constraints. Learning is done by unsupervised practicing on problem instances from the same domain, in a way that improves the network-solving speed. No teacher exists to provide answers for the problem instances of the training and test sets. However, the domain constraints are provided as prior knowledge to a loss function that measures the degree of constraint violations. Iterations of activation calculation and learning are executed until a solution that maximally satisfies the constraints emerges on the output units. As a test case, block-world planning problems are used to train networks that learn to plan in that domain, but the techniques proposed could be used more generally, as in integrating prior symbolic knowledge with statistical learning.

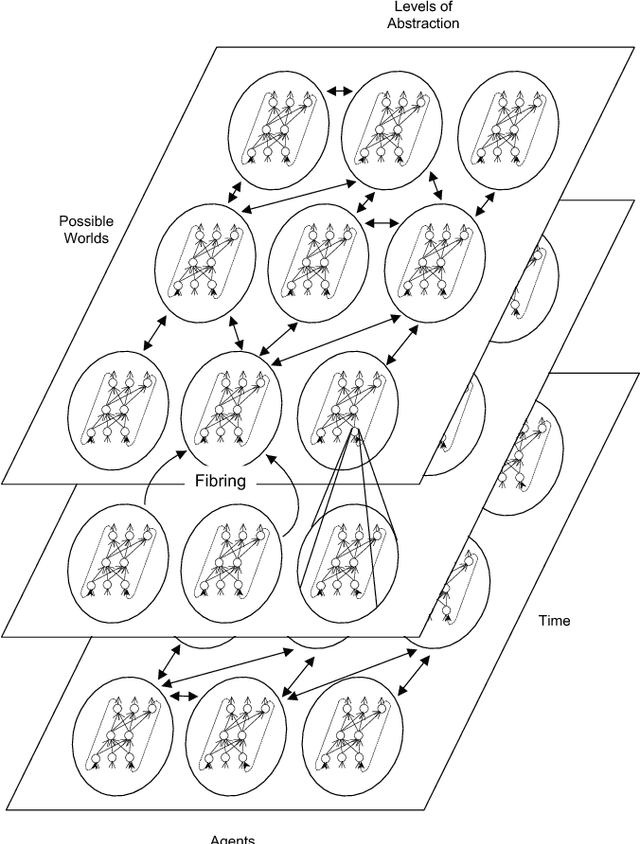

Neural-Symbolic Learning and Reasoning: A Survey and Interpretation

Nov 10, 2017

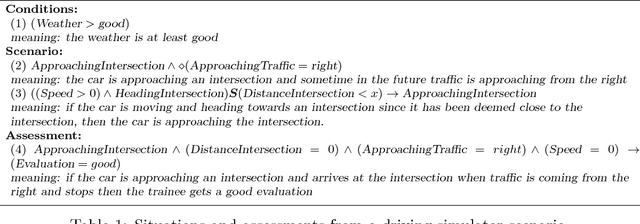

Abstract: The study and understanding of human behaviour is relevant to computer science, artificial intelligence, neural computation, cognitive science, philosophy, psychology, and several other areas. Presupposing cognition as basis of behaviour, among the most prominent tools in the modelling of behaviour are computational-logic systems, connectionist models of cognition, and models of uncertainty. Recent studies in cognitive science, artificial intelligence, and psychology have produced a number of cognitive models of reasoning, learning, and language that are underpinned by computation. In addition, efforts in computer science research have led to the development of cognitive computational systems integrating machine learning and automated reasoning. Such systems have shown promise in a range of applications, including computational biology, fault diagnosis, training and assessment in simulators, and software verification. This joint survey reviews the personal ideas and views of several researchers on neural-symbolic learning and reasoning. The article is organised in three parts: Firstly, we frame the scope and goals of neural-symbolic computation and have a look at the theoretical foundations. We then proceed to describe the realisations of neural-symbolic computation, systems, and applications. Finally, we present the challenges facing the area and avenues for further research.
