Franco Zappa

Benchmarking Energy and Latency in TinyML: A Novel Method for Resource-Constrained AI

May 21, 2025

Abstract: The rise of IoT has increased the need for on-edge machine learning, with TinyML emerging as a promising solution for resource-constrained devices such as microcontroller units (MCUs). However, evaluating their performance remains challenging due to diverse architectures and application scenarios, and existing benchmarking solutions have significant limitations. This work introduces an alternative benchmarking methodology that integrates energy and latency measurements while distinguishing three execution phases: pre-inference, inference, and post-inference. Additionally, the setup ensures that the device operates without being powered by an external measurement unit, while automated testing can be leveraged to enhance statistical significance. To evaluate our setup, we tested the STM32N6 MCU, which includes an NPU for executing neural networks. Two configurations were considered: high-performance and low-power. The variation of the energy-delay product (EDP) was analyzed separately for each phase, providing insights into the impact of hardware configurations on energy efficiency. Each model was tested 1000 times to ensure statistically relevant results. Our findings demonstrate that reducing the core voltage and clock frequency improves the efficiency of pre- and post-processing without significantly affecting network execution performance. This approach can also be used for cross-platform comparisons to determine the most efficient inference platform and to quantify how pre- and post-processing overhead varies across different hardware implementations.
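As a rough illustration of the per-phase analysis the abstract describes, the sketch below computes an energy-delay product (energy multiplied by latency) for each execution phase and averages it over repeated runs. It is only a sketch under stated assumptions: the data layout, the function name `edp_per_phase`, and all numbers are hypothetical placeholders, not the authors' tooling or measured results.

```python
from statistics import mean

# Phase names follow the abstract; the dictionary layout is an assumption.
PHASES = ("pre_inference", "inference", "post_inference")


def edp_per_phase(runs):
    """Return the mean energy-delay product (J*s) of each phase.

    `runs` is a list of dicts mapping a phase name to a tuple
    (energy_in_joules, latency_in_seconds) for one test run.
    """
    result = {}
    for phase in PHASES:
        # EDP of one run is energy times latency; average across all runs.
        result[phase] = mean(run[phase][0] * run[phase][1] for run in runs)
    return result


# Hypothetical placeholder measurements (NOT data from the paper).
runs = [
    {
        "pre_inference": (2.0e-3, 4.0e-3),
        "inference": (1.0e-3, 2.0e-3),
        "post_inference": (5.0e-4, 1.0e-3),
    },
    {
        "pre_inference": (4.0e-3, 4.0e-3),
        "inference": (1.0e-3, 2.0e-3),
        "post_inference": (5.0e-4, 1.0e-3),
    },
]

edp = edp_per_phase(runs)
for phase in PHASES:
    print(f"{phase}: {edp[phase]:.3e} J*s")
```

Keeping the three phases separate makes it possible to compare two hardware configurations phase by phase rather than only by a single end-to-end figure.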

Non-Line-of-Sight Tracking and Mapping with an Active Corner Camera

Aug 02, 2022

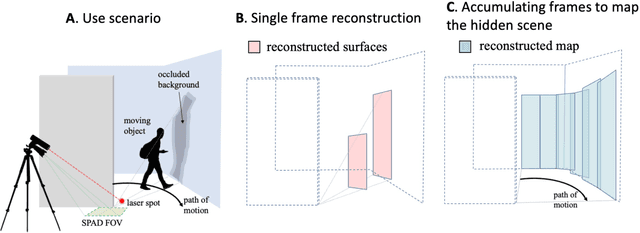

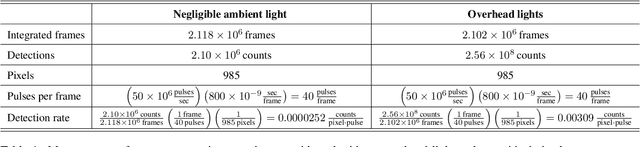

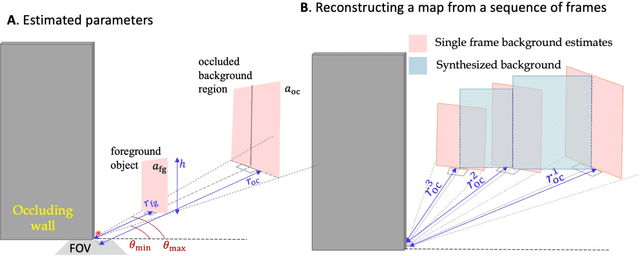

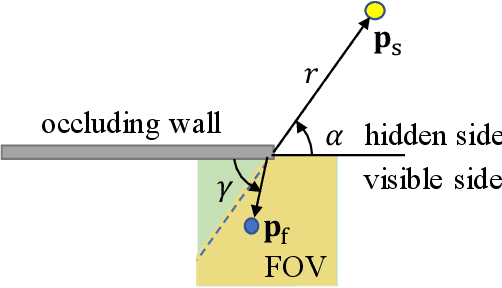

Abstract: The ability to form non-line-of-sight (NLOS) images of changing scenes could be transformative in a variety of fields, including search and rescue, autonomous vehicle navigation, and reconnaissance. Most existing active NLOS methods illuminate the hidden scene using a pulsed laser directed at a relay surface and collect time-resolved measurements of returning light. The prevailing approaches include raster scanning of a rectangular grid on a vertical wall opposite the volume of interest to generate a collection of confocal measurements. These are inherently limited by the need for laser scanning. Methods that avoid laser scanning track the moving parts of the hidden scene as one or two point targets. In this work, based on more complete optical response modeling yet still without multiple illumination positions, we demonstrate accurate reconstructions of objects in motion and a 'map' of the stationary scenery behind them. The ability to count, localize, and characterize the sizes of hidden objects in motion, combined with mapping of the stationary hidden scene, could greatly improve indoor situational awareness in a variety of applications.
