Fei Zhong

Fast vehicle detection algorithm based on lightweight YOLO7-tiny

Apr 17, 2023

Abstract: The swift and precise detection of vehicles plays a significant role in intelligent transportation systems. Current vehicle detection algorithms suffer from high computational complexity, a low detection rate, and limited feasibility on mobile devices. To address these issues, this paper proposes Ghost-YOLOv7, a lightweight vehicle detection algorithm based on YOLOv7-tiny (You Only Look Once version seven). First, the model width is scaled to 0.5 and the standard convolutions of the backbone network are replaced with Ghost convolutions, making the network lighter and improving detection speed. Then, a self-designed Ghost bi-directional feature pyramid network (Ghost-BiFPN) is embedded into the neck network to enhance the feature extraction capability of the algorithm and enrich semantic information. Next, a Ghost Decoupled Head (GDH) is employed for accurate prediction of vehicle location and category. Finally, a coordinate attention mechanism is introduced into the output layer to suppress environmental interference. The WIoU loss function is employed to further improve detection accuracy. Ablation experiment results on the PASCAL VOC dataset show that Ghost-YOLOv7 outperforms the original YOLOv7-tiny model, achieving a 29.8% reduction in computation, a 37.3% reduction in the number of parameters, a 35.1% reduction in model weight size, a 1.1% higher mean average precision (mAP), and a detection speed 27 FPS higher than the original algorithm. Ghost-YOLOv7 was also compared with other methods on the KITTI and BIT-vehicle datasets, and the results show that it has the best overall performance.
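To see why replacing standard convolutions with Ghost convolutions lightens the backbone, the weight counts of the two layer types can be compared directly. This is a minimal sketch assuming the Ghost module as defined in GhostNet: a primary k×k convolution produces c_out/s "intrinsic" feature maps, and cheap d×d depthwise operations derive the remaining maps from them. The abstract does not give Ghost-YOLOv7's channel counts, ratio `s`, or cheap-kernel size `d`, so the layer sizes below are hypothetical, and bias and batch-norm terms are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weight count of a GhostNet-style Ghost module (bias and BN ignored).

    A primary k x k convolution produces c_out // s intrinsic feature maps;
    a cheap d x d depthwise operation then derives (s - 1) ghost maps from
    each intrinsic map, and all maps are concatenated to give c_out channels.
    """
    if c_out % s != 0:
        raise ValueError("c_out must be divisible by the ratio s")
    intrinsic = c_out // s
    primary = c_in * intrinsic * k * k
    cheap = intrinsic * (s - 1) * d * d  # one d x d filter per ghost map
    return primary + cheap


if __name__ == "__main__":
    # Hypothetical layer: 128 -> 128 channels, 3 x 3 kernel.
    full = conv_params(128, 128, 3)
    ghost = ghost_params(128, 128, 3)
    # A width multiplier of 0.5 halves both c_in and c_out, so weights drop ~4x.
    half_width = conv_params(64, 64, 3)
    print(full, ghost, half_width)  # 147456 74304 36864
    print(round(full / ghost, 2))   # 1.98
```

With s = 2 the Ghost module needs roughly half the weights of the standard convolution; the saving approaches a factor of s as the number of input channels grows, because the cheap depthwise term becomes negligible next to the primary convolution.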

Light-YOLOv5: A Lightweight Algorithm for Improved YOLOv5 in Complex Fire Scenarios

Aug 29, 2022

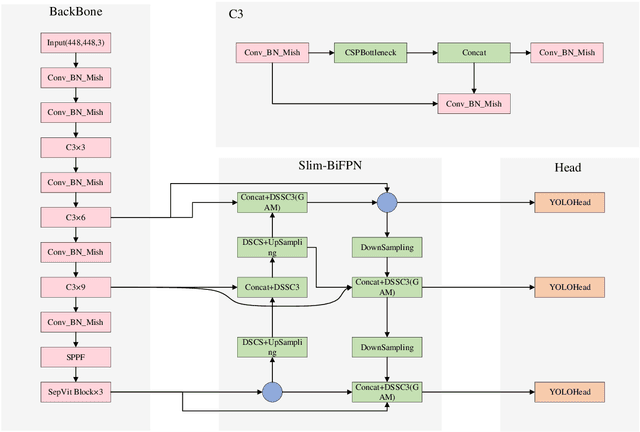

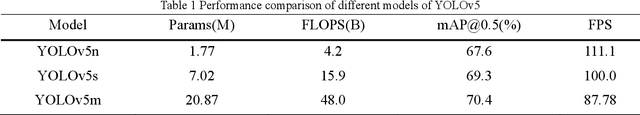

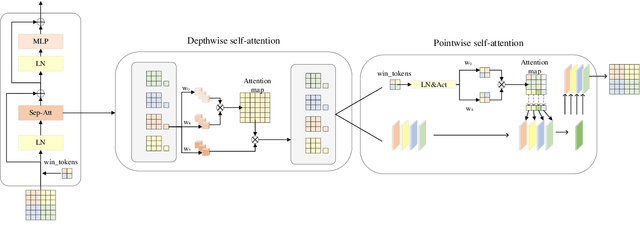

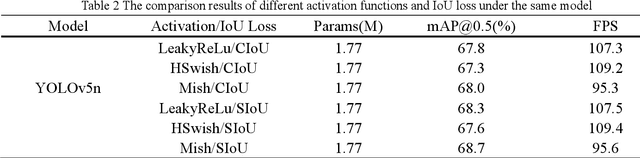

Abstract: Existing object detection algorithms suffer from poor detection accuracy, slow speed, and difficult deployment when applied to complex fire scenarios. To address these problems, this paper proposes Light-YOLOv5, a lightweight fire detection algorithm that balances speed and accuracy. First, the last layer of the backbone network is replaced with a SepViT Block to strengthen the backbone network's connection to global information; second, a Light-BiFPN neck network is designed to lighten the model while improving feature extraction; third, the Global Attention Mechanism (GAM) is fused into the network so that the model focuses more on global dimensional features; finally, the Mish activation function and the SIoU loss are used to speed up convergence and improve accuracy at the same time. Experimental results show that, compared with the original algorithm, Light-YOLOv5 improves mAP by 3.3%, reduces the number of parameters by 27.1%, decreases computation by 19.1%, and runs at 91.1 FPS. Even compared with the latest YOLOv7-tiny, Light-YOLOv5 achieves a 6.8% higher mAP, which demonstrates the effectiveness of the algorithm.
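The Mish activation mentioned above is defined as x·tanh(softplus(x)), where softplus(x) = ln(1 + e^x). The scalar sketch below only illustrates that definition; the abstract does not say where Mish is placed in Light-YOLOv5 or how it is implemented there, so this should not be read as the authors' code.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable softplus, ln(1 + e^x)."""
    # Rewriting as max(x, 0) + ln(1 + e^-|x|) avoids overflow of e^x for large x.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
        # Unlike ReLU, Mish is smooth and lets small negative values through.
        print(f"mish({x:+.1f}) = {mish(x):+.4f}")
```

Mish behaves like the identity for large positive inputs and decays smoothly toward zero for large negative ones; its smoothness, compared with ReLU's hard cutoff at zero, is the property usually credited for easier optimization, consistent with the faster convergence the abstract reports.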
