Faruk Kazi

Converging Dimensions: Information Extraction and Summarization through Multisource, Multimodal, and Multilingual Fusion

Jun 19, 2024

Abstract:Recent advances in large language models (LLMs) have led to new summarization strategies, offering an extensive toolkit for extracting important information. However, these approaches are frequently limited by their reliance on isolated sources of data. The amount of information that can be gathered is limited and covers a smaller range of themes, which introduces the possibility of falsified content and limited support for multilingual and multimodal data. The paper proposes a novel approach to summarization that tackles such challenges by utilizing the strength of multiple sources to deliver a more exhaustive and informative understanding of intricate topics. The research progresses beyond conventional, unimodal sources such as text documents and integrates a more diverse range of data, including YouTube playlists, pre-prints, and Wikipedia pages. The aforementioned varied sources are then converted into a unified textual representation, enabling a more holistic analysis. This multifaceted approach to summary generation empowers us to extract pertinent information from a wider array of sources. The primary tenet of this approach is to maximize information gain while minimizing information overlap and maintaining a high level of informativeness, which encourages the generation of highly coherent summaries.
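The abstract does not say how information gain and overlap are measured. As a rough illustration only, the trade-off can be sketched as a greedy selection in the style of maximal marginal relevance, a swapped-in technique and not necessarily the authors' method. The function names, the Jaccard word-overlap measure, and the `trade_off` weight below are all assumptions made for this sketch.

```python
def tokens(text):
    """Lowercased set of whitespace-separated words (a crude stand-in for real tokenization)."""
    return set(text.lower().split())


def jaccard(a, b):
    """Word-set overlap in [0, 1]; returns 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def select_sentences(sentences, query, k=2, trade_off=0.7):
    """Greedily pick up to k sentences, rewarding relevance to the query
    and penalizing overlap with sentences already chosen.

    Hypothetical helper: the scoring rule is an assumption, not taken from the paper.
    """
    query_tokens = tokens(query)
    remaining = list(sentences)
    selected = []
    while remaining and len(selected) < k:
        def score(sentence):
            relevance = jaccard(tokens(sentence), query_tokens)
            redundancy = max(
                (jaccard(tokens(sentence), tokens(chosen)) for chosen in selected),
                default=0.0,
            )
            return trade_off * relevance - (1 - trade_off) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Sentences pooled from several sources; the second one duplicates the first.
pooled = [
    "drones survey the flooded area",
    "drones survey the flooded area",
    "rescue teams reach the village by boat",
]
summary = select_sentences(pooled, query="drones rescue flooded village", k=2)
```

In this toy run the duplicate sentence is skipped in favor of the less relevant but non-overlapping one, which is the behavior the abstract's "maximize gain, minimize overlap" goal suggests.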

HMD Vision-based Teleoperating UGV and UAV for Hostile Environment using Deep Learning

Sep 14, 2016

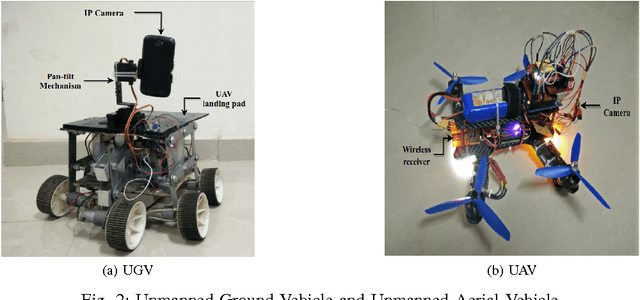

Abstract:Maintaining a robust antiterrorist task force has become imperative in recent times with the resurgence of rogue elements in society. A well-equipped combat force safeguards the safety and security of citizens and the integrity of the sovereign state. In this paper we propose a novel teleoperated robot which can play a major role in combat, rescue and reconnaissance missions by substantially reducing the loss of human soldiers in such hostile environments. The proposed robotic solution consists of an unmanned ground vehicle equipped with an IP camera visual system broadcasting real-time video data to a remote cloud server. Building on advances in machine learning algorithms for computer vision, we incorporate state-of-the-art deep convolutional neural networks to identify and predict individuals with malevolent intent. The classification is performed on every frame of the video stream by the trained network in the cloud server. The predicted output of the network is overlaid on the video stream with specific colour marks and prediction percentage. Finally, the data is resized into half side-by-side format and streamed to the head-mounted display worn by the human controller, which facilitates a first-person view of the scenario. The ground vehicle is also coupled with an unmanned aerial vehicle for aerial surveillance. The proposed scheme is an assistive system and the final decision lies with the human handler.
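The abstract does not describe how frames are resized. As a minimal sketch, assuming the usual meaning of half side-by-side (each eye's view squeezed to half the horizontal resolution and packed into one frame) and a single camera whose image is shown to both eyes, the packing step could look like the following. The function name `to_half_sbs` and the column-dropping downscale are assumptions for illustration; a real pipeline would more likely use interpolation from an image library.

```python
def to_half_sbs(frame):
    """Pack a mono frame into half side-by-side layout.

    frame: list of rows, each row a list of pixel values.
    Returns a frame of the same size whose left and right halves each hold
    the full view at half horizontal resolution (hypothetical helper).
    """
    packed = []
    for row in frame:
        half = row[::2]             # keep every other column: half horizontal resolution
        packed.append(half + half)  # left-eye half followed by right-eye half
    return packed


# A tiny 2x4 "frame" of pixel values.
frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
sbs = to_half_sbs(frame)
```

The output keeps the original frame dimensions, so it can be streamed as ordinary video while the head-mounted display splits it into per-eye views.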
