Fangneng Zhan

Deep Monocular 3D Human Pose Estimation via Cascaded Dimension-Lifting

Apr 08, 2021

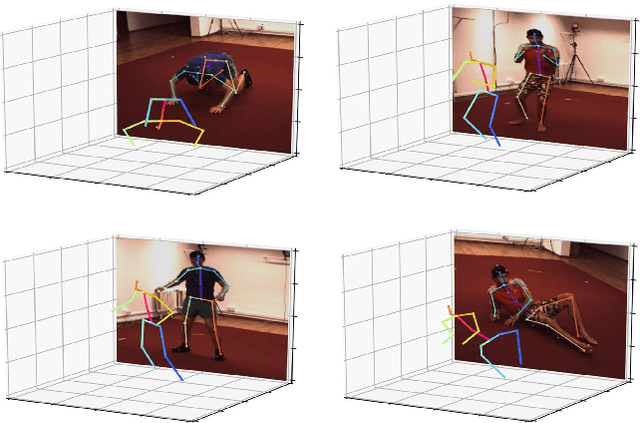

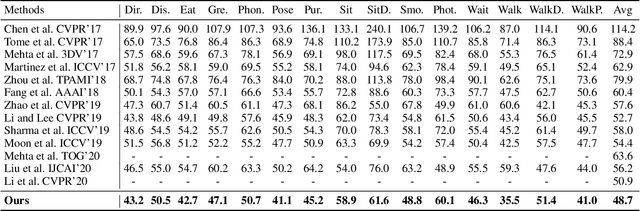

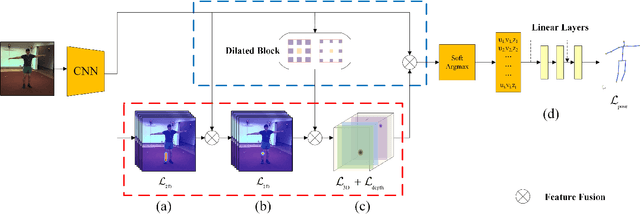

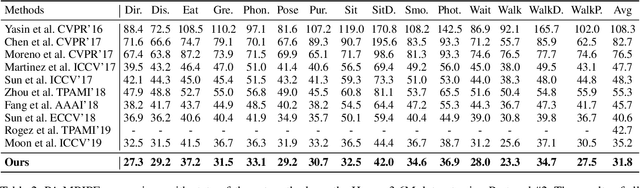

Abstract: 3D pose estimation from a single image is a challenging problem due to depth ambiguity. One class of previous methods lifts 2D joints, obtained from external 2D pose detectors, to the 3D space. However, this type of approach discards the contextual information in images, which is a strong cue for 3D pose estimation. Meanwhile, some other methods predict the joints directly from monocular images but adopt a 2.5D output representation $P^{2.5D} = (u,v,z^{r})$, where both $u$ and $v$ are in the image space but $z^{r}$ is in root-relative 3D space. Thus, ground-truth information (e.g., the depth of the root joint from the camera) is normally needed to transform the 2.5D output to the 3D space, which limits the applicability in practice. In this work, we propose a novel end-to-end framework that not only exploits the contextual information but also produces the output directly in the 3D space via cascaded dimension-lifting. Specifically, we decompose the task of lifting pose from 2D image space to 3D spatial space into several sequential sub-tasks: 1) kinematic skeleton and individual joint estimation in 2D space, 2) root-relative depth estimation, and 3) lifting to the 3D space, each of which employs direct supervision and contextual image features to guide the learning process. Extensive experiments show that the proposed framework achieves state-of-the-art performance on two widely used 3D human pose datasets (Human3.6M, MuPoTS-3D).
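To make the 2.5D limitation concrete, the sketch below shows how a 2.5D output $(u, v, z^{r})$ is typically converted to camera-space 3D coordinates under a standard pinhole camera model. It is only an illustration of why the root depth is needed, not the paper's method: the function name `lift_2p5d_to_3d`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the millimetre units are all assumptions introduced here.

```python
from typing import List, Tuple

Joint25D = Tuple[float, float, float]  # (u, v, z_rel): pixels, pixels, root-relative depth
Joint3D = Tuple[float, float, float]   # (x, y, z) in camera space


def lift_2p5d_to_3d(
    joints_2p5d: List[Joint25D],
    root_depth: float,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Joint3D]:
    """Back-project 2.5D joints to camera space with a pinhole model.

    root_depth is the absolute depth of the root joint from the camera.
    This is the quantity that 2.5D methods usually take from ground truth.
    """
    joints_3d = []
    for u, v, z_rel in joints_2p5d:
        z = z_rel + root_depth      # absolute depth of this joint
        x = (u - cx) * z / fx       # invert u = fx * x / z + cx
        y = (v - cy) * z / fy       # invert v = fy * y / z + cy
        joints_3d.append((x, y, z))
    return joints_3d


if __name__ == "__main__":
    # Hypothetical values: root joint at the principal point, 4000 mm away.
    pose = [(500.0, 500.0, 0.0), (600.0, 400.0, 100.0)]
    print(lift_2p5d_to_3d(pose, root_depth=4000.0, fx=1000.0, fy=1000.0, cx=500.0, cy=500.0))
```

Without `root_depth`, the $x$ and $y$ coordinates cannot be recovered from $(u, v, z^{r})$ alone, which is the dependency the abstract describes.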

GMLight: Lighting Estimation via Geometric Distribution Approximation

Feb 20, 2021

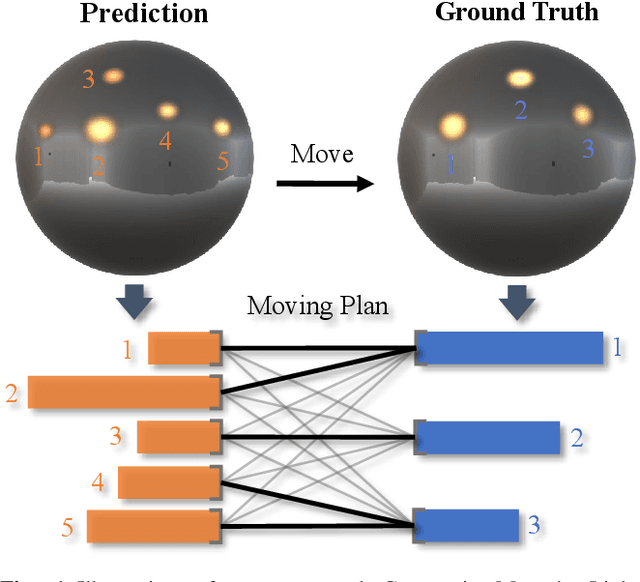

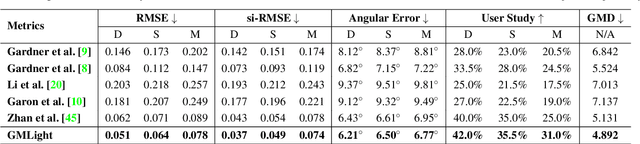

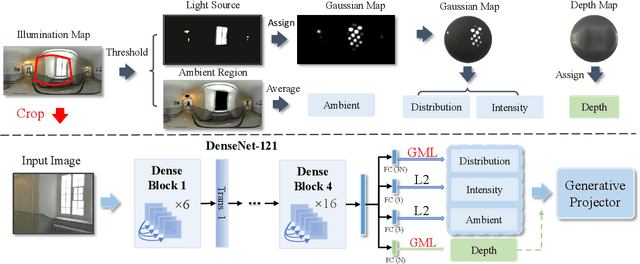

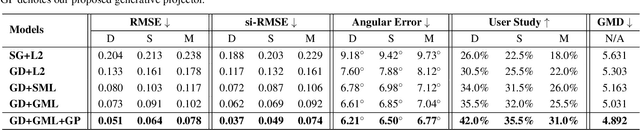

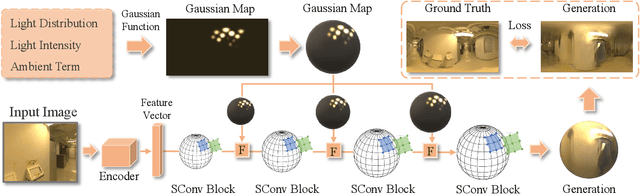

Abstract: Lighting estimation from a single image is an essential yet challenging task in computer vision and computer graphics. Existing works estimate lighting by regressing representative illumination parameters or generating illumination maps directly. However, these methods often suffer from poor accuracy and generalization. This paper presents Geometric Mover's Light (GMLight), a lighting estimation framework that employs a regression network and a generative projector for effective illumination estimation. We parameterize illumination scenes in terms of the geometric light distribution, light intensity, ambient term, and auxiliary depth, and estimate them as a pure regression task. Inspired by the earth mover's distance, we design a novel geometric mover's loss to guide the accurate regression of light distribution parameters. With the estimated lighting parameters, the generative projector synthesizes panoramic illumination maps with realistic appearance and frequency. Extensive experiments show that GMLight achieves accurate illumination estimation and superior fidelity in relighting for 3D object insertion.

EMLight: Lighting Estimation via Spherical Distribution Approximation

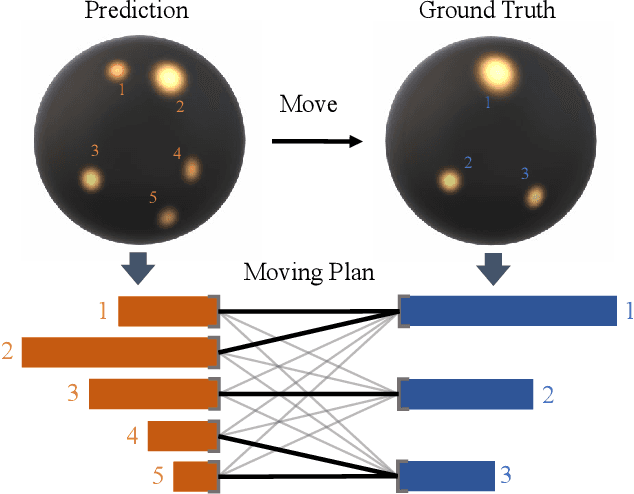

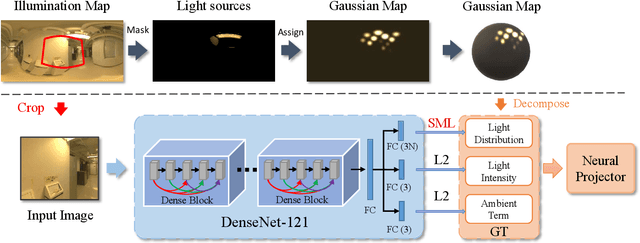

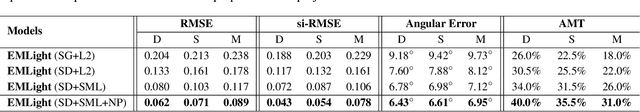

Dec 21, 2020

Abstract: Illumination estimation from a single image is critical in 3D rendering and has been investigated extensively in the computer vision and computer graphics research communities. However, existing works estimate illumination by either regressing light parameters or generating illumination maps, approaches that are often hard to optimize or tend to produce inaccurate predictions. We propose Earth Mover Light (EMLight), an illumination estimation framework that leverages a regression network and a neural projector for accurate illumination estimation. We decompose the illumination map into spherical light distribution, light intensity and the ambient term, and define the illumination estimation as a parameter regression task for the three illumination components. Motivated by the earth mover's distance, we design a novel spherical mover's loss that guides the accurate regression of light distribution parameters by taking advantage of the subtleties of spherical distribution. Under the guidance of the predicted spherical distribution, light intensity and ambient term, the neural projector synthesizes panoramic illumination maps with realistic light frequency. Extensive experiments show that EMLight achieves accurate illumination estimation and that the generated relighting in 3D object embedding exhibits superior plausibility and fidelity as compared with state-of-the-art methods.
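For readers unfamiliar with the earth mover's distance that motivates the loss, here is a minimal sketch of the classical one-dimensional case. It is not the spherical mover's loss from the paper, whose formulation the abstract does not give. It assumes two histograms of equal total mass over the same unit-spaced bins, and the function name `emd_1d` is hypothetical.

```python
from typing import Sequence


def emd_1d(p: Sequence[float], q: Sequence[float]) -> float:
    """Earth mover's distance between two 1D histograms on unit-spaced bins.

    Assumes p and q have the same length and the same total mass. In 1D the
    optimal transport cost equals the sum of absolute differences between
    the two cumulative distributions.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    carried = 0.0  # mass that still has to be moved past the current bin
    cost = 0.0
    for p_i, q_i in zip(p, q):
        carried += p_i - q_i
        cost += abs(carried)  # moving |carried| units one bin to the right or left
    return cost


if __name__ == "__main__":
    # All mass moves two bins to the right, so the cost is 2.
    print(emd_1d([1.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
```

Unlike a per-bin squared error, this cost grows with how far the mass has to travel, which is the property that makes a mover's-style loss attractive when regressing a light distribution.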

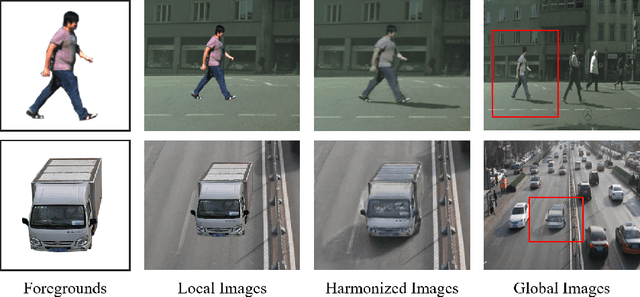

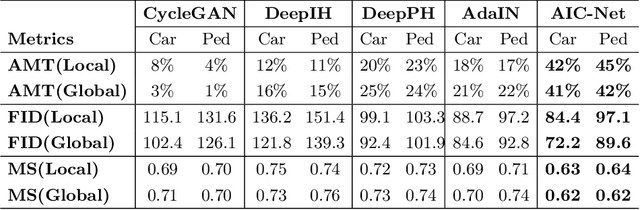

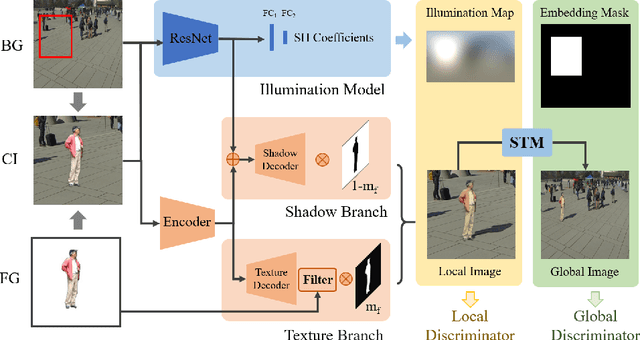

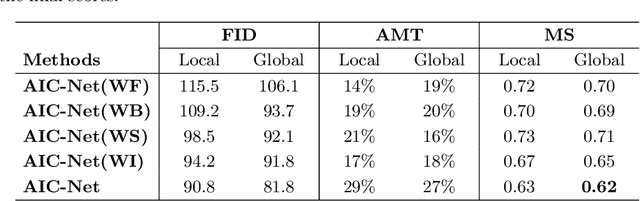

Adversarial Image Composition with Auxiliary Illumination

Sep 17, 2020

Abstract: Dealing with the inconsistency between a foreground object and a background image is a challenging task in high-fidelity image composition. State-of-the-art methods strive to harmonize the composed image by adapting the style of foreground objects to be compatible with the background image, whereas the potential shadows of foreground objects within the composed image, which are critical to composition realism, are largely neglected. In this paper, we propose an Adversarial Image Composition Net (AIC-Net) that achieves realistic image composition by considering potential shadows that the foreground object projects in the composed image. A novel branched generation mechanism is proposed, which disentangles the generation of shadows and the transfer of foreground styles so that the two tasks can be accomplished simultaneously. A differentiable spatial transformation module is designed which bridges the local harmonization and the global harmonization to achieve their joint optimization effectively. Extensive experiments on pedestrian and car composition tasks show that the proposed AIC-Net achieves superior composition performance qualitatively and quantitatively.

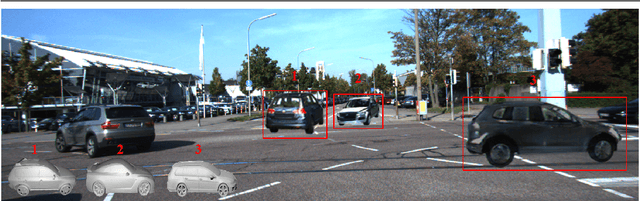

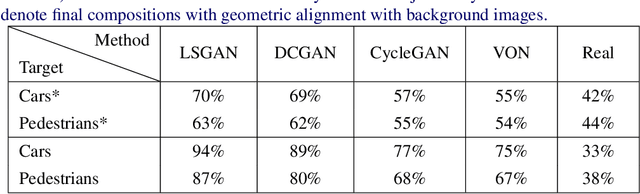

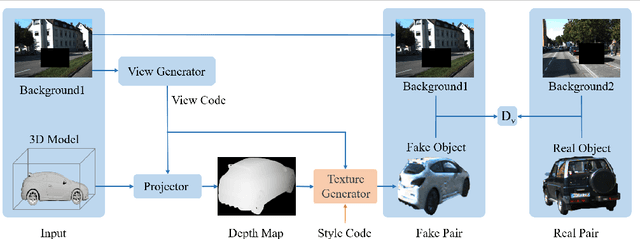

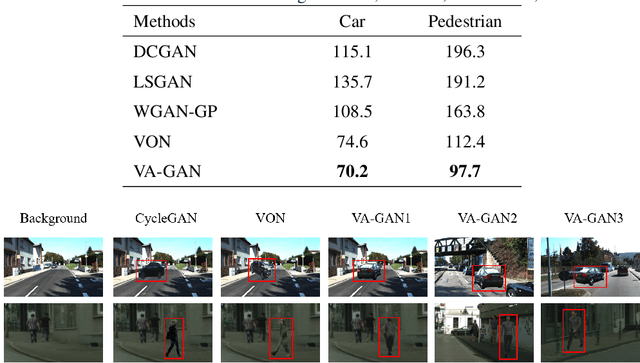

Towards Realistic 3D Embedding via View Alignment

Jul 14, 2020

Abstract: Recent advances in generative adversarial networks (GANs) have achieved great success in automated image composition, which generates new images by embedding foreground objects of interest into background images automatically. However, most existing works deal with foreground objects in two-dimensional (2D) images, though foreground objects in three-dimensional (3D) models are more flexible with 360-degree view freedom. This paper presents an innovative View Alignment GAN (VA-GAN) that composes new images by embedding 3D models into 2D background images realistically and automatically. VA-GAN consists of a texture generator and a differential discriminator that are inter-connected and end-to-end trainable. The differential discriminator guides the learning of geometric transformations from background images so that the composed 3D models can be aligned with the background images with realistic poses and views. The texture generator adopts a novel view encoding mechanism for generating accurate object textures for the 3D models under the estimated views. Extensive experiments over two synthesis tasks (car synthesis with KITTI and pedestrian synthesis with Cityscapes) show that VA-GAN achieves high-fidelity composition qualitatively and quantitatively as compared with state-of-the-art generation methods.

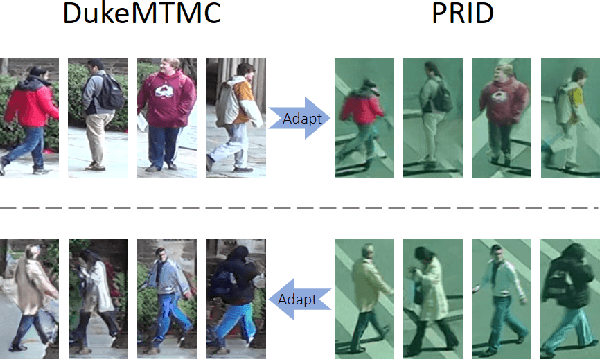

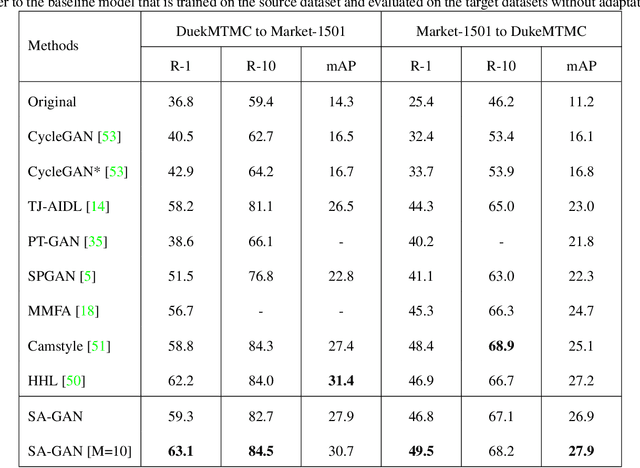

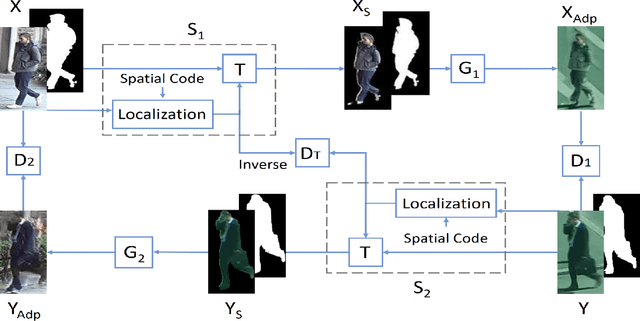

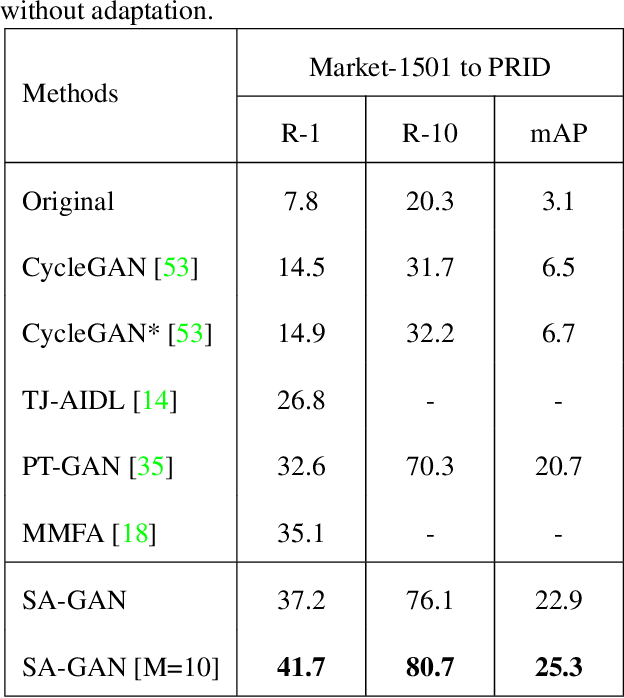

Spatial-Aware GAN for Unsupervised Person Re-identification

Nov 26, 2019

Abstract: Recent person re-identification research has achieved great success by learning from a large number of labeled person images. However, the learned models often experience significant performance drops when applied to images collected in a different environment. Unsupervised domain adaptation (UDA) has been investigated to mitigate this constraint, but most existing systems adapt images at the pixel level only and ignore obvious discrepancies at the spatial level. This paper presents an innovative UDA-based person re-identification network that is capable of adapting images at both spatial and pixel levels simultaneously. A novel disentangled cycle-consistency loss is designed which guides the learning of spatial-level and pixel-level adaptation in a collaborative manner. In addition, a novel multi-modal mechanism is incorporated which is capable of generating images of different geometry views and augmenting training images effectively. Extensive experiments over a number of public datasets show that the proposed UDA network achieves superior person re-identification performance as compared with the state-of-the-art.

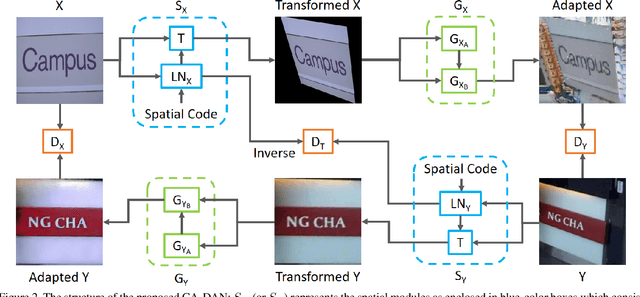

GA-DAN: Geometry-Aware Domain Adaptation Network for Scene Text Detection and Recognition

Jul 23, 2019

Abstract: Recent adversarial learning research has achieved very impressive progress in modelling cross-domain data shifts in appearance space, but its counterpart in modelling cross-domain shifts in geometry space lags far behind. This paper presents an innovative Geometry-Aware Domain Adaptation Network (GA-DAN) that is capable of modelling cross-domain shifts concurrently in both geometry space and appearance space and realistically converting images across domains with very different characteristics. In the proposed GA-DAN, a novel multi-modal spatial learning technique is designed which converts a source-domain image into multiple images of different spatial views as in the target domain. A new disentangled cycle-consistency loss is introduced which balances the cycle consistency in appearance and geometry spaces and improves the learning of the whole network greatly. The proposed GA-DAN has been evaluated for the classic scene text detection and recognition tasks, and experiments show that the domain-adapted images achieve superior scene text detection and recognition performance when applied to network training.

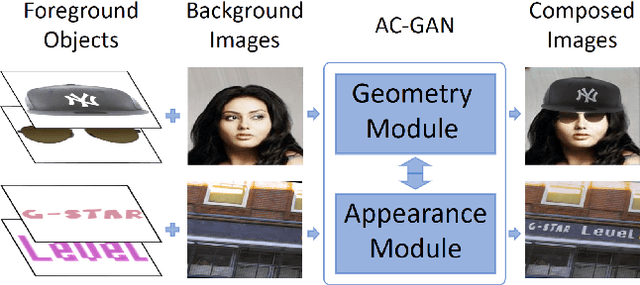

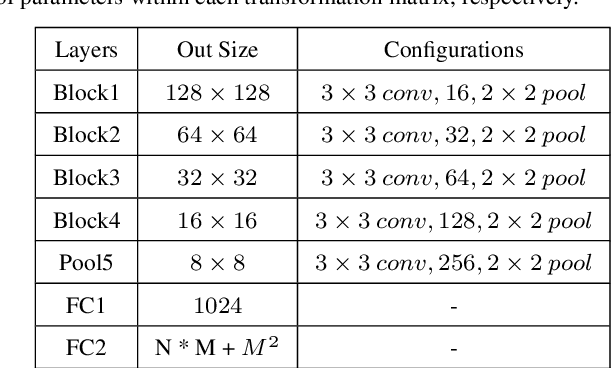

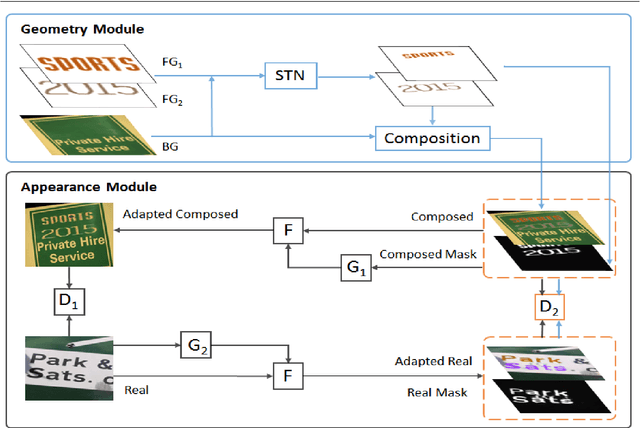

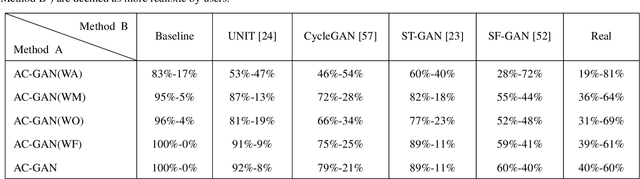

Adaptive Composition GAN towards Realistic Image Synthesis

May 14, 2019

Abstract: Despite the rapid progress of generative adversarial networks (GANs) in image synthesis in recent years, current approaches work in either the geometry domain or the appearance domain, which tends to introduce various synthesis artifacts. This paper presents an innovative Adaptive Composition GAN (AC-GAN) that incorporates image synthesis in geometry and appearance domains into an end-to-end trainable network and achieves synthesis realism in both domains simultaneously. An innovative hierarchical synthesis mechanism is designed which is capable of generating realistic geometry and composition when multiple foreground objects with or without occlusions are involved in synthesis. In addition, a novel attention mask is introduced to guide the appearance adaptation to the embedded foreground objects, which helps preserve image details and resolution and also provides a better reference for synthesis in the geometry domain. Extensive experiments on scene text image synthesis, automated portrait editing and indoor rendering tasks show that the proposed AC-GAN achieves superior synthesis performance qualitatively and quantitatively.

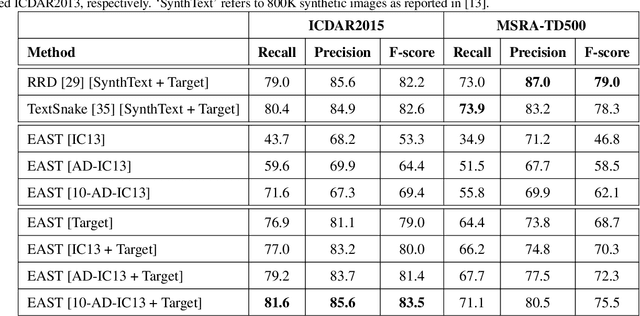

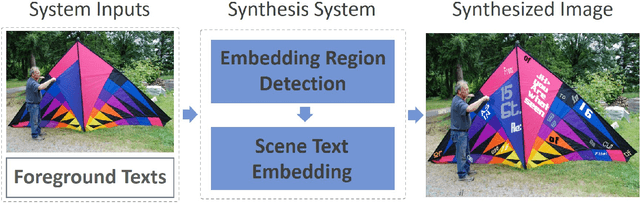

Scene Text Synthesis for Efficient and Effective Deep Network Training

Jan 26, 2019

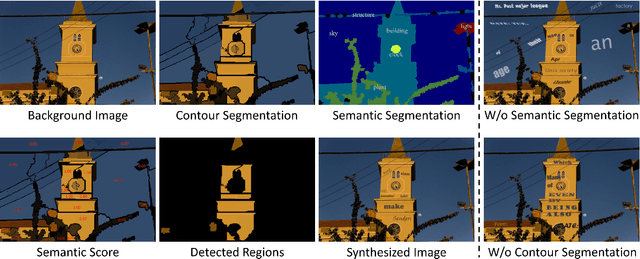

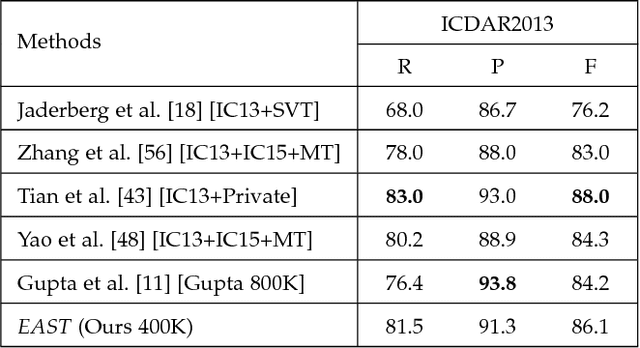

Abstract: A large number of annotated training images is critical for training accurate and robust deep network models, but collecting them is often time-consuming and costly. Image synthesis alleviates this constraint by generating annotated training images automatically, which has attracted increasing interest in recent deep learning research. We develop an innovative image synthesis technique that composes annotated training images by realistically embedding foreground objects of interest (OOI) into background images. The proposed technique consists of two key components that in principle boost the usefulness of the synthesized images in deep network training. The first is context-aware semantic coherence, which ensures that the OOI are placed around semantically coherent regions within the background image. The second is harmonious appearance adaptation, which ensures that the embedded OOI are agreeable to the surrounding background in both geometry alignment and appearance realism. The proposed technique has been evaluated over two related but very different computer vision challenges, namely, scene text detection and scene text recognition. Experiments over a number of public datasets demonstrate the effectiveness of our proposed image synthesis technique: the use of our synthesized images in deep network training is capable of achieving similar or even better scene text detection and scene text recognition performance as compared with using real images.

Spatial Fusion GAN for Image Synthesis

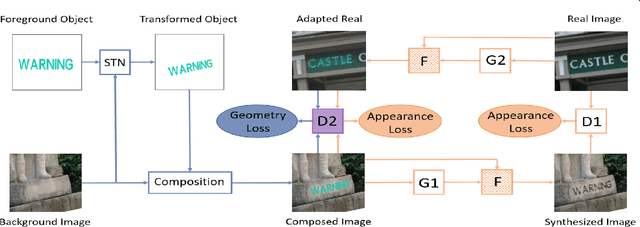

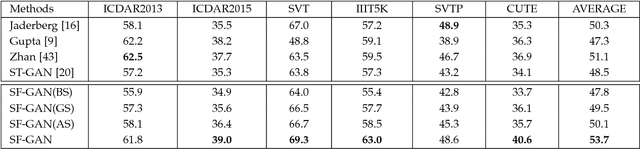

Dec 14, 2018

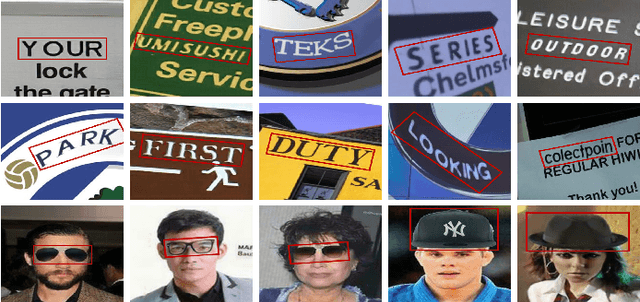

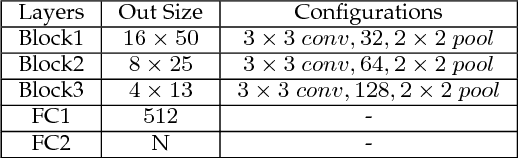

Abstract: Recent advances in generative adversarial networks (GANs) have shown great potential in realistic image synthesis, whereas most existing works address synthesis realism in either appearance space or geometry space but few in both. This paper presents an innovative Spatial Fusion GAN (SF-GAN) that combines a geometry synthesizer and an appearance synthesizer to achieve synthesis realism in both geometry and appearance spaces. The geometry synthesizer learns contextual geometries of background images and transforms and places foreground objects into the background images unanimously. The appearance synthesizer adjusts the color, brightness and styles of the foreground objects and embeds them into background images harmoniously, where a guided filter is introduced for detail preservation. The two synthesizers are inter-connected as mutual references and can be trained end-to-end without supervision. The SF-GAN has been evaluated in two tasks: (1) realistic scene text image synthesis for training better recognition models; (2) glasses and hat wearing, which realistically matches glasses and hats with real portraits. Qualitative and quantitative comparisons with the state-of-the-art demonstrate the superiority of the proposed SF-GAN.
