Ewa Szczurek

University of Warsaw, Helmholtz Center Munich

seqme: a Python library for evaluating biological sequence design

Nov 06, 2025

Abstract: Recent advances in computational methods for designing biological sequences have sparked the development of metrics to evaluate these methods' performance in terms of the fidelity of the designed sequences to a target distribution and their attainment of desired properties. However, a single software library implementing these metrics was lacking. In this work we introduce seqme, a modular and highly extendable open-source Python library, containing model-agnostic metrics for evaluating computational methods for biological sequence design. seqme considers three groups of metrics: sequence-based, embedding-based, and property-based, and is applicable to a wide range of biological sequences: small molecules, DNA, ncRNA, mRNA, peptides and proteins. The library offers a number of embedding and property models for biological sequences, as well as diagnostics and visualization functions to inspect the results. seqme can be used to evaluate both one-shot and iterative computational design methods.

PepCompass: Navigating peptide embedding spaces using Riemannian Geometry

Oct 02, 2025

Abstract: Antimicrobial peptide discovery is challenged by the astronomical size of peptide space and the relative scarcity of active peptides. Generative models provide continuous latent "maps" of peptide space, but conventionally ignore decoder-induced geometry and rely on flat Euclidean metrics, rendering exploration and optimization distorted and inefficient. Prior manifold-based remedies assume fixed intrinsic dimensionality, which critically fails in practice for peptide data. Here, we introduce PepCompass, a geometry-aware framework for peptide exploration and optimization. At its core, we define a Union of $\kappa$-Stable Riemannian Manifolds $\mathbb{M}^{\kappa}$, a family of decoder-induced manifolds that captures local geometry while ensuring computational stability. We propose two local exploration methods: Second-Order Riemannian Brownian Efficient Sampling, which provides a convergent second-order approximation to Riemannian Brownian motion, and Mutation Enumeration in Tangent Space, which reinterprets tangent directions as discrete amino-acid substitutions. Combining these yields Local Enumeration Bayesian Optimization (LE-BO), an efficient algorithm for local activity optimization. Finally, we introduce Potential-minimizing Geodesic Search (PoGS), which interpolates between prototype embeddings along property-enriched geodesics, biasing discovery toward seeds, i.e. peptides with favorable activity. In-vitro validation confirms the effectiveness of PepCompass: PoGS yields four novel seeds, and subsequent optimization with LE-BO discovers 25 highly active peptides with broad-spectrum activity, including against resistant bacterial strains. These results demonstrate that geometry-informed exploration provides a powerful new paradigm for antimicrobial peptide design.

Mamba Goes HoME: Hierarchical Soft Mixture-of-Experts for 3D Medical Image Segmentation

Jul 08, 2025

Abstract: In recent years, artificial intelligence has significantly advanced medical image segmentation. However, challenges remain, including efficient 3D medical image processing across diverse modalities and handling data variability. In this work, we introduce Hierarchical Soft Mixture-of-Experts (HoME), a two-level token-routing layer for efficient long-context modeling, specifically designed for 3D medical image segmentation. Built on the Mamba state-space model (SSM) backbone, HoME enhances sequential modeling through sparse, adaptive expert routing. The first stage employs a Soft Mixture-of-Experts (SMoE) layer to partition input sequences into local groups, routing tokens to specialized per-group experts for localized feature extraction. The second stage aggregates these outputs via a global SMoE layer, enabling cross-group information fusion and global context refinement. This hierarchical design, combining local expert routing with global expert refinement, improves generalizability and segmentation performance, surpassing state-of-the-art results across datasets from the three most commonly used 3D medical imaging modalities and across varying data quality.

ProSpero: Active Learning for Robust Protein Design Beyond Wild-Type Neighborhoods

May 28, 2025

Abstract: Designing protein sequences of both high fitness and novelty is a challenging task in data-efficient protein engineering. Exploration beyond wild-type neighborhoods often leads to biologically implausible sequences or relies on surrogate models that lose fidelity in novel regions. Here, we propose ProSpero, an active learning framework in which a frozen pre-trained generative model is guided by a surrogate updated from oracle feedback. By integrating fitness-relevant residue selection with biologically-constrained Sequential Monte Carlo sampling, our approach enables exploration beyond wild-type neighborhoods while preserving biological plausibility. We show that our framework remains effective even when the surrogate is misspecified. ProSpero consistently outperforms or matches existing methods across diverse protein engineering tasks, retrieving sequences of both high fitness and novelty.

Factor Analysis with Correlated Topic Model for Multi-Modal Data

Apr 26, 2025

Abstract: Integrating various data modalities brings valuable insights into underlying phenomena. Multimodal factor analysis (FA) uncovers shared axes of variation underlying different simple data modalities, where each sample is represented by a vector of features. However, FA is not suited for structured data modalities, such as text or single-cell sequencing data, where multiple data points are measured per sample and exhibit a clustering structure. To overcome this challenge, we introduce FACTM, a novel, multi-view and multi-structure Bayesian model that combines FA with correlated topic modeling and is optimized using variational inference. Additionally, we introduce a method for rotating latent factors to enhance interpretability with respect to binary features. On text and video benchmarks as well as real-world music and COVID-19 datasets, we demonstrate that FACTM outperforms other methods in identifying clusters in structured data, and integrating them with simple modalities via the inference of shared, interpretable factors.

Targeted AMP generation through controlled diffusion with efficient embeddings

Apr 24, 2025

Abstract: Deep learning-based antimicrobial peptide (AMP) discovery faces critical challenges such as low experimental hit rates as well as the need for nuanced controllability and efficient modeling of peptide properties. To address these challenges, we introduce OmegAMP, a framework that leverages a diffusion-based generative model with efficient low-dimensional embeddings, precise controllability mechanisms, and novel classifiers with drastically reduced false positive rates for candidate filtering. OmegAMP enables the targeted generation of AMPs with specific physicochemical properties, activity profiles, and species-specific effectiveness. Moreover, it maximizes sample diversity while ensuring faithfulness to the underlying data distribution during generation. We demonstrate that OmegAMP achieves state-of-the-art performance across all stages of the AMP discovery pipeline, significantly advancing the potential of computational frameworks in combating antimicrobial resistance.

Unified Molecule Generation and Property Prediction

Apr 23, 2025

Abstract: Modeling the joint distribution of the data samples and their properties makes it possible to construct a single model for both data generation and property prediction, with synergistic capabilities reaching beyond purely generative or predictive models. However, training joint models presents daunting architectural and optimization challenges. Here, we propose Hyformer, a transformer-based joint model that successfully blends the generative and predictive functionalities, using an alternating attention mask together with a unified pre-training scheme. We show that Hyformer rivals other joint models, as well as state-of-the-art molecule generation and property prediction models. Additionally, we show the benefits of joint modeling in downstream tasks of molecular representation learning, hit identification and antimicrobial peptide design.

De Novo Drug Design with Joint Transformers

Oct 03, 2023

Abstract: De novo drug design requires simultaneously generating novel molecules outside of training data and predicting their target properties, making it a hard task for generative models. To address this, we propose Joint Transformer, which combines a Transformer decoder, a Transformer encoder, and a predictor in a joint generative model with shared weights. We show that training the model with a penalized log-likelihood objective results in state-of-the-art performance in molecule generation, while decreasing the prediction error on newly sampled molecules by 42% compared to a fine-tuned decoder-only Transformer. Finally, we propose a probabilistic black-box optimization algorithm that employs Joint Transformer to generate novel molecules with improved target properties, as compared to the training data, outperforming other SMILES-based optimization methods in de novo drug design.

Artificial intelligence-driven antimicrobial peptide discovery

Aug 21, 2023

Abstract: Antimicrobial peptides (AMPs) are emerging as promising agents against antimicrobial resistance, providing an alternative to conventional antibiotics. Artificial intelligence (AI) has revolutionized AMP discovery through both discrimination and generation approaches. The discriminators aid the identification of promising candidates by predicting key peptide properties such as activity and toxicity, while the generators learn the distribution over peptides and enable sampling novel AMP candidates, either de novo or as analogues of a prototype peptide. Moreover, the controlled generation of AMPs with desired properties is achieved by discriminator-guided filtering, positive-only learning, latent space sampling, as well as conditional and optimized generation. Here we review recent achievements in AI-driven AMP discovery, highlighting the most exciting directions.

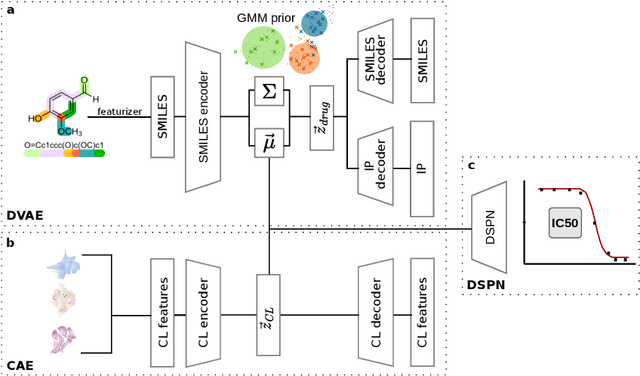

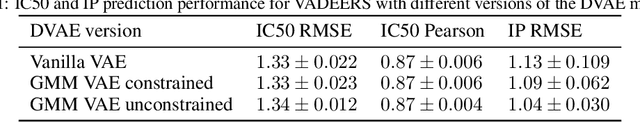

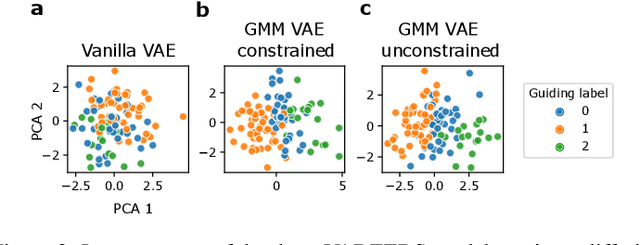

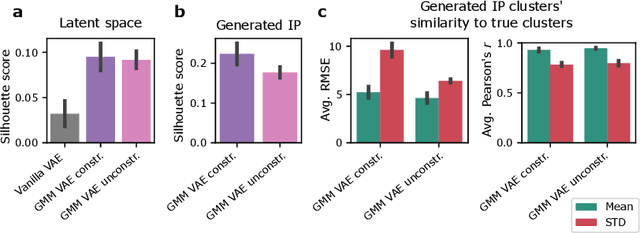

A generative recommender system with GMM prior for cancer drug generation and sensitivity prediction

Jun 07, 2022

Abstract: The recent emergence of high-throughput drug screening assays has sparked intensive development of machine learning methods, including models for predicting the sensitivity of cancer cell lines to anti-cancer drugs, as well as methods for generating potential drug candidates. However, the concept of generating compounds with specific properties while simultaneously modeling their efficacy against cancer cell lines has not been comprehensively explored. To address this need, we present VADEERS, a Variational Autoencoder-based Drug Efficacy Estimation Recommender System. The generation of compounds is performed by a novel variational autoencoder with a semi-supervised Gaussian Mixture Model (GMM) prior. The prior defines a clustering in the latent space, where the clusters are associated with specific drug properties. In addition, VADEERS is equipped with a cell line autoencoder and a sensitivity prediction network. The model combines data for SMILES string representations of anti-cancer drugs, their inhibition profiles against a panel of protein kinases, the cell lines' biological features, and measurements of the sensitivity of the cell lines to the drugs. The evaluated variants of VADEERS achieve a high Pearson correlation of r = 0.87 between true and predicted drug sensitivity estimates. We train the GMM prior in such a way that the clusters in the latent space correspond to a pre-computed clustering of the drugs by their inhibitory profiles. We show that the learned latent representations and newly generated data points accurately reflect the given clustering. In summary, VADEERS offers a comprehensive model of drug and cell line properties and the relationships between them, as well as a guided generation of novel compounds.
