Doruk Senkal

EgoCHARM: Resource-Efficient Hierarchical Activity Recognition using an Egocentric IMU Sensor

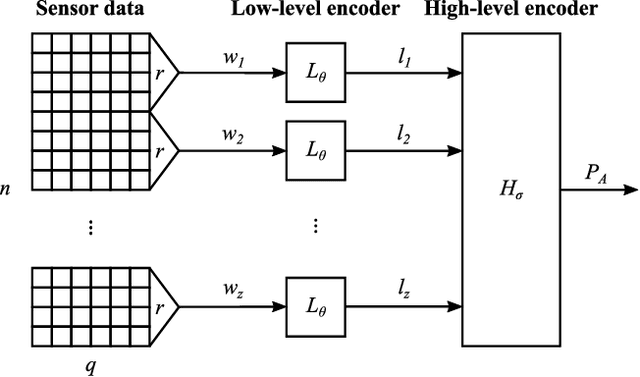

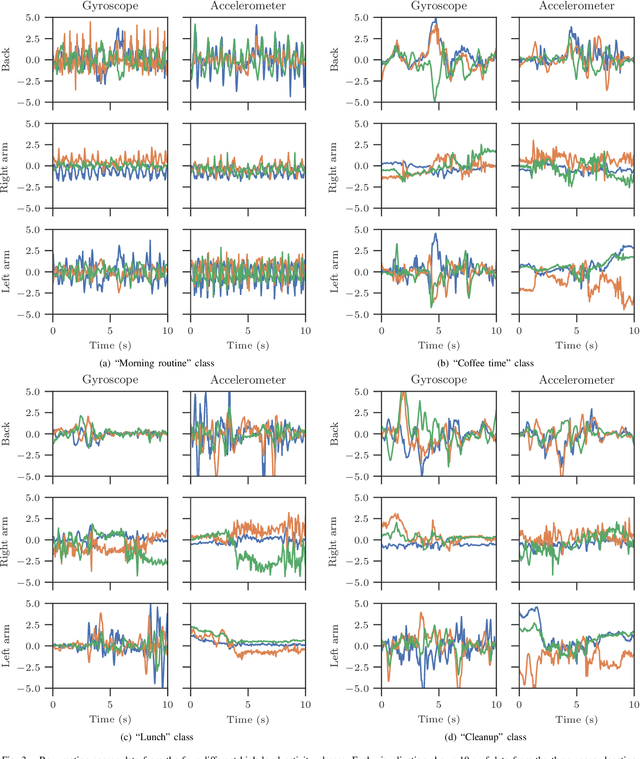

Apr 24, 2025

Abstract: Human activity recognition (HAR) on smartglasses has various use cases, including health/fitness tracking and input for context-aware AI assistants. However, current approaches for egocentric activity recognition suffer from low performance or are resource-intensive. In this work, we introduce a resource (memory, compute, power, sample) efficient machine learning algorithm, EgoCHARM, for recognizing both high level and low level activities using a single egocentric (head-mounted) Inertial Measurement Unit (IMU). Our hierarchical algorithm employs a semi-supervised learning strategy, requiring primarily high level activity labels for training, to learn generalizable low level motion embeddings that can be effectively utilized for low level activity recognition. We evaluate our method on 9 high level and 3 low level activities achieving 0.826 and 0.855 F1 scores on high level and low level activity recognition respectively, with just 63k high level and 22k low level model parameters, allowing the low level encoder to be deployed directly on current IMU chips with compute. Lastly, we present results and insights from a sensitivity analysis and highlight the opportunities and limitations of activity recognition using egocentric IMUs.
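The two-level structure described in this abstract can be illustrated with a rough sketch. This is not the EgoCHARM implementation: the hand-written statistics below stand in for the learned low level encoder and high level model, and the function names, window size, and synthetic 3-axis stream are all assumptions made for illustration.

```python
import random
from statistics import mean, pstdev


def split_windows(samples, size):
    """Split a list of (ax, ay, az) samples into non-overlapping windows."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def low_level_embed(window):
    """Hypothetical stand-in for a learned low level encoder.

    Returns per-axis mean and standard deviation (6 values per window).
    """
    axes = list(zip(*window))
    return [stat(axis) for axis in axes for stat in (mean, pstdev)]


def high_level_features(embeddings):
    """Hypothetical stand-in for the high level model.

    Averages the low level embeddings over the whole sequence.
    """
    return [mean(column) for column in zip(*embeddings)]


# Synthetic accelerometer-like stream (assumption: 500 samples, 3 axes).
random.seed(0)
stream = [
    (random.gauss(0, 1), random.gauss(0, 1), random.gauss(9.8, 1))
    for _ in range(500)
]

windows = split_windows(stream, size=50)             # short low level windows
embeddings = [low_level_embed(w) for w in windows]   # one embedding per window
summary = high_level_features(embeddings)            # one vector per sequence
```

The point of the split is that the first stage only ever sees a short window, so it can stay small, while the second stage works on the much shorter sequence of embeddings.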

CHARM: A Hierarchical Deep Learning Model for Classification of Complex Human Activities Using Motion Sensors

Jul 16, 2022

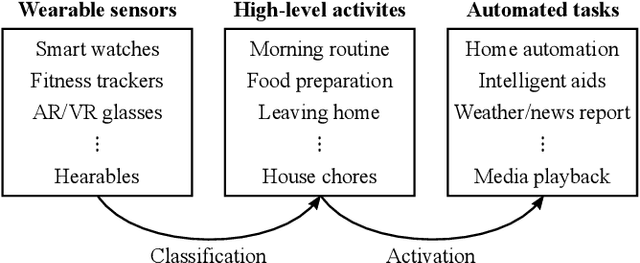

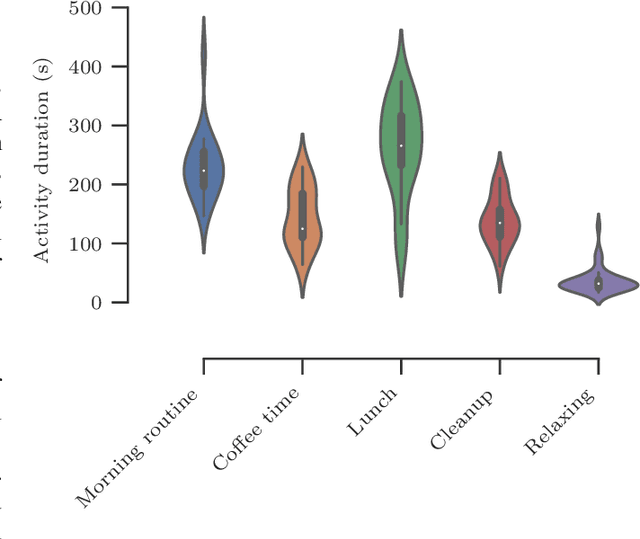

Abstract: In this paper, we report a hierarchical deep learning model for classification of complex human activities using motion sensors. In contrast to traditional Human Activity Recognition (HAR) models used for event-based activity recognition, such as step counting, fall detection, and gesture identification, this new deep learning model, which we refer to as CHARM (Complex Human Activity Recognition Model), is aimed at recognizing high-level human activities that are composed of multiple different low-level activities in a non-deterministic sequence, such as meal preparation, house chores, and daily routines. CHARM not only quantitatively outperforms state-of-the-art supervised learning approaches for high-level activity recognition in terms of average accuracy and F1 scores, but also automatically learns to recognize low-level activities, such as manipulation gestures and locomotion modes, without any explicit labels for such activities. This opens new avenues for Human-Machine Interaction (HMI) modalities using wearable sensors, where the user can choose to associate an automated task with a high-level activity, such as controlling home automation (e.g., robotic vacuum cleaners, lights, and thermostats) or presenting contextually relevant information at the right time (e.g., reminders, status updates, and weather/news reports). In addition, the ability to learn low-level user activities when trained using only high-level activity labels may pave the way to semi-supervised learning of HAR tasks that are inherently difficult to label.
