Dongxiao Zhu

Defending against adversarial attacks on medical imaging AI system, classification or detection?

Jun 24, 2020

Abstract: Medical imaging AI systems for tasks such as disease classification and segmentation are increasingly inspired by and adapted from computer vision based AI systems. Although an array of adversarial training and/or loss function based defense techniques have been developed and proved to be effective in computer vision, defending against adversarial attacks on medical images remains largely uncharted territory due to the following unique challenges: 1) label scarcity in medical images significantly limits the adversarial generalizability of the AI system; 2) vastly similar and dominant fore- and background in medical images make them hard samples for learning the discriminating features between different disease classes; and 3) crafted adversarial noise added to the entire medical image, as opposed to the focused organ target, can make clean and adversarial examples more distinguishable from each other than different disease classes are. In this paper, we propose a novel robust medical imaging AI framework based on Semi-Supervised Adversarial Training (SSAT) and Unsupervised Adversarial Detection (UAD), followed by designing a new measure for assessing the system's adversarial risk. We systematically demonstrate the advantages of our robust medical imaging AI system over the existing adversarial defense techniques under diverse real-world settings of adversarial attacks using a benchmark OCT imaging data set.

COVID-MobileXpert: On-Device COVID-19 Screening using Snapshots of Chest X-Ray

Apr 13, 2020

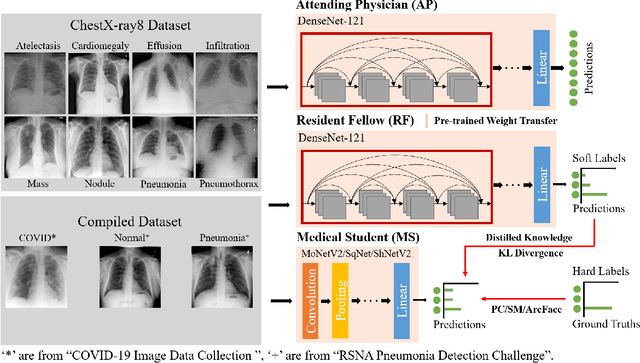

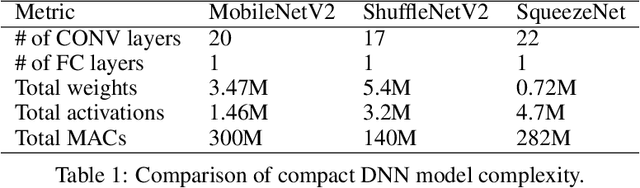

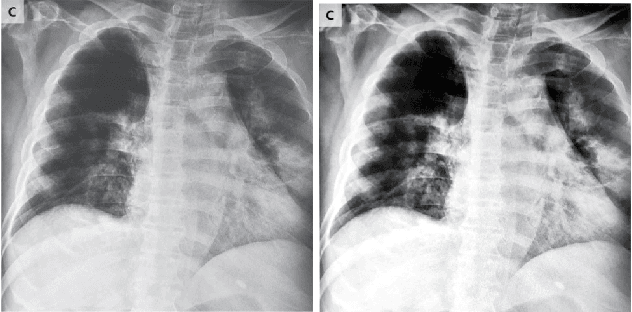

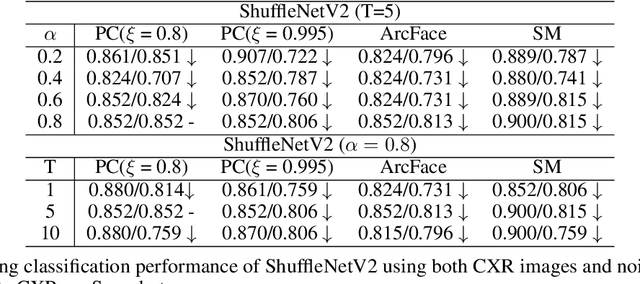

Abstract: With the increasing demand for millions of COVID-19 screenings, the Computed Tomography (CT) based test has emerged as a promising alternative to the gold standard RT-PCR test. However, it is primarily provided in hospital settings due to the need for expensive equipment and experienced radiologists. An accurate, rapid yet inexpensive test that is suitable for COVID-19 population screenings at mobile, urgent and primary care clinics is urgently needed. We present COVID-MobileXpert: a lightweight deep neural network (DNN) based mobile app that can use noisy snapshots of chest X-ray (CXR) for point-of-care COVID-19 screening. We design and implement a novel three-player knowledge transfer and distillation (KTD) framework including a pre-trained attending physician (AP) network that extracts CXR imaging features from a large set of lung disease CXR images, a fine-tuned resident fellow (RF) network that learns the essential CXR imaging features to discriminate COVID-19 from pneumonia and/or normal cases using a small number of COVID-19 cases, and a trained lightweight medical student (MS) network that performs on-device COVID-19 screening. To accommodate the need for screening using noisy snapshots of CXR images, we employ novel loss functions and training schemes for the MS network to learn the robust imaging features for accurate on-device COVID-19 screening. We demonstrate the strong potential of COVID-MobileXpert for rapid deployment via extensive experiments with diverse MS network architectures, CXR imaging quality, and tuning parameter settings. The source code of the cloud and mobile based models is available from https://github.com/xinli0928/COVID-Xray.
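The teacher-to-student step described above can be illustrated with a generic temperature-based distillation loss. This is a minimal sketch of the standard soft-target formulation, not the KTD loss functions of the paper, which the abstract does not specify. The function names, the three-class ordering (COVID-19, pneumonia, normal), the temperature, and the mixing weight `alpha` are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Generic distillation loss (hypothetical helper, not the paper's).

    Combines a KL term between temperature-softened teacher and student
    distributions with the usual cross-entropy on the hard label.
    """
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    soft_term = sum(t * math.log(t / s)
                    for t, s in zip(teacher_soft, student_soft) if t > 0)
    # Cross-entropy of the student against the ground-truth class.
    hard_term = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * soft_term + (1.0 - alpha) * hard_term


# Assumed class order: 0 = COVID-19, 1 = pneumonia, 2 = normal.
teacher = [2.0, 0.5, -1.0]
agreeing_student = [2.0, 0.5, -1.0]
disagreeing_student = [-1.0, 0.5, 2.0]

loss_agree = distillation_loss(agreeing_student, teacher, label=0)
loss_disagree = distillation_loss(disagreeing_student, teacher, label=0)
print(loss_agree, loss_disagree)
```

A student whose logits match the teacher's incurs no soft-target penalty, so only the hard-label term remains; a student that disagrees with the teacher is penalized by both terms.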

Toward Tag-free Aspect Based Sentiment Analysis: A Multiple Attention Network Approach

Mar 22, 2020

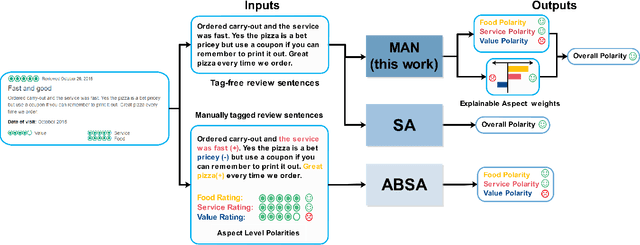

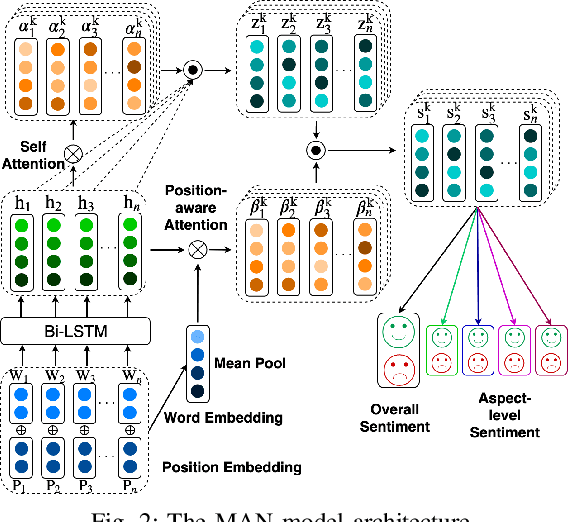

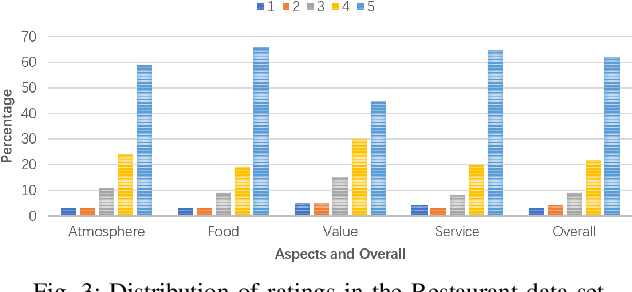

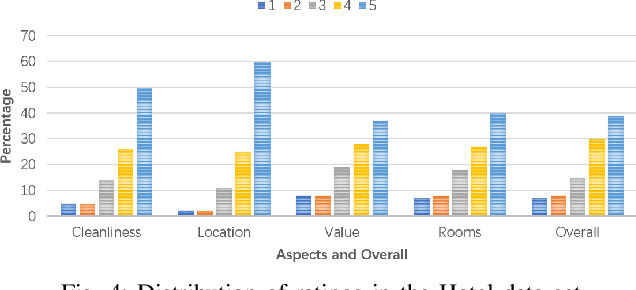

Abstract: Existing aspect based sentiment analysis (ABSA) approaches leverage various neural network models to extract the aspect sentiments via learning aspect-specific feature representations. However, these approaches heavily rely on manual tagging of user reviews according to the predefined aspects as the input, a laborious and time-consuming process. Moreover, the underlying methods do not explain how and why the opposing aspect level polarities in a user review lead to the overall polarity. In this paper, we tackle these two problems by designing and implementing a new Multiple-Attention Network (MAN) approach for more powerful ABSA without the need for aspect tags using two new tag-free data sets crawled directly from TripAdvisor (https://www.tripadvisor.com). With the Self- and Position-Aware attention mechanism, MAN is capable of extracting both aspect level and overall sentiments from the text reviews using the aspect level and overall customer ratings, and it can also detect the vital aspect(s) leading to the overall sentiment polarity among different aspects via a new aspect ranking scheme. We carry out extensive experiments to demonstrate the strong performance of MAN compared to other state-of-the-art ABSA approaches and the explainability of our approach by visualizing and interpreting attention weights in case studies.

On the Learning Property of Logistic and Softmax Losses for Deep Neural Networks

Mar 04, 2020

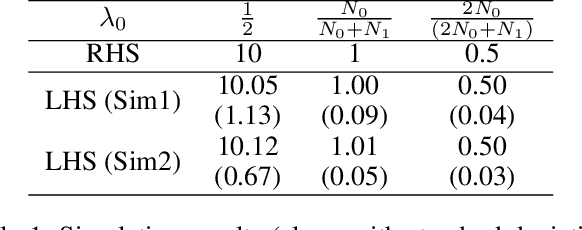

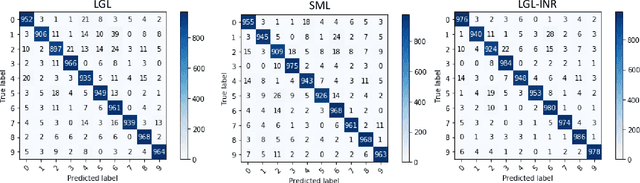

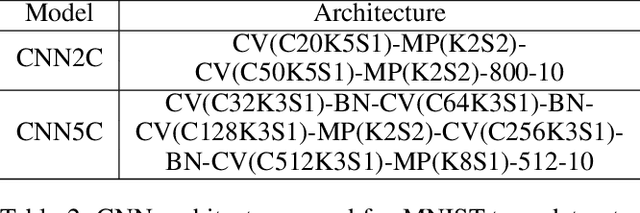

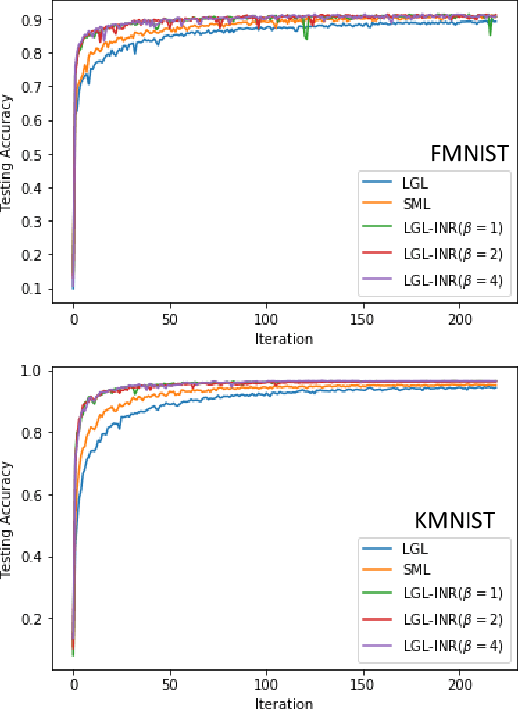

Abstract: Deep convolutional neural networks (CNNs) trained with logistic and softmax losses have made significant advances in visual recognition tasks in computer vision. When training data exhibit class imbalances, the class-wise reweighted versions of logistic and softmax losses are often used to boost the performance of the unweighted versions. In this paper, motivated to explain the reweighting mechanism, we explicate the learning property of those two loss functions by analyzing the necessary condition (i.e., the gradient equals zero) after training CNNs to converge to a local minimum. The analysis immediately provides us explanations for understanding (1) quantitative effects of the class-wise reweighting mechanism: deterministic effectiveness for binary classification using logistic loss yet indeterministic for multi-class classification using softmax loss; (2) the disadvantage of logistic loss for single-label multi-class classification via the one-vs.-all approach, which is due to the averaging effect on predicted probabilities for the negative class (i.e., non-target classes) in the learning process. With the disadvantage and advantage of logistic loss disentangled, we thereafter propose a novel reweighted logistic loss for multi-class classification. Our simple yet effective formulation improves ordinary logistic loss by focusing on learning hard non-target classes (target vs. non-target class in one-vs.-all) and turns out to be competitive with softmax loss. We evaluate our method on several benchmark datasets to demonstrate its effectiveness.
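The zero-gradient condition for a class-wise reweighted logistic loss can be checked by hand in a toy setting. The sketch below assumes a model that outputs one constant probability `p` for every sample, which is far simpler than the CNN analysis in the paper; the function names, class counts, and weights are hypothetical. In that setting the loss is minimized at `p = w_pos * n_pos / (w_pos * n_pos + w_neg * n_neg)`, so the weights shift the stationary point in a fully determined way.

```python
import math


def weighted_logistic_loss(p, n_pos, n_neg, w_pos=1.0, w_neg=1.0):
    """Class-weighted logistic loss when every sample is predicted as p."""
    return -(w_pos * n_pos * math.log(p) + w_neg * n_neg * math.log(1.0 - p))


def loss_gradient(p, n_pos, n_neg, w_pos=1.0, w_neg=1.0):
    """Derivative of the loss above with respect to p."""
    return -(w_pos * n_pos) / p + (w_neg * n_neg) / (1.0 - p)


def stationary_probability(n_pos, n_neg, w_pos=1.0, w_neg=1.0):
    """Value of p at which the gradient vanishes (toy constant-p model)."""
    return (w_pos * n_pos) / (w_pos * n_pos + w_neg * n_neg)


# Imbalanced toy data: 10 positives, 90 negatives (assumed counts).
p_unweighted = stationary_probability(10, 90)
p_reweighted = stationary_probability(10, 90, w_pos=9.0, w_neg=1.0)
print(p_unweighted, p_reweighted)
```

Without reweighting the stationary prediction equals the positive-class frequency; weighting positives by the inverse imbalance ratio moves it to one half.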

Improve SGD Training via Aligning Mini-batches

Feb 27, 2020

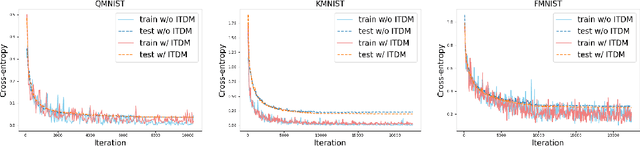

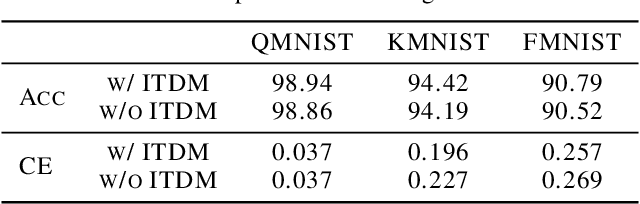

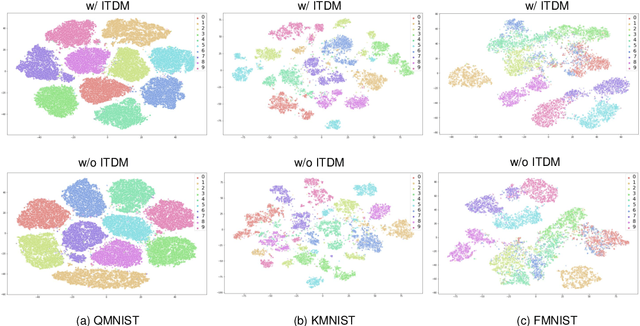

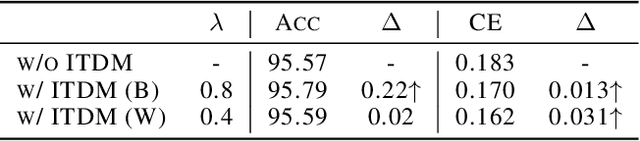

Abstract: Deep neural networks (DNNs) for supervised learning can be viewed as a pipeline of a feature extractor (i.e., up to the last hidden layer) and a linear classifier (i.e., the output layer) that are trained jointly with stochastic gradient descent (SGD). In each iteration of SGD, a mini-batch is sampled from the training data and the true gradient of the loss function is estimated by the noisy gradient calculated on this mini-batch. From the feature learning perspective, the feature extractor should be updated to learn meaningful features with respect to the entire data, and to reduce its accommodation to noise in the mini-batch. With this motivation, we propose In-Training Distribution Matching (ITDM) to improve DNN training and reduce overfitting. Specifically, along with the loss function, ITDM regularizes the feature extractor by matching the moments of the distributions of different mini-batches in each iteration of SGD, which is achieved by minimizing the maximum mean discrepancy. As such, ITDM does not assume any explicit parametric form of the data distribution in the latent feature space. Extensive experiments are conducted to demonstrate the effectiveness of our proposed strategy.
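The quantity being minimized, the maximum mean discrepancy (MMD) between the latent features of two mini-batches, can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the biased estimator with a Gaussian kernel of fixed bandwidth, and the function names and synthetic batches are hypothetical. The abstract does not state which kernel or estimator ITDM uses, nor how the term is weighted against the training loss.

```python
import math
import random


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(batch_a, batch_b, bandwidth=1.0):
    """Biased estimate of the squared MMD between two batches of features."""
    def mean_kernel(xs, ys):
        total = sum(gaussian_kernel(x, y, bandwidth) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (mean_kernel(batch_a, batch_a)
            + mean_kernel(batch_b, batch_b)
            - 2.0 * mean_kernel(batch_a, batch_b))


rng = random.Random(0)


def sample_batch(mean, size=32, dim=4):
    """Synthetic stand-in for the latent features of one mini-batch."""
    return [[rng.gauss(mean, 1.0) for _ in range(dim)] for _ in range(size)]


# Two batches from the same distribution versus a shifted one.
mmd_same = mmd_squared(sample_batch(0.0), sample_batch(0.0))
mmd_shifted = mmd_squared(sample_batch(0.0), sample_batch(3.0))
print(mmd_same, mmd_shifted)
```

Because the estimate depends only on kernel evaluations between samples, no parametric form of the feature distribution is needed, which matches the nonparametric property noted in the abstract. In a training loop such a term would be added to the loss as a regularizer on the feature extractor.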

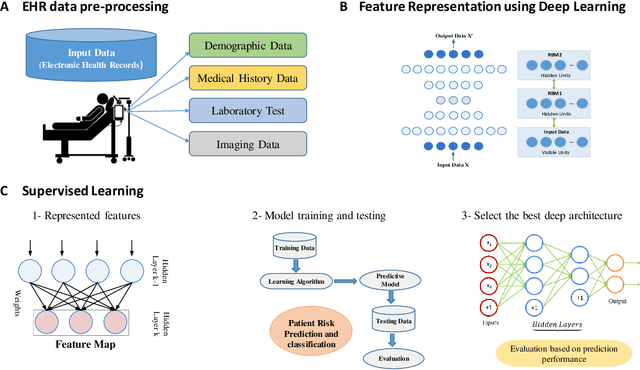

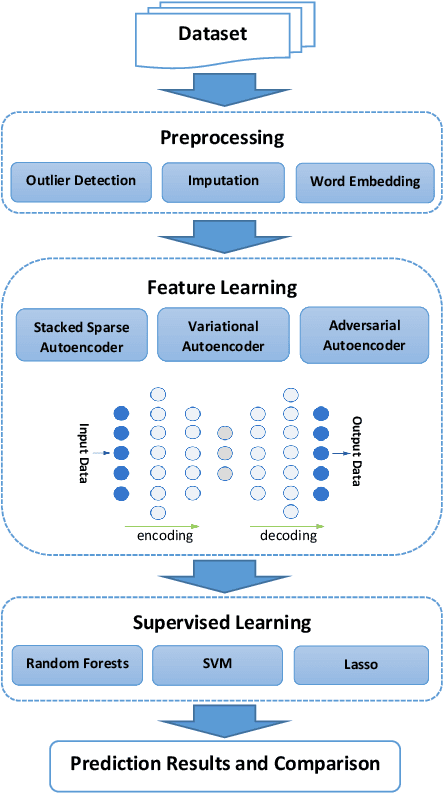

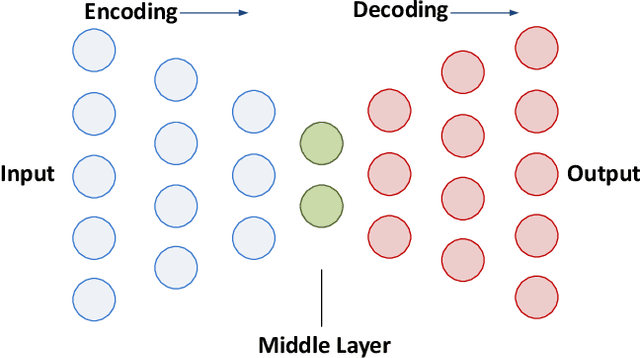

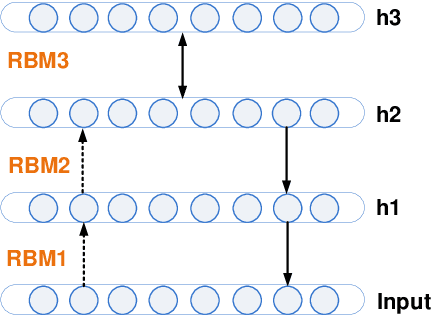

Representation Learning with Autoencoders for Electronic Health Records: A Comparative Study

Sep 20, 2019

Abstract: The increasing volume of Electronic Health Records (EHR) in recent years provides great opportunities for data scientists to collaborate on different aspects of healthcare research by applying advanced analytics to these EHR clinical data. A key requirement, however, is obtaining meaningful insights from high dimensional, sparse and complex clinical data. Data science approaches typically address this challenge by performing feature learning in order to build more reliable and informative feature representations from clinical data, followed by supervised learning. In this paper, we propose a predictive modeling approach based on deep learning based feature representations and word embedding techniques. Our method uses different deep architectures (stacked sparse autoencoders, deep belief networks, adversarial autoencoders and variational autoencoders) for feature representation in higher-level abstraction to obtain effective and robust features from EHRs, and then builds prediction models on top of them. Our approach is particularly useful when unlabeled data is abundant whereas labeled data is scarce. We investigate the performance of representation learning through a supervised learning approach. Our focus is to present a comparative study to evaluate the performance of different deep architectures through supervised learning and provide insights into the choice of deep feature representation techniques. Our experiments demonstrate that for small data sets, the stacked sparse autoencoder shows superior generalization performance in prediction due to sparsity regularization, whereas variational autoencoders outperform the competing approaches for large data sets due to their capability of learning the representation distribution.

Tackling Multiple Ordinal Regression Problems: Sparse and Deep Multi-Task Learning Approaches

Jul 29, 2019

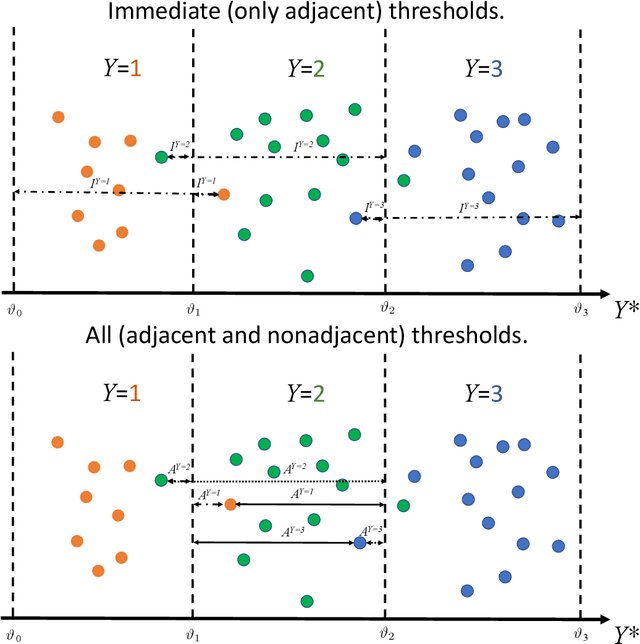

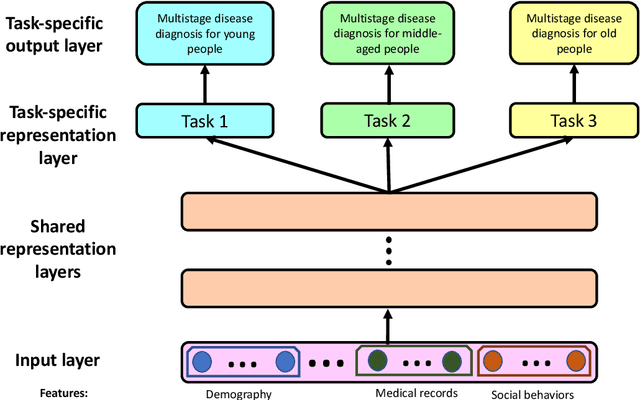

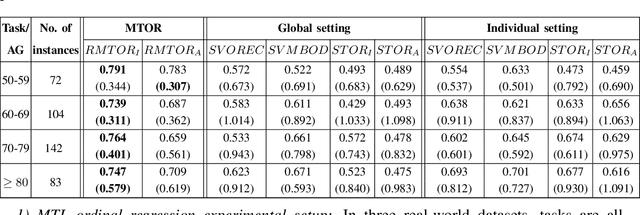

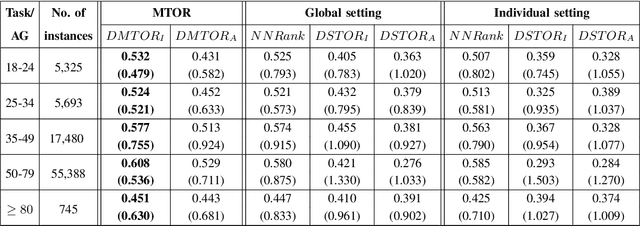

Abstract: Many real-world datasets are labeled with natural orders, i.e., ordinal labels. Ordinal regression is a method to predict ordinal labels that finds a wide range of applications in data-rich science domains, such as medical, social and economic sciences. Most existing approaches work well for a single ordinal regression task. However, they ignore the task relatedness when there are multiple related tasks. Multi-task learning (MTL) provides a framework to encode task relatedness, to bridge data from all tasks, and to simultaneously learn multiple related tasks to improve the generalization performance. Even though MTL methods have been extensively studied, there is barely any existing work investigating MTL for data with ordinal labels. We tackle multiple ordinal regression problems via sparse and deep multi-task approaches, i.e., two regularized multi-task ordinal regression (RMTOR) models for small datasets and two deep neural network based multi-task ordinal regression (DMTOR) models for large-scale datasets. The performance of the proposed multi-task ordinal regression (MTOR) models is demonstrated on three real-world medical datasets for multi-stage disease diagnosis. Our experimental results indicate that our proposed MTOR models markedly improve the prediction performance compared with single-task learning (STL) ordinal regression models.

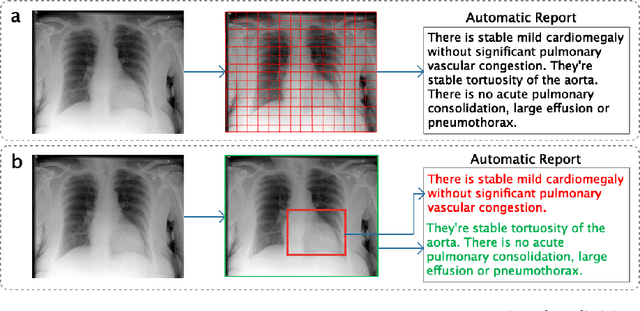

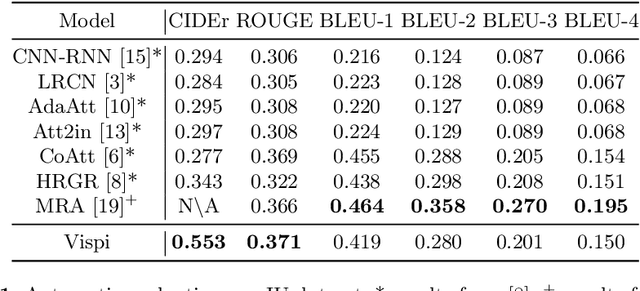

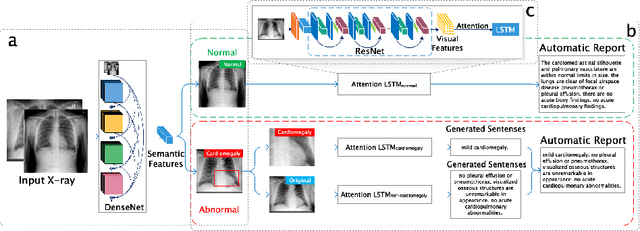

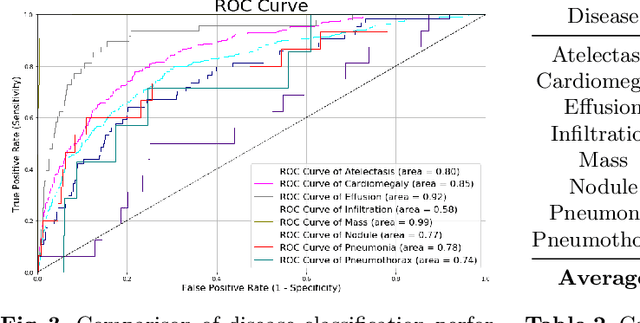

Vispi: Automatic Visual Perception and Interpretation of Chest X-rays

Jun 12, 2019

Abstract: Medical imaging contains the essential information for rendering diagnostic and treatment decisions. Inspecting (visual perception) and interpreting an image to generate a report are tedious clinical routines for a radiologist, where automation is expected to greatly reduce the workload. Despite the rapid development of natural image captioning, computer-aided medical image visual perception and interpretation remain a challenging task, largely due to the lack of high-quality annotated image-report pairs and tailor-made generative models for sufficient extraction and exploitation of localized semantic features, particularly those associated with abnormalities. To tackle these challenges, we present Vispi, an automatic medical image interpretation system, which first annotates an image by classifying and localizing common thoracic diseases with visual support and then generates a report with an attentive LSTM model. Analyzing an open IU X-ray dataset, we demonstrate the superior performance of Vispi in disease classification, localization and report generation using the automatic performance evaluation metrics ROUGE and CIDEr.

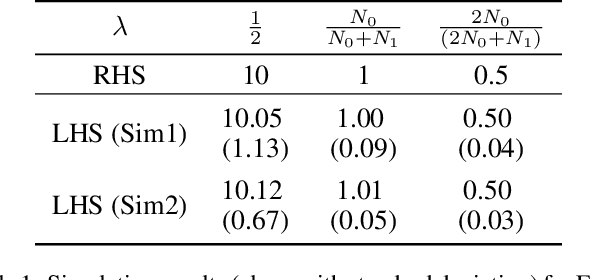

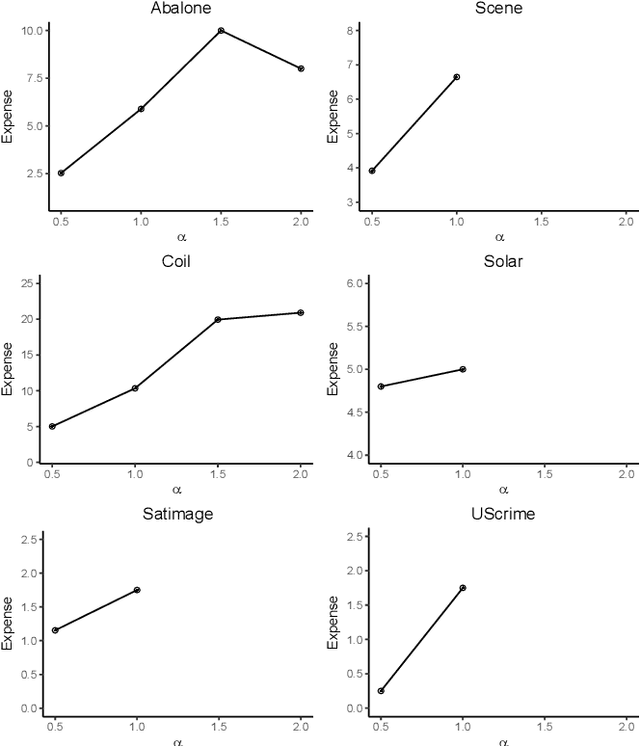

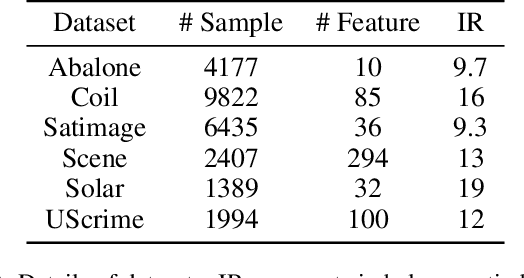

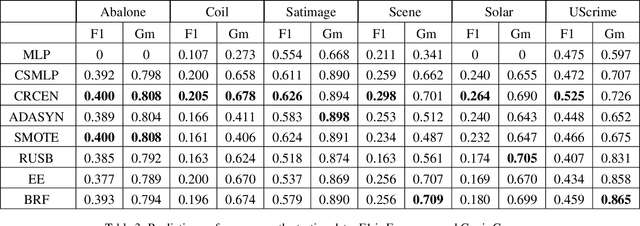

CRCEN: A Generalized Cost-sensitive Neural Network Approach for Imbalanced Classification

Jun 10, 2019

Abstract: Classification on imbalanced datasets is a challenging task in real-world applications. Training conventional classification algorithms directly by minimizing classification error in this scenario can compromise model performance for the minority class while optimizing performance for the majority class. Traditional approaches to the imbalance problem include re-sampling and cost-sensitive methods. In this paper, we propose a neural network model with a novel loss function, CRCEN, for imbalanced classification. Based on the weighted version of cross entropy loss, we provide a theoretical relation between the model predicted probability, the imbalance ratio and the weighting mechanism. To demonstrate the effectiveness of our proposed model, CRCEN is tested on several benchmark datasets and compared with baseline models.

Interpreting Age Effects of Human Fetal Brain from Spontaneous fMRI using Deep 3D Convolutional Neural Networks

Jun 09, 2019

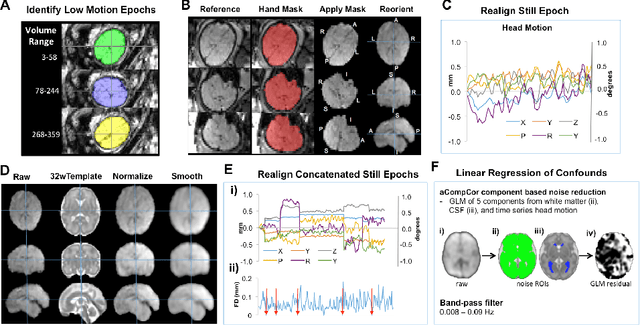

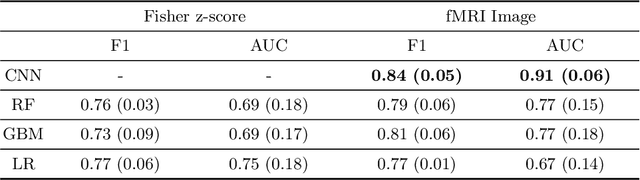

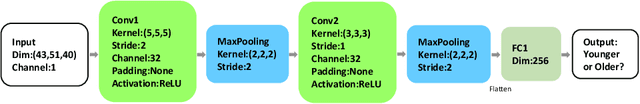

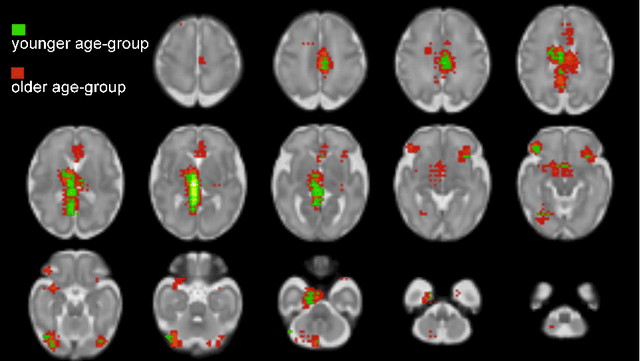

Abstract: Understanding human fetal neurodevelopment is of great clinical importance as abnormal development is linked to adverse neuropsychiatric outcomes after birth. Recent advances in functional Magnetic Resonance Imaging (fMRI) have provided new insight into development of the human brain before birth, but these studies have predominantly focused on brain functional connectivity (e.g., Fisher z-scores), which requires manual processing steps for feature extraction from fMRI images. Deep learning approaches (e.g., Convolutional Neural Networks) have achieved remarkable success in learning directly from image data, yet have not been applied to fetal fMRI for understanding fetal neurodevelopment. Here, we bridge this gap by presenting a novel application of deep 3D CNNs to fetal blood-oxygen-level-dependent (BOLD) resting-state fMRI data. Specifically, we test a supervised CNN framework as a data-driven approach to isolate variation in fMRI signals that relates to younger vs. older fetal age groups. Based on the learned CNN, we further perform sensitivity analysis to identify brain regions in which changes in BOLD signal are strongly associated with fetal brain age. The findings demonstrate that deep CNNs are a promising approach for identifying spontaneous functional patterns in fetal brain activity that discriminate age groups. Further, we discovered that the regions that most strongly differentiate groups are largely bilateral, share a similar distribution in older and younger age groups, and are areas of heightened metabolic activity in early human development.
