Dongmyeong Lee

BEV-Patch-PF: Particle Filtering with BEV-Aerial Feature Matching for Off-Road Geo-Localization

Dec 17, 2025

Abstract: We propose BEV-Patch-PF, a GPS-free sequential geo-localization system that integrates a particle filter with learned bird's-eye-view (BEV) and aerial feature maps. From onboard RGB and depth images, we construct a BEV feature map. For each 3-DoF particle pose hypothesis, we crop the corresponding patch from an aerial feature map computed from a local aerial image queried around the approximate location. BEV-Patch-PF computes a per-particle log-likelihood by matching the BEV feature to the aerial patch feature. On two real-world off-road datasets, our method achieves 7.5x lower absolute trajectory error (ATE) on seen routes and 7.0x lower ATE on unseen routes than a retrieval-based baseline, while maintaining accuracy under dense canopy and shadow. The system runs in real time at 10 Hz on an NVIDIA Tesla T4, enabling practical robot deployment.
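
The abstract says each particle receives a log-likelihood from feature matching. As a rough illustration of how such scores typically feed a particle filter, the sketch below applies a generic Bayesian weight update in log space and then uses systematic resampling. It is not the authors' implementation: the function names (`update_weights`, `effective_sample_size`, `systematic_resample`) and the example log-likelihood values are hypothetical, and the BEV-to-aerial matching network that would produce the scores is not modeled.

```python
import math
import random


def update_weights(prior_weights, log_likelihoods):
    """Generic particle-filter weight update (assumed, not from the paper).

    Adds each particle's log-likelihood to its log prior weight, then
    normalizes with the log-sum-exp trick so very negative scores do not
    underflow. Prior weights must be strictly positive.
    """
    log_post = [math.log(w) + ll for w, ll in zip(prior_weights, log_likelihoods)]
    peak = max(log_post)
    unnormalized = [math.exp(lp - peak) for lp in log_post]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def effective_sample_size(weights):
    """Common degeneracy measure: equals N for uniform weights, 1 if one dominates."""
    return 1.0 / sum(w * w for w in weights)


def systematic_resample(weights, rng=random):
    """Return particle indices drawn by systematic (low-variance) resampling."""
    n = len(weights)
    start = rng.random() / n  # single random offset in [0, 1/n)
    indices = []
    i = 0
    cumulative = weights[0]
    for k in range(n):
        u = start + k / n
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
    return indices


if __name__ == "__main__":
    # Four hypothetical 3-DoF particles with made-up matching log-likelihoods.
    prior = [0.25, 0.25, 0.25, 0.25]
    log_likelihoods = [-1.0, -3.0, -0.5, -10.0]
    posterior = update_weights(prior, log_likelihoods)
    print("posterior weights:", posterior)
    print("effective sample size:", effective_sample_size(posterior))
    print("resampled indices:", systematic_resample(posterior, random.Random(0)))
```

Working in log space matters here because learned matching scores can differ by many orders of magnitude once exponentiated. Resampling is often triggered only when the effective sample size drops below a threshold, but that policy is a design choice the abstract does not specify.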

Spatiotemporal Contrastive Learning for Cross-View Video Localization in Unstructured Off-road Terrains

Jun 05, 2025

Abstract: Robust cross-view 3-DoF localization in GPS-denied, off-road environments remains challenging due to (1) perceptual ambiguities from repetitive vegetation and unstructured terrain, and (2) seasonal shifts that significantly alter scene appearance, hindering alignment with outdated satellite imagery. To address this, we introduce MoViX, a self-supervised cross-view video localization framework that learns viewpoint- and season-invariant representations while preserving directional awareness essential for accurate localization. MoViX employs a pose-dependent positive sampling strategy to enhance directional discrimination and temporally aligned hard negative mining to discourage shortcut learning from seasonal cues. A motion-informed frame sampler selects spatially diverse frames, and a lightweight temporal aggregator emphasizes geometrically aligned observations while downweighting ambiguous ones. At inference, MoViX runs within a Monte Carlo Localization framework, using a learned cross-view matching module in place of handcrafted models. Entropy-guided temperature scaling enables robust multi-hypothesis tracking and confident convergence under visual ambiguity. We evaluate MoViX on the TartanDrive 2.0 dataset, training on under 30 minutes of data and testing over 12.29 km. Despite outdated satellite imagery, MoViX localizes within 25 meters of ground truth 93% of the time, and within 50 meters 100% of the time in unseen regions, outperforming state-of-the-art baselines without environment-specific tuning. We further demonstrate generalization on a real-world off-road dataset from a geographically distinct site with a different robot platform.

CLOVER: Context-aware Long-term Object Viewpoint- and Environment- Invariant Representation Learning

Jul 12, 2024

Abstract: In many applications, robots can benefit from object-level understanding of their environments, including the ability to distinguish object instances and re-identify previously seen instances. Object re-identification is challenging across different viewpoints and in scenes with significant appearance variation arising from weather or lighting changes. Most works on object re-identification focus on specific classes; approaches that address general object re-identification require foreground segmentation and have limited consideration of challenges such as occlusions, outdoor scenes, and illumination changes. To address this problem, we introduce CODa Re-ID: an in-the-wild object re-identification dataset containing 1,037,814 observations of 557 objects of 8 classes under diverse lighting conditions and viewpoints. Further, we propose CLOVER, a representation learning method for object observations that can distinguish between static object instances. Our results show that CLOVER achieves superior performance in static object re-identification under varying lighting conditions and viewpoint changes, and can generalize to unseen instances and classes.

Informable Multi-Objective and Multi-Directional RRT* System for Robot Path Planning

May 30, 2022

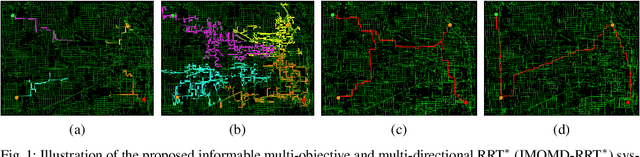

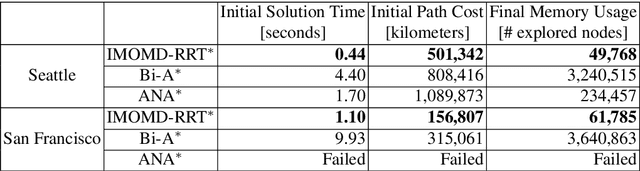

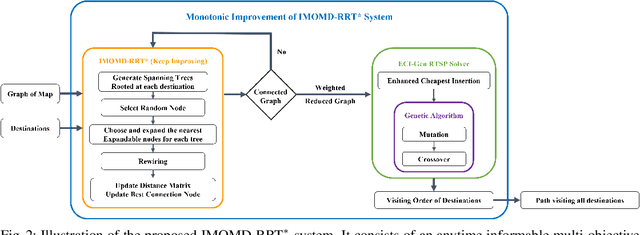

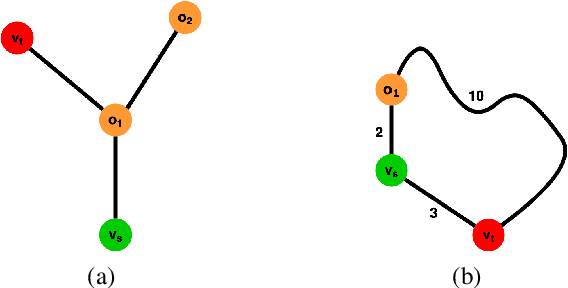

Abstract: Multi-objective or multi-destination path planning is crucial for mobile robotics applications such as mobility as a service, robotics inspection, and electric vehicle charging for long trips. This work proposes an anytime iterative system to concurrently solve the multi-objective path planning problem and determine the visiting order of destinations. The system comprises an anytime informable multi-objective and multi-directional RRT* algorithm to form a simple connected graph, and a proposed solver that consists of an enhanced cheapest insertion algorithm and a genetic algorithm to solve the relaxed traveling salesman problem in polynomial time. Moreover, a list of waypoints is often provided for robotics inspection and vehicle routing so that the robot can preferentially visit certain equipment or areas of interest. We show that the proposed system can inherently incorporate such knowledge, and can navigate through challenging topology. The proposed anytime system is evaluated on large and complex graphs built for real-world driving applications. All implementations are coded in multi-threaded C++ and are available at: https://github.com/UMich-BipedLab/IMOMD-RRTStar.
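
To make the visiting-order step more concrete, the sketch below shows the textbook cheapest insertion heuristic on a small distance matrix with a fixed start and goal. It is only an illustration: it is not the enhanced variant or the genetic algorithm described in the abstract, it does not handle the relaxed problem in which nodes may be revisited, and the names `cheapest_insertion` and `route_cost` and the toy positions are hypothetical.

```python
def cheapest_insertion(dist, start, goal, stops):
    """Order `stops` between a fixed `start` and `goal` (textbook heuristic).

    At every step, the unvisited stop and the route position that add the
    least extra length are chosen. `dist` is a square matrix of pairwise
    path costs between node indices.
    """
    route = [start, goal]
    remaining = set(stops)
    while remaining:
        best = None  # (added_cost, stop, insert_position)
        for stop in sorted(remaining):  # sorted for deterministic tie-breaking
            for pos in range(1, len(route)):
                a, b = route[pos - 1], route[pos]
                added = dist[a][stop] + dist[stop][b] - dist[a][b]
                if best is None or added < best[0]:
                    best = (added, stop, pos)
        _, stop, pos = best
        route.insert(pos, stop)
        remaining.remove(stop)
    return route


def route_cost(dist, route):
    """Total cost of visiting the nodes in `route` in order."""
    return sum(dist[a][b] for a, b in zip(route, route[1:]))


if __name__ == "__main__":
    # Toy example: four nodes on a line at these (made-up) positions.
    positions = [0, 10, 3, 7]  # node 0 = start, node 1 = goal
    dist = [[abs(p - q) for q in positions] for p in positions]
    route = cheapest_insertion(dist, start=0, goal=1, stops=[2, 3])
    print("visiting order:", route)
    print("total cost:", route_cost(dist, route))
```

This naive version checks every remaining stop against every gap in the route, so it runs in cubic time in the number of destinations. That is polynomial, though the abstract does not state the complexity of the authors' solver beyond that.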
